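Python (6): Convolutional neural network examples

Reference (CSDN blog by 冰激凌啊): 深度学习之卷积 (Convolution in deep learning)

1. Handwritten digit classification with a convolutional model

Reference (CSDN blog by 马鹏森): transforms.Compose()函数 (The transforms.Compose() function)

The main change in this version is the custom model definition.

Handling the pause after plt.show()

Reference (CSDN blog by 鬼扯子): plt.imshow与plt.show区别之交互与阻塞模式 (plt.imshow versus plt.show: interactive and blocking modes)

Reference (CSDN blog by 一只小Kevin): matplotlib中ion()和ioff()的使用 (Using ion() and ioff() in matplotlib)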

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as opt
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import torchvision
from torchvision import datasets, transforms

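# Compose chains several preprocessing steps into one callable; with a single step it is equivalent to ToTensor().
# ToTensor() rescales the gray values from the range 0-255 to the range 0-1.
transformation = transforms.Compose([transforms.ToTensor()])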
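As a rough picture of what Compose does, the sketch below chains plain Python functions in order. The `compose` and `to_unit_range` helpers are hypothetical stand-ins written for this note, not torchvision APIs; `to_unit_range` only mimics the 0-255 to 0-1 rescaling described in the comment above.

```python
def compose(steps):
    """Return a function that applies each step in order (hypothetical stand-in for transforms.Compose)."""
    def apply(value):
        for step in steps:
            value = step(value)
        return value
    return apply


def to_unit_range(pixels):
    """Rescale 8-bit pixel values from 0-255 to 0-1."""
    return [p / 255 for p in pixels]


# A single-step pipeline behaves exactly like the step itself.
pipeline = compose([to_unit_range])
scaled = pipeline([0, 255])

# With several steps, the order matters: add 1 first, then double.
ordered = compose([lambda v: v + 1, lambda v: v * 2])(3)

print(scaled, ordered)
```

The point of the design is that the dataset only has to call one object per image, no matter how many preprocessing steps are configured.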

train_dataset = torchvision.datasets.MNIST('data', train=True, download=True, transform=transformation)
test_dataset = torchvision.datasets.MNIST('data', train=False, download=True, transform=transformation)

train_dataloader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=True)
test_dataloader = torch.utils.data.DataLoader(test_dataset, batch_size=256)

imgs, labels = next(iter(train_dataloader))
# 64 is the number of images in the batch, 1 is the channel count (grayscale), followed by height and width
print(imgs.shape)

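# A convolutional network does not flatten its input at the start; it flattens only after the features have been extracted
class model(nn.Module):
    def __init__(self):
        super(model, self).__init__()
        # First convolution: 1 input channel, 6 kernels (so the output has 6 channels), 5x5 kernel size
        self.conv1 = nn.Conv2d(1, 6, [5, 5])
        # Pooling layer
        self.pool = nn.MaxPool2d([2, 2])
        # Second convolution: the previous output has 6 channels, so this layer takes 6 input channels
        self.conv2 = nn.Conv2d(6, 16, [5, 5])
        # 16*4*4 was found in a notebook by calling model(imgs) with the print in forward() enabled.
        # The call raises an error while the size is still wrong, so write a provisional value first.
        self.linear_1 = nn.Linear(16*4*4, 120)
        self.linear_2 = nn.Linear(120, 10)

    def forward(self, input):
        x = F.relu(self.conv1(input))  # convolution outputs also need an activation
        x = self.pool(x)               # pooling has no trainable parameters, so no activation here
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        # print(x.size())  # batch, channels, height, width; here torch.Size([64, 16, 4, 4])
        # x.size(0) is dimension 0, the batch size; the flattened feature count is computed by hand.
        # The more common spelling is x = x.view(-1, 16*4*4).
        x = x.view(x.size(0), 16*4*4)
        x = torch.relu(self.linear_1(x))
        logits = self.linear_2(x)
        return logits

# Create the model and move it to whichever device is available. This is the standard idiom.
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = model().to(device)  # note: this rebinds the name model from the class to the instance

# Loss function
loss_fn = torch.nn.CrossEntropyLoss()

# Optimizer
opt = torch.optim.SGD(model.parameters(), lr=0.001)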

# A generic training function
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # total number of samples
    num_batches = len(dataloader)   # number of batches: with 100 samples and batch_size=16, 7 batches cover the data once
    train_loss, correct = 0, 0      # train_loss sums the batch losses; correct counts the correctly predicted samples
    for x, y in dataloader:         # x and y each hold one batch
        x, y = x.to(device), y.to(device)
        pred = model(x)
        loss = loss_fn(pred, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        with torch.no_grad():
            # argmax(1) looks along dimension 1 (the classes; dimension 0 is the batch)
            # and returns the position of the largest value in each row
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()
    # Accuracy over all samples and average loss per batch
    correct /= size
    train_loss /= num_batches
    return correct, train_loss
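The bookkeeping in the training function can be checked with plain arithmetic. The sketch below assumes the last partial batch is kept (the DataLoader default); the `count_batches` helper and the loss values are made up for illustration.

```python
import math


def count_batches(num_samples, batch_size):
    """Number of batches per epoch when the last partial batch is kept."""
    return math.ceil(num_samples / batch_size)


# Hypothetical per-batch mean losses for one epoch, for illustration only.
batch_losses = [0.9, 0.7, 0.5, 0.3]

num_batches = count_batches(100, 16)       # the example from the comment above
mnist_batches = count_batches(60000, 64)   # MNIST training set with batch_size=64
epoch_loss = sum(batch_losses) / len(batch_losses)

print(num_batches, mnist_batches, round(epoch_loss, 3))
```

Because each batch loss is already a mean over the samples in that batch, dividing the sum by the number of batches (not by the number of samples) keeps the epoch loss on the same scale as a single batch loss.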

# A generic test function
def ttest(test_dataloader, model, loss_fn):  # do not name it test, otherwise it may be picked up and run by pytest
    size = len(test_dataloader.dataset)  # total number of samples
    num_batches = len(test_dataloader)   # number of batches
    test_loss, correct = 0, 0            # test_loss sums the batch losses; correct counts the correctly predicted samples
    with torch.no_grad():                # testing does not need gradient tracking
        for x, y in test_dataloader:     # x and y each hold one batch
            x, y = x.to(device), y.to(device)
            pred = model(x)
            loss = loss_fn(pred, y)
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
            test_loss += loss.item()
    correct /= size
    test_loss /= num_batches
    return correct, test_loss

def fit(epoches, train_dataloader, test_dataloader, model, loss_fn, opt):
    train_loss = []
    train_correct = []
    test_loss = []
    test_correct = []
    for epoch in range(epoches):
        epoch_correct, epoch_loss = train(train_dataloader, model, loss_fn, opt)
        epoch_test_correct, epoch_test_loss = ttest(test_dataloader, model, loss_fn)
        # Record the training history
        test_loss.append(epoch_test_loss)
        train_loss.append(epoch_loss)
        test_correct.append(epoch_test_correct)
        train_correct.append(epoch_correct)
        # Print template using string formatting. Make sure the quotes are ASCII double quotes, not full-width Chinese ones.
        template = ("epoch:{:2d},train_loss:{:.5f},train_correct:{:.1f},test_loss:{:.5f},test_correct:{:.1f}")
        # Print the loss and accuracy of the current epoch, not the lists they are stored in
        print(template.format(epoch, epoch_loss, epoch_correct * 100, epoch_test_loss,
                              epoch_test_correct * 100))
    print('Done')
    return train_loss, train_correct, test_loss, test_correct

# 直接调用fit函数

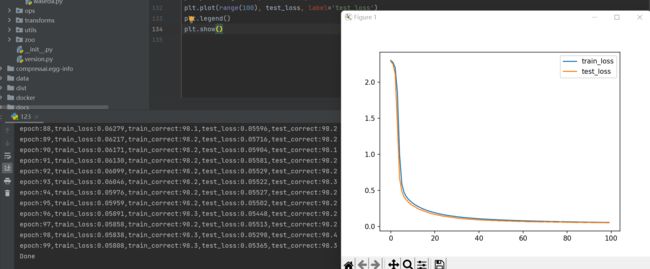

train_loss, train_correct, test_loss, test_correct = fit(100, train_dataloader, test_dataloader, model, loss_fn, opt)

# 也可绘图

plt.plot(range(100), train_loss, label='train_loss')

plt.plot(range(100), test_loss, label='test_loss')

plt.legend()

plt.show() 图像的shape计算(即其中的16*4*4),最好就是再中间用print打印出来
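The 16*4*4 can also be derived by hand. The sketch below applies the standard output-size formula for convolution and pooling windows, assuming no padding and a pooling stride equal to the window size (the defaults used by the model). The `conv_out` helper is a name introduced here for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of one spatial dimension after a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                  # MNIST images are 28x28
size = conv_out(size, kernel=5)            # conv1, 5x5 kernel
size = conv_out(size, kernel=2, stride=2)  # 2x2 max pooling
size = conv_out(size, kernel=5)            # conv2, 5x5 kernel
size = conv_out(size, kernel=2, stride=2)  # 2x2 max pooling

flat_features = 16 * size * size           # conv2 produces 16 channels
print(size, flat_features)
```

Printing the shape inside forward() remains the safer check, since it reflects the layers as they are actually configured.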

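2. Weather classification

The images in this dataset differ in size and shape, so they have to be brought into a common form during preprocessing. This is the first way of reading the images.

Reference (CSDN blog by bbcen): 【Python杂项】os.path.join()函数用法详解 (How to use os.path.join())

Reference (CSDN blog by 威震四海): Python中os.mkdir()与os.makedirs()的区别及用法 (Differences between os.mkdir() and os.makedirs() and how to use them)

Reference (CSDN blog by 华zyh): torchvision自带的transforms.to_tensor用法 (Using torchvision's built-in transforms.to_tensor)

Reference (CSDN blog by 紫芝): PyTorch数据归一化处理:transforms.Normalize及计算图像数据集的均值和方差 (Data normalization in PyTorch: transforms.Normalize and computing the mean and variance of an image dataset)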

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as opt
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import os
import shutil  # for copying images
# plt.ion()
import torchvision
from torchvision import datasets, transforms

print(torch.cuda.is_available())  # check whether a GPU is available

# 1. Read the images with torchvision
# ImageFolder creates a dataset from folders organized by class.
# "Organized by class" means each folder holds images of exactly one class, such as rain or cloudy.
torchvision.datasets.ImageFolder

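# Create directories to hold the images of the four classes
base_dir = r'C:\Users\qjjt\Desktop\python\datasets\4weather'
# Check whether base_dir is an existing directory; create it if not
if not os.path.isdir(base_dir):
    os.mkdir(base_dir)
# Then create the folders that will hold the classified data
train_dir = os.path.join(base_dir, 'train')
test_dir = os.path.join(base_dir, 'test')
os.mkdir(train_dir)
os.mkdir(test_dir)
# There are four classes.
# Note: once the folders exist, running this again raises an error, because os.mkdir refuses to create a directory twice.
specises = ['cloudy', 'rain', 'shine', 'sunrise']
for train_or_test in ['train', 'test']:  # choose train or test
    for spec in specises:                # choose the class
        os.mkdir(os.path.join(base_dir, train_or_test, spec))  # create the four weather folders under train and under test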
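One way to make the folder creation safe to rerun is os.makedirs with exist_ok=True, which creates missing parent folders and does not raise when the target already exists. The sketch below is a variant under that assumption, not the code used in these notes; it works in a temporary directory (the `demo_base` name is made up) so it does not touch the real dataset path.

```python
import os
import tempfile

classes = ['cloudy', 'rain', 'shine', 'sunrise']

# A temporary directory stands in for base_dir so the sketch leaves real data alone.
with tempfile.TemporaryDirectory() as demo_base:
    for _ in range(2):  # run the creation twice to show that repeating it does not raise
        for split in ['train', 'test']:
            for name in classes:
                os.makedirs(os.path.join(demo_base, split, name), exist_ok=True)
    created = sorted(os.listdir(os.path.join(demo_base, 'train')))

print(created)
```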

# Copy each image into the matching folder
image_dir = r'C:\Users\qjjt\Desktop\python\datasets\dataset2'
# List all images in the source directory
print(os.listdir(image_dir))
# Number the files; those whose index is divisible by 5 go into test
for i, img in enumerate(os.listdir(image_dir)):
    for spec in specises:  # find the class the image belongs to
        if spec in img:    # substring test on the file name, e.g. spec 'cloudy' matches the file cloudy03
            sourse = os.path.join(image_dir, img)  # source path and file name
            if i % 5 == 0:
                destination = os.path.join(base_dir, 'test', spec, img)   # destination path and file name
            else:
                destination = os.path.join(base_dir, 'train', spec, img)  # destination path and file name
            shutil.copy(sourse, destination)  # copy from the source folder to the destination folder
# Count the images in each train and test folder
for train_or_test in ['train', 'test']:
    for spec in specises:
        # print the number of images found under the current path
        print(train_or_test, spec, len(os.listdir(os.path.join(base_dir, train_or_test, spec))))
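The split rule and the class lookup can be tried without any files on disk. In the sketch below, `assign_split` and `find_class` are hypothetical helper names, and the file names are made up for illustration.

```python
def assign_split(index):
    """Every file whose index is divisible by 5 goes to test, the rest to train."""
    return 'test' if index % 5 == 0 else 'train'


def find_class(filename, classes):
    """Return the first class name contained in the file name, or None if nothing matches."""
    for name in classes:
        if name in filename:
            return name
    return None


classes = ['cloudy', 'rain', 'shine', 'sunrise']
# Hypothetical file names, for illustration only.
files = ['cloudy%d.jpg' % n for n in range(10)]

splits = [assign_split(i) for i, _ in enumerate(files)]
print(splits.count('train'), splits.count('test'))
print(find_class('sunrise12.jpg', classes))
```

Since the index runs over all files rather than per class, the 80/20 ratio holds for the dataset as a whole and only approximately within each class.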

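# Read and preprocess the images and create the datasets
# Give every image the same size
from torchvision import transforms
# All transformation steps go in here.
# Resize sets a new image size: smaller is faster, larger keeps more detail.
# Normalize standardizes each channel. The mean and std here are guesses; some datasets publish these two values.
transform = transforms.Compose([
    transforms.Resize((192, 192)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
])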

# Load the data from the image folders: pass the path and the transform
train_ds = torchvision.datasets.ImageFolder(train_dir, transform=transform)
test_ds = torchvision.datasets.ImageFolder(test_dir, transform=transform)
# Classes in the dataset
print(train_ds.classes)
# Index assigned to each class
print(train_ds.class_to_idx)
print(len(train_ds))

# DataLoaders
BATCHSIZE = 64
train_dl = torch.utils.data.DataLoader(train_ds, batch_size=BATCHSIZE, shuffle=True)  # the keyword is batch_size; do not forget the underscore
test_dl = torch.utils.data.DataLoader(test_ds, batch_size=BATCHSIZE)  # DataLoader has two capital letters

# Plot the images of one batch together with their labels.
# train_dl is an iterable; iter() turns it into an iterator, and next() pulls out one batch.
imgs, labels = next(iter(train_dl))
print(imgs.shape)  # [64, 3, 192, 192]; 3: RGB channels, 192: image size after Resize

# Plot a single image.
# permute reorders the dimensions; here the channel dimension is moved to the end.
im = imgs[0].permute(1, 2, 0)
print(im.shape)
# Convert to a NumPy array for plotting
im = im.numpy()
# transforms.Normalize mapped the values into [-1, 1]; map them back to [0, 1]
im = (im+1)/2
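The arithmetic behind Normalize(mean=0.5, std=0.5) and the (im+1)/2 step can be checked on single values. In this sketch, `normalize` and `denormalize` are plain-Python helpers introduced for illustration, not torchvision functions.

```python
def normalize(value, mean=0.5, std=0.5):
    """Same per-value arithmetic as transforms.Normalize: subtract the mean, divide by the std."""
    return (value - mean) / std


def denormalize(value, mean=0.5, std=0.5):
    """Inverse of normalize: multiply by the std, add the mean."""
    return value * std + mean


for v in [0.0, 0.25, 1.0]:
    n = normalize(v)
    # With mean=0.5 and std=0.5, denormalize(n) and (n + 1) / 2 are the same operation.
    print(v, n, denormalize(n), (n + 1) / 2)
```

The shortcut (im+1)/2 is only valid for mean=0.5 and std=0.5; with other statistics the general inverse has to be used.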

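# plt.imshow(im)
print(labels[0])

# Show several images and their labels at the same time.
# class_to_idx has the form rain: 1; invert it to 1: rain so that a number can be mapped back to a weather name.
# items() yields all (key, value) pairs.
id_to_class = dict((v, k) for k, v in train_ds.class_to_idx.items())
print(id_to_class)  # the mapping now has the form 1: 'rain'

# Show six images at once
plt.figure(figsize=(12, 8))
for i, (img, label) in enumerate(zip(imgs[:6], labels[:6])):
    img = (img.permute(1, 2, 0).numpy()+1)/2  # bring the values back into [0, 1]
    plt.subplot(2, 4, i+1)
    plt.title(id_to_class.get(label.item()))  # item() extracts the Python value from a tensor
    # plt.imshow(img)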

# Build the model
class net(nn.Module):
    def __init__(self):
        super(net, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 3)  # 3 input channels, 16 kernels, 3x3 convolution
        self.conv2 = nn.Conv2d(16, 32, 3)
        self.conv3 = nn.Conv2d(32, 64, 3)
        self.pool = nn.MaxPool2d(2, 2)
        self.linear_1 = nn.Linear(64*22*22, 1024)
        self.linear_2 = nn.Linear(1024, 4)

    def forward(self, input):
        x = F.relu(self.conv1(input))
        x = self.pool(x)
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        x = F.relu(self.conv3(x))
        x = self.pool(x)
        # print(x.size())  # still worth printing once, because __init__ needs this size; it comes out as [64, 64, 22, 22]
        x = x.view(-1, x.size(1)*x.size(2)*x.size(3))  # use x.size() directly instead of computing the product by hand
        x = torch.relu(self.linear_1(x))
        logits = self.linear_2(x)
        return logits
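The [64, 64, 22, 22] shape noted in forward() can be reproduced by tracing the spatial size through the three convolution and pooling pairs. The sketch below assumes no padding, stride 1 for the 3x3 convolutions and stride 2 for the 2x2 pooling; `trace_sizes` is a hypothetical helper name.

```python
def trace_sizes(size, layers):
    """Apply (kernel, stride) pairs in order and return the spatial size after each layer."""
    sizes = []
    for kernel, stride in layers:
        size = (size - kernel) // stride + 1  # no padding
        sizes.append(size)
    return sizes


# A 3x3 convolution (stride 1) followed by 2x2 max pooling (stride 2), three times
layers = [(3, 1), (2, 2)] * 3
sizes = trace_sizes(192, layers)

flat_features = 64 * sizes[-1] * sizes[-1]      # conv3 produces 64 channels
linear_1_params = flat_features * 1024 + 1024   # weights plus biases of the first linear layer

print(sizes)
print(flat_features, linear_1_params)
```

The count for the first linear layer shows where most of the parameters of this model sit, which is one reason the flattened size is worth knowing.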

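# # Predictions for one batch
# preds = model(imgs)
# # Dimension 0 is the batch and dimension 1 holds the 4 classes; pick the class with the largest value
# torch.argmax(preds, 1)

# Do not write model = net() followed by model = model().to(device); assign net().to(device) directly as below
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(device)
model = net().to(device)  # assign the instance to model directly

# Loss function
loss_fn = torch.nn.CrossEntropyLoss()

# Optimizer
opt = torch.optim.Adam(model.parameters(), lr=0.001)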

# Combined training and test function; it runs one epoch
def fit(epoches, train_dataloader, test_dataloader, model):
    # Training part
    correct = 0
    total = 0
    running_loss = 0
    for x, y in train_dataloader:  # x and y each hold one batch
        x, y = x.to(device), y.to(device)
        pred = model(x)
        loss = loss_fn(pred, y)
        opt.zero_grad()
        loss.backward()
        opt.step()
        with torch.no_grad():
            pred = torch.argmax(pred, dim=1)
            correct += (pred == y).sum().item()
            total += y.size(0)
            running_loss += loss.item()
    # loss.item() is already the mean over one batch, so average over the number of batches
    epoch_loss = running_loss / len(train_dataloader)
    epoch_acc = correct / total

    # Test part
    test_correct = 0
    test_total = 0
    test_running_loss = 0
    with torch.no_grad():
        for x, y in test_dataloader:  # x and y each hold one batch
            x, y = x.to(device), y.to(device)
            pred = model(x)
            loss = loss_fn(pred, y)
            pred = torch.argmax(pred, dim=1)
            test_correct += (pred == y).sum().item()
            test_total += y.size(0)
            test_running_loss += loss.item()
    epoch_test_loss = test_running_loss / len(test_dataloader)
    epoch_test_acc = test_correct / test_total

    print('epoch: ', epoches,
          'loss: ', round(epoch_loss, 3),
          'accuracy:', round(epoch_acc, 3),
          'test_loss: ', round(epoch_test_loss, 3),
          'test_accuracy:', round(epoch_test_acc, 3)
          )
    return epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc

epochs = 30
train_loss = []
train_correct = []
test_loss = []
test_correct = []
# Call the combined training and test function once per epoch
for epoch in range(epochs):
    epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc = fit(epoch, train_dl, test_dl, model)
    test_loss.append(epoch_test_loss)
    train_loss.append(epoch_loss)
    test_correct.append(epoch_test_acc)
    train_correct.append(epoch_acc)

plt.plot(range(1, epochs+1), train_loss, label='train_loss')
plt.plot(range(1, epochs+1), test_loss, label='test_loss')
plt.legend()
# plt.ioff()
plt.show()

3. Using dropout to reduce overfitting

During the forward pass, dropout randomly discards the outputs of some neurons. The effect is similar to training many different models, which mitigates overfitting.
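A minimal sketch of the mechanism, in plain Python rather than PyTorch: each value is zeroed with probability p during training, and the surviving values are scaled by 1/(1-p) so the expected sum stays the same (the "inverted dropout" convention that nn.Dropout also follows). The `dropout` function and its arguments are illustrative names, and the sketch assumes 0 <= p < 1.

```python
import random


def dropout(values, p, training=True, rng=random):
    """Inverted dropout: zero each value with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0:
        return list(values)  # at test time the values pass through unchanged
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 10

train_out = dropout(activations, p=0.3, rng=rng)
eval_out = dropout(activations, p=0.3, training=False)

print(train_out)
print(eval_out)
```

Because the rescaling already happens during training, nothing needs to be adjusted at test time beyond switching dropout off.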

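Reference (CSDN blog by NorthSmile): Dropout层到底在干些什么(Pytorch实现) (What the Dropout layer actually does, with a PyTorch implementation)

Dropout must be switched off at test time, so the fit function changes accordingly: it now calls model.train() before training and model.eval() before testing.

The custom model also defines dropout layers.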

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as opt
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import os
import shutil  # for copying images
# plt.ion()
import torchvision
from torchvision import datasets, transforms

print(torch.cuda.is_available())  # check whether a GPU is available

# 1. Read the images with torchvision
# ImageFolder creates a dataset from folders organized by class.
# "Organized by class" means each folder holds images of exactly one class, such as rain or cloudy.
torchvision.datasets.ImageFolder

# Locate the images
base_dir = r'C:\Users\qjjt\Desktop\python\datasets\4weather'
train_dir = os.path.join(base_dir, 'train')
test_dir = os.path.join(base_dir, 'test')
specises = ['cloudy', 'rain', 'shine', 'sunrise']
image_dir = r'C:\Users\qjjt\Desktop\python\datasets\dataset2'

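# Read and preprocess the images and create the datasets
# Give every image the same size
from torchvision import transforms
# All transformation steps go in here.
# Resize sets a new image size: smaller is faster, larger keeps more detail.
# Normalize standardizes each channel. The mean and std here are guesses; some datasets publish these two values.
transform = transforms.Compose([
    transforms.Resize((192, 192)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
])

# Load the data from the image folders: pass the path and the transform
train_ds = torchvision.datasets.ImageFolder(train_dir, transform=transform)
test_ds = torchvision.datasets.ImageFolder(test_dir, transform=transform)
# Classes in the dataset
print(train_ds.classes)
# Index assigned to each class
print(train_ds.class_to_idx)
print(len(train_ds))

# DataLoaders
BATCHSIZE = 64
train_dl = torch.utils.data.DataLoader(train_ds, batch_size=BATCHSIZE, shuffle=True)  # the keyword is batch_size; do not forget the underscore
test_dl = torch.utils.data.DataLoader(test_ds, batch_size=BATCHSIZE)  # DataLoader has two capital letters

# Plot the images of one batch together with their labels.
# train_dl is an iterable; iter() turns it into an iterator, and next() pulls out one batch.
imgs, labels = next(iter(train_dl))
print(imgs.shape)  # [64, 3, 192, 192]; 3: RGB channels, 192: image size after Resize

# Plot a single image.
# permute reorders the dimensions; here the channel dimension is moved to the end.
im = imgs[0].permute(1, 2, 0)
print(im.shape)
# Convert to a NumPy array for plotting
im = im.numpy()
# transforms.Normalize mapped the values into [-1, 1]; map them back to [0, 1]
im = (im+1)/2
# plt.imshow(im)
print(labels[0])

# Show several images and their labels at the same time.
# class_to_idx has the form rain: 1; invert it to 1: rain so that a number can be mapped back to a weather name.
# items() yields all (key, value) pairs.
id_to_class = dict((v, k) for k, v in train_ds.class_to_idx.items())
print(id_to_class)  # the mapping now has the form 1: 'rain'

# Show six images at once
plt.figure(figsize=(12, 8))
for i, (img, label) in enumerate(zip(imgs[:6], labels[:6])):
    img = (img.permute(1, 2, 0).numpy()+1)/2  # bring the values back into [0, 1]
    plt.subplot(2, 4, i+1)
    plt.title(id_to_class.get(label.item()))  # item() extracts the Python value from a tensor
    # plt.imshow(img)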

# Build the model
class net(nn.Module):
    def __init__(self):
        super(net, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 3)  # 3 input channels, 16 kernels, 3x3 convolution
        self.conv2 = nn.Conv2d(16, 32, 3)
        self.conv3 = nn.Conv2d(32, 64, 3)
        self.pool = nn.MaxPool2d(2, 2)
        self.drop = nn.Dropout(0.3)  # drops 30% of the units; use Dropout for linear layers and Dropout2d for 4-D feature maps
        self.drop2d = nn.Dropout2d(0.4)
        self.linear_1 = nn.Linear(64*22*22, 1024)
        self.linear_2 = nn.Linear(1024, 256)
        self.linear_3 = nn.Linear(256, 4)

    def forward(self, input):
        x = F.relu(self.conv1(input))
        x = self.pool(x)
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        x = F.relu(self.conv3(x))
        x = self.pool(x)
        x = self.drop2d(x)  # dropout applied to the convolutional feature maps
        # print(x.size())  # still worth printing once, because __init__ needs this size; it comes out as [64, 64, 22, 22]
        x = x.view(-1, x.size(1)*x.size(2)*x.size(3))  # use x.size() directly instead of computing the product by hand
        x = torch.relu(self.linear_1(x))
        x = self.drop(x)  # this dropout is for the linear layers; it is best placed after them
        x = torch.relu(self.linear_2(x))
        x = self.drop(x)
        logits = self.linear_3(x)
        return logits

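# # Predictions for one batch
# preds = model(imgs)
# # Dimension 0 is the batch and dimension 1 holds the 4 classes; pick the class with the largest value
# torch.argmax(preds, 1)

# Do not write model = net() followed by model = model().to(device); assign net().to(device) directly as below
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(device)
model = net().to(device)  # assign the instance to model directly

# Loss function
loss_fn = torch.nn.CrossEntropyLoss()

# Optimizer
opt = torch.optim.Adam(model.parameters(), lr=0.001)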

# Combined training and test function; it runs one epoch
def fit(epoches, train_dataloader, test_dataloader, model):
    # Training part
    correct = 0
    total = 0
    running_loss = 0
    model.train()  # training mode: dropout is active
    for x, y in train_dataloader:  # x and y each hold one batch
        x, y = x.to(device), y.to(device)
        pred = model(x)
        loss = loss_fn(pred, y)
        opt.zero_grad()
        loss.backward()
        opt.step()
        with torch.no_grad():
            pred = torch.argmax(pred, dim=1)
            correct += (pred == y).sum().item()
            total += y.size(0)
            running_loss += loss.item()
    # loss.item() is already the mean over one batch, so average over the number of batches
    epoch_loss = running_loss / len(train_dataloader)
    epoch_acc = correct / total

    # Test part
    test_correct = 0
    test_total = 0
    test_running_loss = 0
    model.eval()  # evaluation mode: dropout is switched off
    with torch.no_grad():
        for x, y in test_dataloader:  # x and y each hold one batch
            x, y = x.to(device), y.to(device)
            pred = model(x)
            loss = loss_fn(pred, y)
            pred = torch.argmax(pred, dim=1)
            test_correct += (pred == y).sum().item()
            test_total += y.size(0)
            test_running_loss += loss.item()
    epoch_test_loss = test_running_loss / len(test_dataloader)
    epoch_test_acc = test_correct / test_total

    print('epoch: ', epoches,
          'loss: ', round(epoch_loss, 3),
          'accuracy:', round(epoch_acc, 3),
          'test_loss: ', round(epoch_test_loss, 3),
          'test_accuracy:', round(epoch_test_acc, 3)
          )
    return epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc

epochs = 30
train_loss = []
train_correct = []
test_loss = []
test_correct = []
# Call the combined training and test function once per epoch
for epoch in range(epochs):
    epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc = fit(epoch, train_dl, test_dl, model)
    test_loss.append(epoch_test_loss)
    train_loss.append(epoch_loss)
    test_correct.append(epoch_test_acc)
    train_correct.append(epoch_acc)

plt.plot(range(1, epochs+1), train_loss, label='train_loss')
plt.plot(range(1, epochs+1), test_loss, label='test_loss')
plt.legend()
# plt.ioff()
plt.show()

4. Batch normalization

Batch normalization does not standardize only the network input; it standardizes the output of every layer it is attached to.
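The core computation is small enough to write out for a batch of scalars. This is a sketch in plain Python that omits the learnable scale and shift of a real BN layer; `batch_norm` and `eps` are names chosen for illustration.

```python
import math


def batch_norm(values, eps=1e-5):
    """Standardize a batch of scalars to zero mean and (almost) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance over the batch
    return [(v - mean) / math.sqrt(var + eps) for v in values]


batch = [2.0, 4.0, 6.0, 8.0]
normed = batch_norm(batch)

print([round(v, 3) for v in normed])
```

In a convolutional layer the same computation is done per channel, over the batch and both spatial dimensions, which is why BatchNorm2d takes the channel count as its argument.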

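Reference (CSDN blog by hebi123s): 什么是批标准化 (Batch Normalization) (What is batch normalization)

Placing the BN layer after the activation function tends to work better. The original formulation puts it before the activation, and both orderings are used in practice.

Note: dropout and BN layers behave differently during training and testing, so model.train() and model.eval() must be called to switch between the two modes.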
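Why the mode matters for BN can be seen in a toy version: during training it normalizes with the statistics of the current batch and updates running averages, while in evaluation mode it uses the stored running averages instead. `ToyBatchNorm` below is a hypothetical single-feature class written for this note; it is a simplification and does not reproduce every detail of nn.BatchNorm1d (for example, it has no learnable scale and shift).

```python
class ToyBatchNorm:
    """Hypothetical single-feature batch norm that keeps running statistics."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.training = True
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0

    def train(self):
        self.training = True

    def eval(self):
        self.training = False

    def __call__(self, values):
        if self.training:
            mean = sum(values) / len(values)
            var = sum((v - mean) ** 2 for v in values) / len(values)
            # update the running statistics with an exponential moving average
            self.running_mean += self.momentum * (mean - self.running_mean)
            self.running_var += self.momentum * (var - self.running_var)
        else:
            mean, var = self.running_mean, self.running_var
        return [(v - mean) / (var + self.eps) ** 0.5 for v in values]


bn = ToyBatchNorm()
batch = [2.0, 4.0, 6.0, 8.0]

bn.train()
train_out = bn(batch)  # normalized with this batch's own mean and variance

bn.eval()
eval_out = bn(batch)   # normalized with the running statistics instead

print(train_out == eval_out)
```

The same input gives different outputs in the two modes, so forgetting model.eval() before testing changes the reported loss and accuracy.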


import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as opt
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import os
import shutil  # for copying images
# plt.ion()
import torchvision
from torchvision import datasets, transforms

print(torch.cuda.is_available())  # check whether a GPU is available

# 1. Read the images with torchvision
# ImageFolder creates a dataset from folders organized by class.
# "Organized by class" means each folder holds images of exactly one class, such as rain or cloudy.
torchvision.datasets.ImageFolder

# Locate the images
base_dir = r'C:\Users\qjjt\Desktop\python\datasets\4weather'
train_dir = os.path.join(base_dir, 'train')
test_dir = os.path.join(base_dir, 'test')
specises = ['cloudy', 'rain', 'shine', 'sunrise']
image_dir = r'C:\Users\qjjt\Desktop\python\datasets\dataset2'

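# Read and preprocess the images and create the datasets
# Give every image the same size
from torchvision import transforms
# All transformation steps go in here.
# Resize sets a new image size: smaller is faster, larger keeps more detail.
# Normalize standardizes each channel. The mean and std here are guesses; some datasets publish these two values.
transform = transforms.Compose([
    transforms.Resize((192, 192)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
])

# Load the data from the image folders: pass the path and the transform
train_ds = torchvision.datasets.ImageFolder(train_dir, transform=transform)
test_ds = torchvision.datasets.ImageFolder(test_dir, transform=transform)
# Classes in the dataset
print(train_ds.classes)
# Index assigned to each class
print(train_ds.class_to_idx)
print(len(train_ds))

# DataLoaders
BATCHSIZE = 64
train_dl = torch.utils.data.DataLoader(train_ds, batch_size=BATCHSIZE, shuffle=True)  # the keyword is batch_size; do not forget the underscore
test_dl = torch.utils.data.DataLoader(test_ds, batch_size=BATCHSIZE)  # DataLoader has two capital letters

# Plot the images of one batch together with their labels.
# train_dl is an iterable; iter() turns it into an iterator, and next() pulls out one batch.
imgs, labels = next(iter(train_dl))
print(imgs.shape)  # [64, 3, 192, 192]; 3: RGB channels, 192: image size after Resize

# Plot a single image.
# permute reorders the dimensions; here the channel dimension is moved to the end.
im = imgs[0].permute(1, 2, 0)
print(im.shape)
# Convert to a NumPy array for plotting
im = im.numpy()
# transforms.Normalize mapped the values into [-1, 1]; map them back to [0, 1]
im = (im+1)/2
# plt.imshow(im)
print(labels[0])

# Show several images and their labels at the same time.
# class_to_idx has the form rain: 1; invert it to 1: rain so that a number can be mapped back to a weather name.
# items() yields all (key, value) pairs.
id_to_class = dict((v, k) for k, v in train_ds.class_to_idx.items())
print(id_to_class)  # the mapping now has the form 1: 'rain'

# Show six images at once
plt.figure(figsize=(12, 8))
for i, (img, label) in enumerate(zip(imgs[:6], labels[:6])):
    img = (img.permute(1, 2, 0).numpy()+1)/2  # bring the values back into [0, 1]
    plt.subplot(2, 4, i+1)
    plt.title(id_to_class.get(label.item()))  # item() extracts the Python value from a tensor
    # plt.imshow(img)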

# Build the model
class net(nn.Module):
    def __init__(self):
        super(net, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 3)  # 3 input channels, 16 kernels, 3x3 convolution
        self.bn1 = nn.BatchNorm2d(16)     # 2d because it follows a convolution; 16 matches the 16 kernels of the first layer
        self.conv2 = nn.Conv2d(16, 32, 3)
        self.bn2 = nn.BatchNorm2d(32)
        self.conv3 = nn.Conv2d(32, 64, 3)
        self.bn3 = nn.BatchNorm2d(64)
        self.pool = nn.MaxPool2d(2, 2)
        self.drop = nn.Dropout(0.3)  # drops 30% of the units; use Dropout for linear layers and Dropout2d for 4-D feature maps
        self.drop2d = nn.Dropout2d(0.4)
        self.linear_1 = nn.Linear(64*22*22, 1024)
        self.bn_l1 = nn.BatchNorm1d(1024)
        self.linear_2 = nn.Linear(1024, 256)
        self.bn_l2 = nn.BatchNorm1d(256)
        self.linear_3 = nn.Linear(256, 4)

    def forward(self, input):
        x = F.relu(self.conv1(input))
        x = self.pool(x)
        x = self.bn1(x)  # placing it before or after pooling makes little difference
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        x = self.bn2(x)
        x = F.relu(self.conv3(x))
        x = self.pool(x)
        x = self.bn3(x)
        x = self.drop2d(x)  # dropout applied to the convolutional feature maps
        # print(x.size())  # still worth printing once, because __init__ needs this size; it comes out as [64, 64, 22, 22]
        x = x.view(-1, x.size(1)*x.size(2)*x.size(3))  # use x.size() directly instead of computing the product by hand
        x = torch.relu(self.linear_1(x))
        x = self.bn_l1(x)  # placed before dropout
        x = self.drop(x)   # this dropout is for the linear layers; it is best placed after them
        x = torch.relu(self.linear_2(x))
        x = self.bn_l2(x)
        x = self.drop(x)
        logits = self.linear_3(x)
        return logits

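# # Predictions for one batch
# preds = model(imgs)
# # Dimension 0 is the batch and dimension 1 holds the 4 classes; pick the class with the largest value
# torch.argmax(preds, 1)

# Do not write model = net() followed by model = model().to(device); assign net().to(device) directly as below
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(device)
model = net().to(device)  # assign the instance to model directly

# Loss function
loss_fn = torch.nn.CrossEntropyLoss()

# Optimizer
opt = torch.optim.Adam(model.parameters(), lr=0.001)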

# Combined training and test function; it runs one epoch
def fit(epoches, train_dataloader, test_dataloader, model):
    # Training part
    correct = 0
    total = 0
    running_loss = 0
    model.train()  # training mode: dropout is active and BN uses the statistics of each batch
    for x, y in train_dataloader:  # x and y each hold one batch
        x, y = x.to(device), y.to(device)
        pred = model(x)
        loss = loss_fn(pred, y)
        opt.zero_grad()
        loss.backward()
        opt.step()
        with torch.no_grad():
            pred = torch.argmax(pred, dim=1)
            correct += (pred == y).sum().item()
            total += y.size(0)
            running_loss += loss.item()
    # loss.item() is already the mean over one batch, so average over the number of batches
    epoch_loss = running_loss / len(train_dataloader)
    epoch_acc = correct / total

    # Test part
    test_correct = 0
    test_total = 0
    test_running_loss = 0
    model.eval()  # evaluation mode: dropout is switched off and BN uses its running statistics
    with torch.no_grad():
        for x, y in test_dataloader:  # x and y each hold one batch
            x, y = x.to(device), y.to(device)
            pred = model(x)
            loss = loss_fn(pred, y)
            pred = torch.argmax(pred, dim=1)
            test_correct += (pred == y).sum().item()
            test_total += y.size(0)
            test_running_loss += loss.item()
    epoch_test_loss = test_running_loss / len(test_dataloader)
    epoch_test_acc = test_correct / test_total

    print('epoch: ', epoches,
          'loss: ', round(epoch_loss, 3),
          'accuracy:', round(epoch_acc, 3),
          'test_loss: ', round(epoch_test_loss, 3),
          'test_accuracy:', round(epoch_test_acc, 3)
          )
    return epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc

epochs = 30
train_loss = []
train_correct = []
test_loss = []
test_correct = []
# Call the combined training and test function once per epoch
for epoch in range(epochs):
    epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc = fit(epoch, train_dl, test_dl, model)
    test_loss.append(epoch_test_loss)
    train_loss.append(epoch_loss)
    test_correct.append(epoch_test_acc)
    train_correct.append(epoch_acc)

plt.plot(range(1, epochs+1), train_loss, label='train_loss')
plt.plot(range(1, epochs+1), test_loss, label='test_loss')
plt.legend()
# plt.ioff()
plt.show()