Deep Learning in Practice: Training a CNN to Recognize COVID-19 Pneumonia in Lung CT Images

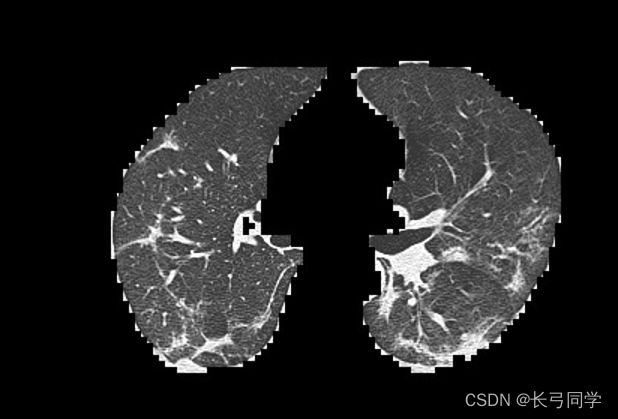

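1. CT features of COVID-19 pneumonia in brief

(The following is compiled from reference material. I am not a medical imaging specialist, so corrections are welcome.)

The main findings are interstitial changes and ground-glass opacities.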

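2. Data preprocessing

To reduce the GPU workload, first use a crop function to cut away the black borders of each CT image, then resize the image.

(After this step the images are 420*290.)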
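The post does not show the crop function itself, so the following is only a minimal sketch of the idea, assuming a grayscale slice stored as a NumPy array. The names `crop_black_border` and `resize_nearest`, the brightness threshold of 10, and the synthetic test image are all illustrative assumptions, and the nearest-neighbour resize is a stand-in for whatever library resize was actually used. The output size follows the order later passed to `target_size` in the training script.

```python
import numpy as np


def crop_black_border(img, threshold=10):
    """Return the smallest rectangle of img that contains pixels brighter than threshold."""
    mask = img > threshold
    rows = np.flatnonzero(mask.any(axis=1))  # rows with at least one bright pixel
    cols = np.flatnonzero(mask.any(axis=0))  # columns with at least one bright pixel
    if rows.size == 0:
        return img  # nothing above the threshold, leave the image unchanged
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize using plain NumPy indexing (hypothetical helper)."""
    row_idx = np.arange(out_h) * img.shape[0] // out_h
    col_idx = np.arange(out_w) * img.shape[1] // out_w
    return img[row_idx[:, None], col_idx]


# Synthetic 100 x 120 "slice": a black frame around a bright 60 x 80 region
slice_ = np.zeros((100, 120), dtype=np.uint8)
slice_[20:80, 20:100] = 200

cropped = crop_black_border(slice_)
resized = resize_nearest(cropped, 420, 290)
print(cropped.shape, resized.shape)
```

Cropping before resizing means the resized pixels are spent on lung tissue rather than on the empty border.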

Total number of images in the training and test sets:

| | train | test |
| --- | --- | --- |
| covid-19 | 2289 | 572 |
| normal | 1592 | 398 |
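The counts in the table can be related to the batch settings used by the training script in the next section (batch size 64, `steps_per_epoch=10`). The short calculation below is a sketch of that arithmetic only. It makes no claim about how Keras behaves when the requested number of steps differs from one full pass.

```python
import math

# Image counts taken from the table above
train_images = 2289 + 1592
test_images = 572 + 398
batch_size = 64  # value used in the training script

# Batches needed to see every image exactly once
train_batches = math.ceil(train_images / batch_size)
test_batches = math.ceil(test_images / batch_size)

# Images actually drawn per epoch when steps_per_epoch=10
images_per_epoch = 10 * batch_size

print(train_images, test_images)      # 3881 970
print(train_batches, test_batches)    # 61 16
print(images_per_epoch)               # 640
```

With these numbers, one epoch of 10 steps covers about a sixth of the training set, and 50 validation steps is more than the 16 batches that one pass over the test set provides.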

3. Using a convolutional neural network (CNN)

Parameters:

steps_per_epoch=10,

validation_steps=50,

verbose=1,

epochs=70

The code is as follows:

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
# Take layers and models from tf.keras so that every Keras object comes from the same package
from tensorflow.keras import layers, models

####### Generating data
batch_size = 64
SIZE1 = 420
SIZE2 = 290

train_datagen = ImageDataGenerator(rescale=1. / 255)

# Change the training path '../train_CNN' to where your training set's directory is
train_generator = train_datagen.flow_from_directory(
    '../train_CNN',  # training set (3881 images according to the table above)
    target_size=(SIZE1, SIZE2),  # Keras interprets target_size as (height, width)
    batch_size=batch_size,
    color_mode='grayscale',
    classes=['class1', 'class2'],
    class_mode='binary')

val_datagen = ImageDataGenerator(rescale=1. / 255)

# Change the validation path '../test_CNN' to where your validation set's directory is
val_generator = val_datagen.flow_from_directory(
    '../test_CNN',  # test set, used here as validation data (970 images according to the table above)
    target_size=(SIZE1, SIZE2),
    batch_size=batch_size,
    color_mode='grayscale',
    classes=['class1', 'class2'],
    class_mode='binary')

################ CNN Model Architecture
def make_model():
    model = models.Sequential()

    # Convolutional Layer 1
    model.add(layers.Conv2D(16, (3, 3), input_shape=(SIZE1, SIZE2, 1), padding="same"))
    model.add(layers.BatchNormalization())
    model.add(layers.ReLU())
    model.add(layers.MaxPooling2D((2, 2)))

    # Convolutional Layer 2
    model.add(layers.Conv2D(32, (3, 3), padding="same"))
    model.add(layers.BatchNormalization())
    model.add(layers.ReLU())
    model.add(layers.MaxPooling2D((2, 2)))

    # Convolutional Layer 3
    model.add(layers.Conv2D(64, (3, 3), padding="same"))
    model.add(layers.BatchNormalization())
    model.add(layers.ReLU())
    model.add(layers.MaxPooling2D((2, 2)))

    # Convolutional Layer 4
    model.add(layers.Conv2D(128, (3, 3), padding="same"))
    model.add(layers.BatchNormalization())
    model.add(layers.ReLU())
    model.add(layers.MaxPooling2D((2, 2)))

    # Fully Connected Layer
    model.add(layers.Flatten())
    model.add(layers.Dense(256))
    model.add(layers.BatchNormalization())
    model.add(layers.ReLU())
    model.add(layers.Dropout(0.1))

    # Output Layer: a single sigmoid unit for the binary decision
    model.add(layers.Dense(1, activation='sigmoid'))

    return model


model = make_model()

###################################### Compiling and Training the model
n_epochs = 70

model.compile(loss='binary_crossentropy',
              optimizer='adam',
              # Explicit names keep the history keys stable: 'precision', 'recall', 'val_precision', 'val_recall'
              metrics=[tf.keras.metrics.Precision(name='precision'),
                       tf.keras.metrics.Recall(name='recall'),
                       'accuracy'])

history = model.fit(train_generator,
                    steps_per_epoch=10,
                    validation_data=val_generator,
                    validation_steps=50,
                    verbose=1,
                    epochs=n_epochs)

######################## Evaluating
print(history.history.keys())

Train_accuracy = history.history['accuracy']
print(Train_accuracy)
print(np.mean(Train_accuracy))

# model.save writes a Keras model format, not a pickle, so use an .h5 file name
model.save("model_cnn.h5")

val_accuracy = history.history['val_accuracy']
print(val_accuracy)
print(np.mean(val_accuracy))

epochs = range(1, len(Train_accuracy) + 1)
plt.figure(figsize=(12, 6))
plt.plot(epochs, Train_accuracy, 'g', label='Training acc')
plt.plot(epochs, val_accuracy, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('accuracy')
plt.ylim(0.45, 1)
# Show every epoch; a fixed upper limit of 50 would cut off the last 20 of the 70 epochs
plt.xlim(0, len(Train_accuracy))
plt.legend()
plt.show()

val_recall = history.history['val_recall']
print(val_recall)
avg_recall = np.mean(val_recall)
print(avg_recall)

val_precision = history.history['val_precision']
avg_precision = np.mean(val_precision)
print(avg_precision)

# The x axis is built from the training history, so it has one entry per training epoch
epochs = range(1, len(Train_accuracy) + 1)
plt.figure(figsize=(12, 6))
plt.plot(epochs, val_recall, 'g', label='Validation Recall')
plt.plot(epochs, val_precision, 'b', label='Validation Precision')
plt.title('Validation Recall and Validation Precision')
plt.xlabel('Epochs')
plt.ylabel('Recall and Precision')
plt.legend()
plt.ylim(0, 1)
plt.show()

# F1 computed from the epoch-averaged validation precision and recall
Macro_F1score = (2 * avg_precision * avg_recall) / (avg_precision + avg_recall)
print(Macro_F1score)
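To get a feel for the size of the network defined in `make_model`, the spatial dimensions can be followed through the four pooling stages by hand. The sketch below assumes the default `MaxPooling2D` behaviour (no padding, so odd sizes are rounded down) and that the `"same"`-padded convolutions leave the height and width unchanged. The helper name `pooled_size` is made up for this illustration.

```python
def pooled_size(size, n_pools, pool=2):
    """Spatial size after n_pools max-pooling layers without padding (floor division)."""
    for _ in range(n_pools):
        size //= pool
    return size


height = pooled_size(420, 4)   # 420 -> 210 -> 105 -> 52 -> 26
width = pooled_size(290, 4)    # 290 -> 145 -> 72 -> 36 -> 18

# The last convolution has 128 filters, so Flatten yields height * width * 128 values
flat_features = height * width * 128

# Weights plus biases of the Dense(256) layer that follows Flatten
dense_params = flat_features * 256 + 256

print(height, width, flat_features, dense_params)  # 26 18 59904 15335680
```

Under these assumptions, most of the trainable parameters sit in the first dense layer, which is one reason cropping and resizing the input matters for GPU cost.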

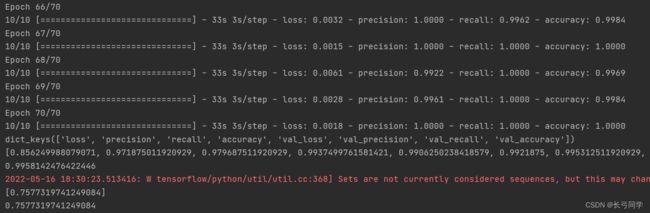

4. Training results

As the figure shows, accuracy is 0.9958 and val_recall is 0.7577.
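The last line of the script derives an F1 score from precision and recall averaged over all epochs. That number can differ from the F1 of the final epoch, which describes the model that is actually saved. The sketch below illustrates the difference with made-up per-epoch values. The two-element lists are purely hypothetical and are not results from this training run.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical per-epoch validation metrics (NOT taken from the run in this post)
val_precision = [0.50, 0.90]
val_recall = [0.90, 0.50]

mean_precision = sum(val_precision) / len(val_precision)
mean_recall = sum(val_recall) / len(val_recall)

f1_of_means = f1(mean_precision, mean_recall)        # what the script computes
f1_last_epoch = f1(val_precision[-1], val_recall[-1])  # describes the final model

print(round(f1_of_means, 3), round(f1_last_epoch, 3))
```

In this toy case the averaged version comes out higher than the final-epoch value, so it may be worth reporting both.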

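5. Problems

Plotting the recall with plt (matplotlib.pyplot) raises an error:

ValueError: x and y must have same first dimension, but have shapes (70,) and (1,)

plt.plot requires x and y to have the same length. Here x holds 70 values, one per epoch, while the y series I passed holds only one, so the call fails and the curve is not drawn. This does not affect the training of the model.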
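One way to keep the plot from failing is to build the x axis from the series that is being plotted instead of from the training accuracy. The helper below is a minimal sketch of that idea. The name `xy_for_plot` and its `total_epochs` argument are hypothetical, and the single value in the example is only a placeholder for a length-1 series. It does not explain why the series has one entry, which would still need to be checked in the training log.

```python
def xy_for_plot(values, total_epochs=None):
    """Return (x, y) lists of equal length, with x counting from 1.

    If total_epochs is given and differs from len(values), print a warning
    so that a short series is noticed instead of silently plotted.
    """
    y = list(values)
    x = list(range(1, len(y) + 1))
    if total_epochs is not None and len(y) != total_epochs:
        print(f"warning: expected {total_epochs} points, got {len(y)}")
    return x, y


# A series with a single entry, as in the error message above (placeholder value)
x, y = xy_for_plot([0.7577], total_epochs=70)
print(len(x), len(y))
```

In the script, `plt.plot(*xy_for_plot(val_recall, n_epochs), 'g', label='Validation Recall')` would then draw whatever points exist and print a warning when some are missing.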