GridSearchCV 'roc_auc' result disagrees with roc_auc_score

I ran into this problem recently while tuning XGBoost hyperparameters, and I am writing down the fix here.

from sklearn.model_selection import GridSearchCV
import xgboost as xgb
from sklearn.metrics import (
    classification_report, roc_curve, auc, confusion_matrix,
    recall_score, precision_score, f1_score, roc_auc_score, make_scorer,
)

param_test1 = {
    'max_depth': list(range(3, 10, 2)),        # 3, 5, 7, 9
    'min_child_weight': list(range(1, 6, 2)),  # 1, 3, 5
}

gsearch = GridSearchCV(
    estimator=xgb.XGBClassifier(
        learning_rate=0.1,
        n_estimators=140,
        max_depth=5,
        min_child_weight=1,
        gamma=0,
        subsample=0.8,
        colsample_bytree=0.8,
        nthread=4,
        scale_pos_weight=1,
        seed=27),
    param_grid=param_test1,
    scoring='roc_auc',
    cv=5)

# X_train, y_train, X_test, y_test are assumed to be defined already.
gsearch.fit(X_train, y_train)
y_pred = gsearch.predict(X_test)  # hard 0/1 class labels

print('max_depth, min_child_weight')
print('Best parameters:', gsearch.best_params_)
print('Best cross-validation score(AUC):{:.3f}'.format(gsearch.best_score_))
print('Test set score(AUC):{:.3f}'.format(gsearch.score(X_test, y_test)))
# Note: roc_auc_score is given the hard labels y_pred here, which causes the mismatch.
print('Test set AUC:{:.3f}'.format(roc_auc_score(y_test, y_pred)))
print('Test set recall:{:.3f}'.format(recall_score(y_test, y_pred)))
print('Test set precision:{:.3f}'.format(precision_score(y_test, y_pred)))
print('Test set F1:{:.3f}'.format(f1_score(y_test, y_pred)))

Output:

max_depth, min_child_weight
Best parameters: {'max_depth': 3, 'min_child_weight': 3}
Best cross-validation score(AUC):0.835
Test set score(AUC):0.836
Test set AUC:0.680
Test set recall:0.371
Test set precision:0.911
Test set F1:0.528

The AUC that GridSearchCV reports on the test set (0.836) differs from the 0.680 obtained by calling roc_auc_score directly; the latter is much lower.
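A gap this large is more than cross-validation noise, so it is worth checking what each call actually receives. The sketch below is an illustration, not code from the original experiment: `pairwise_auc` is a hypothetical helper and the eight samples are made up. It computes AUC from its definition (the fraction of positive/negative pairs in which the positive is ranked higher, with ties counting half), once on continuous scores and once on the same scores thresholded at 0.5.

```python
def pairwise_auc(y_true, y_score):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up toy data: four negatives followed by four positives.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.10, 0.20, 0.30, 0.45, 0.40, 0.48, 0.70, 0.90]
# Thresholding at 0.5 mimics what predict() returns for a binary classifier.
labels = [1 if s >= 0.5 else 0 for s in scores]

auc_scores = pairwise_auc(y_true, scores)
auc_labels = pairwise_auc(y_true, labels)
print('AUC from scores: {:.4f}'.format(auc_scores))
print('AUC from labels: {:.4f}'.format(auc_labels))
```

With hard labels, every positive predicted as 0 ties with every negative predicted as 0, so most of the ranking information is lost and the value reduces to (TPR + TNR) / 2. The toy data has low recall and high precision, a pattern similar to the recall and precision printed above.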

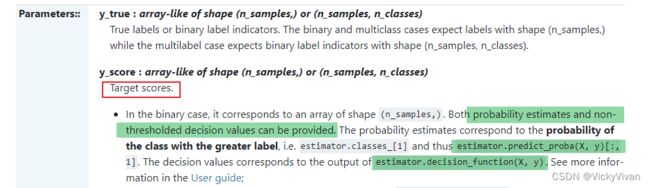

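The cause is that I called roc_auc_score incorrectly: I passed it the hard class labels returned by predict. The official documentation for sklearn.metrics.roc_auc_score describes y_score as probability estimates or non-thresholded decision values, not predicted labels.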

The correct approach is:

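# Probability of the positive class (column 1), not the hard labels
y_score = gsearch.predict_proba(X_test)[:, 1]
print('Test set AUC:{:.3f}'.format(roc_auc_score(y_test, y_score)))

Now the result matches the AUC that GridSearchCV reports on the test set.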
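To reproduce the effect end to end without the original data, here is a minimal sketch under stated assumptions: synthetic data from make_classification and a RandomForestClassifier standing in for XGBClassifier. Both are my substitutions, not the setup of the experiment above; a random forest exposes predict_proba but no decision_function, so the 'roc_auc' scorer is fed predict_proba. The sketch compares three ways of obtaining a test AUC.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import balanced_accuracy_score, roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic, imbalanced binary data (illustrative only).
X, y = make_classification(n_samples=2000, n_features=20,
                           weights=[0.8, 0.2], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

search = GridSearchCV(
    estimator=RandomForestClassifier(n_estimators=50, random_state=0),
    param_grid={'max_depth': [3, 5]},
    scoring='roc_auc',
    cv=5)
search.fit(X_train, y_train)

y_pred = search.predict(X_test)               # hard 0/1 labels
y_score = search.predict_proba(X_test)[:, 1]  # positive-class probability

auc_from_labels = roc_auc_score(y_test, y_pred)    # the mistaken call
auc_from_scores = roc_auc_score(y_test, y_score)   # the corrected call
auc_from_search = search.score(X_test, y_test)     # what GridSearchCV reports

print('AUC from labels: {:.3f}'.format(auc_from_labels))
print('AUC from scores: {:.3f}'.format(auc_from_scores))
print('AUC from search: {:.3f}'.format(auc_from_search))
print('Balanced accuracy: {:.3f}'.format(balanced_accuracy_score(y_test, y_pred)))
```

auc_from_scores and auc_from_search should agree, while auc_from_labels equals the balanced accuracy, (TPR + TNR) / 2, because a ROC curve built from 0/1 labels has only a single operating point.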

Likewise, if you compute the AUC with roc_curve + auc, the same caveat applies. The correct call is:

y_score = gsearch.predict_proba(X_test)[:, 1]
fpr, tpr, thresholds = roc_curve(y_test, y_score)
roc_auc = auc(fpr, tpr)

Some estimators also support decision_function. When the wrapped estimator implements it, y_score can be obtained like this instead:

y_score = gsearch.decision_function(X_test)

Apart from roc_auc, which needs y_score, most other metrics take the predicted labels y_pred:

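y_pred = gsearch.predict(X_test)
precision = precision_score(y_test, y_pred)
recall = recall_score(y_test, y_pred)
f1 = f1_score(y_test, y_pred)

Also, some people pass make_scorer to GridSearchCV's scoring parameter to call roc_auc_score indirectly. In that case, note that the following three forms are equivalent here (look at the scoring= argument). Only forms like these guarantee that roc_auc_score receives y_score (probability estimates, or non-thresholded decision values) rather than predicted labels. needs_threshold=True prefers decision_function and falls back to predict_proba, while needs_proba=True always uses predict_proba. In scikit-learn 1.4 and later both flags are deprecated in favor of response_method.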

param_test = {
    'max_depth': list(range(3, 10, 2)),
    'min_child_weight': list(range(1, 6, 2)),
}

estimator = xgb.XGBClassifier(
    learning_rate=0.1,
    n_estimators=140,
    max_depth=5,
    min_child_weight=1,
    gamma=0,
    subsample=0.8,
    colsample_bytree=0.8,
    nthread=4,
    scale_pos_weight=1,
    seed=27)

# Form 1: the built-in 'roc_auc' scorer string
gsearch0 = GridSearchCV(
    estimator=estimator,
    param_grid=param_test,
    scoring='roc_auc',
    cv=5)

# Form 2: make_scorer fed with decision values (falls back to probabilities)
gsearch1 = GridSearchCV(
    estimator=estimator,
    param_grid=param_test,
    scoring=make_scorer(roc_auc_score, needs_threshold=True),
    cv=5)

# Form 3: make_scorer fed with predict_proba output
gsearch2 = GridSearchCV(
    estimator=estimator,
    param_grid=param_test,
    scoring=make_scorer(roc_auc_score, needs_proba=True),
    cv=5)

References:

The score evaluation with "roc_auc" is not the same in GridSearchCV() and roc_auc_score() functon · Issue #23158 · scikit-learn/scikit-learn · GitHub

Inconsistency between GridSearchCV 'roc_auc' scoring and roc_auc_score metric · Issue #24905 · scikit-learn/scikit-learn · GitHub

'roc_auc' scoring function differs from roc_auc_score() when used inside GridSearchCV · Issue #16565 · scikit-learn/scikit-learn · GitHub

3.3. Metrics and scoring: quantifying the quality of predictions — scikit-learn 1.1.3 documentation