Python crawler: scraping the Kuaidu Novel (快读小说) app

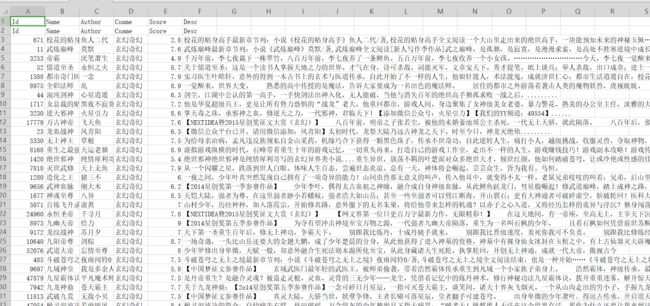

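1. The scraping result (a CSV file; the header row shows up twice, most likely because the script was run more than once with the file opened in append mode; the data rows are unaffected)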

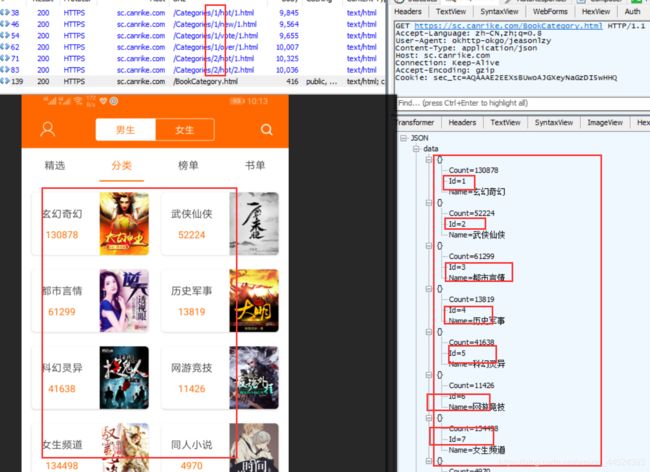

2. Capture the app's traffic with Fiddler 4

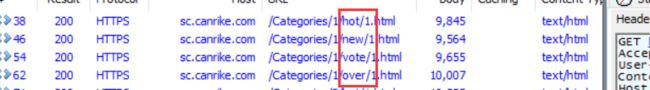

Looking at the captured URLs, the pattern is easy to spot. To scrape a different category, change the first number in the URL, for example:

https://sc.canrike.com/Categories/1/hot/1.html

https://sc.canrike.com/Categories/2/hot/1.html

To turn pages, change the last number in the URL, for example:

https://sc.canrike.com/Categories/2/hot/1.html

https://sc.canrike.com/Categories/2/hot/2.html

To scrape one of the lists inside a category (hottest, newest, ranking, completed), change the keyword in the URL: hot, new, vote, or over.
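
The three URL rules above can be folded into one small helper. This is only a sketch: the names `BASE_URL`, `SORT_KEYS`, and `build_category_url` are made up for illustration, and the pattern is simply the one visible in the captured URLs.

```python
# Hypothetical helper; the URL pattern is the one seen in the captured requests.
BASE_URL = 'https://sc.canrike.com/Categories/{category_id}/{sort_key}/{page}.html'

# Keywords seen in the URLs: hot, new, vote (ranking), over (completed).
SORT_KEYS = ('hot', 'new', 'vote', 'over')


def build_category_url(category_id, sort_key, page):
    """Return the listing URL for one category, one list type, and one page."""
    if sort_key not in SORT_KEYS:
        raise ValueError('unknown sort key: {!r}'.format(sort_key))
    if page < 1:
        raise ValueError('page numbers start at 1')
    return BASE_URL.format(category_id=category_id, sort_key=sort_key, page=page)


print(build_category_url(2, 'hot', 1))
# https://sc.canrike.com/Categories/2/hot/1.html
```

Changing the first argument switches category, the second switches list type, and the third turns the page.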

3. First, fetch the category Ids

import requests

# Build the request headers
headers = {
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'User-Agent': 'okhttp-okgo/jeasonlzy',
    'Content-Type': 'application/json',
    'Host': 'sc.canrike.com',
    'Connection': 'Keep-Alive',
    'Accept-Encoding': 'gzip',
}

# Fetch the category Ids
def get_Id():
    url = 'https://sc.canrike.com/BookCategory.html'
    # Request the URL; the response body is JSON
    resp = requests.get(url, headers=headers).json()
    # Make sure the response is not empty
    if resp:
        # 'data' in the returned JSON is a list; each item carries
        # the Id we need to request the book lists later
        Ids = resp.get('data') or []
        for temp in Ids:
            Id = temp.get('Id')
            yield Id
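
To see what the generator does without touching the network, the same extraction can be run on a hand-written payload. The sample body below is made up; only the `data` list and the `Id` key come from the response described above, and `iter_category_ids` is a hypothetical name.

```python
import json

# Made-up sample body; only the 'data' list and the 'Id' key come from the article.
sample_body = '{"data": [{"Id": 1, "Name": "A"}, {"Id": 2, "Name": "B"}, {"Name": "no id"}]}'


def iter_category_ids(payload):
    """Yield every Id found in the 'data' list of a decoded response."""
    for item in payload.get('data') or []:
        category_id = item.get('Id')
        # Skip entries without an Id instead of yielding None
        if category_id is not None:
            yield category_id


ids = list(iter_category_ids(json.loads(sample_body)))
print(ids)
```

Skipping entries without an `Id` is a small extra safeguard, so later requests are never built with `None` in the URL.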

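4. Start with the function that fetches the hottest books. The code for the newest, ranking, and completed lists is identical; only the keyword in the URL changes (hot, new, vote, over).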

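import csv
import json
import time

# Fetch the hottest books
def get_hot_info(Id):
    page = 1
    while True:
        # Pause between requests
        time.sleep(1)
        url = 'https://sc.canrike.com/Categories/{0}/hot/{1}.html'.format(Id, page)
        resp = requests.get(url, headers=headers)
        if resp.status_code == 200:
            try:
                # utf-8-sig drops a leading BOM before the JSON is parsed
                data = json.loads(resp.content.decode('utf-8-sig'))
                BookLists = data.get('data').get('BookList')
                if not BookLists:
                    # An empty page means there is nothing more to fetch
                    print('Reached the end')
                    break
                for BookList in BookLists:
                    data_dict = {}
                    data_dict['Id'] = BookList.get('Id')
                    data_dict['Name'] = BookList.get('Name')
                    data_dict['Author'] = BookList.get('Author')
                    data_dict['CName'] = BookList.get('CName')
                    data_dict['Score'] = BookList.get('Score')
                    # Tolerate a missing description, then strip full-width spaces and line breaks
                    data_dict['Desc'] = (BookList.get('Desc') or '').replace('\u3000', '').replace('\r\n', '')
                    # Save each row as soon as it is parsed
                    title = data_dict.keys()
                    with open('快读小说.csv', 'a+', encoding='utf-8-sig', newline='') as f:
                        writer = csv.DictWriter(f, title)
                        writer.writerow(data_dict)
                    print(data_dict)
                page += 1
            except Exception as e:
                # Skip a page that cannot be parsed and move on to the next one
                print('Skipping page {0}: {1}'.format(page, e))
                page += 1
                continue
        else:
            # Any status other than 200 is treated as the end of the list
            print('Reached the end')
            break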
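
The parsing step inside the loop can also be tried on its own. The sketch below assumes the response body starts with a UTF-8 BOM, which the `utf-8-sig` decoding above suggests; the sample payload is made up and `parse_book_page` is a hypothetical name, not part of the original script.

```python
import codecs
import json

FIELDS = ('Id', 'Name', 'Author', 'CName', 'Score', 'Desc')


def parse_book_page(raw_bytes):
    """Decode one listing response and return a list of cleaned row dicts."""
    # utf-8-sig removes a leading BOM if present and is harmless if it is absent
    payload = json.loads(raw_bytes.decode('utf-8-sig'))
    books = (payload.get('data') or {}).get('BookList') or []
    rows = []
    for book in books:
        row = {field: book.get(field) for field in FIELDS}
        # Strip full-width spaces and Windows line breaks from the description
        row['Desc'] = (row['Desc'] or '').replace('\u3000', '').replace('\r\n', '')
        rows.append(row)
    return rows


# Made-up response body with a leading BOM, shaped like the one in the article
sample = codecs.BOM_UTF8 + json.dumps({
    'data': {'BookList': [{
        'Id': 7, 'Name': 'Demo', 'Author': 'Anon', 'CName': 'Fantasy',
        'Score': 8.5, 'Desc': '\u3000\u3000Line one\r\nline two',
    }]}
}).encode('utf-8')

rows = parse_book_page(sample)
print(rows[0]['Desc'])
```

Keeping the parsing in a pure function like this makes it possible to check the cleaning rules without sending any requests.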

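5. The function in the previous step saves rows as it goes, so write the header row in main() first; without a header you cannot tell which column of the CSV file holds what.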

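def main():
    # Write the header row once up front; the crawl functions only append data rows.
    # Opening with 'w' starts a fresh file, so rerunning the script does not add a second header.
    title = ['Id', 'Name', 'Author', 'CName', 'Score', 'Desc']
    with open('快读小说.csv', 'w', encoding='utf-8-sig', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(title)
    print('Header written, starting to crawl')
    for Id in get_Id():
        get_hot_info(Id)
        get_new_info(Id)
        get_vote_info(Id)
        get_over_info(Id)
    print('Crawl finished')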
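
One way to keep appending across runs without ever repeating the header row is to write it only when the file is new or empty. This is a minimal sketch rather than the script's own approach: `append_rows` is a hypothetical helper, and the demo writes to a temporary file instead of 快读小说.csv.

```python
import csv
import os
import tempfile

FIELDS = ['Id', 'Name', 'Author', 'CName', 'Score', 'Desc']


def append_rows(path, rows):
    """Append rows to a CSV file, writing the header only if the file is new or empty."""
    need_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, 'a', encoding='utf-8-sig', newline='') as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if need_header:
            writer.writeheader()
        writer.writerows(rows)


# Demo: two separate "runs" against the same temporary file
demo_path = os.path.join(tempfile.mkdtemp(), 'demo.csv')
row = {'Id': 1, 'Name': 'Demo', 'Author': 'Anon', 'CName': 'Fantasy', 'Score': 9, 'Desc': 'text'}
append_rows(demo_path, [row])
append_rows(demo_path, [row])

with open(demo_path, encoding='utf-8-sig', newline='') as f:
    lines = list(csv.reader(f))
print(len(lines), lines[0])
```

After both calls the file holds one header row followed by two data rows.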

6. The full code

import requests
import json
import csv
import time

# Build the request headers
headers = {
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'User-Agent': 'okhttp-okgo/jeasonlzy',
    'Content-Type': 'application/json',
    'Host': 'sc.canrike.com',
    'Connection': 'Keep-Alive',
    'Accept-Encoding': 'gzip',
}

# Fetch the category Ids
def get_Id():
    url = 'https://sc.canrike.com/BookCategory.html'
    # Request the URL; the response body is JSON
    resp = requests.get(url, headers=headers).json()
    # Make sure the response is not empty
    if resp:
        # 'data' in the returned JSON is a list; each item carries
        # the Id we need to request the book lists later
        Ids = resp.get('data') or []
        for temp in Ids:
            Id = temp.get('Id')
            yield Id

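# Fetch the hottest books
def get_hot_info(Id):
    page = 1
    while True:
        # Pause between requests
        time.sleep(1)
        url = 'https://sc.canrike.com/Categories/{0}/hot/{1}.html'.format(Id, page)
        resp = requests.get(url, headers=headers)
        if resp.status_code == 200:
            try:
                # utf-8-sig drops a leading BOM before the JSON is parsed
                data = json.loads(resp.content.decode('utf-8-sig'))
                BookLists = data.get('data').get('BookList')
                if not BookLists:
                    # An empty page means there is nothing more to fetch
                    print('Reached the end')
                    break
                for BookList in BookLists:
                    data_dict = {}
                    data_dict['Id'] = BookList.get('Id')
                    data_dict['Name'] = BookList.get('Name')
                    data_dict['Author'] = BookList.get('Author')
                    data_dict['CName'] = BookList.get('CName')
                    data_dict['Score'] = BookList.get('Score')
                    # Tolerate a missing description, then strip full-width spaces and line breaks
                    data_dict['Desc'] = (BookList.get('Desc') or '').replace('\u3000', '').replace('\r\n', '')
                    # Save each row as soon as it is parsed
                    title = data_dict.keys()
                    with open('快读小说.csv', 'a+', encoding='utf-8-sig', newline='') as f:
                        writer = csv.DictWriter(f, title)
                        writer.writerow(data_dict)
                    print(data_dict)
                page += 1
            except Exception as e:
                # Skip a page that cannot be parsed and move on to the next one
                print('Skipping page {0}: {1}'.format(page, e))
                page += 1
                continue
        else:
            # Any status other than 200 is treated as the end of the list
            print('Reached the end')
            break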

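# Fetch the newest books
def get_new_info(Id):
    page = 1
    while True:
        # Pause between requests
        time.sleep(1)
        url = 'https://sc.canrike.com/Categories/{0}/new/{1}.html'.format(Id, page)
        resp = requests.get(url, headers=headers)
        if resp.status_code == 200:
            try:
                # utf-8-sig drops a leading BOM before the JSON is parsed
                data = json.loads(resp.content.decode('utf-8-sig'))
                BookLists = data.get('data').get('BookList')
                if not BookLists:
                    # An empty page means there is nothing more to fetch
                    print('Reached the end')
                    break
                for BookList in BookLists:
                    data_dict = {}
                    data_dict['Id'] = BookList.get('Id')
                    data_dict['Name'] = BookList.get('Name')
                    data_dict['Author'] = BookList.get('Author')
                    data_dict['CName'] = BookList.get('CName')
                    data_dict['Score'] = BookList.get('Score')
                    # Tolerate a missing description, then strip full-width spaces and line breaks
                    data_dict['Desc'] = (BookList.get('Desc') or '').replace('\u3000', '').replace('\r\n', '')
                    # Save each row as soon as it is parsed
                    title = data_dict.keys()
                    with open('快读小说.csv', 'a+', encoding='utf-8-sig', newline='') as f:
                        writer = csv.DictWriter(f, title)
                        writer.writerow(data_dict)
                    print(data_dict)
                page += 1
            except Exception as e:
                # Skip a page that cannot be parsed and move on to the next one
                print('Skipping page {0}: {1}'.format(page, e))
                page += 1
                continue
        else:
            # Any status other than 200 is treated as the end of the list
            print('Reached the end')
            break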

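# Fetch the books on the ranking list
def get_vote_info(Id):
    page = 1
    while True:
        # Pause between requests
        time.sleep(1)
        url = 'https://sc.canrike.com/Categories/{0}/vote/{1}.html'.format(Id, page)
        resp = requests.get(url, headers=headers)
        if resp.status_code == 200:
            try:
                # utf-8-sig drops a leading BOM before the JSON is parsed
                data = json.loads(resp.content.decode('utf-8-sig'))
                BookLists = data.get('data').get('BookList')
                if not BookLists:
                    # An empty page means there is nothing more to fetch
                    print('Reached the end')
                    break
                for BookList in BookLists:
                    data_dict = {}
                    data_dict['Id'] = BookList.get('Id')
                    data_dict['Name'] = BookList.get('Name')
                    data_dict['Author'] = BookList.get('Author')
                    data_dict['CName'] = BookList.get('CName')
                    data_dict['Score'] = BookList.get('Score')
                    # Tolerate a missing description, then strip full-width spaces and line breaks
                    data_dict['Desc'] = (BookList.get('Desc') or '').replace('\u3000', '').replace('\r\n', '')
                    # Save each row as soon as it is parsed
                    title = data_dict.keys()
                    with open('快读小说.csv', 'a+', encoding='utf-8-sig', newline='') as f:
                        writer = csv.DictWriter(f, title)
                        writer.writerow(data_dict)
                    print(data_dict)
                page += 1
            except Exception as e:
                # Skip a page that cannot be parsed and move on to the next one
                print('Skipping page {0}: {1}'.format(page, e))
                page += 1
                continue
        else:
            # Any status other than 200 is treated as the end of the list
            print('Reached the end')
            break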

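# Fetch the completed books
def get_over_info(Id):
    page = 1
    while True:
        # Pause between requests
        time.sleep(1)
        url = 'https://sc.canrike.com/Categories/{0}/over/{1}.html'.format(Id, page)
        resp = requests.get(url, headers=headers)
        if resp.status_code == 200:
            try:
                # utf-8-sig drops a leading BOM before the JSON is parsed
                data = json.loads(resp.content.decode('utf-8-sig'))
                BookLists = data.get('data').get('BookList')
                if not BookLists:
                    # An empty page means there is nothing more to fetch
                    print('Reached the end')
                    break
                for BookList in BookLists:
                    data_dict = {}
                    data_dict['Id'] = BookList.get('Id')
                    data_dict['Name'] = BookList.get('Name')
                    data_dict['Author'] = BookList.get('Author')
                    data_dict['CName'] = BookList.get('CName')
                    data_dict['Score'] = BookList.get('Score')
                    # Tolerate a missing description, then strip full-width spaces and line breaks
                    data_dict['Desc'] = (BookList.get('Desc') or '').replace('\u3000', '').replace('\r\n', '')
                    # Save each row as soon as it is parsed
                    title = data_dict.keys()
                    with open('快读小说.csv', 'a+', encoding='utf-8-sig', newline='') as f:
                        writer = csv.DictWriter(f, title)
                        writer.writerow(data_dict)
                    print(data_dict)
                page += 1
            except Exception as e:
                # Skip a page that cannot be parsed and move on to the next one
                print('Skipping page {0}: {1}'.format(page, e))
                page += 1
                continue
        else:
            # Any status other than 200 is treated as the end of the list
            print('Reached the end')
            break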

def main():
    # Write the header row once up front; the crawl functions only append data rows.
    # Opening with 'w' starts a fresh file, so rerunning the script does not add a second header.
    title = ['Id', 'Name', 'Author', 'CName', 'Score', 'Desc']
    with open('快读小说.csv', 'w', encoding='utf-8-sig', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(title)
    print('Header written, starting to crawl')
    for Id in get_Id():
        get_hot_info(Id)
        get_new_info(Id)
        get_vote_info(Id)
        get_over_info(Id)
    print('Crawl finished')

if __name__ == '__main__':
    main()
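
Since the four get_*_info functions differ only in the URL keyword, they could be collapsed into one generator that takes the keyword as a parameter. The sketch below is one possible shape, not the script's own code. `iter_books`, `fetch_page`, and `max_pages` are hypothetical names, and the fetcher is a stand-in so the example runs without network access.

```python
URL_TEMPLATE = 'https://sc.canrike.com/Categories/{0}/{1}/{2}.html'
SORT_KEYS = ('hot', 'new', 'vote', 'over')


def iter_books(category_id, sort_key, fetch_page, max_pages=1000):
    """Yield book dicts page by page until a page is missing or empty.

    fetch_page(url) is a hypothetical callable that returns the BookList
    for that URL, or None when the page does not exist.
    """
    for page in range(1, max_pages + 1):
        url = URL_TEMPLATE.format(category_id, sort_key, page)
        books = fetch_page(url)
        if not books:
            break
        for book in books:
            yield book


# Stand-in fetcher with made-up data for an offline demo
def fake_fetch(url):
    pages = {
        URL_TEMPLATE.format(1, 'hot', 1): [{'Id': 10}, {'Id': 11}],
        URL_TEMPLATE.format(1, 'hot', 2): [{'Id': 12}],
    }
    return pages.get(url)


hot_ids = [book['Id'] for book in iter_books(1, 'hot', fake_fetch)]
print(hot_ids)
```

In a real run, `fetch_page` would wrap `requests.get` with the headers above, the one-second pause, and the `utf-8-sig` decoding, and the caller would loop over `SORT_KEYS` for each category Id.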