Predicting the 2022 World Cup Round of 16 with Python

Contents:

- Why do this?

- How to do it?

Why do this?

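A quick word on why I did this. Lately I have been studying face-recognition networks such as FaceNet implemented in TensorFlow. On top of that, yesterday (December 3, 2022) was the first match day of the 2022 World Cup round of 16. So I searched several platforms to see whether anyone else had made predictions like this, and found a few bloggers who had done related analyses.

But their work is somewhat dated. It was done before the group stage, so the results are not quite what I was looking for.

So I analyzed their projects and made predictions from the historical football match data available on Kaggle.

Here are the reference links first. Thanks to these experts for sharing their work, and I hope everyone learns a lot from it~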

- https://www.kaggle.com/code/sslp23/predicting-fifa-2022-world-cup-with-ml/notebook#Conclusion

- https://www.bilibili.com/video/BV1324y117jq/?spm_id_from=333.337.search-card.all.click

- https://www.bilibili.com/read/cv19949591

How to do it?

The available datasets are as follows:

The code is as follows:

- Imports:

import numpy as np

import pandas as pd

from operator import itemgetter

from save_res import save_res, save_res_draw

import time

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier

from sklearn.model_selection import train_test_split, GridSearchCV

- Feature processing:

df = pd.read_csv("./kaggle/results.csv")

df["date"] = pd.to_datetime(df["date"])

df.dropna(inplace=True)

df = df[(df["date"] >= "2018-8-1")].reset_index(drop=True)

rank = pd.read_csv("./kaggle/fifa_ranking-2022-10-06.csv")

rank["rank_date"] = pd.to_datetime(rank["rank_date"])

rank = rank[(rank["rank_date"] >= "2018-8-1")].reset_index(drop=True)

rank["country_full"] = rank["country_full"].str.replace("IR Iran", "Iran").str.replace("Korea Republic", "South Korea").str.replace("USA", "United States")

rank = rank.set_index(['rank_date']).groupby(['country_full'], group_keys=False).resample('D').first().ffill().reset_index()

df_wc_ranked = df.merge(rank[["country_full", "total_points", "previous_points", "rank", "rank_change", "rank_date"]], left_on=["date", "home_team"], right_on=["rank_date", "country_full"]).drop(["rank_date", "country_full"], axis=1)

df_wc_ranked = df_wc_ranked.merge(rank[["country_full", "total_points", "previous_points", "rank", "rank_change", "rank_date"]], left_on=["date", "away_team"], right_on=["rank_date", "country_full"], suffixes=("_home", "_away")).drop(["rank_date", "country_full"], axis=1)

df = df_wc_ranked
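To see why the ranking table is resampled to daily frequency before the merge, here is a minimal sketch on made-up data (the team name, dates and ranks are hypothetical). Rankings are published only on certain dates, so an exact-date merge against match dates would match almost nothing unless the gaps are forward-filled first. The sketch loops over teams instead of chaining `groupby(...).resample(...)`, purely to keep the steps visible.

```python
import pandas as pd

# Hypothetical ranking snapshots: one team, published on two dates only.
rank_demo = pd.DataFrame({
    "rank_date": pd.to_datetime(["2022-01-01", "2022-01-05"]),
    "country_full": ["Teamland", "Teamland"],
    "rank": [10, 8],
})

# Expand each team's snapshots to one row per day, carrying the last
# published value forward until the next snapshot.
daily_parts = []
for country, grp in rank_demo.groupby("country_full"):
    daily = grp.set_index("rank_date")[["rank"]].resample("D").first().ffill()
    daily["country_full"] = country
    daily_parts.append(daily.reset_index())
rank_daily = pd.concat(daily_parts, ignore_index=True)

# A hypothetical match played between the two snapshot dates.
matches_demo = pd.DataFrame({
    "date": pd.to_datetime(["2022-01-03"]),
    "home_team": ["Teamland"],
})

# The exact-date merge now finds the forward-filled rank for that day.
merged = matches_demo.merge(
    rank_daily,
    left_on=["date", "home_team"],
    right_on=["rank_date", "country_full"],
)
print(merged[["date", "home_team", "rank"]])
```

Without the daily expansion, the same merge would return an empty frame, because no ranking row carries the date 2022-01-03.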

# print(df[(df_wc_ranked.home_team == "Brazil") | (df.away_team == "Brazil")].tail(10))

def result_finder(home, away):
    # Returns (result, home points, away points); result: 0 = home win, 1 = away win, 2 = draw
    if home > away:
        return pd.Series([0, 3, 0])
    if home < away:
        return pd.Series([1, 0, 3])
    else:
        return pd.Series([2, 1, 1])

results = df.apply(lambda x: result_finder(x["home_score"], x["away_score"]), axis=1)

df[["result", "home_team_points", "away_team_points"]] = results
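The row-wise `apply` above can be slow on large tables. As an optional alternative, the following sketch computes the same three columns with `numpy.select`. The scores are made up, and the encoding (0 = home win, 1 = away win, 2 = draw) is taken from `result_finder`.

```python
import numpy as np
import pandas as pd

# Made-up scores: a home win, an away win and a draw.
demo = pd.DataFrame({"home_score": [2, 0, 1], "away_score": [1, 3, 1]})

home_win = demo["home_score"] > demo["away_score"]
away_win = demo["home_score"] < demo["away_score"]

# Same encoding as result_finder: 0 = home win, 1 = away win, 2 = draw.
demo["result"] = np.select([home_win, away_win], [0, 1], default=2)
demo["home_team_points"] = np.select([home_win, away_win], [3, 0], default=1)
demo["away_team_points"] = np.select([home_win, away_win], [0, 3], default=1)
print(demo)
```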

plt.figure(figsize=(15, 10))

sns.heatmap(df[["total_points_home", "rank_home", "total_points_away", "rank_away"]].corr())

plt.show()

df["rank_dif"] = df["rank_home"] - df["rank_away"]

df["sg"] = df["home_score"] - df["away_score"]

df["points_home_by_rank"] = df["home_team_points"]/df["rank_away"]

df["points_away_by_rank"] = df["away_team_points"]/df["rank_home"]

home_team = df[["date", "home_team", "home_score", "away_score", "rank_home", "rank_away","rank_change_home", "total_points_home", "result", "rank_dif", "points_home_by_rank", "home_team_points"]]

away_team = df[["date", "away_team", "away_score", "home_score", "rank_away", "rank_home","rank_change_away", "total_points_away", "result", "rank_dif", "points_away_by_rank", "away_team_points"]]

home_team.columns = [h.replace("home_", "").replace("_home", "").replace("away_", "suf_").replace("_away", "_suf") for h in home_team.columns]

away_team.columns = [a.replace("away_", "").replace("_away", "").replace("home_", "suf_").replace("_home", "_suf") for a in away_team.columns]

team_stats = pd.concat([home_team, away_team])#.sort_values("date")

team_stats_raw = team_stats.copy()

stats_val = []

for index, row in team_stats.iterrows():
    team = row["team"]
    date = row["date"]
    # All earlier games of this team, most recent first
    past_games = team_stats.loc[(team_stats["team"] == team) & (team_stats["date"] < date)].sort_values(by=['date'], ascending=False)
    last5 = past_games.head(5)

    goals = past_games["score"].mean()
    goals_l5 = last5["score"].mean()

    goals_suf = past_games["suf_score"].mean()
    goals_suf_l5 = last5["suf_score"].mean()

    rank = past_games["rank_suf"].mean()
    rank_l5 = last5["rank_suf"].mean()

    if len(last5) > 0:
        points = past_games["total_points"].values[0] - past_games["total_points"].values[-1]  # ranking points gained
        points_l5 = last5["total_points"].values[0] - last5["total_points"].values[-1]
    else:
        points = 0
        points_l5 = 0

    gp = past_games["team_points"].mean()
    gp_l5 = last5["team_points"].mean()

    gp_rank = past_games["points_by_rank"].mean()
    gp_rank_l5 = last5["points_by_rank"].mean()

    stats_val.append([goals, goals_l5, goals_suf, goals_suf_l5, rank, rank_l5, points, points_l5, gp, gp_l5, gp_rank, gp_rank_l5])

stats_cols = ["goals_mean", "goals_mean_l5", "goals_suf_mean", "goals_suf_mean_l5", "rank_mean", "rank_mean_l5", "points_mean", "points_mean_l5", "game_points_mean", "game_points_mean_l5", "game_points_rank_mean", "game_points_rank_mean_l5"]

stats_df = pd.DataFrame(stats_val, columns=stats_cols)

full_df = pd.concat([team_stats.reset_index(drop=True), stats_df], axis=1, ignore_index=False)

home_team_stats = full_df.iloc[:int(full_df.shape[0]/2),:]

away_team_stats = full_df.iloc[int(full_df.shape[0]/2):,:]

home_team_stats = home_team_stats[home_team_stats.columns[-12:]]

away_team_stats = away_team_stats[away_team_stats.columns[-12:]]

home_team_stats.columns = ['home_'+str(col) for col in home_team_stats.columns]

away_team_stats.columns = ['away_'+str(col) for col in away_team_stats.columns]

match_stats = pd.concat([home_team_stats, away_team_stats.reset_index(drop=True)], axis=1, ignore_index=False)

full_df = pd.concat([df, match_stats.reset_index(drop=True)], axis=1, ignore_index=False)
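The `iterrows` loop above filters each team's full history again for every row, which gets slow as the table grows. A possible faster formulation, shown here only as a sketch on made-up data, uses `groupby` with `shift` so that each row sees only earlier games. It assumes each team plays at most one game per date. The column names mirror `team_stats`, but the values are hypothetical.

```python
import pandas as pd

# Made-up long-format table: one row per team per game.
games = pd.DataFrame({
    "team": ["A", "A", "A", "B", "B"],
    "date": pd.to_datetime(
        ["2022-01-01", "2022-02-01", "2022-03-01", "2022-01-01", "2022-02-01"]
    ),
    "score": [1, 3, 2, 0, 4],
}).sort_values(["team", "date"]).reset_index(drop=True)

by_team = games.groupby("team")["score"]

# shift() drops the current game, so only strictly earlier games count.
games["goals_mean"] = by_team.transform(lambda s: s.shift().expanding().mean())
games["goals_mean_l5"] = by_team.transform(
    lambda s: s.shift().rolling(5, min_periods=1).mean()
)
print(games)
```

As in the loop, a team's first game has no history and gets NaN, which the later `dropna()` removes.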

def find_friendly(x):
    if x == "Friendly":
        return 1
    else:
        return 0

full_df["is_friendly"] = full_df["tournament"].apply(lambda x: find_friendly(x))

full_df = pd.get_dummies(full_df, columns=["is_friendly"])

base_df = full_df[["date", "home_team", "away_team", "rank_home", "rank_away","home_score", "away_score","result", "rank_dif", "rank_change_home", "rank_change_away", 'home_goals_mean',

'home_goals_mean_l5', 'home_goals_suf_mean', 'home_goals_suf_mean_l5',

'home_rank_mean', 'home_rank_mean_l5', 'home_points_mean',

'home_points_mean_l5', 'away_goals_mean', 'away_goals_mean_l5',

'away_goals_suf_mean', 'away_goals_suf_mean_l5', 'away_rank_mean',

'away_rank_mean_l5', 'away_points_mean', 'away_points_mean_l5','home_game_points_mean', 'home_game_points_mean_l5',

'home_game_points_rank_mean', 'home_game_points_rank_mean_l5','away_game_points_mean',

'away_game_points_mean_l5', 'away_game_points_rank_mean',

'away_game_points_rank_mean_l5',

'is_friendly_0', 'is_friendly_1']]

base_df_no_fg = base_df.dropna()

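df = base_df_no_fg.copy()  # copy so the new column is not written to a slice

def no_draw(x):
    # Merge draws (2) into class 1, so the target is 0 = home win, 1 = no home win
    if x == 2:
        return 1
    else:
        return x

df["target"] = df["result"].apply(lambda x: no_draw(x))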

def create_db(df):
    columns = ["home_team", "away_team", "target", "rank_dif", "home_goals_mean", "home_rank_mean", "away_goals_mean", "away_rank_mean", "home_rank_mean_l5", "away_rank_mean_l5", "home_goals_suf_mean", "away_goals_suf_mean", "home_goals_mean_l5", "away_goals_mean_l5", "home_goals_suf_mean_l5", "away_goals_suf_mean_l5", "home_game_points_rank_mean", "home_game_points_rank_mean_l5", "away_game_points_rank_mean", "away_game_points_rank_mean_l5","is_friendly_0", "is_friendly_1"]

    base = df.loc[:, columns]
    base.loc[:, "goals_dif"] = base["home_goals_mean"] - base["away_goals_mean"]
    base.loc[:, "goals_dif_l5"] = base["home_goals_mean_l5"] - base["away_goals_mean_l5"]
    base.loc[:, "goals_suf_dif"] = base["home_goals_suf_mean"] - base["away_goals_suf_mean"]
    base.loc[:, "goals_suf_dif_l5"] = base["home_goals_suf_mean_l5"] - base["away_goals_suf_mean_l5"]
    base.loc[:, "goals_per_ranking_dif"] = (base["home_goals_mean"] / base["home_rank_mean"]) - (base["away_goals_mean"] / base["away_rank_mean"])
    base.loc[:, "dif_rank_agst"] = base["home_rank_mean"] - base["away_rank_mean"]
    base.loc[:, "dif_rank_agst_l5"] = base["home_rank_mean_l5"] - base["away_rank_mean_l5"]
    base.loc[:, "dif_points_rank"] = base["home_game_points_rank_mean"] - base["away_game_points_rank_mean"]
    base.loc[:, "dif_points_rank_l5"] = base["home_game_points_rank_mean_l5"] - base["away_game_points_rank_mean_l5"]

    model_df = base[["home_team", "away_team", "target", "rank_dif", "goals_dif", "goals_dif_l5", "goals_suf_dif", "goals_suf_dif_l5", "goals_per_ranking_dif", "dif_rank_agst", "dif_rank_agst_l5", "dif_points_rank", "dif_points_rank_l5", "is_friendly_0", "is_friendly_1"]]
    return model_df

model_db = create_db(df)

X = model_db.iloc[:, 3:]

y = model_db[["target"]]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size= 0.2, random_state=1)

gb = GradientBoostingClassifier(random_state=5)

params = {"learning_rate": [0.01, 0.1, 0.5],

"min_samples_split": [5, 10],

"min_samples_leaf": [3, 5],

"max_depth":[3,5,10],

"max_features":["sqrt"],

"n_estimators":[100, 200]

}

gb_cv = GridSearchCV(gb, params, cv = 3, n_jobs = -1, verbose = False)

gb_cv.fit(X_train.values, np.ravel(y_train))

gb = gb_cv.best_estimator_

params_rf = {"max_depth": [20],

"min_samples_split": [10],

"max_leaf_nodes": [175],

"min_samples_leaf": [5],

"n_estimators": [250],

"max_features": ["sqrt"],

}

rf = RandomForestClassifier(random_state=1)

rf_cv = GridSearchCV(rf, params_rf, cv = 3, n_jobs = -1, verbose = False)

rf_cv.fit(X_train.values, np.ravel(y_train))

rf = rf_cv.best_estimator_
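The listing does not show how the tuned models are scored. One plausible check, sketched here on synthetic data from `make_classification` rather than on the real match table, is to compute ROC AUC on the held-out split. The tiny grid and the `_demo` variable names are illustrative assumptions, not the author's settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the real feature matrix and binary target.
X_demo, y_demo = make_classification(n_samples=300, n_features=12, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=1
)

# Deliberately tiny grid so the sketch runs in a few seconds.
grid = GridSearchCV(
    GradientBoostingClassifier(random_state=5),
    {"learning_rate": [0.1], "max_depth": [3], "n_estimators": [50]},
    cv=3,
)
grid.fit(X_tr, y_tr)

# Column 1 of predict_proba is the probability of class 1.
proba = grid.best_estimator_.predict_proba(X_te)[:, 1]
auc = roc_auc_score(y_te, proba)
print(f"test ROC AUC: {auc:.3f}")
```

On the real data the same two lines would apply to `gb` and `rf` with `X_test.values` and `y_test`, which makes it possible to compare the two models before choosing one for prediction.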

- Preparing to predict match outcomes:

with open("country_name.txt", "r", encoding="utf-8") as f:
    info = f.readlines()

info = list(map(lambda x:x.strip(), info))
English_name = info[:32]
Chinese_name = info[32:]

# Map each English team name to its Chinese name
country_name = {}
for each in zip(English_name, Chinese_name):
    country_name[each[0]] = each[1]
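The loop above can also be written with `dict(zip(...))`. The sketch below uses an in-memory list in place of `country_name.txt`, whose layout (all English names first, then the Chinese names in the same order) is inferred from the slicing. Only three hypothetical entries are shown.

```python
# Stand-in for the stripped lines of country_name.txt:
# English names first, then Chinese names in the same order.
info_demo = ["Netherlands", "United States", "Argentina", "荷兰", "美国", "阿根廷"]

half = len(info_demo) // 2
english_names = info_demo[:half]
chinese_names = info_demo[half:]

# Pair names by position to build the English -> Chinese lookup.
country_name_demo = dict(zip(english_names, chinese_names))
print(country_name_demo["Netherlands"])
```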

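- The referenced project predicted the group stage, so it defines the variables below. I am not predicting group-stage results here, so I am just leaving them in place for anyone who wants to study them: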

table = {'A': [['Qatar', 0, []],

['Ecuador', 0, []],

['Senegal', 0, []],

['Netherlands', 0, []]],

'B': [['England', 0, []],

['Iran', 0, []],

['United States', 0, []],

['Wales', 0, []]],

'C': [['Argentina', 0, []],

['Saudi Arabia', 0, []],

['Mexico', 0, []],

['Poland', 0, []]],

'D': [['France', 0, []],

['Australia', 0, []],

['Denmark', 0, []],

['Tunisia', 0, []]],

'E': [['Spain', 0, []],

['Costa Rica', 0, []],

['Germany', 0, []],

['Japan', 0, []]],

'F': [['Belgium', 0, []],

['Canada', 0, []],

['Morocco', 0, []],

['Croatia', 0, []]],

'G': [['Brazil', 0, []],

['Serbia', 0, []],

['Switzerland', 0, []],

['Cameroon', 0, []]],

'H': [['Portugal', 0, []],

['Ghana', 0, []],

['Uruguay', 0, []],

['South Korea', 0, []]]}

groups = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H']

group_count = 7

matches = [('A', 'Qatar', 'Ecuador'),

('A', 'Senegal', 'Netherlands'),

('A', 'Qatar', 'Senegal'),

('A', 'Netherlands', 'Ecuador'),

('A', 'Ecuador', 'Senegal'),

('A', 'Netherlands', 'Qatar'),

('B', 'England', 'Iran'),

('B', 'United States', 'Wales'),

('B', 'Wales', 'Iran'),

('B', 'England', 'United States'),

('B', 'Wales', 'England'),

('B', 'Iran', 'United States'),

('C', 'Argentina', 'Saudi Arabia'),

('C', 'Mexico', 'Poland'),

('C', 'Poland', 'Saudi Arabia'),

('C', 'Argentina', 'Mexico'),

('C', 'Poland', 'Argentina'),

('C', 'Saudi Arabia', 'Mexico'),

('D', 'Denmark', 'Tunisia'),

('D', 'France', 'Australia'),

('D', 'Tunisia', 'Australia'),

('D', 'France', 'Denmark'),

('D', 'Australia', 'Denmark'),

('D', 'Tunisia', 'France'),

('E', 'Germany', 'Japan'),

('E', 'Spain', 'Costa Rica'),

('E', 'Japan', 'Costa Rica'),

('E', 'Spain', 'Germany'),

('E', 'Japan', 'Spain'),

('E', 'Costa Rica', 'Germany'),

('F', 'Morocco', 'Croatia'),

('F', 'Belgium', 'Canada'),

('F', 'Belgium', 'Morocco'),

('F', 'Croatia', 'Canada'),

('F', 'Croatia', 'Belgium'),

('F', 'Canada', 'Morocco'),

('G', 'Switzerland', 'Cameroon'),

('G', 'Brazil', 'Serbia'),

('G', 'Cameroon', 'Serbia'),

('G', 'Brazil', 'Switzerland'),

('G', 'Serbia', 'Switzerland'),

('G', 'Cameroon', 'Brazil'),

('H', 'Uruguay', 'South Korea'),

('H', 'Portugal', 'Ghana'),

('H', 'South Korea', 'Ghana'),

('H', 'Portugal', 'Uruguay'),

('H', 'Ghana', 'Uruguay'),

('H', 'South Korea', 'Portugal')]

- The two functions most critical to the prediction are defined as follows:

def find_stats(team_1):
    # e.g. team_1 = "Qatar"
    past_games = team_stats_raw[(team_stats_raw["team"] == team_1)].sort_values("date")
    last5 = past_games.tail(5)

    team_1_rank = past_games["rank"].values[-1]  # most recent ranking
    team_1_goals = past_games.score.mean()
    team_1_goals_l5 = last5.score.mean()
    team_1_goals_suf = past_games.suf_score.mean()
    team_1_goals_suf_l5 = last5.suf_score.mean()
    team_1_rank_suf = past_games.rank_suf.mean()
    team_1_rank_suf_l5 = last5.rank_suf.mean()
    team_1_gp_rank = past_games.points_by_rank.mean()
    team_1_gp_rank_l5 = last5.points_by_rank.mean()

    return [team_1_rank, team_1_goals, team_1_goals_l5, team_1_goals_suf, team_1_goals_suf_l5, team_1_rank_suf, team_1_rank_suf_l5, team_1_gp_rank, team_1_gp_rank_l5]

def find_features(team_1, team_2):
    rank_dif = team_1[0] - team_2[0]
    goals_dif = team_1[1] - team_2[1]
    goals_dif_l5 = team_1[2] - team_2[2]
    goals_suf_dif = team_1[3] - team_2[3]
    goals_suf_dif_l5 = team_1[4] - team_2[4]
    goals_per_ranking_dif = (team_1[1]/team_1[5]) - (team_2[1]/team_2[5])
    dif_rank_agst = team_1[5] - team_2[5]
    dif_rank_agst_l5 = team_1[6] - team_2[6]
    dif_gp_rank = team_1[7] - team_2[7]
    dif_gp_rank_l5 = team_1[8] - team_2[8]

    # The trailing 1, 0 are is_friendly_0 and is_friendly_1 (not a friendly)
    return [rank_dif, goals_dif, goals_dif_l5, goals_suf_dif, goals_suf_dif_l5, goals_per_ranking_dif, dif_rank_agst, dif_rank_agst_l5, dif_gp_rank, dif_gp_rank_l5, 1, 0]
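To make the two functions concrete, the sketch below builds the ten difference features from two made-up stat vectors laid out like the return value of `find_stats`. `diff_features` is a hypothetical compact helper, not the author's function, and it omits the two friendly flags. It shows that swapping the teams flips the sign of every difference, which is why the prediction step can evaluate both orderings of a match.

```python
import numpy as np

def diff_features(t1, t2):
    """Hypothetical compact version of the ten difference features."""
    t1, t2 = np.asarray(t1, dtype=float), np.asarray(t2, dtype=float)
    return np.array([
        t1[0] - t2[0],                  # rank_dif
        t1[1] - t2[1],                  # goals_dif
        t1[2] - t2[2],                  # goals_dif_l5
        t1[3] - t2[3],                  # goals_suf_dif
        t1[4] - t2[4],                  # goals_suf_dif_l5
        t1[1] / t1[5] - t2[1] / t2[5],  # goals_per_ranking_dif
        t1[5] - t2[5],                  # dif_rank_agst
        t1[6] - t2[6],                  # dif_rank_agst_l5
        t1[7] - t2[7],                  # dif_gp_rank
        t1[8] - t2[8],                  # dif_gp_rank_l5
    ])

# Made-up stat vectors for two teams, in find_stats order.
team_a = [8, 1.9, 2.2, 0.8, 0.6, 40.0, 35.0, 0.10, 0.12]
team_b = [16, 1.5, 1.0, 1.1, 1.4, 50.0, 45.0, 0.06, 0.05]

f_ab = diff_features(team_a, team_b)
f_ba = diff_features(team_b, team_a)
print(np.allclose(f_ab, -f_ba))
```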

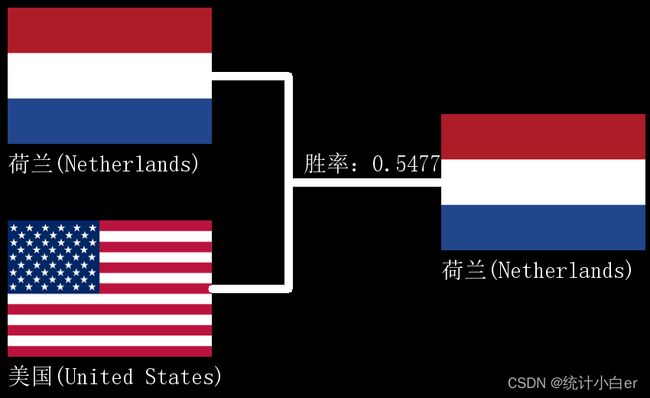

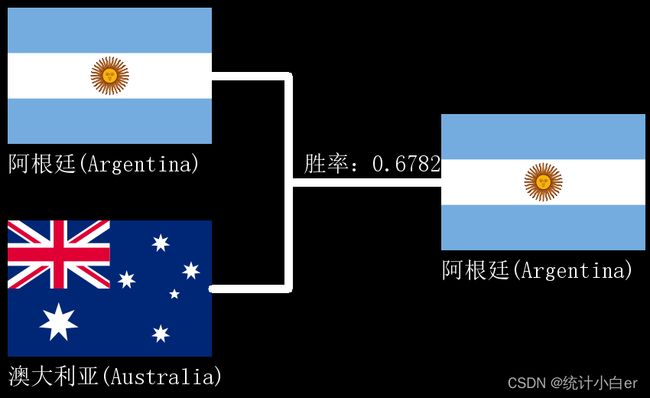

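Last night's two matches were Netherlands vs United States and Argentina vs Australia, so the code is set up as follows:

# This needs a visualization .py file written by another blogger; see the reference links for details

import save_res

teams = ['Netherlands', 'United States']

# To predict the next match (Argentina vs Australia), use this line instead:

# teams = ['Argentina', 'Australia']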

team_1 = find_stats(teams[0])

team_2 = find_stats(teams[1])

features_g1 = find_features(team_1, team_2)

features_g2 = find_features(team_2, team_1)

probs_g1 = gb.predict_proba([features_g1])

probs_g2 = gb.predict_proba([features_g2])

team_1_prob_g1 = probs_g1[0][0]

team_1_prob_g2 = probs_g2[0][1]

team_2_prob_g1 = probs_g1[0][1]

team_2_prob_g2 = probs_g2[0][0]

team_1_prob = (probs_g1[0][0] + probs_g2[0][1])/2

team_2_prob = (probs_g2[0][0] + probs_g1[0][1])/2

# Pick the more likely side and its probability
if team_1_prob >= team_2_prob:
    win_group = teams[0]
    prob = team_1_prob
else:
    win_group = teams[1]
    prob = team_2_prob

prob = round(prob, 5)

save_res.save_res(teams[0], teams[1], win_group, prob, "tmp1.png")
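The averaging step can be isolated into a small function. The sketch below uses made-up probability arrays in place of `gb.predict_proba` output, and `combine_probs` is a hypothetical name. Column 0 is read as "the first-listed team wins" and column 1 as "it does not", following the `target` encoding.

```python
import numpy as np

def combine_probs(probs_g1, probs_g2):
    """Average the two orderings into one probability per team.

    probs_g1: model output with team 1 listed first.
    probs_g2: model output with team 2 listed first.
    """
    team_1_prob = (probs_g1[0][0] + probs_g2[0][1]) / 2
    team_2_prob = (probs_g2[0][0] + probs_g1[0][1]) / 2
    return team_1_prob, team_2_prob

# Made-up outputs; each row sums to 1 like predict_proba.
p_g1 = np.array([[0.6, 0.4]])
p_g2 = np.array([[0.3, 0.7]])

p1, p2 = combine_probs(p_g1, p_g2)
winner = "team 1" if p1 >= p2 else "team 2"
print(winner, round(max(p1, p2), 5))
```

Because each row of the model output sums to 1, the two averaged values also sum to 1, so the larger one can be reported directly as the winning probability.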

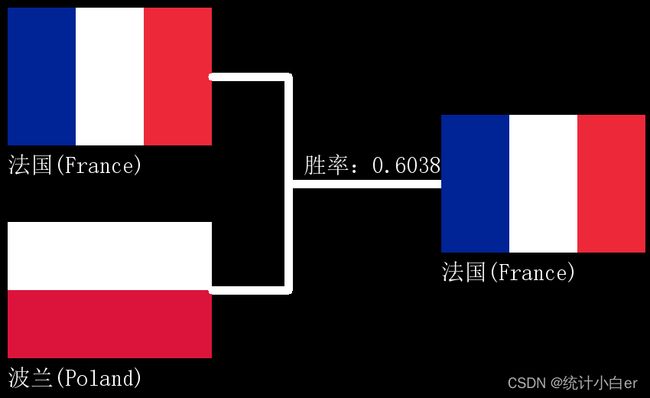

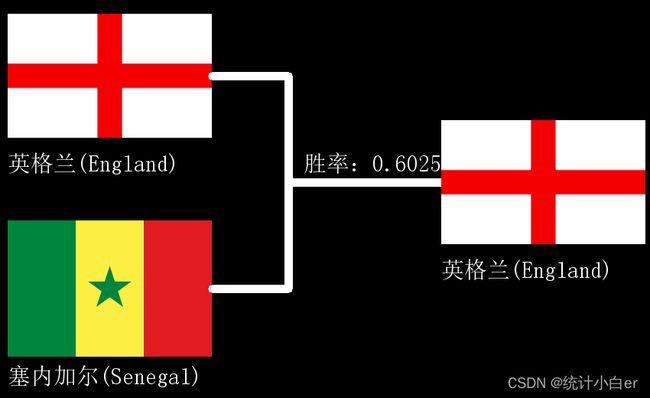

- Results:

Round of 16 - Netherlands vs United States:

Round of 16 - France vs Poland:

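I'll stop here. This is just for fun. The model can be improved: after all, the random forest does not perform very well, and the model ignores all sorts of real-world factors, such as teams going easy on each other, teams playing just for enjoyment, or simply not wanting to win, and so on.

Dear readers, just take it as a bit of fun~

If you want to dig into this yourself, go study the posts by those experts~

This article is for learning and entertainment only~

Here are the reference links again: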

- https://www.kaggle.com/code/sslp23/predicting-fifa-2022-world-cup-with-ml/notebook#Conclusion

- https://www.bilibili.com/video/BV1324y117jq/?spm_id_from=333.337.search-card.all.click

- https://www.bilibili.com/read/cv19949591