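Mu Li's Dive into Deep Learning V2: Model Fine-Tuning and Code Implementation

Fine-Tuning

Because datasets are limited and collecting and labeling data can cost a great deal of time and money, we apply transfer learning to carry the knowledge learned from a source dataset over to a target dataset. For example, although most images in the ImageNet dataset have nothing to do with the images we want to recognize, a model trained on this dataset may extract more general image features that help identify edges, textures, shapes, and object compositions. These features may be just as effective for recognizing the images at hand.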

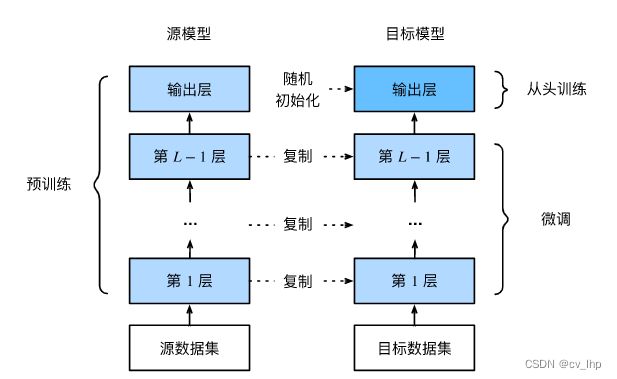

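1. Fine-tuning steps

- Pretrain a neural network model, the source model, on a source dataset (for example, the ImageNet dataset).

- Create a new neural network model, the target model. It copies all model designs and their parameters from the source model except the output layer. We assume these parameters contain the knowledge learned from the source dataset and that this knowledge also applies to the target dataset. We also assume the output layer of the source model is closely tied to the labels of the source dataset, so it is not used in the target model.

- Add an output layer to the target model whose number of outputs equals the number of classes in the target dataset, then randomly initialize the parameters of this layer.

- Train the target model on the target dataset. The output layer is trained from scratch, while the parameters of all other layers are fine-tuned starting from the parameters of the source model.

When the target dataset is much smaller than the source dataset, fine-tuning helps improve the model's generalization ability, as shown in the figure below: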
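The four steps above can be sketched on a toy network. This is only an illustration under stated assumptions: the small `nn.Sequential` model, its layer sizes, and the names `source_model` and `target_model` are hypothetical stand-ins for a real pretrained network, and no actual pretraining or training takes place.

```python
import copy

import torch
from torch import nn

torch.manual_seed(0)

# Step 1: a stand-in "source model"; in practice this would be a network
# pretrained on a large dataset such as ImageNet (sizes here are hypothetical).
source_model = nn.Sequential(
    nn.Linear(8, 16),   # feature layers
    nn.ReLU(),
    nn.Linear(16, 10),  # output layer for 10 source classes
)

# Step 2: the target model copies the design and parameters of the source model.
target_model = copy.deepcopy(source_model)

# Step 3: replace the output layer with a randomly initialized one sized for
# the target dataset (2 classes in this sketch).
num_target_classes = 2
target_model[-1] = nn.Linear(target_model[-1].in_features, num_target_classes)
nn.init.xavier_uniform_(target_model[-1].weight)

# Step 4 would train target_model on the target dataset: the new output layer
# is learned from scratch while the copied layers are only fine-tuned.
features_copied = torch.equal(source_model[0].weight, target_model[0].weight)
output_shape = tuple(target_model(torch.randn(4, 8)).shape)
print(features_copied, output_shape)
```

The copied feature layer starts out identical to the source, and only the output layer has a new shape.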

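2. Hot dog recognition with fine-tuning

We fine-tune a ResNet model, pretrained on the ImageNet dataset, on a small hot dog dataset. This small dataset contains thousands of images with and without hot dogs, and we use the fine-tuned model to recognize whether an image contains a hot dog.

- Getting the dataset

The hot dog dataset contains 1,400 positive-class images of hot dogs and as many negative-class images of other foods. 1,000 images from each of the two classes are used for training, and the rest are used for testing.

After extracting the downloaded dataset we get two folders, hotdog/train and hotdog/test. Each of them has two subfolders, hotdog (with hot dogs) and not-hotdog (without hot dogs), and each subfolder contains the images of the corresponding class.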

import os.path

import d2l.torch

import torch

import torchvision.datasets

from torch import nn

from torch.utils import data

d2l.torch.DATA_HUB['hotdog'] = (d2l.torch.DATA_URL+'hotdog.zip','fba480ffa8aa7e0febbb511d181409f899b9baa5')

data_dir = d2l.torch.download_extract('hotdog')

print(data_dir)

# Create two instances to read all image files in the training and test datasets, respectively.

train_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'))

test_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'))

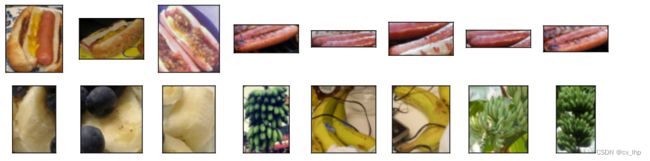

Display the first 8 positive-class images and the last 8 negative-class images (the images vary in size and aspect ratio).

hotdogs = [train_images[i][0] for i in range(8)]

non_hotdogs = [train_images[-i-1][0] for i in range(8)]

d2l.torch.show_images(hotdogs+non_hotdogs,2,8,scale=1.4)
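The first and last samples of `train_images` fall into different classes because `ImageFolder` sorts the class subfolders by name and numbers them from 0, so `hotdog` comes before `not-hotdog`. The sketch below mimics that convention with the standard library only. The temporary directory is a throwaway stand-in for `hotdog/train`, and the code is an imitation of the ordering rule rather than the torchvision implementation.

```python
import os
import tempfile

# Build a throwaway directory tree shaped like hotdog/train (no real images).
root = tempfile.mkdtemp()
for class_name in ("not-hotdog", "hotdog"):
    os.makedirs(os.path.join(root, class_name))

# Mirror the ImageFolder convention: sort the class folders by name and
# number them from 0.
classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
class_to_idx = {name: idx for idx, name in enumerate(classes)}
print(class_to_idx)
```

With this ordering the positive class gets label 0, which is why the earliest samples are hot dogs and the final ones are not.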

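- Data augmentation

During training, we first crop a region of random size and random aspect ratio from the image and then scale that region to a 224×224 input image. During testing, we scale the height and width of the image to 256 pixels and then crop the central 224×224 region as the input. In addition, we standardize each of the three RGB (red, green, and blue) color channels separately: the channel mean is subtracted from every value in that channel, and the result is divided by the channel's standard deviation.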
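The per-channel standardization described above is plain arithmetic, and a single pixel makes it concrete. The following is a minimal sketch in pure Python. The helper `normalize_pixel` is a hypothetical name introduced for illustration, and the pixel values are assumed to be already scaled to the range [0, 1], as `ToTensor` does before `Normalize` is applied.

```python
# ImageNet per-channel statistics quoted in this section (R, G, B).
mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Standardize one RGB pixel whose values are already scaled to [0, 1]."""
    return [(value - m) / s for value, m, s in zip(rgb, mean, std)]


# A pixel equal to the channel means maps to zero in every channel.
print(normalize_pixel(mean))

# A pure white pixel ends up more than two standard deviations above the mean.
white = normalize_pixel([1.0, 1.0, 1.0])
print([round(v, 3) for v in white])
```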

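# The RGB channel means are 0.485, 0.456, 0.406 and the RGB channel standard deviations are 0.229, 0.224, 0.225; both were computed on the ImageNet dataset

## Use the mean and standard deviation computed over the RGB channels of all ImageNet images to standardize each channel of the current dataset's images

normalize = torchvision.transforms.Normalize([0.485, 0.456, 0.406],[0.229, 0.224, 0.225])  # standardize the three RGB channels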

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.RandomResizedCrop(224),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

normalize

])

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(256),

torchvision.transforms.CenterCrop(224),

torchvision.transforms.ToTensor(),

normalize

])

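- Defining and initializing the model

We use ResNet-18 pretrained on the ImageNet dataset as the source model. Here we specify pretrained=True to download the pretrained model parameters automatically. If you are using this model for the first time, an internet connection is required for the download. (In torchvision 0.13 and later, the pretrained argument is deprecated in favor of the weights argument.)

# Use a model pretrained on ImageNet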

pretrain_net = torchvision.models.resnet18(pretrained=True)

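The pretrained source model instance contains a number of feature layers and an output layer fc. This division mainly makes it easy to fine-tune the parameters of every layer except the output layer. The member variable fc of the source model is shown below.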

print(pretrain_net.fc)

'''

Output:

Linear(in_features=512, out_features=1000, bias=True)

'''

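After ResNet's global average pooling layer, the fully connected layer produces the outputs for the 1,000 classes of the ImageNet dataset. We then construct a new neural network as the target model. It is defined in the same way as the pretrained source model, except that the number of outputs in the final layer is set to the number of classes in the target dataset (rather than 1,000).

In the code below, all parameters of the target model finetuning_net except those of the final linear layer are initialized to the parameters of the corresponding layers of the source model. Because these parameters were pretrained on the ImageNet dataset and are already good enough, a small learning rate is usually sufficient to fine-tune them.

The parameters of the final linear layer are randomly initialized and usually need a higher learning rate to be trained from scratch. The learning rate of the final linear layer is typically 10 times that of all preceding layers.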

finetuning_net = torchvision.models.resnet18(pretrained=True)

finetuning_net.fc = nn.Linear(in_features=finetuning_net.fc.in_features,out_features=2)

nn.init.xavier_uniform_(finetuning_net.fc.weight)

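- Fine-tuning the model

def train_fine_tuning(net,learning_rate,batch_size=128,epochs=5,param_group=True):

    train_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'),transform=train_transforms),

                                             batch_size=batch_size,shuffle=True)

    test_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'),transform=test_transforms),

                                            batch_size=batch_size,shuffle=False)

    # reduction='none' returns the per-sample losses, which are then summed into a total loss instead of being averaged.

    # The total loss (and its gradient) therefore scales linearly with the number of samples, so a larger batch_size usually calls for a smaller learning rate.

    # A mean loss is largely independent of the number of samples, so the learning rate needs much less adjustment when batch_size changes.

    # nn.CrossEntropyLoss(reduction='none') differs from the earlier usage:

    # the default is reduction='mean', which averages the losses of all examples in a batch.

    # reduction has three options: 'mean' averages, 'sum' adds up, and 'none' does nothing and returns a tensor of per-sample losses.

    # The training code eventually calls l.sum().backward(), which has the same effect as specifying reduction='sum' from the start.

    # With a summed loss the gradient grows in proportion to batch_size. Here batch_size=128 with lr=5e-5 is roughly equivalent to reduction='mean' with lr=5e-5*128,

    # because averaging divides the gradient by the number of samples and the learning rate must be raised to compensate.

    loss = nn.CrossEntropyLoss(reduction='none')

    devices = d2l.torch.try_all_gpus()

    if param_group:

        # param_group=True: fine-tune with two parameter groups

        # All layers before the last one are fine-tuned with learning_rate; the last layer uses 10 times that learning rate

        param_conv2d = [param for name,param in net.named_parameters() if name not in ['fc.weight','fc.bias']]

        optim = torch.optim.SGD([{'params':param_conv2d},

                                 {'params':net.fc.parameters(),

                                  'lr':learning_rate*10}],

                                lr=learning_rate,weight_decay=0.001)

    else:

        # param_group=False: a single learning rate for all parameters; used here to train the target model from scratch on the current dataset

        optim = torch.optim.SGD(net.parameters(),lr=learning_rate,weight_decay=0.001)

    d2l.torch.train_ch13(net,train_iter,test_iter,loss,optim,epochs,devices)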
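The relationship between a summed loss and a mean loss mentioned in the comments can be checked numerically. This is a minimal sketch on random data, assuming a toy linear classifier in place of ResNet-18. The helper `weight_grad` and all tensor sizes are made up for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, num_features, num_classes = 128, 16, 2
X = torch.randn(batch_size, num_features)
y = torch.randint(0, num_classes, (batch_size,))


def weight_grad(reduce_fn):
    """Gradient of the reduced loss w.r.t. a freshly created, identically seeded layer."""
    torch.manual_seed(1)
    layer = nn.Linear(num_features, num_classes)
    per_sample = nn.CrossEntropyLoss(reduction='none')(layer(X), y)
    reduce_fn(per_sample).backward()
    return layer.weight.grad


grad_sum = weight_grad(torch.sum)    # same as reduction='sum'
grad_mean = weight_grad(torch.mean)  # same as reduction='mean'

# The summed-loss gradient is batch_size times the mean-loss gradient.
ratio_matches = torch.allclose(grad_sum, batch_size * grad_mean, rtol=1e-4, atol=1e-5)
print(ratio_matches)
```

For plain SGD without weight decay, a step with learning rate `lr` on the summed loss therefore moves the weights as far as a step with `lr * batch_size` on the mean loss.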

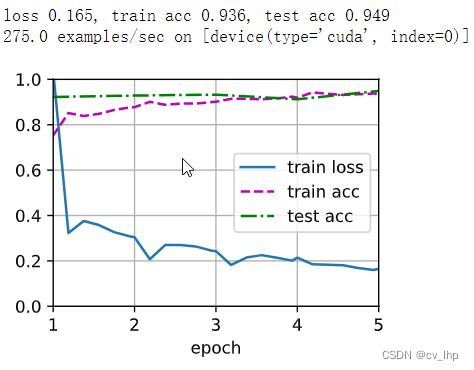

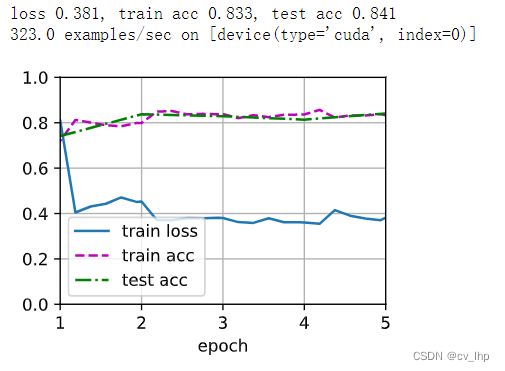

- Results with fine-tuning

# Train the target model starting from the pretrained parameters, using a small learning rate of 5e-5

train_fine_tuning(finetuning_net,learning_rate=5e-5)

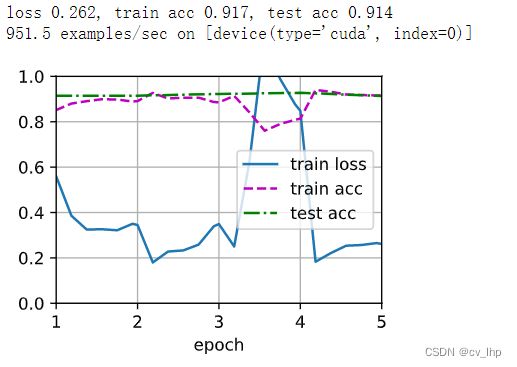

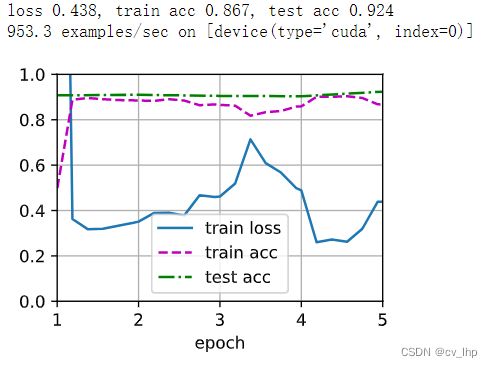

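- Training the model from scratch (for comparison with the fine-tuned model)

# Train a resnet18 model from scratch, with all model parameters initialized to random values. Because the whole model is trained from scratch, a larger learning rate is needed.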

scratch_resnet18 = torchvision.models.resnet18(pretrained=False)

scratch_resnet18.fc = nn.Linear(in_features=scratch_resnet18.fc.in_features,out_features=2)

train_fine_tuning(scratch_resnet18,learning_rate=5e-4,param_group=False)

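The results of training the model from scratch are shown in the figure below:

The two figures above show that the fine-tuned model tends to perform better, because its initial parameter values are more effective.

3. Summary

- Transfer learning "transfers" the knowledge learned from a source dataset to a target dataset, and fine-tuning is a common transfer learning technique.

- The target model copies all model designs and their parameters from the source model except the output layer, and fine-tunes these parameters on the target dataset. The output layer of the target model, in contrast, has to be trained from scratch.

- In general, the fine-tuned parameters use a small learning rate, while the output layer trained from scratch can use a larger one. Fine-tuning usually needs only a few epochs, around 10.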

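4. Complete fine-tuning code (5 epochs, learning rate 5e-5 for the fine-tuned parameters, learning rate 5e-4 for the final output layer, batch_size 128 as set by the function default)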

import os.path

import d2l.torch

import torch

import torchvision.datasets

from torch import nn

from torch.utils import data

d2l.torch.DATA_HUB['hotdog'] = (d2l.torch.DATA_URL+'hotdog.zip','fba480ffa8aa7e0febbb511d181409f899b9baa5')

data_dir = d2l.torch.download_extract('hotdog')

print(data_dir)

train_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'))

test_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'))

hotdogs = [train_images[i][0] for i in range(8)]

non_hotdogs = [train_images[-i-1][0] for i in range(8)]

d2l.torch.show_images(hotdogs+non_hotdogs,2,8,scale=1.4)

# The RGB channel means are 0.485, 0.456, 0.406 and the RGB channel standard deviations are 0.229, 0.224, 0.225; both were computed on the ImageNet dataset

normalize = torchvision.transforms.Normalize([0.485, 0.456, 0.406],[0.229, 0.224, 0.225])  # standardize the three RGB channels

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.RandomResizedCrop(224),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

normalize

])

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(256),

torchvision.transforms.CenterCrop(224),

torchvision.transforms.ToTensor(),

normalize

])

# Use a model pretrained on ImageNet

pretrain_net = torchvision.models.resnet18(pretrained=True)

print(pretrain_net.fc)

finetuning_net = torchvision.models.resnet18(pretrained=True)

finetuning_net.fc = nn.Linear(in_features=finetuning_net.fc.in_features,out_features=2)

nn.init.xavier_uniform_(finetuning_net.fc.weight)

def train_fine_tuning(net,learning_rate,batch_size=128,epochs=5,param_group=True):

    train_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'),transform=train_transforms),

                                             batch_size=batch_size,shuffle=True)

    test_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'),transform=test_transforms),

                                            batch_size=batch_size,shuffle=False)

    # reduction='none' returns the per-sample losses instead of their mean, so the summed loss scales linearly with the number of samples; a mean loss is largely independent of the number of samples

    loss = nn.CrossEntropyLoss(reduction='none')

    devices = d2l.torch.try_all_gpus()

    if param_group:

        # param_group=True: fine-tune with two parameter groups

        # All layers before the last one are fine-tuned with learning_rate; the last layer uses 10 times that learning rate

        param_conv2d = [param for name,param in net.named_parameters() if name not in ['fc.weight','fc.bias']]

        optim = torch.optim.SGD([{'params':param_conv2d},

                                 {'params':net.fc.parameters(),

                                  'lr':learning_rate*10}],

                                lr=learning_rate,weight_decay=0.001)

    else:

        # param_group=False: a single learning rate for all parameters; used here to train the target model from scratch on the current dataset

        optim = torch.optim.SGD(net.parameters(),lr=learning_rate,weight_decay=0.001)

    d2l.torch.train_ch13(net,train_iter,test_iter,loss,optim,epochs,devices)

# Train the target model starting from the pretrained parameters

train_fine_tuning(finetuning_net,learning_rate=5e-5)

# Train a resnet18 model from scratch

# scratch_resnet18 = torchvision.models.resnet18(pretrained=False)

# scratch_resnet18.fc = nn.Linear(in_features=scratch_resnet18.fc.in_features,out_features=2)

# train_fine_tuning(scratch_resnet18,learning_rate=5e-4,param_group=False)

5. Supplement

- Keep the parameters before the output layer of finetuning_net fixed at the values of the source model and do not update them during training. How does the model's accuracy change?

# Approach: set requires_grad to False for the parameters of every layer except the output layer, as in the code below:

for param in finetuning_net.parameters():

    param.requires_grad = False

for param in finetuning_net.fc.parameters():

    param.requires_grad = True

# Key code:

if param_group:

    # param_conv2d = [param for name,param in net.named_parameters() if name not in ['fc.weight','fc.bias']]

    param_conv2d = []

    for name,param in net.named_parameters():

        if name not in ['fc.weight','fc.bias']:

            param.requires_grad = False

            param_conv2d.append(param)

    optim = torch.optim.SGD([{'params':param_conv2d},

                             {'params':net.fc.parameters(),

                              'lr':learning_rate*10}],

                            lr=learning_rate,weight_decay=0.001)
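To see what freezing does in practice, the sketch below takes one optimizer step on a toy network and checks which weights moved. It is only an illustration under stated assumptions: the small `nn.Sequential` model, the random data, and the learning rate are hypothetical, and only the trainable parameters are handed to the optimizer, which is one common variant rather than the exact setup used above.

```python
import copy

import torch
from torch import nn

torch.manual_seed(0)
net = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 2))

# Freeze everything, then re-enable gradients for the last (output) layer.
for param in net.parameters():
    param.requires_grad = False
for param in net[-1].parameters():
    param.requires_grad = True

before = copy.deepcopy(net.state_dict())

# Only hand the trainable parameters to the optimizer.
trainable = [p for p in net.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.1)

X, y = torch.randn(32, 8), torch.randint(0, 2, (32,))
loss = nn.CrossEntropyLoss()(net(X), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()

body_unchanged = torch.equal(before['0.weight'], net[0].weight)
head_changed = not torch.equal(before['2.weight'], net[2].weight)
print(body_unchanged, head_changed)
```

Frozen layers also skip gradient computation, so each training step tends to be cheaper than full fine-tuning.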

# The complete code is as follows:

import os.path

import d2l.torch

import torch

import torchvision.datasets

from torch import nn

from torch.utils import data

d2l.torch.DATA_HUB['hotdog'] = (d2l.torch.DATA_URL+'hotdog.zip','fba480ffa8aa7e0febbb511d181409f899b9baa5')

data_dir = d2l.torch.download_extract('hotdog')

print(data_dir)

train_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'))

test_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'))

hotdogs = [train_images[i][0] for i in range(8)]

non_hotdogs = [train_images[-i-1][0] for i in range(8)]

d2l.torch.show_images(hotdogs+non_hotdogs,2,8,scale=1.4)

# The RGB channel means are 0.485, 0.456, 0.406 and the RGB channel standard deviations are 0.229, 0.224, 0.225; both were computed on the ImageNet dataset

normalize = torchvision.transforms.Normalize([0.485, 0.456, 0.406],[0.229, 0.224, 0.225])  # standardize the three RGB channels

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.RandomResizedCrop(224),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

normalize

])

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(256),

torchvision.transforms.CenterCrop(224),

torchvision.transforms.ToTensor(),

normalize

])

# Use a model pretrained on ImageNet

pretrain_net = torchvision.models.resnet18(pretrained=True)

print(pretrain_net.fc)

finetuning_net = torchvision.models.resnet18(pretrained=True)

finetuning_net.fc = nn.Linear(in_features=finetuning_net.fc.in_features,out_features=2)

nn.init.xavier_uniform_(finetuning_net.fc.weight)

def train_fine_tuning(net,learning_rate,batch_size=128,epochs=5,param_group=True):

    train_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'),transform=train_transforms),

                                             batch_size=batch_size,shuffle=True)

    test_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'),transform=test_transforms),

                                            batch_size=batch_size,shuffle=False)

    loss = nn.CrossEntropyLoss(reduction='none')

    devices = d2l.torch.try_all_gpus()

    if param_group:

        # param_conv2d = [param for name,param in net.named_parameters() if name not in ['fc.weight','fc.bias']]

        # Freeze every parameter except those of the output layer fc

        param_conv2d = []

        for name,param in net.named_parameters():

            if name not in ['fc.weight','fc.bias']:

                param.requires_grad = False

                param_conv2d.append(param)

        optim = torch.optim.SGD([{'params':param_conv2d},

                                 {'params':net.fc.parameters(),

                                  'lr':learning_rate*10}],

                                lr=learning_rate,weight_decay=0.001)

    else:

        optim = torch.optim.SGD(net.parameters(),lr=learning_rate,weight_decay=0.001)

    d2l.torch.train_ch13(net,train_iter,test_iter,loss,optim,epochs,devices)

# Train the target model starting from the pretrained parameters

train_fine_tuning(finetuning_net,learning_rate=5e-5)

# Train a resnet18 model from scratch

# scratch_resnet18 = torchvision.models.resnet18(pretrained=False)

# scratch_resnet18.fc = nn.Linear(in_features=scratch_resnet18.fc.in_features,out_features=2)

# train_fine_tuning(scratch_resnet18,learning_rate=5e-4,param_group=False)

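The training and test results when only the final output layer is updated, with the parameters of all other layers frozen, are shown in the figure below:

- In fact, the ImageNet dataset has a "hotdog" class. The following code retrieves the corresponding weight parameters in the output layer, but how can we make use of them?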

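# Key code

# Get the weight parameters of the final output layer of the pretrained model

weight = pretrain_net.fc.weight

# Split the weight matrix row-wise into chunks of one row each; row 935 (index 934) holds the weights for the hotdog class

hotdogs_w = torch.split(tensor=weight.data,split_size_or_sections=1, dim=0)[934]

print(hotdogs_w)

# Initialize the weights of the first output neuron with the ImageNet-pretrained hotdog-class weights

finetuning_net.fc.weight.data[0]= hotdogs_w.data.reshape(-1)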
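The same idea can be written with `torch.no_grad()` instead of `.data`, shown here on stand-in layers so that no pretrained weights need to be downloaded. This is a minimal sketch under stated assumptions: `source_head` and `target_head` are hypothetical names for the 1000-class and 2-class output layers, and copying the bias entry as well is an extra choice that the code above does not make.

```python
import torch
from torch import nn

torch.manual_seed(0)
source_head = nn.Linear(512, 1000)  # stand-in for the pretrained fc layer
target_head = nn.Linear(512, 2)     # new two-class output layer
hotdog_index = 934                  # class index used in this section

# Copy the weight row (and, as an assumption, the bias entry) without
# recording the assignment in the autograd graph.
with torch.no_grad():
    target_head.weight[0].copy_(source_head.weight[hotdog_index])
    target_head.bias[0].copy_(source_head.bias[hotdog_index])

row_copied = torch.equal(target_head.weight[0], source_head.weight[hotdog_index])
still_trainable = target_head.weight.requires_grad
print(row_copied, still_trainable)
```

Row 0 is the natural target because `ImageFolder` assigns label 0 to the `hotdog` folder, and the copied row remains trainable afterwards.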

# The complete code is as follows:

import os.path

import d2l.torch

import torch

import torchvision.datasets

from torch import nn

from torch.utils import data

d2l.torch.DATA_HUB['hotdog'] = (d2l.torch.DATA_URL+'hotdog.zip','fba480ffa8aa7e0febbb511d181409f899b9baa5')

data_dir = d2l.torch.download_extract('hotdog')

print(data_dir)

train_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'))

test_images = torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'))

hotdogs = [train_images[i][0] for i in range(8)]

non_hotdogs = [train_images[-i-1][0] for i in range(8)]

d2l.torch.show_images(hotdogs+non_hotdogs,2,8,scale=1.4)

# The RGB channel means are 0.485, 0.456, 0.406 and the RGB channel standard deviations are 0.229, 0.224, 0.225; both were computed on the ImageNet dataset

normalize = torchvision.transforms.Normalize([0.485, 0.456, 0.406],[0.229, 0.224, 0.225])  # standardize the three RGB channels

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.RandomResizedCrop(224),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

normalize

])

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(256),

torchvision.transforms.CenterCrop(224),

torchvision.transforms.ToTensor(),

normalize

])

# Use a model pretrained on ImageNet

pretrain_net = torchvision.models.resnet18(pretrained=True)

print(pretrain_net.fc)

finetuning_net = torchvision.models.resnet18(pretrained=True)

weight_hot = finetuning_net.fc.weight

print(weight_hot.shape)

hotdogs_w = torch.split(weight_hot.data,1,dim=0)[934]

print(hotdogs_w.data.grad)

# hotdogs_w.requires_grad = False

finetuning_net.fc = nn.Linear(in_features=finetuning_net.fc.in_features,out_features=2)

nn.init.xavier_uniform_(finetuning_net.fc.weight[1].reshape(1,-1))

# Initialize the weights of the first output neuron with the ImageNet-pretrained hotdog-class weights

finetuning_net.fc.weight.data[0]= hotdogs_w.data.reshape(-1)

print(finetuning_net.fc.weight.data[0].shape)

print(finetuning_net.fc.weight.requires_grad)

def train_fine_tuning(net,learning_rate,batch_size=128,epochs=5,param_group=True):

    train_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'train'),transform=train_transforms),

                                             batch_size=batch_size,shuffle=True)

    test_iter = torch.utils.data.DataLoader(dataset=torchvision.datasets.ImageFolder(os.path.join(data_dir,'test'),transform=test_transforms),

                                            batch_size=batch_size,shuffle=False)

    loss = nn.CrossEntropyLoss(reduction='none')

    devices = d2l.torch.try_all_gpus()

    if param_group:

        # param_conv2d = [param for name,param in net.named_parameters() if name not in ['fc.weight','fc.bias']]

        param_conv2d = []

        param_hotdog = []

        param_nodog = []

        # Freeze every parameter except those of the output layer fc

        for name,param in net.named_parameters():

            if name not in ['fc.weight','fc.bias']:

                param.requires_grad = False

                param_conv2d.append(param)

        optim = torch.optim.SGD([{'params':param_conv2d},

                                 {'params':net.fc.parameters(),

                                  'lr':learning_rate*10}],

                                lr=learning_rate,weight_decay=0.001)

    else:

        optim = torch.optim.SGD(net.parameters(),lr=learning_rate,weight_decay=0.001)

    d2l.torch.train_ch13(net,train_iter,test_iter,loss,optim,epochs,devices)

# Train the target model starting from the pretrained parameters

train_fine_tuning(finetuning_net,learning_rate=5e-5)

# Train a resnet18 model from scratch

# scratch_resnet18 = torchvision.models.resnet18(pretrained=False)

# scratch_resnet18.fc = nn.Linear(in_features=scratch_resnet18.fc.in_features,out_features=2)

# train_fine_tuning(scratch_resnet18,learning_rate=5e-4,param_group=False)

# Inspect the weight parameters of the output layer after training

#finetuning_net.fc.weight[0]

#finetuning_net.fc.weight[1]