Android MTK PDAF Flow

PDAF: Phase Detection Auto Focus

How a PDAF sensor works (SPC structure):

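PDAF compares the two images formed by the left (L) and right (R) PD pixels. The PD algorithm computes the current phase difference and, from that phase difference and the module's PD calibration data, estimates the image distance so that the lens can be moved quickly into focus. The search range for PDAF fast focusing, [infinity, macro], comes mainly from the AF segment burned into the OTP. These values are not actual physical distances to the subject but the corresponding converted DAC values. The PDAF OTP mainly stores two things: SPC (shield pixel calibration), which compensates for the brightness gain lost to shielding, and DCC (defocus conversion coefficient), which converts the phase difference into a lens movement distance. The DCC value is the slope of the line fitted to the relationship between PD diff and DAC (that is, the PDAF linearity slope).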
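
The conversion described above can be sketched as follows. This is a minimal illustration, not MTK's algorithm: it assumes a purely linear model in which the DCC slope is expressed in DAC codes per unit of phase difference, and the function name, parameters, and sign convention are all hypothetical.

```cpp
#include <algorithm>

// Hypothetical helper: turn a phase difference into a target lens DAC code.
// Assumption: defocus in DAC codes = dcc_slope * phase_diff (linear model).
// The sign convention and units depend on the module and are assumed here.
int EstimateTargetDac(int current_dac, double phase_diff, double dcc_slope,
                      int dac_infinity, int dac_macro) {
    const double target = current_dac + dcc_slope * phase_diff;
    // Round to the nearest DAC code.
    const int rounded = static_cast<int>(target + (target >= 0 ? 0.5 : -0.5));
    // Clamp to the [infinity, macro] search range read from the OTP AF segment.
    const int lo = std::min(dac_infinity, dac_macro);
    const int hi = std::max(dac_infinity, dac_macro);
    return std::min(std::max(rounded, lo), hi);
}
```

With a slope of 50 DAC codes per PD unit, a phase difference of 2.0 at DAC 300 would give a target of 400; results outside the OTP range are clamped to its ends.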

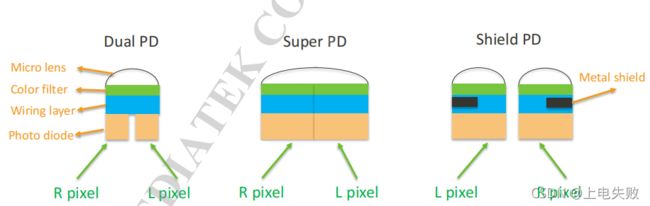

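There are three main PD pixel types: Dual PD, Super PD, and Shield PD.

The sensors I have worked with so far are all Shield PD (half of each PD pixel is masked). For the signal-to-noise ratio (SNR = S/N, where S is the image signal value the camera would produce with no noise and N is the noise generated by the camera itself), higher is generally better. Shield PD usually has a relatively poor SNR, but it supports large pixel sizes.

Dual PD splits the photosensitive area at the bottom of every pixel in two, so the phase difference is obtained within a single pixel. It is also called 2PD or full-pixel dual-core focusing, and its PD coverage is 100%.

Super PD has two adjacent pixels share one micro lens (the microlens improves light sensitivity) to obtain the phase difference information.

Structure of a CMOS sensor: 1. microlens 2. color filter 3. photosensitive layer (photodiode) 4. high-speed transfer circuit (a mono sensor has no color filter and outputs black and white)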

SensorType: the types differ mainly in whether the PD pixels and the PD value are handled by the sensor or by the ISP. The one encountered most often is Type 2 (PDAF_SUPPORT_CAMSV).

| Sensor Type | Character | Porting guide |
|---|---|---|
| Type1 | PD pixel corrected by sensor; PD value calculated by sensor | Type1 PDAF porting |
| Type2 | PD pixel corrected by sensor; PD pixel output to ISP via VC | Type2 PDAF porting |
| Type3 | PD pixel corrected by ISP; PD pixel extracted by ISP from raw image; PD pixel extracted by PDAF algo on ISP3.0 | Type3 PDAF porting |
| Dual PD | No need to correct PD pixel; PD pixel extracted by ISP under mode 1; PD pixel output to ISP under mode 3 | DualPD PDAF porting |

vendor/mediatek/proprietary/custom/mt6853/hal/pd_buf_mgr/src/pd_buf_mgr.cpp (MTK)

vendor/mediatek/proprietary/custom/mt6853/hal/pd_buf_mgr/src/pd_buf_mgr_open.cpp (3rd party)

vendor/mediatek/proprietary/hardware/mtkcam/aaa/source/common/hal3a/v3.0/HAL3AFlowCtrl.cpp

vendor/mediatek/proprietary/hardware/mtkcam/aaa/source/common/utils/pdtblgen/pdtblgen.cpp

vendor/mediatek/proprietary/hardware/mtkcam/aaa/source/isp_6s/af_assist_mgr.cpp

vendor/mediatek/proprietary/custom/mt6853/hal/pd_buf_mgr/src/pd_buf_mgr.cpp

vendor/mediatek/proprietary/custom/mt6853/hal/pd_buf_mgr/src/pd_buf_mgr/xxx_mipi_raw/pd_xxx_mipiraw.cpp

vendor/mediatek/proprietary/hardware/mtkcam/drv/src/mem/common/v2/cam_cal_drv.cpp

vendor/mediatek/proprietary/hardware/mtkcam/drv/src/mem/common/v2/cam_cal_helper.cpp

vendor/mediatek/proprietary/custom/common/hal/imgsensor_src/camera_calibration_cam_cal.cpp

kernel-4.14/drivers/misc/mediatek/cam_cal/src/common/v2/eeprom_driver.c

static struct SET_PD_BLOCK_INFO_T imgsensor_pd_info_1920_1080 =

{

.i4OffsetX = 16, // x offset of PD area

.i4OffsetY = 12, // y offset of PD area

.i4PitchX = 16, // x pitch/width of a PD block

.i4PitchY = 16, // y pitch/height of a PD block

.i4PairNum = 8, // num of pairs L/R PD pixel within a PD block

.i4SubBlkW = 8, // x interval of 1 pair L/R PD pixel within a PD block

.i4SubBlkH = 4, // y interval of 1 pair L/R PD pixel within a PD block

.i4BlockNumX = 120, // PD block number in X direction

.i4BlockNumY = 67, // PD block number in Y direction

.iMirrorFlip = 0,

.i4PosR = {

{16,13}, {24,13}, {20,17}, {28,17},

{16,21}, {24,21}, {20,25}, {28,25},

},

.i4PosL = {

{17,13}, {25,13}, {21,17}, {29,17},

{17,21}, {25,21}, {21,25}, {29,25},

},

.i4Crop = { {0, 0}, {0, 0}, {1040, 960}, {0, 0}, {0, 0}, {1040,960},{0, 0}, {0, 0}, {0, 0}, {0, 0} },

};

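(1) The first four variables and the L/R coordinates can be taken directly from the PD INI document.

(2) i4PairNum is the number of L/R pixel pairs in one block.

(3) i4SubBlkW and i4SubBlkH correspond to PD_DENSITY_X/Y in the PD INI document.

(4) i4BlockNumX and i4BlockNumY correspond to PD_BLOCK_NUM_X_Y in the PD INI document.

(5) iMirrorFlip gives the orientation of the output image relative to the orientation the module vendor used when capturing for calibration.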

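The pd_info values are all defined against the 4000*3000 full size. The original values were computed as:

i4BlockNumX = ( 4000 - 16 * 2 ) / 16 = 248

i4BlockNumY = ( 3000 - 12 * 2 ) / 16 = 186

Because 1920*1080 is a cropped mode, i4BlockNumX and i4BlockNumY must be changed, so they no longer match the values in the OTP:

i4BlockNumX = 1920 / 16 = 120

i4BlockNumY = 1080 / 16 = 67.5, rounded down to 67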
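
The block-count arithmetic above can be checked with a small helper. This is only a sketch: `PdBlockNum` is a hypothetical name, and passing an offset of 0 for the cropped mode mirrors how these notes divide the cropped size directly by the pitch.

```cpp
// Number of whole PD blocks along one axis.
// size:   image width or height in pixels
// offset: PD area offset on each side (i4OffsetX / i4OffsetY)
// pitch:  PD block width or height (i4PitchX / i4PitchY)
// Integer division truncates, which matches rounding 67.5 down to 67.
constexpr int PdBlockNum(int size, int offset, int pitch) {
    return (size - 2 * offset) / pitch;
}
```

For the full size, `PdBlockNum(4000, 16, 16)` and `PdBlockNum(3000, 12, 16)` reproduce 248 and 186; for the cropped mode, `PdBlockNum(1920, 0, 16)` and `PdBlockNum(1080, 0, 16)` reproduce 120 and 67.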

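i4Crop records [Scenario][Crop] -> [x_crop][y_crop]; here RAW_OFFSET_X = 1040 and RAW_OFFSET_Y = 960.

1920*1080 is used in the driver to configure SensorMode 6, so the earlier {1040, 960} entry, which sits in the SensorMode 2 slot, should not need to be filled in.

x_crop = (4000 - 1920) / 2 = 1040 and y_crop = (3000 - 1080) / 2 = 960, that is, 4000 - 1040 * 2 = 1920 and 3000 - 960 * 2 = 1080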
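
As a cross-check of the i4Crop values, the offset of a centered crop is half the difference between the full size and the cropped size. The helper below is a hypothetical sketch that assumes the crop is centered on the 4000*3000 frame.

```cpp
// Offset of a centered crop along one axis: the same amount is removed
// from each side, so the offset is half the size difference.
constexpr int CenterCropOffset(int full_size, int cropped_size) {
    return (full_size - cropped_size) / 2;
}

// Inverse check: the size left after removing `offset` from both sides.
constexpr int SizeAfterCenterCrop(int full_size, int offset) {
    return full_size - 2 * offset;
}
```

`CenterCropOffset(4000, 1920)` and `CenterCropOffset(3000, 1080)` give 1040 and 960, the values stored in i4Crop.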

vendor/mediatek/proprietary/custom/mt6853/hal/pd_buf_mgr/src/pd_buf_mgr/xxx_mipi_raw/pd_xxx_mipiraw.cpp

MBOOL PD_xxxMIPIRAW::IsSupport( SPDProfile_t &iPdProfile)

{

if (( iPdProfile.i4SensorMode == 5) && ((iPdProfile.uImgXsz == 1920) && (iPdProfile.uImgYsz == 1080)))

{

m_PDBufXSz = 240;

m_PDBufYSz = 536;

if(m_PDBuf)

{

delete[] m_PDBuf; // buffer is allocated with new[] below, so release it with delete[]

m_PDBuf = nullptr;

}

m_PDBufSz = m_PDBufXSz*m_PDBufYSz;

m_PDBuf = new uint16_t [m_PDBufSz];

ret = MTRUE;

AAA_LOGD("[1080P 60fps] is Support : i4SensorMode:%d w[%d] s[%d]\n", iPdProfile.i4SensorMode,iPdProfile.uImgXsz, iPdProfile.uImgYsz);

}

...

}

m_PDBufXSz is the number of pixels sent per line: pixel num = PitchX / DensityX * BlockNumX = 16 / 8 * 120 = 240

m_PDBufYSz is the number of lines sent: line num = PitchY / DensityY * 2 * BlockNumY = 16 / 4 * 2 * 67 = 536
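
The two buffer dimensions can be derived from the pd_info fields. The sketch below uses hypothetical names and assumes that DensityX/DensityY are i4SubBlkW/i4SubBlkH (8 and 4 in the struct above) and that the factor of 2 covers the separate L and R lines.

```cpp
struct PdBufSize {
    int x;  // PD pixels per line
    int y;  // number of PD lines (L and R lines counted separately)
};

// pitch_*:     PD block size (i4PitchX / i4PitchY)
// density_*:   spacing of one L/R pair inside a block (i4SubBlkW / i4SubBlkH)
// block_num_*: block count per axis (i4BlockNumX / i4BlockNumY)
constexpr PdBufSize PdBufferSize(int pitch_x, int pitch_y,
                                 int density_x, int density_y,
                                 int block_num_x, int block_num_y) {
    return PdBufSize{pitch_x / density_x * block_num_x,
                     pitch_y / density_y * 2 * block_num_y};
}
```

For the 1920*1080 mode, `PdBufferSize(16, 16, 8, 4, 120, 67)` gives 240 x 536, matching m_PDBufXSz and m_PDBufYSz in IsSupport.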

{//AF_NVRAM

{ // i4HybridAFCoefs1[64]

1, // [0] hybrid_default_param

37, // [1] tracking_width

38, // [2] tracking_height

3, // [3] max_pd_win_x

3, // [4] max_pd_win_y

...

{//PD_NVRAM_T

{//PD_CALIBRATION_DATA_T

{0},

0,

},//PD_CALIBRATION_DATA_T

{//PD_ALGO_TUNING_T

//--------------------------------------------------------------------------------/

// Section: PD Block Size

// Description: Determine PD block width and height

//

// i4FocusPDSizeX (width)

// i4FocusPDSizeY (height)

// range: [0] 32 to (raw_width/x_density), [0] 24 to (raw_height/y_density)

// default:

// S5K3P8: SizeX=32, SizeY=24 (density_x=16, density_y=16)

// OV13855: SizeX=32, SizeY=48 (density_x=16, density_y=8)

// IMX258: SizeX=64, SizeY=24 (density_x=8, density_y=16)

// IMX398: SizeX=64, SizeY=48 (density_x=8, density_y=8)

// S5K2L8: SizeX=240, SizeY=96 (density_x=2, density_y=4)

// constraints: must be a multiple of 4

// effect: A large block takes longer computation time than a small block.

//--------------------------------------------------------------------------------/

28, // i4FocusPDSizeX

32, // i4FocusPDSizeY

//--------------------------------------------------------------------------------/

i4FocusPDSizeY = RAW_HEIGHT * tracking_height / 100 / max_pd_win_y / PD_DENSITY_Y

= 1080 * 38 / 100 / 3 / 4 = 34.2, rounded down to a multiple of 4 = 32

i4FocusPDSizeX = RAW_WIDTH * tracking_width / 100 / max_pd_win_x / PD_DENSITY_X

= 1920 * 37 / 100 / 3 / 8 = 29.6, rounded down to a multiple of 4 = 28
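
The same calculation as a small function, including the rounding down to a multiple of 4 that the tuning comment requires. `FocusPdSize` is a hypothetical helper for checking the numbers, not part of the MTK code.

```cpp
// raw_size:         RAW width or height in pixels
// tracking_percent: tracking_width / tracking_height from i4HybridAFCoefs1
// max_pd_win:       max_pd_win_x / max_pd_win_y
// pd_density:       PD_DENSITY_X / PD_DENSITY_Y
int FocusPdSize(int raw_size, int tracking_percent, int max_pd_win,
                int pd_density) {
    // Integer division truncates the fractional part (34.2 -> 34, 29.6 -> 29).
    const int size =
        raw_size * tracking_percent / (100 * max_pd_win * pd_density);
    // Round down to the nearest multiple of 4.
    return size / 4 * 4;
}
```

`FocusPdSize(1080, 38, 3, 4)` gives 32 and `FocusPdSize(1920, 37, 3, 8)` gives 28, the values entered in PD_ALGO_TUNING_T.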

static struct SENSOR_WINSIZE_INFO_STRUCT imgsensor_winsize_info[7] = {

{8032, 6032, 0, 12, 8032, 6008, 4016, 3004, 8, 2, 4000, 3000, 0, 0, 4000, 3000}, //preview(4000 x 3000)

{8032, 6032, 0, 12, 8032, 6008, 4016, 3004, 8, 2, 4000, 3000, 0, 0, 4000, 3000}, //capture(4000 x 3000)

{8032, 6032, 0, 12, 8032, 6008, 4016, 3004, 8, 2, 4000, 3000, 0, 0, 4000, 3000}, // VIDEO (4000 x 3000)

{8032, 6032, 0, 1568, 8032, 2896, 2008, 724, 364, 2, 1280, 720, 0, 0, 1280, 720}, // high speed video (1280 x 720)

{8032, 6032, 0, 12, 8032, 6008, 4016, 3004, 8, 2, 4000, 3000, 0, 0, 4000, 3000}, // slim video (4000 x 3000)

{8032, 6032, 2080, 1932, 3872, 2168, 1936, 1084, 8, 2, 1920, 1080,0, 0, 1920, 1080}, // custom1 (1920x 1080)

{8032, 6032, 0, 14, 8032, 6004, 8032, 6004, 16, 2, 8000, 6000, 0, 0, 8000, 6000}, //remosaic (8000 x 6000)

};

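8032 x 6032 is the sensor's effective pixel array. The pipeline is crop → binning → crop; if an even smaller size is needed, a further crop follows.

To keep the image centered, the (0, 12) offset is cropped symmetrically, here from both the top and the bottom.

8032 - (0 * 2) = 8032 and 6032 - (12 * 2) = 6008, then binning

gives 4016 x 3004, which is cropped again:

4016 - (8 * 2) = 4000 and 3004 - (2 * 2) = 3000, the final output TG size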
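
The crop → binning → crop chain can be replayed step by step. The types and function names below are hypothetical; the numbers are taken from the preview and custom1 rows of imgsensor_winsize_info, with binning factor 2 inferred from 8032 → 4016.

```cpp
struct WinSize {
    int w;
    int h;
};

// Symmetric crop: remove x_off columns from each side and y_off rows
// from both the top and the bottom, so the center stays aligned.
constexpr WinSize CenterCrop(WinSize in, int x_off, int y_off) {
    return WinSize{in.w - 2 * x_off, in.h - 2 * y_off};
}

// Binning by an integer factor in both directions.
constexpr WinSize Bin(WinSize in, int factor) {
    return WinSize{in.w / factor, in.h / factor};
}

// Preview row: 8032x6032 -> crop (0,12) -> bin 2 -> crop (8,2).
constexpr WinSize PreviewTgSize() {
    return CenterCrop(Bin(CenterCrop(WinSize{8032, 6032}, 0, 12), 2), 8, 2);
}

// Custom1 row: 8032x6032 -> crop (2080,1932) -> bin 2 -> crop (8,2).
constexpr WinSize Custom1TgSize() {
    return CenterCrop(Bin(CenterCrop(WinSize{8032, 6032}, 2080, 1932), 2), 8, 2);
}
```

`PreviewTgSize()` yields 4000 x 3000 and `Custom1TgSize()` yields 1920 x 1080, matching the last two fields of each row.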

static struct SENSOR_VC_INFO_STRUCT SENSOR_VC_INFO[4]=

{

/* Preview mode setting */

{0x02, //VC_Num

0x0a, //VC_PixelNum

0x00, //ModeSelect /* 0:auto 1:direct */

0x00, //EXPO_Ratio /* 1/1, 1/2, 1/4, 1/8 */

0x00, //0DValue /* 0D Value */

0x00, //RG_STATSMODE /* STATS division mode 0:16x16 1:8x8 2:4x4 3:1x1 */

0x00, 0x2B, 0x0FA0, 0x0BB8, // VC0 image data

0x00, 0x00, 0x0000, 0x0000, // VC1 MVHDR

0x01, 0x30, 0x026C, 0x05D0, // VC2 PDAF

0x00, 0x00, 0x0000, 0x0000}, // VC3

/* Capture mode setting */

/* Video mode setting */

/* Custom1 mode setting */

{0x02, //VC_Num

0x0a, //VC_PixelNum

0x00, //ModeSelect /* 0:auto 1:direct */

0x00, //EXPO_Ratio /* 1/1, 1/2, 1/4, 1/8 */

0x00, //0DValue /* 0D Value */

0x00, //RG_STATSMODE /* STATS division mode 0:16x16 1:8x8 2:4x4 3:1x1 */

0x00, 0x2B, 0x0780, 0x0438, // VC0 image data

0x00, 0x00, 0x0000, 0x0000, // VC1 MVHDR

0x01, 0x30, 0x012C, 0x0218, // VC2 PDAF

0x00, 0x00, 0x0000, 0x0000}, // VC3

};

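Type 2 uses VC (virtual channel control). The main job of VC is to split the data in the stream across different channels so that each goes to its own processing flow. Every frame contains an image frame plus a PD virtual frame (the Bayer data and the PD data are packed according to the MIPI protocol).

Image frame (Image Data, VC=0, DT=0x2B, RAW10)

0x0780 = 1920, 0x0438 = 1080

PD virtual frame (PDAF Data, VC=1, DT=0x30; the channels are told apart by data type)

0x012C = 120 * 2 * 10 / 8 = 300 // .i4BlockNumX = 120, PD block number in X direction

0x0218 = 67 * 4 * 2 = 536 // .i4BlockNumY = 67, PD block number in Y direction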
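
The PDAF width and height written into SENSOR_VC_INFO can be recomputed from the block counts. This is a sketch with hypothetical function names; it assumes RAW10 packing (10 bits per pixel, so 10/8 bytes) and the pitch and density values from the pd_info struct shown earlier.

```cpp
// Bytes per PD line: PD pixels per line, packed as RAW10.
constexpr int VcPdLineBytes(int block_num_x, int pitch_x, int density_x) {
    return block_num_x * (pitch_x / density_x) * 10 / 8;
}

// Number of PD lines: rows of pairs per block, times 2 for L and R.
constexpr int VcPdLines(int block_num_y, int pitch_y, int density_y) {
    return block_num_y * (pitch_y / density_y) * 2;
}
```

`VcPdLineBytes(120, 16, 8)` is 300 (0x012C) and `VcPdLines(67, 16, 4)` is 536 (0x0218) for Custom1; with the full-size block counts 248 and 186 the same functions give 620 (0x026C) and 1488 (0x05D0), the preview entry.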

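PDAF linearity test:

With ISO < 200, aim at a diamond chart from 20 cm. In a scene where Confidence > 60, capture the log, look up the PD Value and AF DAC entries in it, and check whether the two are linearly related.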
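
One way to judge the linearity is to fit a straight line to the (PD Value, AF DAC) pairs pulled from the log and look at the correlation coefficient. The sketch below is a generic ordinary least-squares fit, not MTK's tool; the struct and function names are hypothetical, and the log parsing is left out because the log format is not shown here.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct LineFit {
    double slope;      // DAC codes per PD unit
    double intercept;  // DAC at PD value 0
    double r;          // Pearson correlation; |r| near 1 means linear
};

// Least-squares fit of dac = slope * pd + intercept.
// Precondition: at least two samples, and neither series is constant.
LineFit FitLine(const std::vector<double>& pd, const std::vector<double>& dac) {
    const std::size_t n = pd.size();
    double sx = 0, sy = 0, sxx = 0, syy = 0, sxy = 0;
    for (std::size_t i = 0; i < n; ++i) {
        sx += pd[i];
        sy += dac[i];
        sxx += pd[i] * pd[i];
        syy += dac[i] * dac[i];
        sxy += pd[i] * dac[i];
    }
    const double cov = n * sxy - sx * sy;
    const double var_x = n * sxx - sx * sx;
    const double var_y = n * syy - sy * sy;
    LineFit fit;
    fit.slope = cov / var_x;
    fit.intercept = (sy - fit.slope * sx) / n;
    fit.r = cov / std::sqrt(var_x * var_y);
    return fit;
}
```

The fitted slope plays the same role as the PDAF linearity slope mentioned for DCC. What threshold on `r` counts as a pass is a project decision and is not specified in these notes.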

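A short aside on OTP-related background

LSC (Lens Shading Correction): gridx and gridy give the horizontal and vertical grid sizes. Brightness falls off unevenly toward the edges of the image; if this is handled poorly, the algorithm output may show vertical stripes, halos, and similar artifacts.

BLC (Black Level Correction): an image without black level correction comes out brighter, which hurts its contrast.

The higher the sensor's output voltage, the larger the current. The sensor circuit itself has dark current, so there is some output voltage even when no light comes in. That portion has to be removed by subtracting a calibration value from every pixel. For RAW10 the OB (Optical Black) value is typically 64; watch for register changes in the init setting.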
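
The subtraction itself is simple. The sketch below assumes a plain per-pixel subtraction with clamping at zero and the RAW10 default of 64 mentioned above; a real ISP may also rescale the result, which is not covered here, and the function name is hypothetical.

```cpp
#include <cstdint>

// Remove the optical-black pedestal from one RAW10 sample (0..1023).
// Values at or below the pedestal clamp to 0 instead of wrapping around.
constexpr std::uint16_t SubtractBlackLevel(std::uint16_t raw10,
                                           std::uint16_t ob = 64) {
    return static_cast<std::uint16_t>(raw10 > ob ? raw10 - ob : 0);
}
```

A full-scale sample of 1023 becomes 959, and anything at or below 64 becomes 0.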

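AWB (Auto White Balance): an image in Bayer format is generally made up of four channel values (R, Gr, Gb, B). AWB mostly cares about the R/G and B/G ratios.

Gr and Gb are usually very close. When R/G increases, the R gain decreases, so the whole image has less of the R component and looks greenish or bluish.

The data burned into the OTP is mainly the R, Gr, Gb, and B values of the current module and of the golden module (a roughly average unit from a production batch, used as the baseline).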