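Full Chinese Translation of TransE (Translating Embeddings for Modeling Multi-relational Data)

I recently started working with natural language processing and could not follow the English paper, so I translated it with the help of Google Translate. I still cannot follow it...

Readers passing by are welcome to point out errors in the translation.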

Translating Embeddings for Modeling Multi-relational Data

- Abstract

- 1 Introduction

- 2 Translation-based model

- 3 Related work

- 4 Experiments

- 4.1 Data sets

- 4.2 Experimental setup

- 4.3 Link prediction

- 4.4 Learning to predict new relationships with few examples

- 5 Conclusion and future work

- References

Abstract

We consider the problem of embedding entities and relationships of multi-relational data in low-dimensional vector spaces. Our objective is to propose a canonical model which is easy to train, contains a reduced number of parameters and can scale up to very large databases. Hence, we propose TransE, a method which models relationships by interpreting them as translations operating on the low-dimensional embeddings of the entities. Despite its simplicity, this assumption proves to be powerful since extensive experiments show that TransE significantly outperforms state-of-the-art methods in link prediction on two knowledge bases. Besides, it can be successfully trained on a large scale data set with 1M entities, 25k relationships and more than 17M training samples.

我们考虑将多关系数据中的实体和关系嵌入低维向量空间的问题。我们的目标是提出一种易于训练的规范模型,该模型参数较少,并且可以扩展到非常大的数据库。因此,我们提出了TransE,一种将关系解释为作用于实体低维嵌入上的平移(translation)来对关系建模的方法。尽管它很简单,但大量实验表明TransE在两个知识库的链接预测中明显优于最新方法,因此该假设被证明是有效的。此外,它可以在具有1M个实体、25k个关系和超过1700万个训练样本的大规模数据集上成功进行训练。

1 Introduction

Multi-relational data refers to directed graphs whose nodes correspond to entities and edges of the form (head, label, tail) (denoted (h, ℓ, t)), each of which indicates that there exists a relationship of name label between the entities head and tail. Models of multi-relational data play a pivotal role in many areas. Examples are social network analysis, where entities are members and edges (relationships) are friendship/social relationship links, recommender systems where entities are users and products and relationships are buying, rating, reviewing or searching for a product, or knowledge bases (KBs) such as Freebase[^1], Google Knowledge Graph[^2] or GeneOntology[^3], where each entity of the KB represents an abstract concept or concrete entity of the world and relationships are predicates that represent facts involving two of them. Our work focuses on modeling multi-relational data from KBs (Wordnet [9] and Freebase [1] in this paper), with the goal of providing an efficient tool to complete them by automatically adding new facts, without requiring extra knowledge.

[^1] freebase.com

[^2] google.com/insidesearch/features/search/knowledge.html

[^3] geneontology.org

多关系数据是指有向图,其节点对应于实体,边的形式为(head, label, tail)(表示为(h,ℓ,t)),每条边表示实体head与tail之间存在名为label的关系。多关系数据模型在许多领域起着关键作用。例如社交网络分析,其中实体是成员,边(关系)是友谊/社交关系链接;推荐系统,其中实体是用户和产品,关系是购买、评级、评论或搜索产品;或知识库(KB),例如Freebase[^1]、Google Knowledge Graph[^2]或GeneOntology[^3],其中KB的每个实体代表世界上的抽象概念或具体实体,而关系是表示涉及其中两个实体的事实的谓词。我们的工作重点是对知识库(本文中为Wordnet [9]和Freebase [1])中的多关系数据进行建模,目的是提供一种有效的工具,通过自动添加新事实来补全这些知识库,而无需额外的知识。

Modeling multi-relational data

In general, the modeling process boils down to extracting local or global connectivity patterns between entities, and prediction is performed by using these patterns to generalize the observed relationship between a specific entity and all others. The notion of locality for a single relationship may be purely structural, such as the friend of my friend is my friend in social networks, but can also depend on the entities, such as those who liked Star Wars IV also liked Star Wars V, but they may or may not like Titanic. In contrast to single-relational data where ad-hoc but simple modeling assumptions can be made after some descriptive analysis of the data, the difficulty of relational data is that the notion of locality may involve relationships and entities of different types at the same time, so that modeling multi-relational data requires more generic approaches that can choose the appropriate patterns considering all heterogeneous relationships at the same time.

建模多关系数据

通常,建模过程归结为提取实体之间的局部或全局连接模式,并通过使用这些模式来概括特定实体与所有其他实体之间观察到的关系来进行预测。单一关系的本地性概念可能纯粹是结构性的,例如我朋友的朋友是我在社交网络中的朋友,但也可以依赖于实体,例如喜欢《星球大战四》的人也喜欢《星球大战五》,但是他们可能喜欢也可能不喜欢《泰坦尼克号》。与可以在对数据进行描述性分析后做出即席但简单的建模假设的单关系数据相反,关系数据的困难在于局部性的概念可能同时涉及不同类型的关系和实体,因此,对多关系数据建模需要更通用的方法,这些方法可以同时考虑所有异构关系来选择适当的模式。

Following the success of user/item clustering or matrix factorization techniques in collaborative filtering to represent non-trivial similarities between the connectivity patterns of entities in single-relational data, most existing methods for multi-relational data have been designed within the framework of relational learning from latent attributes, as pointed out by [6]; that is, by learning and operating on latent representations (or embeddings) of the constituents (entities and relationships). Starting from natural extensions of these approaches to the multi-relational domain such as non-parametric Bayesian extensions of the stochastic blockmodel [7, 10, 17] and models based on tensor factorization [5] or collective matrix factorization [13, 11, 12], many of the most recent approaches have focused on increasing the expressivity and the universality of the model in either Bayesian clustering frameworks [15] or energy-based frameworks for learning embeddings of entities in low-dimensional spaces [3, 15, 2, 14]. The greater expressivity of these models comes at the expense of substantial increases in model complexity which results in modeling assumptions that are hard to interpret, and in higher computational costs. Besides, such approaches are potentially subject to either overfitting since proper regularization of such high-capacity models is hard to design, or underfitting due to the non-convex optimization problems with many local minima that need to be solved to train them. As a matter of fact, it was shown in [2] that a simpler model (linear instead of bilinear) achieves almost as good performance as the most expressive models on several multi-relational data sets with a relatively large number of different relationships. This suggests that even in complex and heterogeneous multi-relational domains simple yet appropriate modeling assumptions can lead to better trade-offs between accuracy and scalability.

继用户/项目聚类或矩阵分解技术在协同过滤中成功地表示单关系数据中实体连接模式之间的非平凡相似性之后,正如[6]所指出的,大多数现有的多关系数据方法都是在“基于潜在属性的关系学习”框架内设计的;也就是说,通过学习和操作各组成部分(实体和关系)的潜在表示(或嵌入)。从这些方法对多关系域的自然扩展开始,例如随机块模型的非参数贝叶斯扩展[7,10,17]和基于张量分解[5]或集合矩阵分解[13,11,12]的模型,许多最新方法都集中于提高模型的表达能力和通用性,无论是在贝叶斯聚类框架[15]中,还是在用于学习低维空间中实体嵌入的基于能量的框架[3,15,2,14]中。这些模型更强的表达能力是以模型复杂度的大幅增加为代价的,这导致建模假设难以解释,计算成本也更高。此外,由于难以为此类大容量模型设计合适的正则化,此类方法可能会过拟合;或者由于训练它们需要求解具有许多局部极小值的非凸优化问题,而可能欠拟合。实际上,[2]表明,在几个具有相对大量不同关系的多关系数据集上,较简单的模型(线性而不是双线性)可实现与最具表达能力的模型几乎相同的性能。这表明,即使在复杂且异构的多关系域中,简单而适当的建模假设也可以在准确性和可扩展性之间取得更好的权衡。

Relationships as translations in the embedding space

In this paper, we introduce TransE, an energy-based model for learning low-dimensional embeddings of entities. In TransE, relationships are represented as translations in the embedding space: if (h,ℓ,t) holds, then the embedding of the tail entity t should be close to the embedding of the head entity h plus some vector that depends on the relationship ℓ. Our approach relies on a reduced set of parameters as it learns only one low-dimensional vector for each entity and each relationship.

关系作为嵌入空间中的平移

在本文中,我们介绍了TransE,一种基于能量的模型,用于学习实体的低维嵌入。在TransE中,关系表示为嵌入空间中的平移:如果(h,ℓ,t)成立,则尾部实体t的嵌入应与头部实体h的嵌入加上依赖于该关系的向量ℓ接近。我们的方法依靠减少的参数集,因为它对于每个实体和每个关系仅学习一个低维向量。

The main motivation behind our translation-based parameterization is that hierarchical relationships are extremely common in KBs and translations are the natural transformations for representing them. Indeed, considering the natural representation of trees (i.e. embeddings of the nodes in dimension 2), the siblings are close to each other and nodes at a given height are organized on the x-axis, while the parent-child relationship corresponds to a translation on the y-axis. Since a null translation vector corresponds to an equivalence relationship between entities, the model can then represent the sibling relationship as well. Hence, we chose to use our parameter budget per relationship (one low-dimensional vector) to represent what we considered to be the key relationships in KBs. Another, secondary, motivation comes from the recent work of [8], in which the authors learn word embeddings from free text, and some 1-to-1 relationships between entities of different types, such as “capital of” between countries and cities, are (coincidentally rather than willingly) represented by the model as translations in the embedding space. This suggests that there may exist embedding spaces in which 1-to-1 relationships between entities of different types may, as well, be represented by translations. The intention of our model is to enforce such a structure of the embedding space.

我们基于平移的参数化背后的主要动机是,层次关系在KB中极为常见,而平移是表示它们的自然变换。实际上,考虑树的自然表示(即节点在2维中的嵌入),兄弟节点彼此靠近,给定高度的节点沿x轴排列,父子关系则对应于y轴上的平移。由于零平移向量对应于实体之间的等价关系,因此模型也可以表示兄弟关系。因此,我们选择使用每个关系的参数预算(一个低维向量)来表示我们认为是KB中关键的关系。另一个次要动机来自[8]的最新工作,在该工作中,作者从自由文本中学习词嵌入,而不同类型实体之间的一些一对一关系,例如国家和城市之间的“首都”,被模型(巧合地而不是有意地)表示为嵌入空间中的平移。这表明可能存在这样的嵌入空间,其中不同类型的实体之间的一对一关系也可以由平移表示。我们模型的目的是强制嵌入空间具有这种结构。

Our experiments in Section 4 demonstrate that this new model, despite its simplicity and its architecture primarily designed for modeling hierarchies, ends up being powerful on most kinds of relationships, and can significantly outperform state-of-the-art methods in link prediction on real-world KBs. Besides, its light parameterization allows it to be successfully trained on a large scale split of Freebase containing 1M entities, 25k relationships and more than 17M training samples.

我们在第4节中的实验表明,尽管这种新模型简单易用,并且其体系结构主要是用于对层次结构进行建模而设计的,但最终它在大多数关系上都具有强大的功能,并且可以显著优于基于现实世界KBs的链接预测中的最新方法。此外,它的轻量级参数化使其可以在Freebase的大规模拆分中成功进行训练,其中包含1M个实体,25k个关系和超过1700万个训练样本。

In the remainder of the paper, we describe our model in Section 2 and discuss its connections with related methods in Section 3. We detail an extensive experimental study on Wordnet and Freebase in Section 4, comparing TransE with many methods from the literature. We finally conclude by sketching some future work directions in Section 5.

在本文的其余部分,我们将在第2节中描述我们的模型,并在第3节中讨论该模型与相关方法的联系。在第4节中,我们将对Wordnet和Freebase进行广泛的实验研究,并将TransE与文献中的许多方法进行比较。最后,我们在第5节中概述了一些未来的工作方向。

2 Translation-based model

Given a training set S of triplets (h, ℓ, t) composed of two entities h, t ∈ E (the set of entities) and a relationship ℓ ∈ L (the set of relationships), our model learns vector embeddings of the entities and the relationships. The embeddings take values in $\mathbb{R}^k$ (k is a model hyperparameter) and are denoted with the same letters, in boldface characters. The basic idea behind our model is that the functional relation induced by the ℓ-labeled edges corresponds to a translation of the embeddings, i.e. we want $\mathbf{h} + \boldsymbol{\ell} \approx \mathbf{t}$ when (h, ℓ, t) holds ($\mathbf{t}$ should be a nearest neighbor of $\mathbf{h} + \boldsymbol{\ell}$), while $\mathbf{h} + \boldsymbol{\ell}$ should be far away from $\mathbf{t}$ otherwise. Following an energy-based framework, the energy of a triplet is equal to $d(\mathbf{h} + \boldsymbol{\ell}, \mathbf{t})$ for some dissimilarity measure d, which we take to be either the L1 or the L2-norm.

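给定由三元组(h,ℓ,t)构成的训练集S,其中每个三元组由两个实体h,t∈E(实体的集合)和一个关系ℓ∈L(关系的集合)组成,我们的模型学习实体和关系的向量嵌入。嵌入在$\mathbb{R}^k$中取值(k是模型超参数),并用相同字母的黑体表示。我们模型背后的基本思想是,由ℓ标记的边引起的函数关系对应于嵌入的平移,即当(h,ℓ,t)成立时,我们希望$\mathbf{h} + \boldsymbol{\ell} \approx \mathbf{t}$($\mathbf{t}$应该是$\mathbf{h} + \boldsymbol{\ell}$的最近邻);否则$\mathbf{h} + \boldsymbol{\ell}$应远离$\mathbf{t}$。遵循基于能量的框架,三元组的能量等于$d(\mathbf{h} + \boldsymbol{\ell}, \mathbf{t})$,其中d是某种差异度量,我们将其取为L1或L2范数。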
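As an illustration of the energy just defined, here is a minimal sketch in plain Python. The function name `energy`, the entity and relationship names, and the 2-dimensional numbers are all made up for the example; they are not learned embeddings and not from the paper.

```python
import math

def energy(h, l, t, norm="L1"):
    """Energy d(h + l, t) of a triplet; lower means more plausible."""
    diff = [hi + li - ti for hi, li, ti in zip(h, l, t)]
    if norm == "L1":
        return sum(abs(x) for x in diff)
    if norm == "L2":
        return math.sqrt(sum(x * x for x in diff))
    raise ValueError("norm must be 'L1' or 'L2'")

# Toy 2-dimensional embeddings (hypothetical values, chosen by hand).
paris = [1.0, 2.0]
france = [1.5, 1.0]
germany = [3.0, 3.0]
capital_of = [0.5, -1.0]  # translation vector of the relationship

good = energy(paris, capital_of, france)   # h + l lands exactly on t
bad = energy(paris, capital_of, germany)   # h + l is far away from t
```

With these hand-picked vectors the valid triplet gets a lower energy than the invalid one, which is the behavior training is meant to produce.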

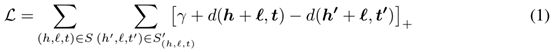

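To learn such embeddings, we minimize a margin-based ranking criterion over the training set:

$$\mathcal{L} = \sum_{(h,\ell,t) \in S} \; \sum_{(h',\ell,t') \in S'_{(h,\ell,t)}} \big[\gamma + d(\mathbf{h} + \boldsymbol{\ell}, \mathbf{t}) - d(\mathbf{h'} + \boldsymbol{\ell}, \mathbf{t'})\big]_+ \tag{1}$$

where $[x]_+$ denotes the positive part of x, γ > 0 is a margin hyperparameter, and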

其中[x] +表示x的正部分,γ> 0是余量超参数,并且

$$S'_{(h,\ell,t)} = \{(h',\ell,t) \mid h' \in E\} \cup \{(h,\ell,t') \mid t' \in E\} \tag{2}$$

The set of corrupted triplets, constructed according to Equation 2, is composed of training triplets with either the head or tail replaced by a random entity (but not both at the same time). The loss function (1) favors lower values of the energy for training triplets than for corrupted triplets, and is thus a natural implementation of the intended criterion. Note that for a given entity, its embedding vector is the same when the entity appears as the head or as the tail of a triplet.

根据等式2构造的损坏三元组集合由训练三元组组成,其头部或尾部被随机实体替换(但不会同时替换两者)。相较于损坏的三元组,损失函数(1)倾向于让训练三元组的能量值更低,因此是预期准则的自然实现。请注意,对于给定实体,无论它作为三元组的头还是尾出现,其嵌入向量都相同。
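The corruption rule and the per-pair term of the loss can be sketched as follows. This is only an illustration under assumptions: the helper names (`l1_energy`, `corrupt`, `margin_loss`), the 50/50 choice between corrupting head or tail, and the toy embeddings are hypothetical; the L1 distance is used for d.

```python
import random

def l1_energy(h, l, t):
    """d(h + l, t) with the L1 distance."""
    return sum(abs(a + b - c) for a, b, c in zip(h, l, t))

def corrupt(triplet, entities, rng):
    """Replace either the head or the tail (never both) by a random entity."""
    h, l, t = triplet
    if rng.random() < 0.5:
        return (rng.choice(entities), l, t)
    return (h, l, rng.choice(entities))

def margin_loss(pos, neg, ent, rel, gamma=1.0):
    """[gamma + d(h + l, t) - d(h' + l, t')]_+ for one training/corrupted pair."""
    h, l, t = pos
    h2, _, t2 = neg
    value = gamma + l1_energy(ent[h], rel[l], ent[t]) - l1_energy(ent[h2], rel[l], ent[t2])
    return max(0.0, value)

# Hypothetical toy embeddings.
ent = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [5.0, 5.0], "d": [1.5, 0.0]}
rel = {"r": [1.0, 0.0]}
pos = ("a", "r", "b")

near_miss = margin_loss(pos, ("a", "r", "d"), ent, rel)  # corrupted tail close to the true one
far_miss = margin_loss(pos, ("a", "r", "c"), ent, rel)   # corrupted tail already far away
```

A corrupted triplet whose energy already exceeds the training triplet's energy by more than γ contributes nothing to the loss; only near misses produce a gradient.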

The optimization is carried out by stochastic gradient descent (in minibatch mode), over the possible h, ℓ and t, with the additional constraint that the L2-norm of the embeddings of the entities is 1 (no regularization or norm constraints are given to the label embeddings ℓ). This constraint is important for our model, as it is for previous embedding-based methods [3, 6, 2], because it prevents the training process from trivially minimizing $\mathcal{L}$ by artificially increasing entity embedding norms.

优化通过随机梯度下降(小批量模式)在可能的h、ℓ和t上进行,并附加约束:实体嵌入的L2范数为1(对标签嵌入ℓ不施加正则化或范数约束)。与以前的基于嵌入的方法[3,6,2]一样,这个约束对我们的模型很重要,因为它防止训练过程通过人为增大实体嵌入的范数来平凡地使L最小化。

The detailed optimization procedure is described in Algorithm 1. All embeddings for entities and relationships are first initialized following the random procedure proposed in [4]. At each main iteration of the algorithm, the embedding vectors of the entities are first normalized. Then, a small set of triplets is sampled from the training set, and will serve as the training triplets of the minibatch. For each such triplet, we then sample a single corrupted triplet. The parameters are then updated by taking a gradient step with constant learning rate. The algorithm is stopped based on its performance on a validation set.

算法1描述了详细的优化过程。首先根据[4]中提出的随机过程初始化所有实体和关系的嵌入。在算法的每个主要迭代中,最先将实体的嵌入向量归一化。然后,从训练集中采样一小部分三元组,并将其作为微型批次的训练三元组。对于每个这样的三元组,我们然后采样一个损坏的三元组。然后通过采用具有恒定学习率的梯度步骤来更新参数。根据其在验证集上性能表现停止算法。
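The steps described above can be sketched in plain Python. This is a hedged illustration rather than the authors' Algorithm 1: the function and parameter names are invented, the initialization range 6/√k is an assumption about the procedure of [4], the L1 distance is used so that the subgradient is a simple sign, and a fixed number of steps replaces the validation-based stopping rule.

```python
import math
import random

def normalize(v):
    """Scale v to unit L2 norm (left unchanged if it is the zero vector)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)

def sign(x):
    return (x > 0) - (x < 0)

def accumulate(grad, key, vec, scale):
    """Add scale * vec to the gradient stored under key."""
    g = grad.setdefault(key, [0.0] * len(vec))
    for i, x in enumerate(vec):
        g[i] += scale * x

def train_transe(triplets, entities, relations, k=4, gamma=1.0, lr=0.01,
                 batch_size=2, steps=200, seed=0):
    rng = random.Random(seed)
    bound = 6.0 / math.sqrt(k)  # assumed initialization range
    ent = {e: [rng.uniform(-bound, bound) for _ in range(k)] for e in entities}
    rel = {r: normalize([rng.uniform(-bound, bound) for _ in range(k)])
           for r in relations}
    for step in range(steps):
        # 1. Normalize the entity embeddings (relationships are left free).
        for e in ent:
            ent[e] = normalize(ent[e])
        # 2. Sample a minibatch of training triplets.
        batch = [rng.choice(triplets) for _ in range(batch_size)]
        grad = {}
        for h, l, t in batch:
            # 3. Sample one corrupted triplet: replace head or tail, not both.
            if rng.random() < 0.5:
                h2, t2 = rng.choice(entities), t
            else:
                h2, t2 = h, rng.choice(entities)
            pos = [a + b - c for a, b, c in zip(ent[h], rel[l], ent[t])]
            neg = [a + b - c for a, b, c in zip(ent[h2], rel[l], ent[t2])]
            if gamma + sum(map(abs, pos)) - sum(map(abs, neg)) <= 0:
                continue  # hinge inactive: this pair contributes no gradient
            sp = [sign(x) for x in pos]
            sn = [sign(x) for x in neg]
            accumulate(grad, ("e", h), sp, 1.0)
            accumulate(grad, ("e", t), sp, -1.0)
            accumulate(grad, ("r", l), sp, 1.0)
            accumulate(grad, ("e", h2), sn, -1.0)
            accumulate(grad, ("e", t2), sn, 1.0)
            accumulate(grad, ("r", l), sn, -1.0)
        # 4. Gradient step with a constant learning rate.
        for (kind, name), g in grad.items():
            table = ent if kind == "e" else rel
            table[name] = [x - lr * gi for x, gi in zip(table[name], g)]
    return ent, rel

# Hypothetical toy knowledge base.
toy_triplets = [("paris", "capital_of", "france"), ("berlin", "capital_of", "germany")]
toy_entities = ["paris", "france", "berlin", "germany"]
ent, rel = train_transe(toy_triplets, toy_entities, ["capital_of"], steps=50, seed=1)
```

Because normalization happens at the start of each iteration, the returned entity vectors are only approximately unit-norm; a real implementation would also monitor a validation metric to decide when to stop.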

3 Related work

Section 1 described a large body of work on embedding KBs. We detail here the links between our model and those of [3] (Structured Embeddings or SE) and [14].

第1节介绍了嵌入KB的大量工作。我们在这里详细说明我们的模型与[3](结构化嵌入或SE)和[14]模型之间的联系。

SE [3] embeds entities into $\mathbb{R}^k$, and relationships into two matrices $L_1 \in \mathbb{R}^{k \times k}$ and $L_2 \in \mathbb{R}^{k \times k}$ such that $d(L_1 \mathbf{h}, L_2 \mathbf{t})$ is large for corrupted triplets (h, ℓ, t) (and small otherwise). The basic idea is that when two entities belong to the same triplet, their embeddings should be close to each other in some subspace that depends on the relationship. Using two different projection matrices for the head and for the tail is intended to account for the possible asymmetry of relationship ℓ. When the dissimilarity function takes the form of $d(\mathbf{x}, \mathbf{y}) = g(\mathbf{x} - \mathbf{y})$ for some $g : \mathbb{R}^k \to \mathbb{R}$ (e.g. g is a norm), then SE with an embedding of size k + 1 is strictly more expressive than our model with an embedding of size k, since linear operators in dimension k + 1 can reproduce affine transformations in a subspace of dimension k (by constraining the (k+1)th dimension of all embeddings to be equal to 1). SE, with $L_2$ as the identity matrix and $L_1$ taken so as to reproduce a translation, is then equivalent to TransE. Despite the lower expressiveness of our model, we still reach better performance than SE in our experiments. We believe this is because (1) our model is a more direct way to represent the true properties of the relationship, and (2) optimization is difficult in embedding models. For SE, greater expressiveness seems to be more synonymous to underfitting than to better performance. Training errors (in Section 4.3) tend to confirm this point.

SE [3]将实体嵌入到$\mathbb{R}^k$中,并将关系嵌入到两个矩阵$L_1 \in \mathbb{R}^{k \times k}$和$L_2 \in \mathbb{R}^{k \times k}$中,使得对于损坏的三元组(h,ℓ,t),$d(L_1 \mathbf{h}, L_2 \mathbf{t})$较大(否则较小)。其基本思想是,当两个实体属于同一个三元组时,它们的嵌入应在依赖于该关系的某个子空间中彼此接近。为头部和尾部使用两个不同的投影矩阵是为了考虑关系ℓ可能的不对称性。当对于某个$g : \mathbb{R}^k \to \mathbb{R}$(例如g为范数),差异函数采用$d(\mathbf{x}, \mathbf{y}) = g(\mathbf{x} - \mathbf{y})$的形式时,嵌入大小为k+1的SE严格地比嵌入大小为k的我们的模型更具表达能力,因为k+1维的线性算子可以在k维子空间中重现仿射变换(通过将所有嵌入的第k+1维约束为1)。以$L_2$为单位矩阵、并取$L_1$以重现平移的SE,则等效于TransE。尽管我们的模型表达能力较低,但在实验中我们仍然达到比SE更好的性能。我们认为这是因为:(1)我们的模型是表示关系真实属性的更直接的方法,并且(2)嵌入模型的优化很困难。对于SE,更强的表达能力似乎更多地意味着欠拟合,而不是更好的性能。训练误差(见第4.3节)倾向于证实这一点。
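The claim that a linear operator in dimension k + 1 can reproduce a translation in dimension k can be checked on a small example. The sketch below is an illustration with made-up numbers and hypothetical helper names (`matvec`, `translation_matrix`): each embedding is extended with a last coordinate fixed to 1, the first matrix of SE is built to act as x → x + ℓ, and the second is taken to be the identity.

```python
def matvec(m, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def translation_matrix(l):
    """(k+1) x (k+1) matrix that maps [x, 1] to [x + l, 1]."""
    k = len(l)
    rows = [[1.0 if i == j else 0.0 for j in range(k)] + [l[i]] for i in range(k)]
    rows.append([0.0] * k + [1.0])
    return rows

# Toy vectors in dimension k = 2 (hypothetical values).
h, l, t = [1.0, 2.0], [0.5, -1.0], [1.0, 1.5]

L1 = translation_matrix(l)
h_aug, t_aug = h + [1.0], t + [1.0]  # (k+1)th coordinate constrained to 1

# SE difference L1 h - L2 t with L2 = identity, versus the TransE difference h + l - t.
se_diff = [a - b for a, b in zip(matvec(L1, h_aug), t_aug)]
transe_diff = [a + b - c for a, b, c in zip(h, l, t)]
```

The SE difference equals the TransE difference padded with a zero, so any norm g gives the same energy in both models.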

Another related approach is the Neural Tensor Model [14]. A special case of this model corresponds to learning scores s(h, ℓ,t) (lower scores for corrupted triplets) of the form:

另一个相关的方法是神经张量模型[14]。此模型的一个特例对应于以下形式的学习分数s(h,ℓ,t)(损坏的三元组的分数较低):

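$$s(h, \ell, t) = \mathbf{h}^T L \mathbf{t} + \boldsymbol{\ell}_1^T \mathbf{h} + \boldsymbol{\ell}_2^T \mathbf{t} \tag{3}$$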

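where $L \in \mathbb{R}^{k \times k}$, $\boldsymbol{\ell}_1 \in \mathbb{R}^k$ and $\boldsymbol{\ell}_2 \in \mathbb{R}^k$, all of them depending on ℓ.

其中$L \in \mathbb{R}^{k \times k}$,$\boldsymbol{\ell}_1 \in \mathbb{R}^k$,$\boldsymbol{\ell}_2 \in \mathbb{R}^k$,它们都取决于ℓ。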

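If we consider TransE with the squared euclidean distance as dissimilarity function, we have:

$$d(\mathbf{h} + \boldsymbol{\ell}, \mathbf{t}) = \|\mathbf{h}\|_2^2 + \|\boldsymbol{\ell}\|_2^2 + \|\mathbf{t}\|_2^2 - 2\left(\mathbf{h}^T \mathbf{t} + \boldsymbol{\ell}^T (\mathbf{t} - \mathbf{h})\right).$$

Considering our norm constraints ($\|\mathbf{h}\|_2^2 = \|\mathbf{t}\|_2^2 = 1$) and the ranking criterion (1), in which $\|\boldsymbol{\ell}\|_2^2$ does not play any role in comparing corrupted triplets, our model thus involves scoring the triplets with $\mathbf{h}^T \mathbf{t} + \boldsymbol{\ell}^T (\mathbf{t} - \mathbf{h})$, and hence corresponds to the model of [14] (Equation (3)) where L is the identity matrix, $\boldsymbol{\ell}_1 = -\boldsymbol{\ell}$ and $\boldsymbol{\ell}_2 = \boldsymbol{\ell}$ ($\boldsymbol{\ell}_1$ being the vector multiplied with h and $\boldsymbol{\ell}_2$ the one multiplied with t). We could not run experiments with this model (since it was published at the same time as ours), but once again TransE has far fewer parameters: this could simplify the training and prevent underfitting, and may compensate for a lower expressiveness.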

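考虑到我们的范数约束($\|\mathbf{h}\|_2^2 = \|\mathbf{t}\|_2^2 = 1$)和排序准则(1),其中$\|\boldsymbol{\ell}\|_2^2$在比较损坏的三元组时不起任何作用,因此我们的模型相当于用$\mathbf{h}^T \mathbf{t} + \boldsymbol{\ell}^T (\mathbf{t} - \mathbf{h})$对三元组进行评分,从而对应于[14]的模型(等式(3)),其中L是单位矩阵,并且$\boldsymbol{\ell}_1 = -\boldsymbol{\ell}$、$\boldsymbol{\ell}_2 = \boldsymbol{\ell}$($\boldsymbol{\ell}_1$与h相乘,$\boldsymbol{\ell}_2$与t相乘)。我们无法使用此模型进行实验(因为它与我们的工作同时发表),但TransE同样具有少得多的参数:这可以简化训练并防止欠拟合,并可能弥补较低的表达能力。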
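The expansion of the squared Euclidean distance can be verified numerically. The sketch below uses made-up 2-dimensional vectors and assumes that the special case of [14] has the form s = hᵀLt + ℓ₁ᵀh + ℓ₂ᵀt; under that assumption, taking L as the identity with ℓ₁ = −ℓ and ℓ₂ = ℓ reproduces the TransE score.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

# Hypothetical vectors; h and t satisfy the unit-norm constraint.
h = unit([3.0, 4.0])
t = unit([1.0, -2.0])
l = [0.3, -0.7]

# Squared Euclidean distance between h + l and t, computed directly.
sq_dist = sum((a + b - c) ** 2 for a, b, c in zip(h, l, t))

# Same quantity through the expansion: norms minus twice the score.
score = dot(h, t) + dot(l, [a - b for a, b in zip(t, h)])
expanded = dot(h, h) + dot(l, l) + dot(t, t) - 2 * score

# Assumed form of the special case of [14] with L = I, l1 = -l, l2 = l.
l1 = [-x for x in l]
l2 = l
s_special = dot(h, t) + dot(l1, h) + dot(l2, t)
```

Since the norms of h and t are fixed to 1 and the norm of ℓ is shared by a training triplet and its corrupted version, ranking by distance is the same as ranking by the score.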

Nevertheless, the simple formulation of TransE, which can be seen as encoding a series of 2-way interactions (e.g. by developing the L2 version), involves drawbacks. For modeling data where 3-way dependencies between h, ℓ and t are crucial, our model can fail. For instance, on the small-scale Kinships data set [7], TransE does not achieve performance in cross-validation (measured with the area under the precision-recall curve) competitive with the state-of-the-art [11, 6], because such ternary interactions are crucial in this case (see discussion in [2]). Still, our experiments of Section 4 demonstrate that, for handling generic large-scale KBs like Freebase, one should first model properly the most frequent connectivity patterns, as TransE does.

然而,TransE的简单形式(可以看作是对一系列二元交互的编码,例如将L2版本展开即可看出)存在缺陷。对于h、ℓ和t之间的三元依赖性至关重要的数据,我们的模型可能会失败。例如,在小规模的Kinships(亲属关系)数据集[7]上,TransE在交叉验证中的性能(以精确率-召回率曲线下的面积衡量)无法与最新技术[11,6]相抗衡,因为在这种情况下,这种三元交互至关重要(请参见[2]中的讨论)。尽管如此,我们在第4节中的实验表明,要处理通用的大型KB(如Freebase),应该像TransE那样,首先正确地对最频繁的连接模式进行建模。

4 Experiments

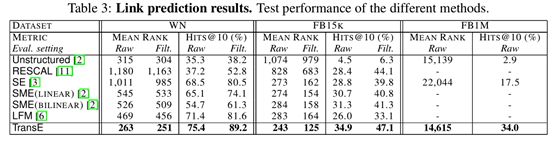

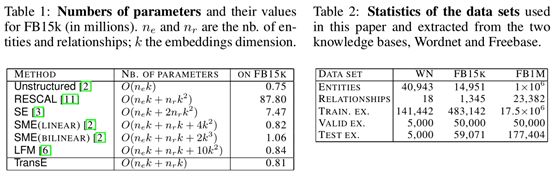

Our approach, TransE, is evaluated on data extracted from Wordnet and Freebase (their statistics are given in Table 2), against several recent methods from the literature which were shown to achieve the best current performance on various benchmarks and to scale to relatively large data sets.

我们的方法TransE在从Wordnet和Freebase提取的数据(它们的统计数据在表2中给出)上进行评估,并与文献中的几种最新方法进行了比较,这些方法已被证明在各种基准上具有当前最佳的性能,并且可以扩展到相对较大的数据集。

4.1 Data sets

Wordnet

This KB is designed to produce an intuitively usable dictionary and thesaurus, and support automatic text analysis. Its entities (termed synsets) correspond to word senses, and relationships define lexical relations between them. We considered the data version used in [2], which we denote WN in the following. Examples of triplets are (_score_NN_1, _hypernym, _evaluation_NN_1) or (_score_NN_2, _has_part, _musical_notation_NN_1).[^4]

[^4] WN is composed of senses; its entities are denoted by the concatenation of a word, its part-of-speech tag and a digit indicating which sense it refers to, i.e. _score_NN_1 encodes the first meaning of the noun “score”.

此KB旨在生成直观可用的词典和同义词库,并支持自动文本分析。它的实体(称为同义词集,synset)对应于词义,关系则定义了它们之间的词汇关系。我们考虑了[2]中使用的数据版本,以下将其表示为WN。三元组的示例是(_score_NN_1, _hypernym, _evaluation_NN_1)或(_score_NN_2, _has_part, _musical_notation_NN_1)。

Freebase

Freebase is a huge and growing KB of general facts; there are currently around 1.2 billion triplets and more than 80 million entities. We created two data sets with Freebase. First, to make a small data set to experiment on we selected the subset of entities that are also present in the Wikilinks database[^5] and that also have at least 100 mentions in Freebase (for both entities and relationships). We also removed relationships like ’!/people/person/nationality’ which just reverse the head and tail compared to the relationship ’/people/person/nationality’. This resulted in 592,213 triplets with 14,951 entities and 1,345 relationships which were randomly split as shown in Table 2. This data set is denoted FB15k in the rest of this section. We also wanted to have large-scale data in order to test TransE at scale. Hence, we created another data set from Freebase, by selecting the most frequently occurring 1 million entities. This led to a split with around 25k relationships and more than 17 million training triplets, which we refer to as FB1M.

[^5]code.google.com/p/wiki-links

Freebase是一个庞大且不断增长的通用事实知识库;目前大约有12亿个三元组和超过8000万个实体。我们使用Freebase创建了两个数据集。首先,为了建立一个用于实验的小数据集,我们选择了同时出现在Wikilinks数据库[^5]中、并且在Freebase中至少被提及100次的实体子集(对实体和关系均如此)。我们还删除了’!/people/person/nationality’之类的关系,它们与’/people/person/nationality’关系相比只是将头和尾对调。这样得到了592,213个三元组,包含14,951个实体和1,345个关系,并按表2所示随机拆分。此数据集在本节的其余部分表示为FB15k。我们还希望拥有大规模数据,以便大规模测试TransE。因此,我们通过选择Freebase中出现频率最高的一百万个实体创建了另一个数据集。这得到了一个包含约25k个关系和超过1,700万个训练三元组的划分,我们称之为FB1M。

4.2 Experimental setup

Evaluation protocol

For evaluation, we use the same ranking procedure as in [3]. For each test triplet, the head is removed and replaced by each of the entities of the dictionary in turn. Dissimilarities (or energies) of those corrupted triplets are first computed by the models and then sorted by ascending order; the rank of the correct entity is finally stored. This whole procedure is repeated while removing the tail instead of the head. We report the mean of those predicted ranks and the hits@10, i.e. the proportion of correct entities ranked in the top 10.

评估协议

为了进行评估,我们使用与[3]中相同的排名程序。对于每个测试三元组,都将头部移开并依次替换为字典中的每个实体。这些损坏的三元组的差异性(或能量)首先由模型计算,然后按升序排序;最终存储正确实体的等级。当去除尾巴而不是头部时整个过程再被重复。我们报告这些预测排名的平均数和hits @ 10,即正确的实体在前10名中所占的比例。

These metrics are indicative but can be flawed when some corrupted triplets end up being valid ones, from the training set for instance. In this case, those may be ranked above the test triplet, but this should not be counted as an error because both triplets are true. To avoid such a misleading behavior, we propose to remove from the list of corrupted triplets all the triplets that appear either in the training, validation or test set (except the test triplet of interest). This ensures that none of the corrupted triplets belongs to the data set. In the following, we report mean ranks and hits@10 according to both settings: the original (possibly flawed) one is termed raw, while we refer to the newer as filtered (or filt.). We only provide raw results for experiments on FB1M.

这些指标具有参考价值,但是当某些损坏的三元组实际上是有效的三元组(例如来自训练集)时,可能会存在缺陷。在这种情况下,这些三元组的排名可能会高于测试三元组,但这不应被视为错误,因为两个三元组都是真实的。为避免这种误导性行为,我们建议从损坏的三元组列表中删除出现在训练、验证或测试集中的所有三元组(所关注的测试三元组除外)。这样可以确保所有损坏的三元组都不属于数据集。下面,我们根据两种设置报告平均排名和hits@10:原始的(可能有缺陷的)设置称为raw,而较新的设置称为filtered(或filt.)。我们仅提供在FB1M上进行实验的raw结果。
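The difference between the raw and filtered settings can be sketched as follows. Everything named here (`rank_tail`, `mean_rank_and_hits`, the toy energy table, the entity names) is hypothetical; only tail prediction is shown, head prediction being symmetric, and ties are broken in favor of the true entity.

```python
def rank_tail(test, entities, energy, known=None):
    """Rank of the true tail among all candidate tails (1 is best).

    If `known` is given (filtered setting), candidates that form another
    triplet of the training, validation or test set are skipped.
    """
    h, l, t = test
    true_energy = energy(h, l, t)
    rank = 1
    for e in entities:
        if e == t:
            continue
        if known is not None and (h, l, e) in known:
            continue
        if energy(h, l, e) < true_energy:
            rank += 1
    return rank

def mean_rank_and_hits(ranks, n=10):
    """Mean rank and proportion of ranks within the top n."""
    return sum(ranks) / len(ranks), sum(r <= n for r in ranks) / len(ranks)

# Toy energies standing in for a trained model (hypothetical values).
toy_energy = {("a", "r", "a"): 0.9, ("a", "r", "b"): 0.1,
              ("a", "r", "c"): 0.2, ("a", "r", "d"): 0.3}

def energy(h, l, t):
    return toy_energy[(h, l, t)]

entities = ["a", "b", "c", "d"]
known = {("a", "r", "b"), ("a", "r", "d")}  # (a, r, b) is a true training fact

raw_rank = rank_tail(("a", "r", "d"), entities, energy)          # b and c are ranked above d
filt_rank = rank_tail(("a", "r", "d"), entities, energy, known)  # b is skipped, only c is above d
```

In the raw setting the valid triplet (a, r, b) is counted against the test triplet; in the filtered setting it is removed from the candidate list before ranking.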

Baselines

The first method is Unstructured, a version of TransE which considers the data as mono-relational and sets all translations to 0 (it was already used as baseline in [2]). We also compare with RESCAL, the collective matrix factorization model presented in [11, 12], and the energy-based models SE [3], SME(linear)/SME(bilinear) [2] and LFM [6]. RESCAL is trained via an alternating least-square method, whereas the others are trained by stochastic gradient descent, like TransE. Table 1 compares the theoretical number of parameters of the baselines to our model, and gives the order of magnitude on FB15k. While SME(linear), SME(bilinear), LFM and TransE have about the same number of parameters as Unstructured for low dimensional embeddings, the other algorithms SE and RESCAL, which learn at least one k × k matrix for each relationship, rapidly need to learn many parameters. RESCAL needs about 87 times more parameters on FB15k because it requires a much larger embedding space than other models to achieve good performance. We did not experiment on FB1M with RESCAL, SME(bilinear) and LFM for scalability reasons in terms of numbers of parameters or training duration.

基准线

第一种方法是Unstructured(非结构化),这是TransE的一个版本,它将数据视为单关系数据并将所有平移设置为0(在[2]中已将其用作基线)。我们还与RESCAL([11,12]中提出的集体矩阵分解模型)以及基于能量的模型SE [3]、SME(线性)/SME(双线性)[2]和LFM [6]进行了比较。RESCAL是通过交替最小二乘法训练的,而其他方法与TransE一样,是通过随机梯度下降训练的。表1将基线的理论参数数量与我们的模型进行了比较,并给出了在FB15k上的数量级。对于低维嵌入,SME(线性)、SME(双线性)、LFM和TransE的参数数量与非结构化大致相同,而其他算法SE和RESCAL需要为每个关系学习至少一个k×k矩阵,因此需要学习的参数数量迅速增长。RESCAL在FB15k上需要约87倍的参数,因为与其他模型相比,RESCAL需要大得多的嵌入空间才能获得良好的性能。出于参数数量或训练时长方面的可扩展性原因,我们没有在FB1M上对RESCAL、SME(双线性)和LFM进行实验。

We trained all baseline methods using the code provided by the authors. For RESCAL, we had to set the regularization parameter to 0 for scalability reasons, as it is indicated in [11], and chose the latent dimension k among {50,250,500,1000,2000} that led to the lowest mean predicted ranks on the validation sets (using the raw setting). For Unstructured, SE, SME(linear) and SME(bilinear), we selected the learning rate among {0.001,0.01,0.1}, k among {20,50}, and selected the best model by early stopping using the mean rank on the validation sets (with a total of at most 1,000 epochs over the training data). For LFM, we also used the mean validation ranks to select the model and to choose the latent dimension among {25,50,75}, the number of factors among {50,100,200,500} and the learning rate among {0.01,0.1,0.5}.

我们使用作者提供的代码训练了所有基线方法。对于RESCAL,我们出于可扩展性原因将正则化参数设置为0(如[11]所示),并在{50,250,500,1000,2000}中选择在验证集上(使用raw设置)平均预测排名最低的潜在维度k。对于非结构化、SE、SME(线性)和SME(双线性),我们在{0.001,0.01,0.1}中选择学习率,在{20,50}中选择k,并根据验证集上的平均排名通过早停选择最佳模型(在训练数据上总共最多1,000个epoch)。对于LFM,我们同样使用验证集上的平均排名来选择模型,并在{25,50,75}中选择潜在维度,在{50,100,200,500}中选择因子数量,在{0.01,0.1,0.5}中选择学习率。

Implementation

For experiments with TransE, we selected the learning rate λ for the stochastic gradient descent among {0.001,0.01,0.1}, the margin γ among {1,2,10} and the latent dimension k among {20,50} on the validation set of each data set. The dissimilarity measure d was set either to the L1 or L2 distance according to validation performance as well. Optimal configurations were: k = 20, λ = 0.01, γ = 2, and d = L1 on Wordnet; k = 50, λ = 0.01, γ = 1, and d = L1 on FB15k; k = 50, λ = 0.01, γ = 1, and d = L2 on FB1M. For all data sets, training time was limited to at most 1,000 epochs over the training set. The best models were selected by early stopping using the mean predicted ranks on the validation sets (raw setting). An open-source implementation of TransE is available from the project webpage.[^6]

[^6]Available at http://goo.gl/0PpKQe

实施

对于TransE实验,我们在每个数据集的验证集中选择了{0.001,0.01,0.1}中随机梯度下降的学习率λ,{1,2,10}中的余量γ和{20,50}中的潜在维k。根据验证性能,也将差异度量d设置为L1或L2距离。最佳配置为:Wordnet上的k = 20,λ= 0.01,γ= 2和d = L1;在FB15k上k = 50,λ= 0.01,γ= 1,d = L1;在FB1M上k = 50,λ= 0.01,γ= 1和d = L2。对于所有数据集,训练时间限制在训练集的最多1,000个纪元。通过尽早停止使用验证集(原始设置)上的平均预测等级,来选择最佳模型。可从项目网页上获得TransE的开源实现。

4.3 Link prediction

Overall results

Table 3 displays the results on all data sets for all compared methods. As expected, the filtered setting provides lower mean ranks and higher hits@10, which we believe are a clearer evaluation of the performance of the methods in link prediction. However, generally the trends between raw and filtered are the same.

总体结果

表3显示了所有比较方法的所有数据集的结果。不出所料,经过过滤的设置可提供较低的平均排名和较高的点击数@ 10,我们认为这是对链接预测中方法性能的更清晰评估。但是,通常原始和过滤后的趋势是相同的。

Our method, TransE, outperforms all counterparts on all metrics, usually with a wide margin, and reaches some promising absolute performance scores such as 89% of hits@10 on WN (over more than 40k entities) and 34% on FB1M (over 1M entities). All differences between TransE and the best runner-up methods are important.

我们的方法TransE在所有指标上都胜过所有对比方法,而且通常优势明显,并且达到了一些有希望的绝对性能得分,例如在WN上(超过4万个实体)hits@10达到89%,在FB1M上(超过1M个实体)达到34%。TransE和最佳次优方法之间的所有差异都很重要。

We believe that the good performance of TransE is due to an appropriate design of the model according to the data, but also to its relative simplicity. This means that it can be optimized efficiently with stochastic gradient. We showed in Section 3 that SE is more expressive than our proposal. However, its complexity may make it quite hard to learn, resulting in worse performance. On FB15k, SE achieves a mean rank of 165 and hits@10 of 35.5% on a subset of 50k triplets of the training set, whereas TransE reaches 127 and 42.7%, indicating that TransE is indeed less subject to underfitting and that this could explain its better performances. SME(bilinear) and LFM suffer from the same training issue: we never managed to train them well enough so that they could exploit their full capabilities. The poor results of LFM might also be explained by our evaluation setting, based on ranking entities, whereas LFM was originally proposed to predict relationships. RESCAL can achieve quite good hits@10 on FB15k but yields poor mean ranks, especially on WN, even when we used large latent dimensions (2,000 on Wordnet).

我们认为,TransE的良好性能既归功于根据数据对模型进行的适当设计,也归功于其相对简单。这意味着可以使用随机梯度有效地对其进行优化。在第3节中,我们表明SE比我们的方案更具表达能力。但是,它的复杂性可能使其很难学习,从而导致性能变差。在FB15k上,SE在训练集的一个包含50k个三元组的子集上达到了165的平均排名和35.5%的hits@10,而TransE达到127和42.7%,这表明TransE确实较少受到欠拟合的影响,这可以解释其更好的性能。SME(双线性)和LFM遭受相同的训练问题:我们始终没能把它们训练得足够好,以使它们充分发挥其能力。LFM的不良结果也可能源于我们基于实体排序的评估设置,而LFM最初是为预测关系而提出的。RESCAL可以在FB15k上获得不错的hits@10,但平均排名较差,尤其是在WN上,即使我们使用了较大的潜在维度(在Wordnet上为2,000)。

The impact of the translation term is huge. When one compares performance of TransE and Unstructured (i.e. TransE without translation), mean ranks of Unstructured appear to be rather good (best runner-up on WN), but hits@10 are very poor. Unstructured simply clusters all entities co-occurring together, independent of the relationships involved, and hence can only make guesses of which entities are related. On FB1M, the mean ranks of TransE and Unstructured are almost similar, but TransE places 10 times more predictions in the top 10.

平移项的影响是巨大的。当比较TransE和非结构化(即不带平移的TransE)的性能时,非结构化的平均排名似乎相当不错(在WN上是最佳次优者),但hits@10却很差。非结构化只是将所有共同出现的实体聚集在一起,而与所涉及的关系无关,因此只能猜测哪些实体是相关的。在FB1M上,TransE和非结构化的平均排名几乎相同,但TransE排进前10名的预测多出10倍。

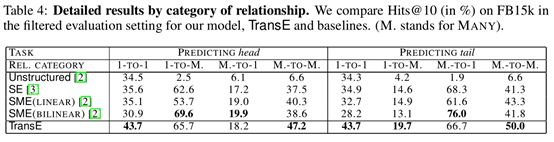

Detailed results

Table 4 classifies the results (in hits@10) on FB15k depending on several categories of the relationships and on the argument to predict for several of the methods. We categorized the relationships according to the cardinalities of their head and tail arguments into four classes: 1-TO-1, 1-TO-MANY, MANY-TO-1, MANY-TO-MANY. A given relationship is 1-TO-1 if a head can appear with at most one tail, 1-TO-MANY if a head can appear with many tails, MANY-TO-1 if many heads can appear with the same tail, or MANY-TO-MANY if multiple heads can appear with multiple tails. We classified the relationships into these four classes by computing, for each relationship ℓ, the averaged number of heads h (respectively tails t) appearing in the FB15k data set, given a pair (ℓ, t) (respectively a pair (h, ℓ)). If this average number was below 1.5 then the argument was labeled as 1 and MANY otherwise. For example, a relationship having an average of 1.2 heads per tail and of 3.2 tails per head was classified as 1-TO-MANY. We obtained that FB15k has 26.2% of 1-TO-1 relationships, 22.7% of 1-TO-MANY, 28.3% of MANY-TO-1, and 22.8% of MANY-TO-MANY.

细节性的结果

表4根据关系的几种类别以及待预测的参数,对几种方法在FB15k上的结果进行分类(按hits@10)。我们根据头和尾参数的基数将这些关系分为四类:1-TO-1、1-TO-MANY、MANY-TO-1、MANY-TO-MANY。如果一个头部最多可以与一个尾部一起出现,则给定的关系为1-TO-1;如果一个头部可以与很多尾部一起出现,则为1-TO-MANY;如果许多头部可以与同一尾部一起出现,则为MANY-TO-1;如果多个头部可以与多个尾部一起出现,则为MANY-TO-MANY。对于每个关系ℓ,给定一对(ℓ,t)(相应地,一对(h,ℓ)),我们计算FB15k数据集中出现的头实体h(相应地,尾实体t)的平均数量,以此将关系划入以上四个类别。如果该平均值小于1.5,则将该参数标记为1,否则标记为MANY。例如,平均每个尾部对应1.2个头部、每个头部对应3.2个尾部的关系被分类为1-TO-MANY。我们得到FB15k中有26.2%的1-TO-1关系,22.7%的1-TO-MANY关系,28.3%的MANY-TO-1关系和22.8%的MANY-TO-MANY关系。
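The categorization rule described above can be written down directly. The sketch below is an illustration with a hypothetical function name (`classify_relationships`) and made-up triplets; the 1.5 threshold is the one stated in the text.

```python
from collections import defaultdict

def classify_relationships(triplets, threshold=1.5):
    """Label each relationship 1-TO-1, 1-TO-MANY, MANY-TO-1 or MANY-TO-MANY."""
    heads = defaultdict(set)  # (relationship, tail) -> heads seen with that pair
    tails = defaultdict(set)  # (head, relationship) -> tails seen with that pair
    for h, l, t in triplets:
        heads[(l, t)].add(h)
        tails[(h, l)].add(t)
    result = {}
    for l in {l for _, l, _ in triplets}:
        head_counts = [len(s) for (rel, _), s in heads.items() if rel == l]
        tail_counts = [len(s) for (_, rel), s in tails.items() if rel == l]
        avg_heads = sum(head_counts) / len(head_counts)  # average heads per tail
        avg_tails = sum(tail_counts) / len(tail_counts)  # average tails per head
        left = "1" if avg_heads < threshold else "MANY"
        right = "1" if avg_tails < threshold else "MANY"
        result[l] = f"{left}-TO-{right}"
    return result

# Hypothetical toy triplets.
toy = [
    ("paris", "capital_of", "france"),
    ("berlin", "capital_of", "germany"),
    ("alice", "born_in", "paris"),
    ("bob", "born_in", "paris"),
    ("carol", "born_in", "paris"),
]
categories = classify_relationships(toy)
```

In this toy data every capital has one country and vice versa, while three people share the same birthplace, so the two relationships fall into different classes.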

These detailed results in Table 4 allow for a precise evaluation and understanding of the behavior of the methods. First, it appears that, as one would expect, it is easier to predict entities on the “side 1” of triplets (i.e., predicting head in 1-TO-MANY and tail in MANY-TO-1), that is when multiple entities point to it. These are the well-posed cases. SME(bilinear) proves to be very accurate in such cases because they are those with the most training examples. Unstructured performs well on 1-TO-1 relationships: this shows that arguments of such relationships must share common hidden types that Unstructured is able to somewhat uncover by clustering entities linked together in the embedding space. But this strategy fails for any other category of relationship. Adding the translation term (i.e. upgrading Unstructured into TransE) brings the ability to move in the embedding space, from one entity cluster to another by following relationships. This is particularly spectacular for the well-posed cases.

表4中的这些详细结果有助于精确评估和理解各方法的行为。首先,正如人们所预期的,预测三元组“1侧”的实体(即在1-to-Many中预测头、在Many-to-1中预测尾)似乎更容易,也就是有多个实体指向它的情形。这些是适定(well-posed)的情况。SME(bilinear)在这类情况下非常准确,因为它们是训练样本最多的情况。Unstructured在1-to-1关系上表现良好:这表明此类关系的参数必然共享某些隐藏类型,而Unstructured能够通过在嵌入空间中将相互关联的实体聚在一起,在一定程度上揭示这些类型。但这种策略对其他任何类别的关系都会失效。加入平移项(即把Unstructured升级为TransE)使模型能够沿着关系在嵌入空间中从一个实体簇移动到另一个实体簇。这一点在适定的情况下尤为显著。

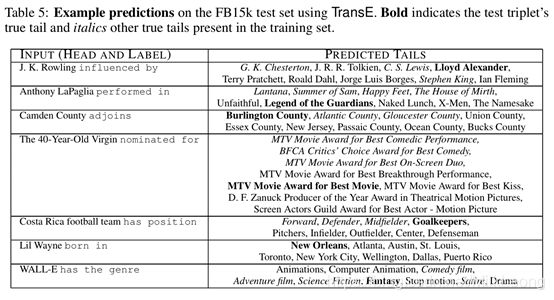

Illustration

Table 5 gives examples of link prediction results of TransE on the FB15k test set (predicting tail). This illustrates the capabilities of our model. Given a head and a label, the top predicted tails (and the true one) are listed. Even if the correct answer is not always top-ranked, the predictions reflect common sense.

例证

表5给出了TransE在FB15k测试集上的链接预测结果示例(预测尾)。这展示了我们模型的能力。给定一个头和一个标签,表中列出了排名最靠前的预测尾(以及真实的尾)。这些示例来自FB15k测试集。即使正确答案并不总是排在首位,这些预测也符合常识。
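As a rough illustration of how predictions like those in Table 5 could be produced: TransE scores a candidate triplet by the dissimilarity between h + ℓ and t, so ranking tails amounts to sorting all entities by that distance. The sketch below assumes the L2 distance (the paper allows L1 or L2) and uses made-up two-dimensional embeddings; the names `rank_tails` and `hit_at_k` are hypothetical and not taken from the authors' code.

```python
import math


def l2_distance(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def rank_tails(head, label, entity_emb, relation_emb, top_k=10):
    """Return the top_k entity names closest to head + label."""
    translated = [a + b for a, b in zip(entity_emb[head], relation_emb[label])]
    scored = [(l2_distance(translated, vec), name) for name, vec in entity_emb.items()]
    scored.sort()  # smallest distance first; ties broken by entity name
    return [name for _, name in scored[:top_k]]


def hit_at_k(head, label, true_tail, entity_emb, relation_emb, k=10):
    """True if the correct tail is among the k best-ranked entities."""
    return true_tail in rank_tails(head, label, entity_emb, relation_emb, top_k=k)


# Invented embeddings, for illustration only.
entity_emb = {
    "paris": [1.0, 0.0],
    "france": [1.0, 1.0],
    "berlin": [3.0, 0.0],
    "germany": [3.0, 1.0],
}
relation_emb = {"capital_of": [0.0, 1.0]}
print(rank_tails("paris", "capital_of", entity_emb, relation_emb, top_k=2))
```

Averaging `hit_at_k` over all test triplets gives a hits@10 figure in the "raw" sense, with every entity of the dictionary kept as a candidate.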

4.4 Learning to predict new relationships with few examples

Using FB15k, we wanted to test how well methods could generalize to new facts by checking how fast they were learning new relationships. To that end, we randomly selected 40 relationships and split the data into two sets: a set (named FB15k-40rel) containing all triplets with these 40 relationships and another set (FB15k-rest) containing the rest. We made sure that both sets contained all entities. FB15k-rest has then been split into a training set of 353,788 triplets and a validation set of 53,266, and FB15k-40rel into a training set of 40,000 triplets (1,000 for each relationship) and a test set of 45,159. Using these data sets, we conducted the following experiment: (1) models were trained and selected using FB15k-rest training and validation sets, (2) they were subsequently trained on the training set FB15k-40rel but only to learn the parameters related to the fresh 40 relationships, (3) they were evaluated in link prediction on the test set of FB15k-40rel (containing only relationships unseen during phase (1)). We repeated this procedure while using 0, 10, 100 and 1000 examples of each relationship in phase (2).

我们希望利用FB15k,通过考察各方法学习新关系的速度,来测试它们对新事实的泛化能力。为此,我们随机选择了40个关系并将数据分成两个集合:一个集合(名为FB15k-40rel)包含涉及这40个关系的所有三元组,另一个集合(FB15k-rest)包含其余三元组。我们确保两个集合都包含所有实体。然后,将FB15k-rest划分为包含353,788个三元组的训练集和包含53,266个三元组的验证集,并将FB15k-40rel划分为包含40,000个三元组(每个关系1,000个)的训练集和包含45,159个三元组的测试集。使用这些数据集,我们进行了以下实验:(1)使用FB15k-rest的训练集和验证集对模型进行训练和选择,(2)随后在FB15k-40rel训练集上继续训练模型,但仅学习与这40个新关系相关的参数,(3)在FB15k-40rel测试集上进行链接预测评估(该测试集仅包含阶段(1)中未见过的关系)。我们在阶段(2)中分别使用每个关系的0、10、100和1000个示例重复了这一过程。
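The data preparation described above can be sketched as follows. This is a hedged illustration, not the authors' pipeline: the function names are hypothetical, triplets are assumed to be `(head, label, tail)` tuples, and the check that both sets contain all entities is left out.

```python
import random


def split_by_relationship(triplets, held_out_labels):
    """Split triplets into those using the held-out relationships and the rest."""
    held_out = [x for x in triplets if x[1] in held_out_labels]
    rest = [x for x in triplets if x[1] not in held_out_labels]
    return held_out, rest


def sample_examples_per_relationship(train_triplets, k, seed=0):
    """Keep at most k training triplets for each relationship (phase 2)."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    by_label = {}
    for triplet in train_triplets:
        by_label.setdefault(triplet[1], []).append(triplet)
    sampled = []
    for label in sorted(by_label):
        examples = by_label[label]
        sampled.extend(rng.sample(examples, min(k, len(examples))))
    return sampled


# Invented triplets, for illustration only.
data = [("a", "r1", "b"), ("b", "r1", "c"), ("c", "r1", "a"),
        ("a", "r2", "c"), ("b", "r3", "a")]
new_rel, rest = split_by_relationship(data, {"r1"})
for k in (0, 10, 100, 1000):  # the four settings used in the experiment
    print(k, len(sample_examples_per_relationship(new_rel, k)))
```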

Results for Unstructured, SE, SME(linear), SME(bilinear) and TransE are presented in Figure 1. The performance of Unstructured is the best when no example of the unknown relationship is provided, because it does not use this information to predict. But, of course, this performance does not improve while providing labeled examples. TransE is the fastest method to learn: with only 10 examples of a new relationship, the hits@10 is already 18% and it improves monotonically with the number of provided samples. We believe the simplicity of the TransE model makes it able to generalize well, without having to modify any of the already trained embeddings.

图1给出了Unstructured、SE、SME(linear)、SME(bilinear)和TransE的结果。当没有提供未知关系的任何示例时,Unstructured的性能最佳,因为它在预测时并不使用这一信息。但是,当然,在提供带标签的示例后,其性能也不会提高。TransE是学习最快的方法:仅用一个新关系的10个示例,hits@10就已经达到18%,并且随着所提供样本数量的增加而单调提高。我们相信,TransE模型的简单性使其能够很好地泛化,而无需修改任何已训练好的嵌入。
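To make "without having to modify any of the already trained embeddings" concrete, here is a minimal sketch of fitting a single new relation vector while the entity embeddings stay frozen. It is an assumption-laden toy, not the authors' training code: it uses the squared L2 distance for a simple gradient, takes explicit (true, corrupted) pairs instead of sampling corruptions at random, initialises the vector at the origin, and the name `learn_new_relation` is hypothetical.

```python
def sq_dist(h, l, t):
    """Squared L2 distance between the translated head h + l and the tail t."""
    return sum((hi + li - ti) ** 2 for hi, li, ti in zip(h, l, t))


def learn_new_relation(pairs, entity_emb, dim, margin=1.0, lr=0.1, epochs=50):
    """Fit one relation vector; entity embeddings are read but never updated.

    pairs: list of ((h, t), (h_c, t_c)) entity-name pairs, i.e. a true
    (head, tail) and a corrupted counterpart for the same new relationship.
    """
    rel = [0.0] * dim  # assumed initialisation at the origin
    for _ in range(epochs):
        for (h, t), (hc, tc) in pairs:
            e_h, e_t = entity_emb[h], entity_emb[t]
            e_hc, e_tc = entity_emb[hc], entity_emb[tc]
            # Margin-based ranking loss on one (true, corrupted) pair.
            loss = margin + sq_dist(e_h, rel, e_t) - sq_dist(e_hc, rel, e_tc)
            if loss <= 0:
                continue  # margin already satisfied, no update
            for i in range(dim):
                grad = 2 * (e_h[i] + rel[i] - e_t[i]) - 2 * (e_hc[i] + rel[i] - e_tc[i])
                rel[i] -= lr * grad  # only the relation vector moves
    return rel


# Invented frozen embeddings: the true pair is (a, b), the corrupted tail is c.
entity_emb = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [0.0, 1.0]}
rel = learn_new_relation([(("a", "b"), ("a", "c"))], entity_emb, dim=2)
print(rel, sq_dist(entity_emb["a"], rel, entity_emb["b"]))
```

Because only `rel` is updated, each new relationship costs a single vector of parameters, which is consistent with the fast learning reported for TransE in Figure 1.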

5 Conclusion and future work

We proposed a new approach to learn embeddings of KBs, focusing on the minimal parametrization of the model to primarily represent hierarchical relationships. We showed that it works very well compared to competing methods on two different knowledge bases, and is also a highly scalable model, whereby we applied it to a very large-scale chunk of Freebase data. Although it remains unclear to us if all relationship types can be modeled adequately by our approach, by breaking down the evaluation into categories (1-to-1, 1-to-Many, …) it appears to be performing well compared to other approaches across all settings.

我们提出了一种学习知识库嵌入的新方法,着力于模型的最小参数化,以主要表示层次关系。我们证明,在两个不同的知识库上,它与竞争方法相比效果非常好,并且是一个高度可扩展的模型,因此我们将其应用于一个非常大规模的Freebase数据子集。尽管我们尚不清楚是否所有关系类型都能被我们的方法充分建模,但通过将评估按类别(1-to-1、1-to-Many……)细分可以看出,与其他方法相比,它在所有设置下都表现良好。

Future work could analyze this model further and concentrate on exploiting it in more tasks, in particular in applications such as learning word representations inspired by [8]. Combining KBs with text as in [2] is another important direction where our approach could prove useful. Hence, we recently fruitfully inserted TransE into a framework for relation extraction from text [16].

未来的工作可以进一步分析该模型,并致力于将其用于更多任务,尤其是受[8]启发的词表示学习等应用。如[2]所述,将知识库与文本相结合是我们的方法可能发挥作用的另一个重要方向。因此,我们最近已成功地将TransE嵌入到一个从文本中抽取关系的框架中[16]。

Acknowledgements

This work was carried out in the framework of the Labex MS2T (ANR-11-IDEX-0004-02), and funded by the French National Agency for Research (EVEREST-12-JS02-005-01). We thank X. Glorot for providing the code infrastructure, T. Strohmann and K. Murphy for useful discussions.

致谢

这项工作是在Labex MS2T(ANR-11-IDEX-0004-02)的框架内进行的,并由法国国家研究局(EVEREST-12-JS02-005-01)资助。感谢X. Glorot提供的代码基础结构,感谢T. Strohmann和K. Murphy的有益讨论。

References

[1] K. Bollacker, C. Evans, P. Paritosh, T. Sturge, and J. Taylor. Freebase: a collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD international conference on Management of data, 2008.

[2] A. Bordes, X. Glorot, J. Weston, and Y. Bengio. A semantic matching energy function for learning with multi-relational data. Machine Learning, 2013.

[3] A. Bordes, J. Weston, R. Collobert, and Y. Bengio. Learning structured embeddings of knowledge bases. In Proceedings of the 25th Annual Conference on Artificial Intelligence (AAAI), 2011.

[4] X. Glorot and Y. Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS), 2010.

[5] R. A. Harshman and M. E. Lundy. Parafac: parallel factor analysis. Computational Statistics & Data Analysis, 18(1):39–72, Aug. 1994.

[6] R. Jenatton, N. Le Roux, A. Bordes, G. Obozinski, et al. A latent factor model for highly multirelational data. In Advances in Neural Information Processing Systems (NIPS 25), 2012.

[7] C. Kemp, J. B. Tenenbaum, T. L. Griffiths, T. Yamada, and N. Ueda. Learning systems of concepts with an infinite relational model. In Proceedings of the 21st Annual Conference on Artificial Intelligence (AAAI), 2006.

[8] T. Mikolov, I. Sutskever, K. Chen, G. Corrado, and J. Dean. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems (NIPS 26), 2013.

[9] G. Miller. WordNet: a Lexical Database for English. Communications of the ACM, 38(11):39–41, 1995.

[10] K. Miller, T. Griffiths, and M. Jordan. Nonparametric latent feature models for link prediction. In Advances in Neural Information Processing Systems (NIPS 22), 2009.

[11] M. Nickel, V. Tresp, and H.-P. Kriegel. A three-way model for collective learning on multi-relational data. In Proceedings of the 28th International Conference on Machine Learning (ICML), 2011.

[12] M. Nickel, V. Tresp, and H.-P. Kriegel. Factorizing YAGO: scalable machine learning for linked data. In Proceedings of the 21st international conference on World Wide Web (WWW), 2012.

[13] A. P. Singh and G. J. Gordon. Relational learning via collective matrix factorization. In Proceedings of the 14th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), 2008.

[14] R. Socher, D. Chen, C. D. Manning, and A. Y. Ng. Learning new facts from knowledge bases with neural tensor networks and semantic word vectors. In Advances in Neural Information Processing Systems (NIPS 26), 2013.

[15] I. Sutskever, R. Salakhutdinov, and J. Tenenbaum. Modelling relational data using Bayesian clustered tensor factorization. In Advances in Neural Information Processing Systems (NIPS 22), 2009.

[16] J. Weston, A. Bordes, O. Yakhnenko, and N. Usunier. Connecting language and knowledge bases with embedding models for relation extraction. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2013.

[17] J. Zhu. Max-margin nonparametric latent feature models for link prediction. In Proceedings of the 29th International Conference on Machine Learning (ICML), 2012.