python 小知识点

1、获取当前文件路径

now_file_path = os.path.dirname(file)

2、对列表删除重复元素,且不改变原有顺序

list = sorted(set(list_data), key=list_data.index)

3、pandas数据可以矩阵乘法

pd.mean()求平均;.min();.max()

4、字典按某值排序,并取前几个

sort_data = dict(sorted(dict_data.items(), key=lambda x: x[1], reverse=True))

most_near = list(sort_data .keys())[0:choose_num]

#reverse=True是升序

5、pd按条件删除

df = df.drop(df[(df[‘a’] >= “500000”) & (df[‘b’] < “600000”)].index)

6、list交集、并、差

print list(set(a).intersection(set(b)))

print list(set(a).union(set(b)))

print list(set(b).difference(set(a))) # b中有而a中没有的

7、pandas按条件去重,不改变原始数据

data = pd_data.drop_duplicates(subset=[‘井1’, ‘井2’])

#去重后变数组,不能用list

data= np.array(pd_data.drop_duplicates(subset=[‘井1’, ‘井2’])[[‘井1’, ‘井2’]])

#去除重复列

curves_datas = curves_datas.loc[:, ~curves_datas.columns.duplicated()]

#去除重复列,列不相同时去除失败

curves_datas = curves_datas.T.drop_duplicates().T

#pd按指定顺序排序

list_custom = ["CON_Cal_标准测井", 'CON1_标准测井', "CON_Cal_3700测井", 'CAL_标准测井', 'Cs_标准测井']

n_sort_all_curve_sim_k_split['Service_Name'] = n_sort_all_curve_sim_k_split['Service_Name'].astype('category')

n_sort_all_curve_sim_k_split['Service_Name'].cat.reorder_categories(list_custom, inplace=True)

n_sort_all_curve_sim_k_split.sort_values('Service_Name', inplace=True)

8、pd按条件搜索

#这样会令其他字段都为NAN

part_data = big_sim_choose_curve[big_sim_choose_curve[[‘井1’, ‘井2’]] == two_well_name]

#这样是正确的

part_data = big_sim_choose_curve[(big_sim_choose_curve[‘井1’] == two_well_name[0]) & (big_sim_choose_curve[‘井2’] == two_well_name[1])]

#pd某列为列表,在列表中查找符合要求的所有pd

pd_data = each_split_ref[each_split_ref[‘对应参考井’].apply(lambda x: well_i == x)]

#删除为’‘的数据

pds = pd.DataFrame([[1,2,3],[1,2,“”],[1,“b”,1],[2,3,4]],columns=[“a”,“b”,“c”])

t = np.frompyfunc(lambda x:not isinstance(x,str),1,1)(pds.values)

or t = np.frompyfunc(lambda x: not x==’', 1, 1)(data.values)

new_pds = pds.iloc[t.sum(axis=1)==t.shape[1]]

#统计每行为空的个数

null_sum = file_data.isna().sum(axis=1) + (file_data == ‘’).sum(axis=1)

#null_sum= file_data.apply(lambda x: x.value_counts().get(0, 0), axis=1)#value_counts统计每个元素出现的次数,get0,0第一个0是获取0的个数,第二个是将其它的个数置为0–如果有’',处理会有问题

#统计有空的行的索引,并在元数据中删除

null_index = null_sum[null_sum>0].index

file_data = file_data.drop(null_index)

9、字典按key删除

dict_data.pop(key)

new_data = {k:v for k, v in data_ori.items() if v < 0}

10、嵌套list展开

def flatten(li):

return sum(([x] if not isinstance(x, list) else flatten(x) for x in li), [])

#把二维矩阵展开

from itertools import chain # 嵌套list展开为一维向量

all_dc_sim_array = list(chain.from_iterable(all_dc_sim))

11、pandas按条件搜索在某list中的值

choose_part_data = pd_data[pd_data[‘井1’].isin(list_data)]

choose_part_data = pd_data[~(pd_data[‘井1’].isin(list_data))]#不在list中的

data = data[~data[‘fc_name’].str.contains(‘油层底深|井底|岩’)]#模糊按条件搜索

12、获取字典中某些key对应的值

list2 = list(map(dict_data.get, keys))

13、字典拼接

a.update(b)

14.pd按某字段排序

f_c = list(curves_datas.columns)

curves_datas = curves_datas.sort_values(by=f_c[0], ascending=True) # 按depth排序

15、读取xls的sheet

wb = load_workbook(now_path + “/7.2交流拿到的数据/229-80.块分层数据.xlsx”)

sheetnames = wb.sheetnames

dc_data = list(wb[choose_sheet_name].values)

dc_data = pd.DataFrame(dc_data[1:], columns=dc_data[0])#对应井的地层数据

data = xlrd.open_workbook(‘E:\电网故障数据1.xlsx’) # 获取数据

table = data.sheet_by_name(‘Sheet1’)

rownum = table.nrows

colnum = table.ncols - 1

x = np.zeros((rownum, colnum))

for i in range(rownum):

for j in range(colnum):

x[i, j] = table.cell(i, j).value

16.pd中所有数据转为float

df.convert_objects(convert_numeric=True)

final_mark_dc_combine = final_mark_dc_combine.apply(pd.to_numeric, errors=‘ignore’)

def trans(x):

try:

return float(x)

except:

return x

curves_datas = curves_datas.applymap(trans)

17、安装第三方库

例如:通过清华的镜像安装requests

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple requests

pip uninstall xlrd

pip install xlrd==1.1.0

18、pd修改某值

curve_dc_data.loc[i:i, (‘z’)] = 10

curve_dc_data[‘z’].iloc[i] = 10

pd_data.loc[pd_data[‘a’] == 1, ‘b’] = 100

19、pd修改列名

pd.rename(columns = {‘C’:‘change_c’}, inplace = True)

20、pd按行列删除

#删除深度重复的地方

file_data = file_data.drop_duplicates(subset=‘DEPT’)

#删除每行不为0.125整数的数据,保存

file_data = file_data[(file_data[co] % 0.125) == 0]

#删除任意有空的列

file_data = file_data.dropna(axis=1, how=“all”)

#删除任意有空的行

file_data = file_data.dropna(axis=0, how=“all”)

#删除垂深为空的部分

file_data.dropna(subset=[‘z’], inplace=True)

21、查看文件的编码格式,打开文件,防止中文乱码

f = open(path, ‘rb’)

f_charinfo = chardet.detect(f.read())[‘encoding’]

print(“f_charinfo”, f_charinfo)

f = open(path, encoding=f_charinfo)

lines = f.readlines()

lines[i] = lines[i].encode(f_charinfo).decode(‘gbk’).encode(‘utf-8’).decode(‘utf-8’)#防止中文乱码

22、迭代器产生索引对

product(*loop_val1)

——[(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)…]

23、str变字典

obj_predict = eval(obj_predict)

24、字符串型列表转成真正的列表

读取csv文件,里面的列表为str型

from ast import literal_eval

new_list = literal_eval(c_data)

25、str从第index位开始查找指定值

str.index(‘|’, index)

26、对列表用某字符连接,形成字符串str

final_data = ‘;’.join(data_list)

26、pd重置行索引

pd_data= pd_data.reset_index(drop=True)#重置索引

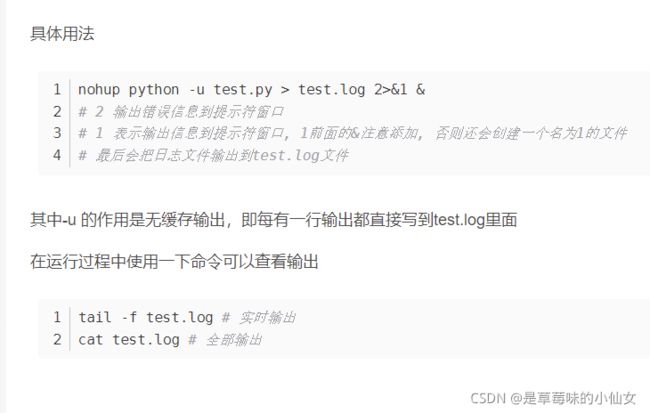

27、linux后台运行python

nohup python -u flush.py > flush.log 2>&1 &#后台执行

nohup python -u flush.py > flush.out 2>&1 &#后台执行

其中-u为无缓冲输出

ps -ef | grep python#查看进程

killall -9 python

kill -s 9 125936#关闭进程 125936为进程号

ps aux|grep python|grep -v grep|cut -c 9-15|xargs kill -9 #关闭全部python进程

#查找并关闭某些进程

ps -ef | grep python | grep “第三个加权模型.py”

ps -ef | grep python | grep “第三个加权模型.py” | awk ‘{print $2}’ | xargs kill -9

tail -f flush.log # 实时输出

cat # 全部输出

free -h#查看内存

top #查看cpu

lscpu #查看cpu个数

#tf和torch是否调用GPU

tf.config.experimental.list_physical_devices(‘GPU’)

tf.test.is_gpu_available()

torch.cuda.is_available()

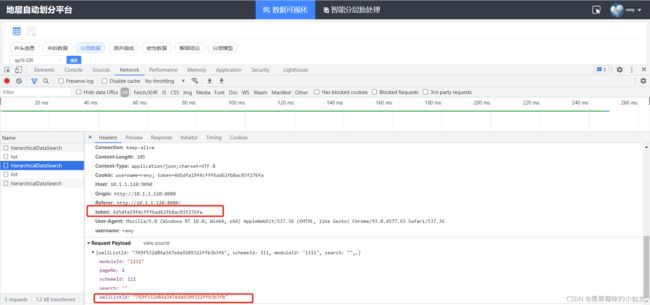

28、pd转json存redis

redisClient = redis.StrictRedis(host=‘10.1.1.120’, port=6379, db=15, decode_responses=True, charset=‘UTF-8’,

encoding=‘UTF-8’)

modelStratums = modelStratums.to_dict(orient=‘index’)#orient='index’横向转

modelStratums = json.dumps(modelStratums)

redisClient.hset(modelData_token, ‘modelStratums’, modelStratums)

29、redis读数据转pd

load1 = redisClient.hget(‘modelData:token:’, ‘modelCrv’) # 从redis中获取

load1 = json.loads(load1)

data = pd.DataFrame(load1)

data = pd.DataFrame(data.values.T, columns=data.index)#转置

30、字典中按找到某些key对应的值

#字典:crv_id_name;某些key:final_choose_curve_id

final_choose_curve = list(itemgetter(*final_choose_curve_id)(crv_id_name))

31、随机选择list中的几个数

population = [‘s’, ‘as’, ‘saf’, ‘ss’, ‘12’, ‘d2’]

k = 5

b = sample(population, k)

list = sorted(set(b), key=population.index)

32、存csv防止中文乱码

well_curve_data_new.to_csv(save_path + well_name_csv, encoding=‘utf_8_sig’, index=False)

33、转码

well_name = well_name.encode(‘’ISO-8859-1).decode(‘gbk’).encode(‘utf-8’).decode(‘utf-8’)

33、plt将y坐标翻转

plt.gca().invert_yaxis()

34、正则表达式判断字符串中的中英数字

letter_num=re.findall(‘[a-zA-Z]’,str)

digit_num = re.findall(‘[0-9]’,str)

chinese_num = re.findall(‘([\u4e00-\u9fa5])’,str)

re.findall(‘[1’, data)#中文数字,找到中文后的数字

35、嵌套list长度不一致,补齐,转为np.array

#如果行数不足,则用0补齐

max_line = max(list(map(lambda x: len(x), file)))#列表中行数最多的个数

file = list(map(lambda x: x + [0] * (max_line - len(x)), file))

file = np.array(file)

36、嵌套list补齐,转为np.array

#如果行数不足,则补齐

max_line = max(list(map(lambda x: len(x), file)))#列表中行数最多的个数

file = list(map(lambda x: x + [0] * (max_line - len(x)), file))

37、读取excel中某行,拼成str

#要用map,因为读取的时候,会把数字变成浮点型,而不是str

line_str = ‘’.join(map(str, line))

38、处理pd读excel的nan

pandas默认读取空字符串时读出的是nan,在使用 pandas.read_excel(file)这个方法时可以在后面加上keep_default_na=False,这样读取到空字符串时读出的就是’'而不是nan了:

one_d = [a_ for a_ in one_d if a_ == a_]#删除列表中空值

39、去除list中的NAT时间格式

将整个list转换为str,再删除NAT

u_data = [str(i) for i in data[1] if (i != ‘’)]

u_data = [i for i in u_data if (i != ‘NaT’)]

40、字典删除部分数据

have_del_data = list(set(list(final_mark_dc_dict.keys())).difference(set(all_choose_dc)))

list(map(final_mark_dc_dict.pop, have_del_data))

41、根据倾斜角方位角获取偏移和垂深

如果为第一行则默认上一层数据为0

X偏移=上一层x偏移+(当前测深-上一层测深)*Math.sin(Math.toRadians(倾斜角))*Math.cos(Math.toRadians(方位角));

Y偏移=上一层Y偏移+(当前测深-上一层测深)*Math.sin(Math.toRadians(倾斜角))*Math.sin(Math.toRadians(方位角));

垂深=上一层垂深+(当前测深-上一层测深)*Math.cos(Math.toRadians(倾斜角));

42、获取sdk接口数据

url = "http://188.188.65.31:9000/well/getDirSurveyListByWellListID"

headers = {"token":"757c0c66-c21d-4d5f-8a67-9f5f65770936","Content-Type":"application/json"}#不加Content-Type会报错

datas = json.dumps({

"wellListId": 3531

})

r = requests.post(url, data=datas, headers=headers)#获取请求

# print(r.text)

# print(r.content.decode("utf-8")) #decode解压缩展示中文

deviation_data = json.loads(r.content.decode("utf-8"))

deviation_data = pd.DataFrame(deviation_data)

43、获取字典中最大值对应的key

max_key = max(data, key=lambda k: data[k])

44、统计list中元素出现的字数

1)b = dict(zip(*np.unique(lst, return_counts=True)))

2)from collections import Counter

dict(Counter(lst))

45、监控日志

tail -f nohup.out

46、忽略nan求均值

mean = np.nanmean(data)

std = np.nanstd(data)

47、根据某函数过滤序列

def is_odd(n):

return n % 2 == 1

#过滤奇数

newlist = filter(is_odd, [1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

48、启动tf

source activate tensorflow35

source deactivate tensorflow35

50、Xshell滚屏

Ctrl+S:锁定当前屏幕

Ctrl+Q:解锁当前屏幕

51、tf读模型参数

file_name = “./第三个模型/”

model_name = “three_model.ckpt-160”

checkpoint_path = os.path.join(file_name, model_name)

reader = tf.train.NewCheckpointReader(checkpoint_path) # 读取参数

var_to_shape_map = reader.get_variable_to_shape_map()

print(“w1”, reader.get_tensor(‘w1’))

print(“w2”, reader.get_tensor(‘w2’))

for key in var_to_shape_map:

print("tensor_name: ", key)

print(reader.get_tensor(key))

52、数据one_hot编码

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import OneHotEncoder

# define example

# integer encode

label_encoder = LabelEncoder()

integer_encoded = label_encoder.fit_transform(label)

print(integer_encoded)

# binary encode

onehot_encoder = OneHotEncoder(sparse=False)

integer_encoded = integer_encoded.reshape(len(integer_encoded), 1)

onehot_encoded = onehot_encoder.fit_transform(integer_encoded)

print("onehot_encoded", onehot_encoded)

53、判断数据类型

#判断是否为某类型

if isinstance(all_data_trajectory_dict[k], pd.DataFrame):

print('ok)

#判断是否为NoneType

if data is None:

print(“is_none”)

54、linux环境下发送post请求 curl

curl --location --request POST ‘填写url’

–header ‘xxxx: xxxxxxxxxxxxx’

–header ‘Content-Type: application/json’

–data ‘JSON格式’

例子:

curl --location --request POST ‘http://188.188.65.31:9000/proj/getPickDataByWellIDSchemeID’

–header ‘token: ff1c5fe0-6942-4935-a227-a909e96a0178’

–header ‘Content-Type: application/json’

–data ‘{

“wellId”: 20839,

“schemeId”: 327

}’

右键->检查

55、pd错位

df.shift(1)#向下错一位

df.shift(-1)#向上错一位

df.shift(axis=1, periods=1)#向右错一位(列错位)

56、LabelEncoder编码

encoder = LabelEncoder()

values[:, 4] = encoder.fit_transform(values[:, 4])#组合风向改变编码方式

57、pycharm自动填充import

Alt+Enter

58、list/pd切片,取奇数偶数位

df.iloc[1::2]取偶数位

df.iloc[::2]取奇数位

df.iloc[::3]每隔两位取一个数

59、文件读取

a = file.readlines()

file.seek(0)#把文件指针指向头,不然b会为空

b = file.read()

60、paddle安装命令

python -m pip install paddlepaddle-gpu==2.2.1 -i https://mirror.baidu.com/pypi/simple

pip install paddlepaddle -i https://pypi.douban.com/simple

61、打乱数据集

train_random_index = np.random.permutation(len(final_data)) # 对训练集生成无序的数字

#对数据集打乱(如果数据集可以转成np.array,直接参考标签的打乱方式)

r = list(tuple(zip(final_data,train_random_index)))

r.sort(key=lambda final_data:final_data[1])

x = list(np.array®[:,0])

#对标签打乱

label_data = np.array(final_label)[train_random_index]

62、重采样

# 重采样,解决数据不平衡

# print("采样前:", Counter(y_train))

# over_samples = SMOTE(random_state=0)

# x_train, y_train = over_samples.fit_resample(x_train, y_train)

# print("采样后", Counter(y_train))

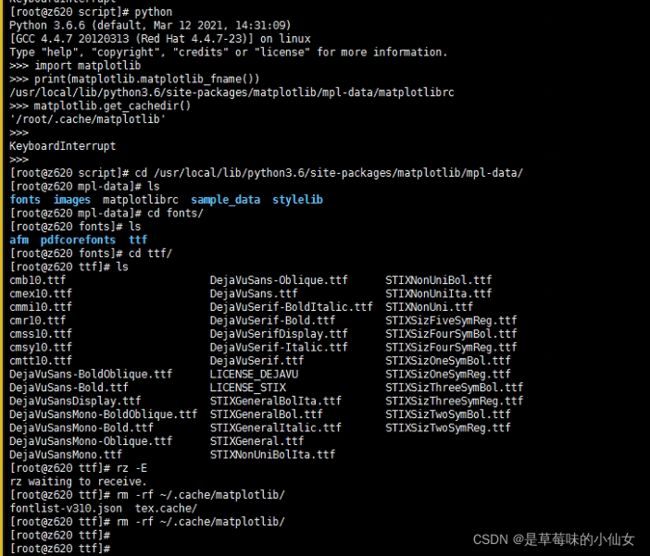

63、linux没有GUI下作图

#-- coding: utf-8 --

import matplotlib

matplotlib.use(‘agg’)

import matplotlib.pyplot as plt

64、sklearn归一化 反归一化

from sklearn import preprocessing

import joblib

x = np.array([[2, 2, 2, 2]])

x = x.reshape([x.shape[1], 1])

print(“x”, x)

min_max_scaler = preprocessing.MinMaxScaler()#默认为范围0~1,拷贝操作

#min_max_scaler = preprocessing.MinMaxScaler(feature_range = (1,3),copy = False)#范围改为1~3,对原数组操作

x_minmax = min_max_scaler.fit_transform(x)

joblib.dump(min_max_scaler, ‘./scalar01.m’)

print(“x_minmax”, x_minmax)

min_max_scaler = joblib.load(os.path.join(sys.path[0], “scalar01.m”))

#X = min_max_scaler.fit_transform(x_minmax)

origin_data = min_max_scaler.inverse_transform(x_minmax)

65、linux下缺少SimHei字体

参考:https://www.cnblogs.com/muchi/articles/11946852.html

66 获取文件下的所有xlsx文件路径

path = os.path.join(sys.path[0], ‘petrel预处理数据’)

data = glob.glob(os.path.join(path, ‘*.xlsx’))

获取上级目录

self.path = os.path.join(os.path.abspath(os.path.dirname(os.getcwd())), ‘projFile’, wellListId) # 平台调用时路径有问题

self.path = os.path.join(os.path.abspath(os.path.join(file, “…”, “…”)), ‘projFile’, wellListId)

67 linux conda

查看conda环境:conda env list

创建环境:conda create -n 环境名 python==3.8

切换conda环境:source activate 环境名

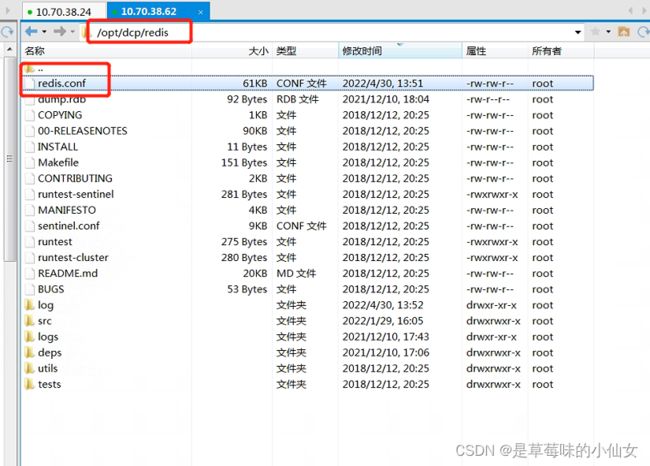

68 linux redis

ps -ef | grep redis (检测是否启动Redis);

2、# /opt/dcp/redis/src/redis-server /opt/dcp/redis/redis.conf尝试执行redis的启动命令

\u4e00-\u9fa5 ↩︎