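Handwritten digit recognition with PyTorch: exporting the weights and implementing inference (forward propagation) in C

Preface

Keywords: FPGA, convolutional neural network, PyTorch

When handwritten digit recognition is deployed on an FPGA or another embedded target, frameworks such as TensorFlow and PyTorch are not available. The network therefore has to be trained on a PC first; the weights are then exported, and only the forward pass is implemented on the hardware.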

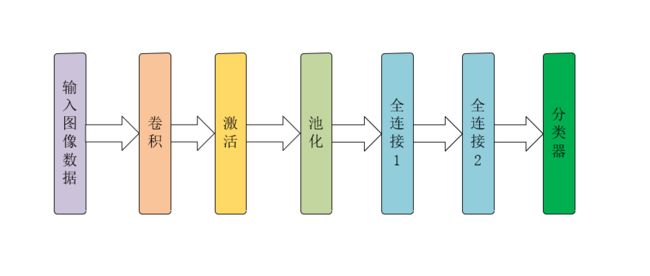

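This post uses the MNIST handwritten digit dataset. I first built a convolutional neural network with one convolutional layer, one pooling layer and two fully connected layers. The structure is as follows:

MNIST handwritten digit dataset: the images are 28x28 grayscale. Training set: 60,000 images; test set: 10,000 images

Convolutional layer: 30 kernels of size 5x5

Activation function: ReLU()

Pooling: max pooling

Before implementing the whole model, you need a clear overall picture of your network:

The input is 28x28. After the 30 5x5 kernels the data becomes 30x24x24, and after pooling it becomes 30x12x12 = 4320 values. Fully connected layer 1 maps these 4320 values to 100, and fully connected layer 2 maps the 100 values to 10 outputs. The index of the largest output is the predicted digit.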
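The sizes above follow from the usual output-size formula for a sliding window. Here is a minimal sketch of that arithmetic; the helper name `conv_out_size` is my own and not part of any library, and it assumes stride 1 with no padding for the convolution and a non-overlapping 2x2 window for the pooling.

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

# 28x28 input, 5x5 kernel, stride 1, no padding
side_after_conv = conv_out_size(28, kernel=5)
# 2x2 max pooling with stride 2
side_after_pool = conv_out_size(side_after_conv, kernel=2, stride=2)
# 30 feature maps flattened for the first fully connected layer
flat_features = 30 * side_after_pool * side_after_pool

print(side_after_conv, side_after_pool, flat_features)
```

If you change the kernel size or the number of kernels, rerunning this tells you the input size the first fully connected layer needs.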

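I. Training on the dataset with PyTorch

Straight to the code. There are plenty of tutorials online about building a convolutional neural network for MNIST digit recognition: this video walks you through it step by step.

Here is my code: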

import random
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

## Data
import torch
import torchvision
from torch.utils.data import DataLoader

## PyTorch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

## Random seed: fixing it makes weight initialization and data shuffling repeatable between runs
random_seed = 1
torch.manual_seed(random_seed)

## Hyperparameters
n_epochs = 3
batch_size_train = 64
batch_size_test = 1000
learn_rate = 0.01
momentum = 0.5
log_interval = 10

## Output settings (print NumPy arrays in full, without ellipses)
np.set_printoptions(threshold = np.inf)

## Load the datasets
train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        './data/', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size_train, shuffle=True
)

test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        './data/', train=False, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size_test, shuffle=True
)

examples = enumerate(test_loader)
batch_idx, (example_data, example_target) = next(examples)

### Model definition: conv --> ReLU --> pooling --> fc1 --> ReLU --> fc2 --> log-softmax (to change the model, edit it here)
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 30, kernel_size=5)
        self.fc1 = nn.Linear(4320, 100)
        self.fc2 = nn.Linear(100, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = x.view(-1, 4320)
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)

network = Net()
optimizer = optim.SGD(network.parameters(), lr=learn_rate, momentum=momentum)

train_losses = []
train_counter = []
test_losses = []
# A list rather than a generator, so it can be indexed and reused (for example when plotting)
test_counter = [i * len(train_loader.dataset) for i in range(n_epochs + 1)]

## Training routine
def train(epoch):
    network.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        optimizer.zero_grad()
        output = network(data)  # forward pass
        loss = F.nll_loss(output, target)  ## loss function
        loss.backward()  # backpropagation
        optimizer.step()  # parameter update
        if batch_idx % log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
            train_losses.append(loss.item())
            train_counter.append(
                (batch_idx * batch_size_train) + ((epoch - 1) * len(train_loader.dataset)))
            torch.save(network.state_dict(), './model.pth')  ## note: this saves the trained model parameters
            torch.save(optimizer.state_dict(), './optimizer.pth')

def test():
    network.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            output = network(data)
            test_loss += F.nll_loss(output, target, reduction='sum').item()
            pred = output.data.max(1, keepdim=True)[1]
            correct += pred.eq(target.data.view_as(pred)).sum().item()  # count correct predictions
    test_loss /= len(test_loader.dataset)
    test_losses.append(test_loss)
    print('\nTest set: Avg. loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))

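## Training
# for epoch in range(1, n_epochs + 1):
#     train(epoch)
#     test()

## Load the trained model parameters. Note: once training has finished, comment out the lines above; training only needs to run once
state_dict = torch.load('./model.pth')
network.load_state_dict(state_dict)

## A digit I prepared for testing; I think it is a 6
# network.eval()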

# data = torch.tensor([0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,127 ,221 ,52 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,64 ,229 ,219 ,104 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,13 ,235 ,140 ,4 ,3 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,118 ,227 ,25 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,236 ,133 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,13 ,243 ,93 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,85 ,243 ,21 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,189 ,236 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,1 ,208 ,169 ,0 ,0 ,0 ,0 ,0 ,0 ,64 ,151 ,151 ,135 ,74 ,1 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,26 ,254 ,89 ,0 ,0 ,0 ,0 ,6 ,142 ,254 ,224 ,211 ,181 ,241 ,70 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,26 ,254 ,68 ,0 ,0 ,0 ,2 ,161 ,254 ,104 ,7 ,0 ,0 ,80 ,223 ,15 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,57 ,254 ,15 ,0 ,0 ,0 ,150 ,231 ,68 ,1 ,0 ,0 ,0 ,9 ,231 ,26 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,79 ,254 ,15 ,0 ,0 ,24 ,228 ,66 ,0 ,0 ,0 ,0 ,0 ,0 ,196 ,87 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,73 ,254 ,43 ,0 ,0 ,116 ,251 ,7 ,0 ,0 ,0 ,0 ,0 ,0 ,196 ,100 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,13 ,230 ,147 ,0 ,0 ,60 ,255 ,70 ,0 ,0 ,0 ,0 ,0 ,4 ,209 ,84 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,203 ,232 ,4 ,0 ,42 ,253 ,74 ,0 ,0 ,0 ,0 ,0 ,114 ,233 ,17 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,87 ,252 ,147 ,0 ,0 ,154 ,229 ,132 ,123 ,123 ,63 ,93 ,248 ,65 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,169 ,249 ,137 ,23 ,8 ,80 ,100 ,101 ,107 ,145 ,192 ,51 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,5 ,139 ,251 ,224 ,144 ,115 ,115 ,195 ,254 ,187 ,48 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,55 ,141 ,203 ,244 ,180 ,129 ,67 ,2 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,

# 0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0 ,0

# ],dtype=torch.float)

# data = data.reshape(1, 1, 28, 28)  # (batch, channel, height, width)

# Feed the data above through the model to check that the model works
# with torch.no_grad():
#     output = network(data)
#     pred = output.data.max(1, keepdim=True)[1]
#     print(f"pred:{pred}")

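## Export the model's weights; this writes several .csv files into the directory of the Python script
for name, param in network.named_parameters():
    print(f"name:{name}\t\t\t,shape:{param.shape}")
    data = pd.DataFrame(param.detach().numpy().reshape(1, -1))
    filename = f"{name}.csv"
    data.to_csv(f"./{filename}", index=False, header=False, sep=',')

The code above has to be run with training enabled first; after that, comment out the training code and export the weights.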

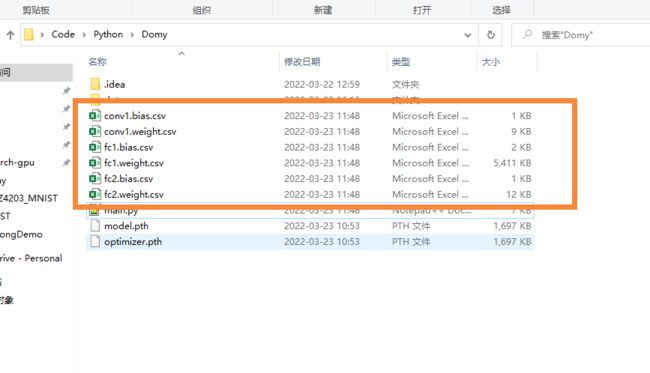

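II. Exporting the weights

After the export, several .csv files appear in the directory of the Python source:

As you can see, the fully connected layers hold by far the most parameters. When I later implemented inference in C, this led directly to a memory problem: my C-Free reported "out of memory". So the title turned out to be clickbait (my apologies). I then tried plain Python instead; it would not run in PyCharm either, but it did run in Jupyter, so the forward-propagation code below needs to be run in Jupyter.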
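To put numbers on "the most parameters", here is a small sketch that counts the weights and biases of each layer from the shapes given earlier and converts the total to bytes. It assumes the values are stored as 32-bit floats, which is what PyTorch uses by default. The result is only about 1.7 MB of raw data, so my guess (unverified) is that the memory error comes from how the arrays were declared or pasted into the source rather than from the size of the data itself.

```python
# (number of weights, number of biases) per layer, from the shapes in this post
layers = {
    "conv1": (30 * 1 * 5 * 5, 30),
    "fc1": (4320 * 100, 100),
    "fc2": (100 * 10, 10),
}

total = 0
for name, (n_weights, n_biases) in layers.items():
    total += n_weights + n_biases
    print(f"{name}: {n_weights} weights + {n_biases} biases")

bytes_f32 = total * 4  # 4 bytes per float32 value
print(f"total: {total} parameters, {bytes_f32 / 1024**2:.2f} MiB as float32")
```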

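III. Implementing forward propagation in Python

Straight to the code (it was written in Jupyter, so it comes in separate cells):

1. Imports

import numpy as np

2. Initialize the data. Open each exported .csv weight file in a text editor such as Notepad and paste its contents into the corresponding array below. There is a lot of data: fully connected layer 1 alone has 432,000 weights

conv1_w = np.array([])  # paste the contents of conv1.weight.csv here
conv1_b = np.array([])  # paste the contents of conv1.bias.csv here
fc1_w = np.array([])  # paste the contents of fc1.weight.csv here
fc1_b = np.array([])  # paste the contents of fc1.bias.csv here
fc2_w = np.array([])  # paste the contents of fc2.weight.csv here
fc2_b = np.array([])  # paste the contents of fc2.bias.csv here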
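As an alternative to pasting 430,000 numbers by hand, the files can be read directly. The following is a sketch rather than what I originally did: `load_params` and `EXPECTED_SIZES` are names I made up for illustration, and it assumes the six files written by the export loop (named after `named_parameters()`, for example `fc1.weight.csv`) sit in one directory, each holding a single comma-separated row.

```python
import os
import numpy as np

# Flattened sizes implied by the network in this post
# (30 kernels of 1x5x5, then 4320 -> 100, then 100 -> 10).
EXPECTED_SIZES = {
    "conv1.weight": 30 * 1 * 5 * 5,
    "conv1.bias": 30,
    "fc1.weight": 100 * 4320,
    "fc1.bias": 100,
    "fc2.weight": 10 * 100,
    "fc2.bias": 10,
}

def load_params(directory="."):
    """Load each exported one-row CSV into a flat float array, keyed by parameter name."""
    params = {}
    for name, size in EXPECTED_SIZES.items():
        path = os.path.join(directory, f"{name}.csv")
        values = np.loadtxt(path, delimiter=",", ndmin=1).reshape(-1)
        if values.size != size:
            raise ValueError(f"{path}: expected {size} values, found {values.size}")
        params[name] = values
    return params

# Hypothetical usage, assuming the CSV files are in the current directory:
# params = load_params(".")
# conv1_w, conv1_b = params["conv1.weight"], params["conv1.bias"]
# fc1_w, fc1_b = params["fc1.weight"], params["fc1.bias"]
# fc2_w, fc2_b = params["fc2.weight"], params["fc2.bias"]
```

The size check catches a truncated or mixed-up file early, before it shows up as a wrong prediction.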

3. Prepare the data to be recognized. This sample should be recognized as the digit 2; to recognize a different digit, simply replace this array

img_data = np.array([

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,21,56,136,155,195,52,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,6,44,111,204,254,254,254,254,197,21,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,75,211,254,254,230,228,200,168,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,8,206,252,241,220,45,21,7,61,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,55,110,27,5,0,0,0,129,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,3,208,254,246,85,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,112,254,249,123,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,64,252,254,145,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,227,254,254,139,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,8,164,254,254,219,18,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,41,254,254,252,112,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,128,254,254,126,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,79,252,254,232,27,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,204,254,254,149,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,128,254,254,254,5,11,26,26,53,116,26,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,85,247,254,254,254,181,211,254,254,254,254,254,91,0,0,0,0,0,0,0,0,0,0,0,0,0,0,25,217,254,254,254,254,254,254,254,239,219,209,120,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,195,254,254,254,254,254,183,182,102,36,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,220,254,254,239,154,33,2,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,133,223,243,103,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

])
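One thing worth knowing about this array: it holds raw 0..255 pixel values, while the training code fed the network images that had gone through `ToTensor()` (which scales 8-bit pixels to the range 0 to 1) and `Normalize((0.1307,), (0.3081,))`. The sketch below applies the same mapping in plain Python; `normalize_pixels` is a name I chose for illustration. If the hand-written forward pass disagrees with PyTorch on some image, matching the preprocessing like this is one thing to try.

```python
MNIST_MEAN = 0.1307  # mean passed to Normalize in the training code
MNIST_STD = 0.3081   # standard deviation passed to Normalize in the training code

def normalize_pixels(raw_pixels):
    """Map 0..255 grayscale values the way ToTensor followed by Normalize does."""
    return [((p / 255.0) - MNIST_MEAN) / MNIST_STD for p in raw_pixels]

sample = [0, 127, 255]  # background, mid-gray, full-intensity stroke
print(normalize_pixels(sample))
```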

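4. Initialize some variables that are used later

np.set_printoptions(threshold = np.inf)  ## print NumPy arrays in full, without ellipses
conv_rlst = np.empty([30*24*24,])  ## result after convolution --> activation
pool_rslt = np.empty([30*12*12,])  ## result after pooling
fc1_rslt = np.empty([100,])  ## result after fully connected layer 1
fc2_rslt = np.empty([10,])  ## result after fully connected layer 2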

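5. Convolution --> activation. The rough idea: there are 30 kernels, so the outermost loop runs 30 times. row and col locate the top-left corner of each convolution window on the image; x and y walk over the 5x5 patch that starts at (row, col), and that patch is multiplied element by element with the 5x5 kernel and summed.

# Convolution --> activation
for n in range(30):
    for row in range(24):  ## top-left corner of each 5x5 window; 28 - 5 + 1 = 24 positions per axis
        for col in range(24):
            conv_temp = 0
            for x in range(5):  ## walk over the 5x5 patch, i.e. compute the convolution
                for y in range(5):
                    conv_temp = conv_temp + img_data[row*28+col+x*28+y] * conv1_w[x*5+y+n*25]  # image pixel times kernel weight
            conv_temp = conv_temp + conv1_b[n]  ## add the bias
            if conv_temp > 0:  ## ReLU activation
                conv_rlst[row*24+col+n*24*24] = conv_temp
            else:
                conv_rlst[row*24+col+n*24*24] = 0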
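The index arithmetic is easier to check on a tiny example. Below is a sketch of the same operation (stride 1, no padding, no kernel flip, which matches what `nn.Conv2d` computes, followed by ReLU) written as a function with the sizes as parameters. The function name and the 3x3 toy image are my own; the flat layouts are the same as in this post.

```python
def conv2d_relu_flat(image, weights, biases, img_size, k, n_kernels):
    """Valid convolution (stride 1, no padding) followed by ReLU, on flat lists.

    image:   img_size*img_size values, row-major
    weights: n_kernels*k*k values, kernel by kernel, each row-major
    returns: n_kernels*out*out values, where out = img_size - k + 1
    """
    out = img_size - k + 1
    result = [0.0] * (n_kernels * out * out)
    for n in range(n_kernels):
        for row in range(out):
            for col in range(out):
                acc = biases[n]
                for x in range(k):
                    for y in range(k):
                        pixel = image[(row + x) * img_size + (col + y)]
                        acc += pixel * weights[n * k * k + x * k + y]
                result[n * out * out + row * out + col] = acc if acc > 0 else 0.0
    return result

# Toy check: a 3x3 image and one 2x2 kernel of ones give a 2x2 output
image = [1, 2, 3,
         4, 5, 6,
         7, 8, 9]
print(conv2d_relu_flat(image, [1, 1, 1, 1], [0], img_size=3, k=2, n_kernels=1))
```

Calling it with `img_size=28, k=5, n_kernels=30` should reproduce the 30x24x24 result of this step.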

6. Pooling: 2x2 max pooling

## Pooling
for n in range(30):
    for row in range(0, 24, 2):
        for col in range(0, 24, 2):
            pool_temp = 0
            for x in range(2):
                for y in range(2):
                    if pool_temp <= conv_rlst[row*24+col+x*24+y+n*576]:
                        pool_temp = conv_rlst[row*24+col+x*24+y+n*576]
            data = (row//2)*12 + col//2 + n*144  ## position in the flattened 30x12x12 output
            pool_rslt[data] = pool_temp
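Starting the running maximum at 0 works here only because the inputs come straight out of ReLU and are never negative. As a sketch, here is a general version that starts from negative infinity instead, so it stays correct for any input; the function name and the 4x4 toy feature map are assumptions of mine.

```python
def max_pool_flat(fmap, size, n_channels, window=2):
    """Non-overlapping max pooling over n_channels maps of size x size, stored flat."""
    out = size // window
    pooled = [0.0] * (n_channels * out * out)
    for n in range(n_channels):
        for row in range(out):
            for col in range(out):
                best = float("-inf")  # safe even when every input is negative
                for x in range(window):
                    for y in range(window):
                        v = fmap[n * size * size + (row * window + x) * size + (col * window + y)]
                        if v > best:
                            best = v
                pooled[n * out * out + row * out + col] = best
    return pooled

# Toy check: one 4x4 map pooled down to 2x2
fmap = [1, 3, 2, 0,
        4, 2, 1, 1,
        0, 0, 5, 6,
        1, 2, 7, 8]
print(max_pool_flat(fmap, size=4, n_channels=1))
```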

7. Fully connected layer 1

## Fully connected layer 1 --> activation
for n in range(100):
    fc1_temp = 0
    for i in range(4320):
        fc1_temp = fc1_temp + pool_rslt[i]*fc1_w[i+4320*n]
    fc1_temp = fc1_temp + fc1_b[n]
    if fc1_temp > 0:  ## ReLU activation
        fc1_rslt[n] = fc1_temp
    else:
        fc1_rslt[n] = 0

8. Fully connected layer 2

### Fully connected layer 2
for n in range(10):
    fc2_temp = 0
    for i in range(100):
        fc2_temp = fc2_temp + fc2_w[i+100*n]*fc1_rslt[i]
    fc2_temp = fc2_temp + fc2_b[n]
    fc2_rslt[n] = fc2_temp
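Both fully connected layers are the same computation: a matrix-vector product plus a bias. `nn.Linear` stores its weight with shape (out_features, in_features), so after flattening, the weights for output n occupy one contiguous run of in_features values, which is why the index is `i + in_features*n`. A minimal sketch with that layout, using a helper name and toy numbers of my own:

```python
def linear_flat(inputs, weights, biases, relu=False):
    """Compute y[n] = sum_i weights[n*len(inputs) + i] * inputs[i] + biases[n]."""
    n_in = len(inputs)
    outputs = []
    for n in range(len(biases)):
        acc = biases[n]
        for i in range(n_in):
            acc += weights[n * n_in + i] * inputs[i]
        outputs.append(max(acc, 0.0) if relu else acc)
    return outputs

x = [1.0, 2.0, 3.0]
w = [1.0, 0.0, 0.0,    # weights for output 0
     0.0, -1.0, 0.0]   # weights for output 1
b = [0.5, 0.5]
print(linear_flat(x, w, b))             # raw outputs, as in layer 2
print(linear_flat(x, w, b, relu=True))  # negative outputs clamped to 0, as in layer 1
```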

9. Print the prediction. You can also print all of fc2_rslt and see which value is the largest

print(f"Prediction: {fc2_rslt.argmax()}")
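The PyTorch model ends with `log_softmax`, but the hand-written version stops at the raw outputs of layer 2. That is fine for prediction, because log-softmax subtracts the same constant from every output and so never changes which one is largest. A small sketch to illustrate this, with made-up values and helper names of my own:

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]

def argmax(values):
    """Index of the largest element."""
    return max(range(len(values)), key=lambda i: values[i])

logits = [0.3, -1.2, 4.0, 2.5]  # made-up outputs for illustration
assert argmax(logits) == argmax(log_softmax(logits))
print(argmax(logits))
```

On an FPGA this means the exponentials and the logarithm can be dropped entirely when only the predicted class is needed.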

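IV. Conclusion

If you want to deploy a neural network on an FPGA, I strongly recommend this tutorial; this blog post was written after working through it. If you have any questions, leave a comment. Criticism and corrections are welcome.

Test data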

1

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,145,227,6,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,6,223,254,63,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,253,254,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,253,254,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,253,254,51,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,253,254,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,18,253,254,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,163,253,254,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,10,210,253,254,8,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,165,253,222,254,69,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,18,233,160,119,255,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,10,90,3,3,254,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,232,147,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,166,183,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,166,195,3,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,166,228,11,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,2,30,84,205,253,204,81,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,2,155,253,253,254,242,132,7,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,124,253,253,247,187,29,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,111,186,102,25,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

2

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,21,56,136,155,195,52,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,6,44,111,204,254,254,254,254,197,21,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,75,211,254,254,230,228,200,168,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,8,206,252,241,220,45,21,7,61,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,55,110,27,5,0,0,0,129,254,254,229,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,3,208,254,246,85,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,112,254,249,123,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,64,252,254,145,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,227,254,254,139,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,8,164,254,254,219,18,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,41,254,254,252,112,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,128,254,254,126,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,79,252,254,232,27,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,9,204,254,254,149,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,128,254,254,254,5,11,26,26,53,116,26,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,85,247,254,254,254,181,211,254,254,254,254,254,91,0,0,0,0,0,0,0,0,0,0,0,0,0,0,25,217,254,254,254,254,254,254,254,239,219,209,120,96,0,0,0,0,0,0,0,0,0,0,0,0,0,0,195,254,254,254,254,254,183,182,102,36,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,220,254,254,239,154,33,2,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,133,223,243,103,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0
