AIStudio使用ResNet进行X光图像肺炎分类超级完整(详细代码)

AiStudio使用ResNet进行X光图像肺炎分类超级完整(详细代码)

题目要求

小袁是市人民医院的一名影像科医生,平时日常的工作就是看看片子然后写一下影像报

告,但是他也一直对计算机技术很感兴趣。最近一段时间呢医院说要提高自身的智能化服务

水平。一方面为了提高工作效率,另一方面也是自己很感兴趣,小袁就在想办法,能不能采

用计算机的相关技术,应用在医学影像上,建立一个类似自动诊断的系统呢。正巧呢他手里

有一个数据较多的胸部 x 光的数据库,是用来诊断是否患有肺炎的。于是他就想在这个数据

库上做点文章。可是他又不是计算机出身,非常缺乏相关的技术,所以他一筹莫展,想问问

大家有没有什么好的方法能够帮帮他。

内容

1、基于给定的数据集,建立一个模型,使之能够尽可能的将对照和病人区分开来。

2、根据给定的数据集,建立一个模型,使之能够尽可能的将病毒性肺炎和细菌性肺

炎区分开来。

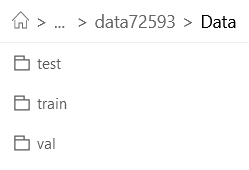

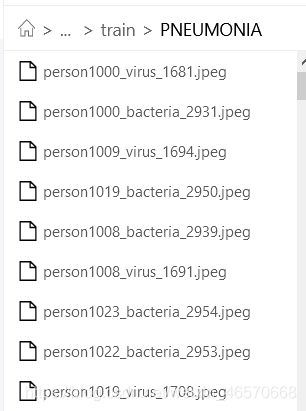

数据介绍

本数据集中所有图片均为 jpeg 格式。绝大多数的图片均是单通道灰度图,极个别为彩

色图像。整个数据集分为训练集、验证集和测试集,分别存放在 train、val 和 test 文件夹

中。在子数据集中分别有两个子文件夹,为 NORMAL 和 PNEUMONIA,分别存放对照 x 光图片和肺炎 x 光图片。在 PNEUMONIA 文件夹中,有两个类型的肺炎图片,分别为细菌性肺炎和病毒性肺炎,区分标准为若名字中带有 bacteria 即为细菌性肺炎 x 光图片,带有 virus 即为病毒性肺炎 x 光图片。

时隔很久,终于考完试了,可以分享一些最近做的小项目了,因为配置环境实在是太麻烦了,所以决定还是用百度AI的开放平台AiStudio进行实验好了,各种包非常全,而且有很多写好的项目可以参考。

下面我们正式开始进行图像肺炎分类吧,最终正确率最好为0.90,差不多训练十五六分钟,效果还是非常不错的。

数据处理

首先最重要的就是数据处理了,先解压,解压后是这个样子的

!cd data/data72593 && unzip -q Data.zip

接下来就是

接下来就是

将训练数据放在trainImageSet中,将测试数据放在evalImageSet中

将训练图片的路径和标签放在train.txt中,将测试图片的路径和标签放在eval.txt中

import codecs

import os

import random

import shutil

from PIL import Image

all_file_dir='data/data72593/Data'

train_file_dir = 'data/data72593/Data/train'

test_file_dir='data/data72593/Data/test'

class_list = [c for c in os.listdir(train_file_dir) if os.path.isdir(os.path.join(train_file_dir, c)) and not c.endswith('Set') and not c.startswith('.')]

class_list.sort()

print(class_list)

train_image_dir = os.path.join(all_file_dir, "trainImageSet")

if not os.path.exists(train_image_dir):

os.makedirs(train_image_dir)

eval_image_dir = os.path.join(all_file_dir, "evalImageSet")

if not os.path.exists(eval_image_dir):

os.makedirs(eval_image_dir)

train_file = codecs.open(os.path.join(all_file_dir, "train.txt"), 'w')

eval_file = codecs.open(os.path.join(all_file_dir, "eval.txt"), 'w')

with codecs.open(os.path.join(all_file_dir, "label_list.txt"), "w") as label_list:

#加入训练集

label_id = 0

for class_dir in class_list:

label_list.write("{0}\t{1}\n".format(label_id, class_dir))

image_path_pre = os.path.join(train_file_dir, class_dir)

for file in os.listdir(image_path_pre):

try:

img = Image.open(os.path.join(image_path_pre, file))

shutil.copyfile(os.path.join(image_path_pre, file), os.path.join(train_image_dir, file))

train_file.write("{0}\t{1}\n".format(os.path.join(train_image_dir, file), label_id))

except Exception as e:

pass

# 存在一些文件打不开,此处需要稍作清洗

label_id += 1

##加入测试集

label_id = 0

for class_dir in class_list:

image_path_pre = os.path.join(test_file_dir, class_dir)

for file in os.listdir(image_path_pre):

try:

img = Image.open(os.path.join(image_path_pre, file))

shutil.copyfile(os.path.join(image_path_pre, file), os.path.join(eval_image_dir, file))

eval_file.write("{0}\t{1}\n".format(os.path.join(eval_image_dir, file), label_id))

except Exception as e:

pass

# 存在一些文件打不开,此处需要稍作清洗

label_id += 1

train_file.close()

eval_file.close()

这个时候可以看到,图片路径和标签都已经分好,我们点开train.txt来看一下。

设置初始参数

import os

import logging

import codecs

# The dir of all logs

__log_dir='/home/aistudio/logs'

mode = 'train'

read_params={

'input_num':-1,#图片数量

'class_num':-1,

'data_dir':'data/data72593/Data',

'train_list':'train.txt',

'label_list':'label_list.txt',

'label_dict':{},#类别个数

'val_list':'val.txt',

'test_list':'eval.txt',

'mode':mode

}

handle_params={

'input_size':[3,224,224],

"need_distort": True, # 是否启用图像颜色增强

"need_rotate": True, # 是否需要增加随机角度

"need_crop": True, # 是否要增加裁剪

"need_flip": True, # 是否要增加水平随机翻转

"hue_prob": 0.5,

"hue_delta": 18,

"contrast_prob": 0.5,

"contrast_delta": 0.5,

"saturation_prob": 0.5,

"saturation_delta": 0.5,

"brightness_prob": 0.5,

"brightness_delta": 0.125,

'mean_rgb':[127.5,127.5,127.5],

'mode':mode

}

def init_read_params():

if read_params['mode'] == 'train':

input_list=os.path.join(read_params['data_dir'],read_params['train_list'])

elif read_params['mode'] == 'eval':

input_list=os.path.join(read_params['data_dir'],read_params['val_list'])

elif read_params['mode'] == 'test':

input_list=os.path.join(read_params['data_dir'],read_params['eval_list'])

else:

raise ValueError('mode must be one of train, eval or test')

label_list=os.path.join(read_params['data_dir'],read_params['test_list'])

class_num=0

with codecs.open(label_list,encoding='utf-8') as l_list:

lines=[line.strip() for line in l_list]

for line in lines:

parts=line.strip().split()

read_params['label_dict'][parts[0]]=int(parts[1])

class_num += 1

read_params['class_num']=class_num

with codecs.open(input_list,encoding='utf-8') as t_list:

lines =[line.strip() for line in t_list]

read_params['input_num']=len(lines)

def init_log_config():

logger=logging.getLogger()

logger.setLevel(logging.INFO)

if not os.path.exists(__log_dir):

os.mkdir(__log_dir)

log_path=os.path.join(__log_dir,mode + '.log')

sh=logging.StreamHandler() # 输出到命令行的Handler

fh=logging.FileHandler(log_path,mode='w')

fh.setLevel(logging.DEBUG)

formatter= logging.Formatter('{asctime:s} - {filename:s} [line:{lineno:d}] - {levelname:s} : {message:s}',style='{')

fh.setFormatter(formatter)

sh.setFormatter(formatter)

logger.addHandler(fh)

logger.addHandler(sh)

return logger

init_read_params()

logger = init_log_config()

if __name__ == '__main__':

print(read_params['input_num'])

print(read_params['label_dict'])

logger.info('Times out')

图片调整

from PIL import Image,ImageEnhance

import numpy as np

import matplotlib.pyplot as plt

import math

def resize_img(img, size): # 设置图片大小

'''

强制缩放图片

force to resize the img

'''

img=img.resize((size[1],size[2]),Image.BILINEAR)

return img

def random_crop(img,size,scale=[0.08,1.0],ratio=[3./4 , 4./3]): # size CHW

'''

随机剪裁

'''

aspect_ratio = math.sqrt(np.random.uniform(*ratio))

bound = min((float(img.size[0])/img.size[1])/(aspect_ratio**2),(float(img.size[1])/img.size[0])*(aspect_ratio**2))

scale_max=min(scale[1],bound)

scale_min=min(scale[0],bound)

target_area = img.size[0] * img.size[1] * np.random.uniform(scale_min,scale_max)

target_size = math.sqrt(target_area)

w = int(target_size*aspect_ratio)

h = int(target_size/aspect_ratio)

i = np.random.randint(0,img.size[0] - w + 1)

j = np.random.randint(0,img.size[1] - h + 1)

img=img.crop((i,j,i+w,j+h))

img=img.resize((size[1],size[2]),Image.BILINEAR)

return img

def random_crop_scale(img,size,scale=[0.8,1.0],ratio=[3./4,4./3]): # size CHW

'''

image randomly croped

scale rate is the mean element

First scale rate be generated, then based on the rate, ratio can be generated with limit

the range of ratio should be large enough, otherwise in order to successfully crop, the ratio will be ignored

valuable passed from Config.py

size information

'''

scale[1]=min(scale[1],1.)

scale_rate=np.random.uniform(*scale)

target_area = img.size[0]*img.size[1]*scale_rate

target_size = math.sqrt(target_area)

bound_max=math.sqrt(float(img.size[0])/img.size[1]/scale_rate)

bound_min=math.sqrt(float(img.size[0])/img.size[1]*scale_rate)

aspect_ratio_max=min(ratio[1],bound_min)

aspect_ratio_min=max(ratio[0],bound_max)

if aspect_ratio_max < aspect_ratio_min:

aspect_ratio = np.random.uniform(bound_min,bound_max)

else:

aspect_ratio = np.random.uniform(aspect_ratio_min,aspect_ratio_max)

w = int(aspect_ratio * target_size)

h = int(target_size / aspect_ratio)

i = np.random.randint(0,img.size[0] - w + 1)

j = np.random.randint(0,img.size[1] - h + 1)

img = img.crop((i,j,i+w,j+h))

img=img.resize((size[1],size[2]),Image.BILINEAR)

return img

def rotate_img(img,angle=[-14,15]):

"""

图像增强,增加随机旋转角度

"""

angle = np.random.randint(*angle)

img= img.rotate(angle)

return img

def random_brightness(img,prob,delta):

"""

图像增强,亮度调整

:param img:

:return:

"""

brightness_prob = np.random.uniform(0,1)

if brightness_prob < prob:

brightness_delta = np.random.uniform(-delta,+delta)+1

img=ImageEnhance.Brightness(img).enhance(brightness_delta)

return img

def random_contrast(img,prob,delta):

"""

图像增强,对比度调整

"""

contrast_prob = np.random.uniform(0,1)

if contrast_prob < prob:

contrast_delta = np.random.uniform(-delta,+delta)+1

img=ImageEnhance.Contrast(img).enhance(contrast_delta)

return img

def random_saturation(img,prob,delta):

"""

图像增强,饱和度调整

"""

saturation_prob = np.random.uniform(0,1)

if saturation_prob < prob:

saturation_delta = np.random.uniform(-delta,+delta)+1

img=ImageEnhance.Color(img).enhance(saturation_delta)

return img

def random_hue(img,prob,delta):

"""

图像增强,色度调整

"""

hue_prob = np.random.uniform(0,1)

if hue_prob < prob :

hue_delta = np.random.uniform(-delta,+delta)

img_hsv = np.array(img.convert('HSV'))

img_hsv[:,:,0]=img_hsv[:,:,0]+hue_delta

img=Image.fromarray(img_hsv,mode='HSV').convert('RGB')

return img

def distort_color(img,params):

"""

概率的图像增强

"""

prob = np.random.uniform(0, 1)

if prob < 0.35:

img = random_brightness(img,params['brightness_prob'],params['brightness_delta'])

img = random_contrast(img,params['contrast_prob'],params['contrast_delta'])

img = random_saturation(img,params['saturation_prob'],params['saturation_delta'])

img = random_hue(img,params['hue_prob'],params['hue_delta'])

elif prob < 0.7:

img = random_brightness(img,params['brightness_prob'],params['brightness_delta'])

img = random_saturation(img,params['saturation_prob'],params['saturation_delta'])

img = random_hue(img,params['hue_prob'],params['hue_delta'])

img = random_contrast(img,params['contrast_prob'],params['contrast_delta'])

return img

def custom_img_reader(input_list,mode='train'): # input_list txt

with codecs.open(input_list) as flist:

lines=[line.strip() for line in flist]

def reader():

np.random.shuffle(lines) ## 这个操作会在内存上直接随机lines 不用赋值

if mode == 'train':

for line in lines:

img_path,label = line.split()

img=Image.open(img_path)

try:

if img.mode!='RGB':

img=img.convert('RGB')

if handle_params['need_distort']:

img = distort_color(img,handle_params)

if handle_params['need_rotate']:

img = rotate_img(img)

if handle_params['need_crop']:

img = random_crop(img,handle_params['input_size'])

if handle_params['need_flip']:

prob = np.random.randint(0,2)

if prob == 0:

img=img.transpose(Image.FLIP_LEFT_RIGHT)

img=np.array(img).astype(np.float32)

img -= handle_params['mean_rgb']

img=img.transpose((2,0,1)) #三个维度的关系HWC to CHW 机器学习用的通道数在最前面

img *= 0.007843

label = np.array([label]).astype(np.int64)

yield img, label

except Exception as e:

print('x\n')

pass

if mode == 'val':

for line in lines:

img_path,label = line.split()

img=Image.open(img_path)

if img.mode!='RGB':

img=img.convert('RGB')

img=resize_img(img,handle_params['input_size'])

img=np.array(img).astype(np.float32)

img -= handle_params['mean_rgb']

img=img.transpose((2,0,1))

img *= 0.007843

label = np.array([label]).astype(np.int64)

yield img,label

if mode == 'test':

for line in lines:

img_path = line

img=Image.open(img_path)

if img.mode!='RGB':

img=img.convert('RGB')

img=resize_img(img,handle_params['input_size'])

img=np.array(img).astype(np.float32)

img -= handle_params['mean_rgb']

img=img.transpose((2,0,1))

img *= 0.007843

yield img

return reader

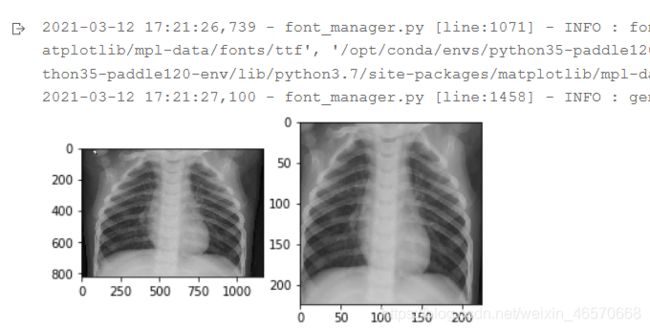

if __name__ == '__main__':

test_file_path='data/data72593/Data/evalImageSet/NORMAL2-IM-0246-0001-0002.jpeg'

test_img=Image.open(test_file_path)

plt.subplot(1,2,1)

plt.imshow(test_img)

test_img=random_contrast(test_img,handle_params['contrast_prob'],handle_params['contrast_delta'])

test_img=random_saturation(test_img,handle_params['saturation_prob'],handle_params['saturation_delta'])

test_img=random_hue(test_img,handle_params['hue_prob'],handle_params['hue_delta'])

test_img=random_brightness(test_img,handle_params['brightness_prob'],handle_params['brightness_delta'])

test_img=random_crop(test_img,handle_params['input_size'])

plt.subplot(1,2,2)

plt.imshow(test_img)

定义卷积层

import paddle.fluid as fluid

def conv3x3(num_channels,num_filters,stride=1,groups=1,dilation=1):

'''

3x3 卷积层

dilation = padding ensures the size

'''

return fluid.dygraph.Conv2D(num_channels=num_channels,num_filters=num_filters,filter_size=3,stride=stride,

padding=dilation,groups=groups,dilation=dilation)

def conv1x1(num_channels,num_filters,stride=1):

'''

1x1 卷积层

changes the number of planes

'''

return fluid.dygraph.Conv2D(num_channels=num_channels,num_filters=num_filters,filter_size=1,stride=stride,bias_attr=False)

class Bottleneck(fluid.dygraph.Layer):

# Bottleneck here places the stride for downsampling at 3x3 convolution

# while the original implementation places the stride at the first 1x1 convolution

expansion = 4

def __init__(self,inplanes,planes,stride=1,downsample=None,

groups=1, base_width =64,dilation=1,norm_layer=None):

super().__init__()

if norm_layer == None:

norm_layer=fluid.dygraph.BatchNorm

width = int(planes * (base_width/64.)) * groups

self.conv1 = conv1x1(inplanes,width)

self.bn1 = norm_layer(width)

self.conv2 = conv3x3(width,width,stride,groups,dilation)

self.bn2 = norm_layer(width)

self.conv3 = conv1x1(width,planes * self.expansion)

self.bn3 = norm_layer(planes * self.expansion)

self.downsample = downsample

self.stride = stride

def forward(self,inputs):

res = inputs

x = self.conv1(inputs)

x = self.bn1(x)

x = fluid.layers.relu(x)

x = self.conv2(x)

x = self.bn2(x)

x = fluid.layers.relu(x)

x = self.conv3(x)

x = self.bn3(x)

if self.downsample is not None:

res = self.downsample(inputs)

x = fluid.layers.elementwise_add(x,res)

x = fluid.layers.relu(x)

return x

class BasicBlock(fluid.dygraph.Layer):

expansion = 1 # This valuable can be ignored

def __init__(self,inplanes,planes,stride=1,downsample=None,

groups=1, base_width =64,dilation=1,norm_layer=None):

super().__init__()

if norm_layer == None:

norm_layer = fluid.dygraph.BatchNorm

if groups != 1 or base_width != 64:

raise ValueError('BasicBlock only support groups = 1 and base_width =64')

if dilation > 1:

raise NotImplementedError('BasicBlock only support dilation = 1')

self.conv1 = conv3x3(inplanes,planes,stride=stride)

self.bn1 = norm_layer(planes)

self.conv2 = conv3x3(planes,planes)

self.bn2 = norm_layer(planes)

self.downsample=downsample

self.stride = stride

def forward(self,inputs):

res = inputs

x = self.conv1(inputs)

x = self.bn1(x)

x = fluid.layers.relu(x)

x = self.conv2(x)

x = self.bn2(x)

if self.downsample is not None:

res = self.downsample(inputs)

x = fluid.layers.elementwise_add(x,res)

x = fluid.layers.relu(x)

return x

class ResNet(fluid.dygraph.Layer):

def __init__(self,block,layers,num_classes = 100, zero_init_residual = False,

groups=1,width_per_group=64,replace_stride_with_dilation=None,

norm_layer=None):

super(ResNet,self).__init__()

if norm_layer == None:

norm_layer = fluid.dygraph.BatchNorm

self._norm_layer = norm_layer

# initialize the inplanes syntax

self.inplanes = 64

self.dilation = 1

if replace_stride_with_dilation == None:

replace_stride_with_dilation = [False,False,False]

if len(replace_stride_with_dilation) != 3:

raise ValueError('replace_stride_with_dilation should be None or'

' a 3-element tuple, got{}'.format(replace_stride_with_dilation))

self.groups = groups

self.base_width = width_per_group

self.conv1 = fluid.dygraph.Conv2D(3,self.inplanes,filter_size=7,stride=2,padding=3,bias_attr=False)

self.bn1 = norm_layer(self.inplanes)

self.maxpool = fluid.dygraph.Pool2D(pool_size=3,pool_type='max',pool_stride=2,pool_padding=1)

self.layer1 = self._make_layer(block,64,layers[0])

self.layer2 = self._make_layer(block,128,layers[1],stride=2,dilation = replace_stride_with_dilation[0])

self.layer3 = self._make_layer(block,256,layers[2],stride=2,dilation = replace_stride_with_dilation[1])

self.layer4 = self._make_layer(block,512,layers[3],stride=2,dilation = replace_stride_with_dilation[2])

self.fc = fluid.dygraph.Linear(512*block.expansion,num_classes)

# TODO: initialize the parameters(weights) and biaes

def _make_layer(self,block,planes,blocks,stride=1,dilation = False):

norm_layer = self._norm_layer

downsample = None

previous_dilation = self.dilation

if dilation:

self.dilation *= stride

stride = 1

if stride != 1 or self.inplanes != planes * block.expansion:

downsample = fluid.dygraph.Sequential(

conv1x1(self.inplanes,planes * block.expansion,stride),

norm_layer(planes * block.expansion)

)

layers = []

layers.append(block(self.inplanes,planes,stride,downsample,

self.groups,self.base_width,previous_dilation,

norm_layer))

self.inplanes = planes * block.expansion

for _ in range(1,blocks):

layers.append(block(self.inplanes,planes,groups=self.groups,

base_width=self.base_width,dilation=self.dilation,

norm_layer=norm_layer))

return fluid.dygraph.Sequential(*layers)

def _forward_impl(self,inputs):

x = self.conv1(inputs)

x = self.bn1(x)

x = fluid.layers.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = fluid.layers.adaptive_pool2d(x,pool_size=(1,1),pool_type='avg')

x = fluid.layers.flatten(x,1)

x = self.fc(x)

return x

def forward(self,inputs):

return self._forward_impl(inputs)

def _resnet(block,layers,pretrained,**kwargs): # ignore some valuables

model = ResNet(block,layers,**kwargs)

if pretrained:

pass

# TODO load pretrained weights

return model

##def resnet18(pretrained=False,**kwargs):

## return _resnet(BasicBlock,[2,2,2,2],pretrained,**kwargs)

def resnet50(pretrained=False,**kwargs):

return _resnet(Bottleneck,[3,4,6,3],pretrained,**kwargs)

定义训练层数

使用GPU

epochs为训练轮数,可以随意更改

import math

import paddle.fluid as fluid

# from config import read_params

learning_params = {

'learning_rate': 0.0001,

'batch_size': 64,

'step_per_epoch':-1,

'num_epoch': 3,

'epochs':[10,30],

'lr_decay':[1,0.1,0.01],

'use_GPU':True

}

place = fluid.CPUPlace() if not learning_params['use_GPU'] else fluid.CUDAPlace(0)

def get_momentum_optimizer(parameter_list):

'''

piecewise decay to the initial learning rate

'''

batch_size = learning_params['batch_size']

step_per_epoch = int(math.ceil(read_params['input_num'] / batch_size)) #

learning_rate = learning_params['learning_rate']

boundaries = [i * step_per_epoch for i in learning_params['epochs']]

values = [i * learning_rate for i in learning_params['lr_decay']]

learning_rate = fluid.layers.piecewise_decay(boundaries,values)

optimizer = fluid.optimizer.MomentumOptimizer(learning_rate=learning_rate, momentum=0.9,parameter_list=parameter_list)

return optimizer

def get_adam_optimizer(parameter_list):

'''

piecewise decay to the initial learning rate

'''

batch_size = learning_params['batch_size']

step_per_epoch = int(math.ceil(read_params['input_num'] / batch_size)) #

learning_rate = learning_params['learning_rate']

boundaries = [i * step_per_epoch for i in learning_params['epochs']]

values = [i * learning_rate for i in learning_params['lr_decay']]

learning_rate = fluid.layers.piecewise_decay(boundaries,values)

optimizer = fluid.optimizer.AdamOptimizer(learning_rate=learning_rate,parameter_list=parameter_list)

return optimizer

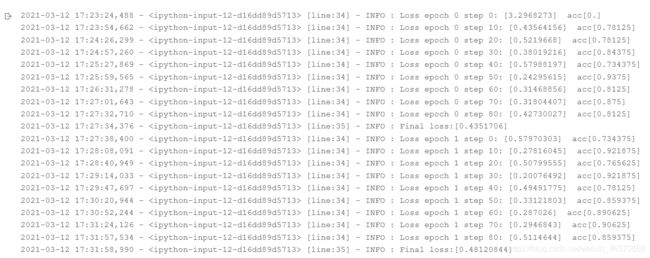

开始训练

with fluid.dygraph.guard(place):

resnet.train()

resnet_50 = resnet50(False)

train_list = os.path.join(read_params['data_dir'],read_params['train_list'])

train_reader = fluid.io.batch(custom_img_reader(train_list,mode='train'),batch_size=learning_params['batch_size'])

train_loader = fluid.io.DataLoader.from_generator(capacity=3,return_list=True,use_multiprocess=False)

train_loader.set_sample_list_generator(train_reader,places=place)

eval_list = os.path.join(read_params['data_dir'],read_params['test_list'])

eval_reader = fluid.io.batch(custom_img_reader(eval_list,mode='test'),batch_size=learning_params['batch_size'])

for epoch_id in range(learning_params['num_epoch']):

for batch_id,single_step_data in enumerate((train_loader())):

img = fluid.dygraph.to_variable(single_step_data[0])

label = fluid.dygraph.to_variable(single_step_data[1])

predict = resnet(img)

predict = fluid.layers.softmax(predict)

acc = fluid.layers.accuracy(input=predict,label=label)

loss = fluid.layers.cross_entropy(predict,label)

avg_loss = fluid.layers.mean(loss)

avg_loss.backward()

optimizer.minimize(avg_loss)

resnet.clear_gradients()

if batch_id % 10 == 0:

try:

single_eval_data = next(eval_reader())

img_eval = np.array([x[0] for x in single_eval_data])

label_eval = np.array([x[1] for x in single_eval_data])

img_eval = fluid.dygraph.to_variable(img_eval)

label_eval = fluid.dygraph.to_variable(label_eval)

eval_predict = resnet(img_eval)

eval_predict = fluid.layers.softmax(eval_predict)

eval_acc = fluid.layers.accuracy(input=eval_predict,label=label_eval)

logger.info('Loss epoch {} step {}: {} acc{} eval_acc {}'.format(epoch_id,batch_id, avg_loss.numpy(),acc.numpy(),eval_acc.numpy()))

except Exception:

logger.info('Loss epoch {} step {}: {} acc{} '.format(epoch_id,batch_id, avg_loss.numpy(),acc.numpy()))

logger.info('Final loss:{}'.format(avg_loss.numpy()))

fluid.save_dygraph(resnet.state_dict(),'ResNet50_3'

测试训练效果

eval_reader = fluid.io.batch(custom_img_reader(eval_list,mode='val'),batch_size=learning_params['batch_size'])

single_eval_data = next(eval_reader())

img_eval = np.array([x[0] for x in single_eval_data])

label_eval = np.array([x[1] for x in single_eval_data])

img_eval = fluid.dygraph.to_variable(img_eval)

label_eval = fluid.dygraph.to_variable(label_eval)

eval_predict = resnet(img_eval)

eval_predict = fluid.layers.softmax(eval_predict)

eval_acc = fluid.layers.accuracy(input=eval_predict,label=label_eval)

logger.info('Loss epoch {} step {}: {} acc{}'.format(epoch_id,batch_id, avg_loss.numpy(),acc.numpy()))

来看一下结果:

正确率0.90625,还是非常不错的

![]() 上面就是完整的代码了,项目已经在AIStudio上设置为公开了,欢迎大家来运行体验。

上面就是完整的代码了,项目已经在AIStudio上设置为公开了,欢迎大家来运行体验。

链接: https://aistudio.baidu.com/aistudio/projectdetail/1645548.

因为分类病毒性和细菌性肺炎和这个差不多,就不多赘述了,一起打包上传资源了,欢迎小伙伴来探讨。

完整的项目运行结果和代码已经上传资源,不需要积分哦,关注就可以下载完整版啦。