深度学习 zuoye4

Download Dataset

有三個檔案,分別是training_label.txt、training_nolabel.txt、testing_data.txt

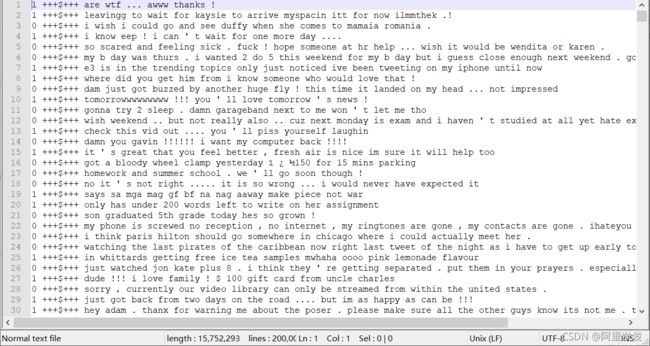

training_label.txt:有label的training data(句子配上0 or 1)

training_nolabel.txt:沒有label的training data(只有句子),用来做半监督学习,约120万句句子。

比如: hates being this burnt !! ouch,前面没有0或者1的标签

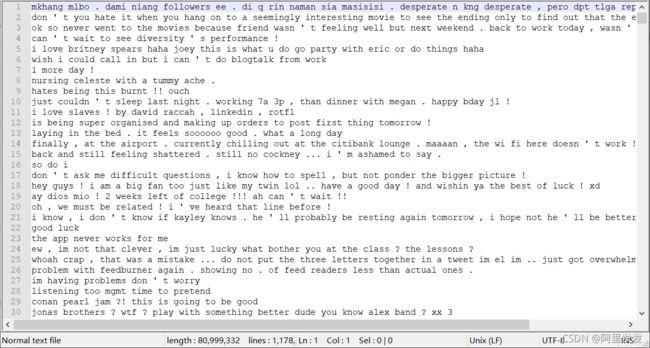

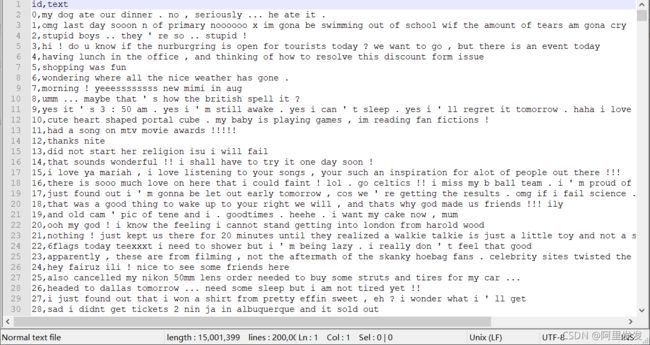

testing_data.txt:最终需要判断 testing data 里面的句子是 0 或 1,约20万句句子(10万句句子是Public,10万句句子是Private)。

具体格式如下,第一行是表头,从第二行开始是数据,第一列是id,第二列是文本

import warnings

warnings.filterwarnings(‘ignore’)

#设置后可以过滤一些无用的warning

定义了两个读取training和testing数据的函数,还定义了评估结果的函数evaluation()。

Python库中的 strip() 方法用于移除字符串头尾指定的字符(默认为空格或换行符)或字符序列。

#utils.py

#這個block用來先定義一些等等常用到的函式

import torch

import numpy as np

import pandas as pd

import torch.optim as optim

import torch.nn.functional as F

def load_training_data(path=‘training_label.txt’):

# 把training時需要的data讀進來

# 如果是’training_label.txt’,需要讀取label,如果是’training_nolabel.txt’,不需要讀取label

if ‘training_label’ in path:

with open(path, ‘r’) as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip(’\n’).split(’ ‘) for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[2:] for line in lines]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, ‘r’) as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip(’\n’).split(’ ') for line in lines]

return x

def load_testing_data(path=‘testing_data’):

# 把testing時需要的data讀進來

with open(path, ‘r’) as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip(’\n’).split(",")[1:]).strip() for line in lines[1:]]

X = [sen.split(’ ') for sen in X]

return X

def evaluation(outputs, labels):

# outputs => 预测值,概率(float)

# labels => 真实值,标签(0或1)

outputs[outputs>=0.5] = 1 # 大於等於0.5為有惡意

outputs[outputs<0.5] = 0 # 小於0.5為無惡意

correct = torch.sum(torch.eq(outputs, labels)).item()

return correct

Train Word to Vector

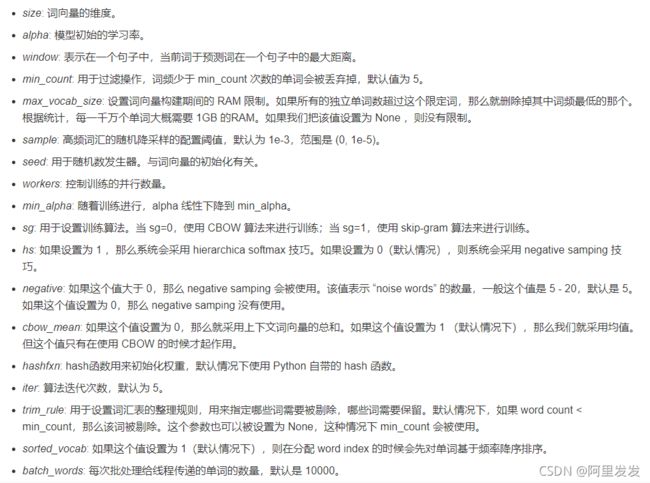

word2vec 即 word to vector 的缩写。把 training 和 testing 中的每个单词都分别变成词向量,这里用到了 Gensim 来进行 word2vec 的操作。没有 gensim 的可以用 conda install gensim 或者 pip install gensim 安装一下。

#w2v.py

#這個block是用來訓練word to vector 的 word embedding

#注意!這個block在訓練word to vector時是用cpu,可能要花到10分鐘以上

import os

import numpy as np

import pandas as pd

import argparse

from gensim.models import word2vec

def train_word2vec(x):

# 训练 word to vector 的 word embedding

# window:滑动窗口的大小,min_count:过滤掉语料中出现频率小于min_count的词

model = word2vec.Word2Vec(x, size=250, window=5, min_count=5, workers=12, iter=10, sg=1)

return model

if name == “main”:

#读取 training 数据

print(“loading training data …”)

train_x, y = load_training_data(‘training_label.txt’)

train_x_no_label = load_training_data(‘training_nolabel.txt’)

#读取 testing 数据

print(“loading testing data …”)

test_x = load_testing_data(‘testing_data.txt’)

#把 training 中的 word 变成 vector

#model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

model = train_word2vec(train_x + train_x_no_label + test_x) # w2v

保存 vector

print("saving model ...")

# model.save(os.path.join(path_prefix, 'model/w2v_all.model'))

model.save(os.path.join(path_prefix, 'w2v_all.model'))

数据预处理

定义一个预处理的类Preprocess():

w2v_path:word2vec的存储路径

sentences:句子

sen_len:句子的固定长度

idx2word 是一个列表,比如:self.idx2word[1] = ‘he’

word2idx 是一个字典,记录单词在 idx2word 中的下标,比如:self.word2idx[‘he’] = 1

embedding_matrix 是一个列表,记录词嵌入的向量,比如:self.embedding_matrix[1] = ‘he’ vector

对于句子,我们就可以通过 embedding_matrix[word2idx[‘he’] ] 找到 ‘he’ 的词嵌入向量。

Preprocess()的调用如下:

训练模型:preprocess = Preprocess(train_x, sen_len, w2v_path=w2v_path)

测试模型:preprocess = Preprocess(test_x, sen_len, w2v_path=w2v_path)

另外,这里除了出现在 train_x 和 test_x 中的单词外,还需要两个单词(或者叫特殊符号):

< PAD >:Padding的缩写,把所有句子都变成一样长度时,需要用"“补上空白符

< UNK >:Unknown的缩写,凡是在 train_x 和 test_x 中没有出现过的单词,都用”"来表示

#preprocess.py

#這個block用來做data的預處理

from torch import nn

from gensim.models import Word2Vec

class Preprocess():

def init(self, sentences, sen_len, w2v_path="./w2v.model"):

self.w2v_path = w2v_path # word2vec的存储路径

self.sentences = sentences # 句子

self.sen_len = sen_len # 句子的固定长度

self.idx2word = []

self.word2idx = {}

self.embedding_matrix = []

def get_w2v_model(self):

# 把之前訓練好的word to vec 模型讀進來

self.embedding = Word2Vec.load(self.w2v_path)

self.embedding_dim = self.embedding.vector_size

def add_embedding(self, word):

# 把word加進embedding,並賦予他一個隨機生成的representation vector

# word只會是"< PAD >“或”< UNK >"

vector = torch.empty(1, self.embedding_dim)

torch.nn.init.uniform_(vector)

# 它的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix = torch.cat([self.embedding_matrix, vector], 0)

def make_embedding(self, load=True):

print(“Get embedding …”)

# 取得訓練好的 Word2vec word embedding

if load:

print(“loading word to vec model …”)

self.get_w2v_model()

else:

raise NotImplementedError

# 製作一個 word2idx 的 dictionary

# 製作一個 idx2word 的 list

# 製作一個 word2vector 的 list

for i, word in enumerate(self.embedding.wv.vocab):

print(‘get words #{}’.format(i+1), end=’\r’)

# 新加入的 word 的 index 是 word2idx 这个词典的长度,即最后一个

#e.g. self.word2index[‘魯’] = 1

#e.g. self.index2word[1] = ‘魯’

#e.g. self.vectors[1] = ‘魯’ vector

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix.append(self.embedding[word])

print(’’)

# 把 embedding_matrix 变成 tensor

self.embedding_matrix = torch.tensor(self.embedding_matrix)

# 將"< PAD >“跟”< UN K >“加進embedding裡面

self.add_embedding(”< PAD >")

self.add_embedding("< UNK >")

print(“total words: {}”.format(len(self.embedding_matrix)))

return self.embedding_matrix

def pad_sequence(self, sentence):

# 將每個句子變成一樣的長度

if len(sentence) > self.sen_len:

# 如果句子长度大于 sen_len 的长度,就截断

sentence = sentence[:self.sen_len]

else:

# 如果句子长度小于 sen_len 的长度,就补上 < PAD> 符号,缺多少个单词就补多少个 < PAD>

pad_len = self.sen_len - len(sentence)

for _ in range(pad_len):

sentence.append(self.word2idx["< PAD>"])

assert len(sentence) == self.sen_len

return sentence

def sentence_word2idx(self):

# 把句子裡面的字轉成相對應的index

sentence_list = []

for i, sen in enumerate(self.sentences):

print(‘sentence count #{}’.format(i+1), end=’\r’)

sentence_idx = []

for word in sen:

if (word in self.word2idx.keys()):

sentence_idx.append(self.word2idx[word])

else:

# 没有出现过的单词就用 < UNK> 表示

sentence_idx.append(self.word2idx["< UNK>"])

# 將每個句子變成一樣的長度

sentence_idx = self.pad_sequence(sentence_idx)

sentence_list.append(sentence_idx)

return torch.LongTensor(sentence_list)

def labels_to_tensor(self, y):

# 把labels轉成tensor

y = [int(label) for label in y]

return torch.LongTensor(y)

#data.py

#實作了dataset所需要的’init’, ‘getitem’, ‘len’

#好讓dataloader能使用

import torch

from torch.utils import data

class TwitterDataset(data.Dataset):

“”"

Expected data shape like:(data_num, data_len)

Data can be a list of numpy array or a list of lists

input data shape : (data_num, seq_len, feature_dim)

__len__ will return the number of data

"""

def __init__(self, X, y):

self.data = X

self.label = y

def __getitem__(self, idx):

if self.label is None: return self.data[idx]

return self.data[idx], self.label[idx]

def __len__(self):

return len(self.data)

#model.py

#這個block是要拿來訓練的模型

import torch

from torch import nn

class LSTM_Net(nn.Module):

def init(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(LSTM_Net, self).init()

# 製作 embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0),embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否將 embedding fix住,如果fix_embedding為False,在訓練過程中,embedding也會跟著被訓練

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = dropout

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True)

self.classifier = nn.Sequential( nn.Dropout(dropout),

nn.Linear(hidden_dim, 1),

nn.Sigmoid() )

def forward(self, inputs):

inputs = self.embedding(inputs)

x, _ = self.lstm(inputs, None)

# x 的 dimension (batch, seq_len, hidden_size)

# 取用 LSTM 最後一層的 hidden state丢到分类器中

x = x[:, -1, :]

x = self.classifier(x)

return x

training

将 training 和 validation 封装成函数

#train.py

#這個block是用來訓練模型的

import torch

from torch import nn

import torch.optim as optim

import torch.nn.functional as F

def training(batch_size, n_epoch, lr, model_dir, train, valid, model, device):

#输出模型总的参数数量、可训练的参数数量

total = sum(p.numel() for p in model.parameters())

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print(’\nstart training, parameter total:{}, trainable:{}\n’.format(total, trainable))

model.train() # 將model的模式設為train,這樣optimizer就可以更新model的參數

criterion = nn.BCELoss() # 定義損失函數,這裡我們使用binary cross entropy loss

t_batch = len(train) # training 数据的batch size大小

v_batch = len(valid) # validation 数据的batch size大小

optimizer = optim.Adam(model.parameters(), lr=lr) # 將模型的參數給optimizer,並給予適當的learning rate

total_loss, total_acc, best_acc = 0, 0, 0

for epoch in range(n_epoch):

total_loss, total_acc = 0, 0

# 這段做training # 将 model 的模式设为 train,这样 optimizer 就可以更新 model 的参数

for i, (inputs, labels) in enumerate(train):

inputs = inputs.to(device, dtype=torch.long) # device為"cuda",將inputs轉成torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # device為"cuda",將labels轉成torch.cuda.FloatTensor,因為等等要餵進criterion,所以型態要是float

optimizer.zero_grad() # 由於loss.backward()的gradient會累加,所以每次餵完一個batch後需要歸零

outputs = model(inputs) # 將input餵給模型

outputs = outputs.squeeze() # 去掉最外面的dimension,好讓outputs可以餵進criterion()

loss = criterion(outputs, labels) # 計算此時模型的training loss

loss.backward() # 算loss的gradient

optimizer.step() # 更新訓練模型的參數

correct = evaluation(outputs, labels) # 計算此時模型的training accuracy

total_acc += (correct / batch_size)

total_loss += loss.item()

print(’[ Epoch{}: {}/{} ] loss:{:.3f} acc:{:.3f} '.format(

epoch+1, i+1, t_batch, loss.item(), correct100/batch_size), end=’\r’)

print(’\nTrain | Loss:{:.5f} Acc: {:.3f}’.format(total_loss/t_batch, total_acc/t_batch100))

# 這段做validation

model.eval() # 將model的模式設為eval,這樣model的參數就會固定住

with torch.no_grad():

total_loss, total_acc = 0, 0

for i, (inputs, labels) in enumerate(valid):

inputs = inputs.to(device, dtype=torch.long) # device為"cuda",將inputs轉成torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # device為"cuda",將labels轉成torch.cuda.FloatTensor,因為等等要餵進criterion,所以型態要是float

outputs = model(inputs) # 將input餵給模型

outputs = outputs.squeeze() # 去掉最外面的dimension,好讓outputs可以餵進criterion()

loss = criterion(outputs, labels) # 計算此時模型的validation loss

correct = evaluation(outputs, labels) # 計算此時模型的validation accuracy

total_acc += (correct / batch_size)

total_loss += loss.item()

print("Valid | Loss:{:.5f} Acc: {:.3f} ".format(total_loss/v_batch, total_acc/v_batch*100))

if total_acc > best_acc:

# 如果validation的結果優於之前所有的結果,就把當下的模型存下來以備之後做預測時使用

best_acc = total_acc

#torch.save(model, "{}/val_acc_{:.3f}.model".format(model_dir,total_acc/v_batch*100))

torch.save(model, "{}/ckpt.model".format(model_dir))

print('saving model with acc {:.3f}'.format(total_acc/v_batch*100))

print('-----------------------------------------------')

model.train() # 將model的模式設為train,這樣optimizer就可以更新model的參數(因為剛剛轉成eval模式)

调用前面的封装的Preprocess(),training(),进行训练。

main.py

import os

import torch

import argparse

import numpy as np

from torch import nn

from gensim.models import word2vec

from sklearn.model_selection import train_test_split

通過torch.cuda.is_available()的回傳值進行判斷是否有使用GPU的環境,如果有的話device就設為"cuda",沒有的話就設為"cpu"

device = torch.device(“cuda” if torch.cuda.is_available() else “cpu”)

處理好各個data的路徑

train_with_label = os.path.join(path_prefix, ‘training_label.txt’)

train_no_label = os.path.join(path_prefix, ‘training_nolabel.txt’)

testing_data = os.path.join(path_prefix, ‘testing_data.txt’)

w2v_path = os.path.join(path_prefix, ‘w2v_all.model’) # 處理word to vec model的路徑

定義句子長度、要不要固定embedding、batch大小、要訓練幾個epoch、learning rate的值、model的資料夾路徑

sen_len = 30

fix_embedding = True # fix embedding during training

batch_size = 128

epoch = 5

lr = 0.001

model_dir = os.path.join(path_prefix, ‘model/’) # model directory for checkpoint model

model_dir = path_prefix # model directory for checkpoint model

print(“loading data …”) # 把’training_label.txt’跟’training_nolabel.txt’讀進來

train_x, y = load_training_data(train_with_label)

train_x_no_label = load_training_data(train_no_label)

對input跟labels做預處理

preprocess = Preprocess(train_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

train_x = preprocess.sentence_word2idx()

y = preprocess.labels_to_tensor(y)

製作一個model的對象

model = LSTM_Net(embedding, embedding_dim=250, hidden_dim=250, num_layers=1, dropout=0.5, fix_embedding=fix_embedding)

model = model.to(device) # device為"cuda",model使用GPU來訓練(餵進去的inputs也需要是cuda tensor)

把data分為training data跟validation data(將一部份training data拿去當作validation data)

X_train, X_val, y_train, y_val = train_x[:190000], train_x[190000:], y[:190000], y[190000:]

把data做成dataset供dataloader取用

train_dataset = TwitterDataset(X=X_train, y=y_train)

val_dataset = TwitterDataset(X=X_val, y=y_val)

把data 轉成 batch of tensors

train_loader = torch.utils.data.DataLoader(dataset = train_dataset,

batch_size = batch_size,

shuffle = True,

num_workers = 8)

val_loader = torch.utils.data.DataLoader(dataset = val_dataset,

batch_size = batch_size,

shuffle = False,

num_workers = 8)

開始訓練

training(batch_size, epoch, lr, model_dir, train_loader, val_loader, model, device)

loading data …

Get embedding …

loading word to vec model …

get words #55777

total words: 55779

sentence count #200000

start training, parameter total:14447001, trainable:502251

Train | Loss:0.48037 Acc: 76.344

Valid | Loss:0.43569 Acc: 79.391

saving model with acc 79.391

[ Epoch2: 1485/1485 ] loss:0.445 acc:28.906

Train | Loss:0.41297 Acc: 81.141

Valid | Loss:0.40639 Acc: 80.736

saving model with acc 80.736

Train | Loss:0.39345 Acc: 82.240

Valid | Loss:0.39597 Acc: 81.517

saving model with acc 81.517

[ Epoch4: 1485/1485 ] loss:0.457 acc:30.469

Train | Loss:0.37718 Acc: 83.130

Valid | Loss:0.39099 Acc: 81.646

saving model with acc 81.646

Train | Loss:0.36063 Acc: 83.993

Valid | Loss:0.39466 Acc: 81.695

saving model with acc 81.695

testing

同样,将 testing 封装成函数

#test.py

#這個block用來對testing_data.txt做預測

import torch

from torch import nn

import torch.optim as optim

import torch.nn.functional as F

def testing(batch_size, test_loader, model, device):

model.eval()

ret_output = []

with torch.no_grad():

for i, inputs in enumerate(test_loader):

inputs = inputs.to(device, dtype=torch.long)

outputs = model(inputs)

outputs = outputs.squeeze()

outputs[outputs>=0.5] = 1 # 大於等於0.5為負面

outputs[outputs<0.5] = 0 # 小於0.5為正面

ret_output += outputs.int().tolist()

return ret_output

调用testing()进行预测,预测数据保存为predict.csv,约1.6M

#開始測試模型並做預測

#读取测试数据test_x

print(“loading testing data …”)

test_x = load_testing_data(testing_data)

#对test_x作预处理

preprocess = Preprocess(test_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

test_x = preprocess.sentence_word2idx()

test_dataset = TwitterDataset(X=test_x, y=None)

test_loader = torch.utils.data.DataLoader(dataset = test_dataset,

batch_size = batch_size,

shuffle = False,

num_workers = 8)

#读取模型

print(’\nload model …’)

model = torch.load(os.path.join(model_dir, ‘ckpt.model’))

#测试模型

outputs = testing(batch_size, test_loader, model, device)

#寫到csv檔案供上傳kaggle

tmp = pd.DataFrame({“id”:[str(i) for i in range(len(test_x))],“label”:outputs})

print(“save csv …”)

tmp.to_csv(os.path.join(path_prefix, ‘predict.csv’), index=False)

print(“Finish Predicting”)

get words #55777

total words: 55779

sentence count #200000

load model …

save csv …

Finish Predicting