Keras Notes: Visualizing and Comparing the Activation Feature Maps of Every Layer in a Neural Network

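Contents

- Building and training the CNN
- Listing all layers and removing the input layer
- Defining a function that extracts and displays the features
- Calling the function and viewing the result
- Conclusion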

If the kind of visualization shown in the figures below is what you are after, read on.

Building and training the CNN

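import os
os.environ["CUDA_VISIBLE_DEVICES"] = "2"  # use GPU 2 only; set before TensorFlow touches the GPU, adjust for your machine
import keras
from keras.models import *
from keras.layers import *
import numpy as np
from keras.utils import to_categorical
from keras import backend as K
import tensorflow as tf
# Jupyter magic; remove the next line when running as a plain script
%matplotlib inline
import matplotlib.pyplot as plt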

#### Define the CNN
def cnn(input_shape, classes):
    inputs = Input(shape=input_shape)
    # Five 3x3 conv layers; padding='same' with stride 1 keeps the 28x28 spatial size
    x = Conv2D(filters=8, kernel_size=(3,3), strides=1, padding='same', activation='relu')(inputs)
    x = Conv2D(filters=16, kernel_size=(3,3), strides=1, padding='same', activation='relu')(x)
    x = Conv2D(filters=32, kernel_size=(3,3), strides=1, padding='same', activation='relu')(x)
    x = Conv2D(filters=64, kernel_size=(3,3), strides=1, padding='same', activation='relu')(x)
    x = Conv2D(filters=128, kernel_size=(3,3), strides=1, padding='same', activation='relu')(x)
    gap = GlobalAveragePooling2D()(x)
    gap = Dense(256, activation='relu')(gap)
    logits = Dense(classes, activation=None)(gap)
    outputs = Activation('softmax')(logits)
    model = Model(inputs, outputs)
    return model

nn = cnn((28,28,1), 10)
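As a quick sanity check on the architecture above, the number of trainable parameters can be worked out by hand: a Conv2D layer with a k×k kernel holds (k·k·in_channels + 1)·filters weights, and a Dense layer holds (in_units + 1)·units. The following is only a sketch in plain Python; the helper names are hypothetical, and it assumes the 28×28×1 input and 10 classes used in this post.

```python
def conv2d_params(in_channels, filters, kernel=3):
    # kernel*kernel*in_channels weights per filter, plus one bias per filter
    return (kernel * kernel * in_channels + 1) * filters

def dense_params(in_units, units):
    # one weight per input unit plus one bias, for every output unit
    return (in_units + 1) * units

def count_cnn_params(input_channels=1, classes=10):
    total = 0
    channels = input_channels
    for filters in (8, 16, 32, 64, 128):   # the five conv layers, in order
        total += conv2d_params(channels, filters)
        channels = filters
    # GlobalAveragePooling2D has no weights and leaves one value per channel
    total += dense_params(channels, 256)
    total += dense_params(256, classes)
    return total

print(count_cnn_params())  # 133834
```

Comparing this number with the total printed by `nn.summary()` is an easy way to confirm the model was built as intended.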

#### Load the dataset
from keras.datasets import fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()
y_train_label = y_train  # keep the integer labels before one-hot encoding
y_test_label = y_test
x_train = x_train.reshape(60000, 28, 28, 1)  # add the channel axis that Conv2D expects
x_test = x_test.reshape(10000, 28, 28, 1)
y_test = to_categorical(y_test)
y_train = to_categorical(y_train)
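To make the two preprocessing steps above concrete, here is a small NumPy sketch of what they amount to. It is an illustration rather than the Keras implementation: `one_hot` is a hypothetical helper that behaves roughly like `to_categorical`, and the zero-filled array merely stands in for a few grayscale images.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Turn integer class labels into one-hot rows (hypothetical helper)."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i
    return np.eye(num_classes, dtype="float32")[labels]

# Stand-in for four 28x28 grayscale images
images = np.zeros((4, 28, 28), dtype="uint8")
# Add a trailing channel axis: (4, 28, 28) -> (4, 28, 28, 1)
images_with_channel = images.reshape(-1, 28, 28, 1)

print(one_hot([0, 2, 1], 3))
print(images_with_channel.shape)
```

Using `-1` for the first dimension lets NumPy infer the number of samples, which avoids hard-coding 60000 and 10000.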

#### Train
nn.compile(loss=keras.losses.categorical_crossentropy, optimizer=keras.optimizers.Adam(0.001), metrics=['accuracy'])
nn.fit(x_train, y_train, shuffle=True, batch_size=64, epochs=10)

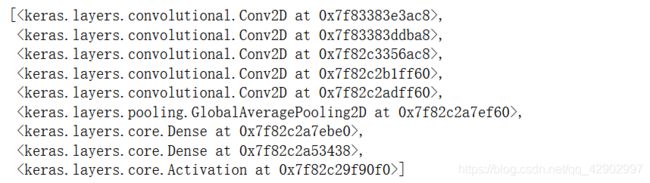
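The model ends in a linear Dense layer (the logits) followed by a softmax, and it is trained with categorical cross-entropy. For readers who want to see what the reported loss measures, the sketch below writes both steps out in NumPy. It illustrates the math only and is not how Keras implements it; the function names and the `eps` clipping value are assumptions.

```python
import numpy as np

def softmax(logits):
    # Subtract the row maximum first for numerical stability
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    # Clip to avoid log(0); y_true is expected to be one-hot
    y_prob = np.clip(y_prob, eps, 1.0)
    return -(y_true * np.log(y_prob)).sum(axis=-1)

logits = np.array([[0.0, 0.0, 0.0]])   # an undecided 3-class prediction
y_true = np.array([[1.0, 0.0, 0.0]])
print(categorical_crossentropy(y_true, softmax(logits)))  # equals log(3) for uniform probabilities
```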

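Listing all layers and removing the input layer

- The input layer is removed because it performs no computation of its own, so there is nothing to predict from it.

- You could also remove every layer other than the conv layers, since this post only uses the 5 conv layers.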

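## Show all layers and remove the Input layer
## pop(0) returns the removed layer itself, not the remaining list, hence the variable name.
## On recent Keras versions nn.layers is rebuilt on every access, so this pop does not alter the model there.
removed_input_layer = nn.layers.pop(0)
nn.layers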
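If you would rather keep only certain kinds of layers, for example just the conv layers as suggested above, filtering by class name is one simple option that does not mutate the model. This is a sketch; `select_layers_by_type` is a hypothetical helper, and matching on the class name is an assumption that works as long as the layer classes keep their usual names.

```python
def select_layers_by_type(layers, type_names=("Conv2D",)):
    """Return the layers whose class name is in type_names, keeping their order."""
    wanted = set(type_names)
    return [layer for layer in layers if type(layer).__name__ in wanted]
```

With the model from this post, `select_layers_by_type(nn.layers)` would return the five Conv2D layers, and `select_layers_by_type(nn.layers, ("Conv2D", "Dense"))` would add the two Dense layers.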

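Defining a function that extracts and displays the features

def feature_layers_visualization(model, data):
    sample = data[:1]  # only the first sample is displayed, so predict on that one alone
    # Skip the Input layer in case it is still present: it has no feature maps of its own
    layers = [layer for layer in model.layers if not isinstance(layer, InputLayer)]
    for i, layer in enumerate(layers, start=1):
        net = Model(model.input, layer.output)  ## one sub-model per layer, used to predict that layer's output
        feature_maps_for_a_layer = net.predict(sample)[0]  # (28,28,channel): each layer's output consists of `channel` sub feature maps
        if feature_maps_for_a_layer.ndim == 3:  ## input is (batch_size,28,28,channel), so one sample should give a 3-D (28,28,channel) array
            size, _, channels = feature_maps_for_a_layer.shape
            cols = 8  # the grid always has 8 columns; the number of rows depends on the layer's channel count
            rows = channels // cols
            grids = np.zeros((rows*size, cols*size))  # canvas that holds every channel of one layer
            for row in range(rows):
                for col in range(cols):
                    grids[row*size:(row+1)*size, col*size:(col+1)*size] = feature_maps_for_a_layer[:, :, col+row*cols]
            plt.figure()
            plt.imshow(grids)
            plt.title("sub feature map of layer%d" % i)
            plt.figure(figsize=(2,2))
            plt.imshow(feature_maps_for_a_layer.sum(axis=2))  # one total feature map: the sum over all channels
            plt.title("total feature map of layer%d" % i)
            plt.axis("off")
            plt.show()
        else:
            # Reached the first layer without spatial feature maps (GlobalAveragePooling2D here)
            print("feature map is not 3-dimensional, stopping")
            break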

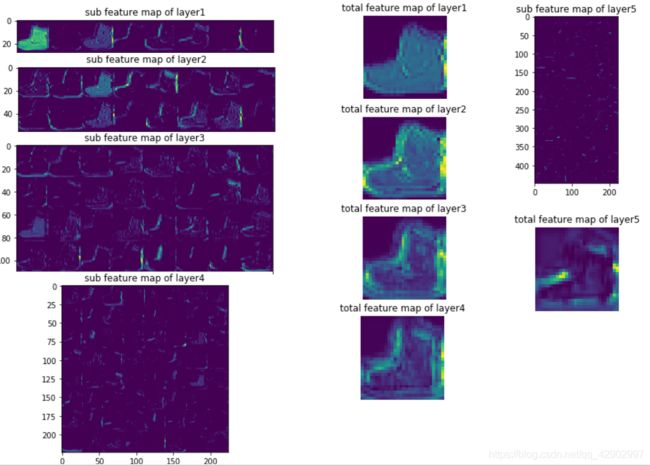
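The core of the function is the step that lays the channels of one layer out on a single canvas. Below is a standalone NumPy sketch of that step. Unlike the nested loops, it uses a reshape and transpose, and it pads with blank tiles when the channel count is not a multiple of the column count; both are my own variations, and `tile_feature_maps` is a hypothetical name.

```python
import numpy as np

def tile_feature_maps(feature_maps, cols=8):
    """Arrange an (H, W, C) activation array into a (rows*H, cols*W) grid."""
    height, width, channels = feature_maps.shape
    rows = -(-channels // cols)  # ceiling division so leftover channels still get a row
    padded = np.zeros((height, width, rows * cols), dtype=feature_maps.dtype)
    padded[:, :, :channels] = feature_maps
    # (H, W, rows, cols) -> (rows, H, cols, W) -> (rows*H, cols*W)
    grid = padded.reshape(height, width, rows, cols).transpose(2, 0, 3, 1)
    return grid.reshape(rows * height, cols * width)

# Ten 2x2 channels on an 8-column grid: two rows, the last six tiles stay blank
demo = np.arange(2 * 2 * 10, dtype=float).reshape(2, 2, 10)
print(tile_feature_maps(demo).shape)  # (4, 16)
```

Channel `k` ends up in grid row `k // cols` and grid column `k % cols`, which is the same placement the loops in the function produce.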

Calling the function and viewing the result

feature_layers_visualization(nn,x_train)
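The "total feature map" drawn for each layer is the sum of that layer's activations over the channel axis (the last slice of a cumulative sum along that axis is the same thing). Because deeper layers have more channels, their sums grow larger, so rescaling to [0, 1] can make the maps easier to compare side by side. The sketch below shows both steps in NumPy; the rescaling is an optional extra that the function in this post does not perform, and the helper names are hypothetical.

```python
import numpy as np

def total_feature_map(feature_maps):
    """Sum an (H, W, C) activation array over its channel axis."""
    return feature_maps.sum(axis=2)

def min_max_normalize(image, eps=1e-8):
    """Rescale an array to roughly [0, 1]; a constant image maps to all zeros."""
    lo, hi = image.min(), image.max()
    return (image - lo) / (hi - lo + eps)

demo = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)
total = total_feature_map(demo)
print(np.allclose(total, np.cumsum(demo, axis=2)[:, :, -1]))  # True
print(min_max_normalize(total).min())
```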

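Conclusion

- Reading the figures from top to bottom, that is, from shallower to deeper layers, the first two layers tend to capture the overall appearance of the input, while the later layers lean more toward picking out edges and more abstract features.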