BatchNorm, LayerNorm, InstanceNorm, GroupNorm, WeightNorm

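While reading about the Transformer today I came across LayerNorm, which reminded me that I had used BatchNorm before and made me wonder how the two differ. Looking for material on this, I found that there are several other normalization methods as well, so I am collecting them here for future reference.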

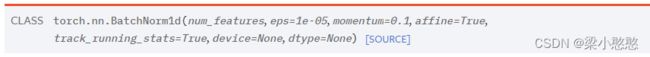

torch.nn.BatchNorm1d

API explanation

Official API documentation: https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm1d.html#torch.nn.BatchNorm1d

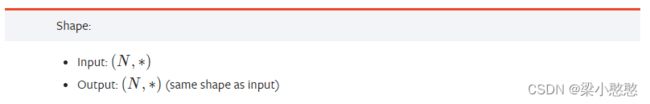

The mean and standard-deviation are calculated per-dimension over the mini-batches and γ and β are learnable parameter vectors of size C (where C is the number of features or channels of the input). By default, the elements of γ are set to 1 and the elements of β are set to 0. The standard-deviation is calculated via the biased estimator, equivalent to torch.var(input, unbiased=False).

Also by default, during training this layer keeps running estimates of its computed mean and variance, which are then used for normalization during evaluation. The running estimates are kept with a default momentum of 0.1.

If track_running_stats is set to False, this layer then does not keep running estimates, and batch statistics are instead used during evaluation time as well.

Because the Batch Normalization is done over the C dimension, computing statistics on (N, L) slices, it’s common terminology to call this Temporal Batch Normalization.
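To make the running estimates concrete, here is a minimal plain-Python sketch (no PyTorch needed) of the update rule given in the documentation, running_new = (1 - momentum) * running_old + momentum * batch_stat. The helper names `update_running` and `eval_normalize` and the per-batch numbers are made up for illustration; the starting values 0 and 1 match how BatchNorm initializes `running_mean` and `running_var`.

```python
import math


def update_running(running, batch_stat, momentum=0.1):
    """Moving-average update used for the running mean and variance."""
    return (1 - momentum) * running + momentum * batch_stat


def eval_normalize(x, running_mean, running_var, eps=1e-5):
    """Evaluation-mode normalization: uses the running estimates, not batch statistics."""
    return (x - running_mean) / math.sqrt(running_var + eps)


# One channel, starting from the initial values of the running buffers.
running_mean, running_var = 0.0, 1.0

# Hypothetical statistics of three training batches for that channel.
for batch_mean, batch_var in [(2.0, 4.0), (2.0, 4.0), (2.0, 4.0)]:
    running_mean = update_running(running_mean, batch_mean)
    running_var = update_running(running_var, batch_var)

print(running_mean, running_var)  # still well short of the batch statistics (2.0, 4.0)

# In evaluation mode, a value equal to the batch mean is therefore not mapped to 0 yet.
y = eval_normalize(2.0, running_mean, running_var)
print(y)
```

With momentum 0.1 the estimates move slowly, so after only a few batches the evaluation-mode output can differ noticeably from the training-mode output for the same input.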

code

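### 1. Implement batch_norm and verify it against the API

import torch

import torch.nn as nn

# The sizes below are assumptions for this walkthrough; the original notes do not state them

batch_size, time_steps, embedding_dim, num_groups = 2, 3, 4, 2

inputx = torch.randn(batch_size, time_steps, embedding_dim)  # N * L * C

# Call the batch_norm API (BatchNorm1d expects N * C * L, hence the transposes)

batch_norm_op = nn.BatchNorm1d(num_features=embedding_dim, affine=False)

bn_y = batch_norm_op(inputx.transpose(-1, -2)).transpose(-1, -2)

# Handwritten batch_norm: per-channel statistics over the N and L dimensions

bn_mean = inputx.mean(dim=(0, 1), keepdim=True)

bn_var = inputx.var(dim=(0, 1), keepdim=True, unbiased=False)

verify_bn_y = (inputx - bn_mean) / (bn_var + 1e-5).sqrt()  # eps is added to the variance, inside the square root

print(bn_y)

print(verify_bn_y)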

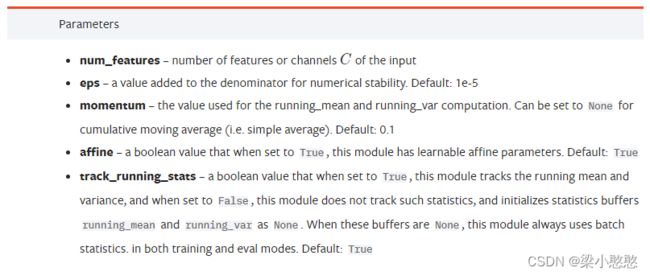

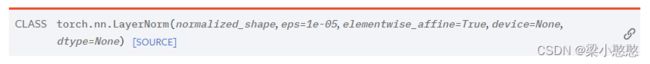

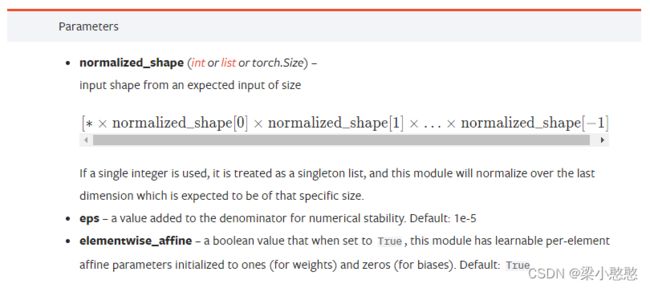
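One detail that is easy to get wrong when writing such a check by hand: the formula in the PyTorch documentation adds eps to the variance inside the square root, y = (x - E[x]) / sqrt(Var[x] + eps). The plain-Python sketch below compares that with adding eps to the standard deviation instead. The helper names and the two sample lists are my own, chosen only to show when the difference matters.

```python
import math


def biased_var(values):
    """Biased (population) variance, matching unbiased=False."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def normalize_eps_inside(values, eps=1e-5):
    """Documented form: divide by sqrt(var + eps)."""
    mean = sum(values) / len(values)
    denom = math.sqrt(biased_var(values) + eps)
    return [(v - mean) / denom for v in values]


def normalize_eps_outside(values, eps=1e-5):
    """Common hand-written variant: divide by std + eps."""
    mean = sum(values) / len(values)
    denom = math.sqrt(biased_var(values)) + eps
    return [(v - mean) / denom for v in values]


samples = {
    "wide": [1.0, 2.0, 3.0, 4.0],           # variance much larger than eps
    "narrow": [1.000, 1.001, 1.002, 1.003],  # variance smaller than eps
}

gaps = {}
for name, data in samples.items():
    inside = normalize_eps_inside(data)
    outside = normalize_eps_outside(data)
    gaps[name] = max(abs(a - b) for a, b in zip(inside, outside))
    print(name, gaps[name])
```

For ordinary inputs the two variants agree to several decimal places, which is why a printed comparison can look identical. For nearly constant features the results diverge, so a check with `torch.allclose` and a tight tolerance would only pass with the eps-inside form.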

torch.nn.LayerNorm

API explanation

Official API documentation:

https://pytorch.org/docs/stable/generated/torch.nn.LayerNorm.html?highlight=layer%20norm#torch.nn.LayerNorm

The mean and standard-deviation are calculated over the last D dimensions, where D is the dimension of normalized_shape. For example, if normalized_shape is (3, 5) (a 2-dimensional shape), the mean and standard-deviation are computed over the last 2 dimensions of the input (i.e. input.mean((-2, -1))). γ and β are learnable affine transform parameters of normalized_shape if elementwise_affine is True. The standard-deviation is calculated via the biased estimator, equivalent to torch.var(input, unbiased=False).

This layer uses statistics computed from input data in both training and evaluation modes.
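To illustrate "the last D dimensions" and the shape of γ and β, here is a small plain-Python sketch for the case D = 2. The function name `layer_norm_last2` and the toy input are assumptions made for this example; the sketch mirrors the documented formula and is not PyTorch's implementation.

```python
import math


def layer_norm_last2(x, gamma, beta, eps=1e-5):
    """Normalize each sample over its last two dimensions, then apply gamma and beta.

    x has shape (N, R, K); gamma and beta have shape (R, K), i.e. normalized_shape.
    """
    out = []
    for sample in x:
        flat = [v for row in sample for v in row]
        mean = sum(flat) / len(flat)
        var = sum((v - mean) ** 2 for v in flat) / len(flat)  # biased estimator
        denom = math.sqrt(var + eps)
        out.append([
            [(v - mean) / denom * gamma[i][j] + beta[i][j] for j, v in enumerate(row)]
            for i, row in enumerate(sample)
        ])
    return out


x = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
    [[10.0, 10.0, 10.0], [40.0, 40.0, 40.0]],
]  # shape (2, 2, 3), so normalized_shape would be (2, 3)

ones = [[1.0] * 3 for _ in range(2)]
zeros = [[0.0] * 3 for _ in range(2)]

# gamma = 1 and beta = 0 leave the normalized values unchanged.
y = layer_norm_last2(x, ones, zeros)

# An elementwise affine transform: scale by 2 and shift by 1.
twos = [[2.0] * 3 for _ in range(2)]
shifted = layer_norm_last2(x, twos, ones)

for sample in y:
    flat = [v for row in sample for v in row]
    print(sum(flat) / len(flat))  # each sample has mean close to 0
```

Each sample gets its own mean and variance, so the second sample is mapped to values close to -1 and +1 regardless of what the first sample contains.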

code

### 2. Implement layer_norm and verify it against the API

# Call the layer_norm API

layer_norm_op = nn.LayerNorm(normalized_shape=embedding_dim, elementwise_affine=False)

ln_y = layer_norm_op(inputx)

# Handwritten layer_norm: per-token statistics over the last (embedding) dimension

ln_mean = inputx.mean(dim=-1, keepdim=True)

ln_var = inputx.var(dim=-1, keepdim=True, unbiased=False)

verify_ln_y = (inputx - ln_mean) / (ln_var + 1e-5).sqrt()  # eps is added to the variance, inside the square root

print(ln_y)

print(verify_ln_y)

torch.nn.InstanceNorm1d

API explanation

Official API documentation:

https://pytorch.org/docs/stable/generated/torch.nn.InstanceNorm1d.html?highlight=instance%20norm#torch.nn.InstanceNorm1d

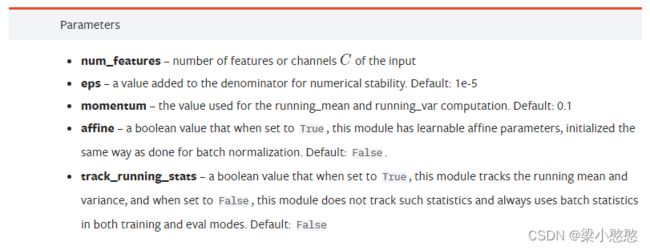

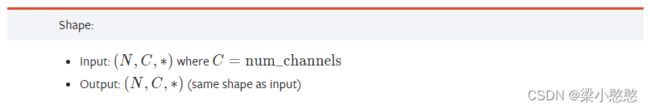

The mean and standard-deviation are calculated per-dimension separately for each object in a mini-batch. γ and β are learnable parameter vectors of size C (where C is the number of features or channels of the input) if affine is True. The standard-deviation is calculated via the biased estimator, equivalent to torch.var(input, unbiased=False).

By default, this layer uses instance statistics computed from input data in both training and evaluation modes.

If track_running_stats is set to True, during training this layer keeps running estimates of its computed mean and variance, which are then used for normalization during evaluation. The running estimates are kept with a default momentum of 0.1.
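"Separately for each object in a mini-batch" means that, with the default settings, the output for one sample does not depend on the other samples in the batch. A minimal plain-Python sketch of that property follows, using the API's (N, C, L) layout; the function name `instance_norm` and the two toy batches are assumptions for illustration.

```python
import math


def instance_norm(x, eps=1e-5):
    """x has shape (N, C, L); every (sample, channel) row is normalized over L."""
    out = []
    for sample in x:
        normed = []
        for channel in sample:
            mean = sum(channel) / len(channel)
            var = sum((v - mean) ** 2 for v in channel) / len(channel)  # biased estimator
            denom = math.sqrt(var + eps)
            normed.append([(v - mean) / denom for v in channel])
        out.append(normed)
    return out


first_sample = [[1.0, 2.0, 3.0], [0.0, 10.0, 20.0]]

# Two batches that share the first sample but differ in the second one.
batch_a = [first_sample, [[5.0, 5.0, 8.0], [-1.0, 0.0, 1.0]]]
batch_b = [first_sample, [[100.0, 200.0, 900.0], [7.0, 7.0, 7.5]]]

out_a = instance_norm(batch_a)
out_b = instance_norm(batch_b)

# The first sample is normalized identically in both batches.
same = out_a[0] == out_b[0]
print(same)
```

BatchNorm would not pass this check in training mode, because its per-channel statistics are pooled over all samples in the batch.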

code

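### 3. Implement instance_norm and verify it against the API

# Call the instance_norm API (InstanceNorm1d expects N * C * L, hence the transposes)

ins_norm_op = nn.InstanceNorm1d(num_features=embedding_dim)

ins_y = ins_norm_op(inputx.transpose(-1, -2)).transpose(-1, -2)

# Handwritten instance_norm: per-sample, per-channel statistics over the L dimension

ins_mean = inputx.mean(dim=1, keepdim=True)

ins_var = inputx.var(dim=1, keepdim=True, unbiased=False)

verify_ins_y = (inputx - ins_mean) / (ins_var + 1e-5).sqrt()  # eps is added to the variance, inside the square root

print(ins_y)

print(verify_ins_y)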

torch.nn.GroupNorm

API explanation

Official API documentation:

https://pytorch.org/docs/stable/generated/torch.nn.GroupNorm.html?highlight=group%20norm#torch.nn.GroupNorm

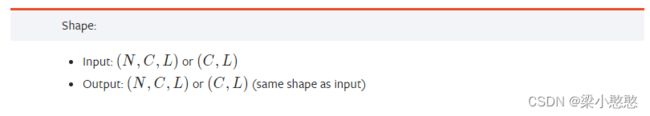

The input channels are separated into num_groups groups, each containing num_channels / num_groups channels. num_channels must be divisible by num_groups. The mean and standard-deviation are calculated separately over each group. γ and β are learnable per-channel affine transform parameter vectors of size num_channels if affine is True. The standard-deviation is calculated via the biased estimator, equivalent to torch.var(input, unbiased=False).

This layer uses statistics computed from input data in both training and evaluation modes.
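The grouping is easiest to see at its two extremes: with num_groups equal to num_channels every channel is its own group (the InstanceNorm case), and with num_groups = 1 all channels of a sample share one mean and variance. Below is a plain-Python sketch under the (N, C, L) layout; `group_norm` and the toy input are hypothetical names and data, and the affine parameters are left out.

```python
import math


def group_norm(x, num_groups, eps=1e-5):
    """x has shape (N, C, L); all channels in one group share a mean and variance."""
    num_channels = len(x[0])
    if num_channels % num_groups != 0:
        raise ValueError("num_channels must be divisible by num_groups")
    size = num_channels // num_groups
    out = []
    for sample in x:
        normed = []
        for start in range(0, num_channels, size):
            group = sample[start:start + size]
            flat = [v for channel in group for v in channel]
            mean = sum(flat) / len(flat)
            var = sum((v - mean) ** 2 for v in flat) / len(flat)  # biased estimator
            denom = math.sqrt(var + eps)
            normed.extend([(v - mean) / denom for v in channel] for channel in group)
        out.append(normed)
    return out


# One sample with C = 4 channels of length L = 3.
x = [[[1.0, 2.0, 3.0], [4.0, 6.0, 8.0], [0.0, 0.0, 3.0], [10.0, 20.0, 60.0]]]

per_channel = group_norm(x, num_groups=4)  # each channel normalized on its own
paired = group_norm(x, num_groups=2)       # channels (0, 1) and (2, 3) share statistics
single = group_norm(x, num_groups=1)       # all four channels share statistics

print([round(sum(ch), 6) for ch in per_channel[0]])  # every channel sums to about 0
print([round(sum(ch), 6) for ch in paired[0]])       # only each pair sums to about 0
```

In the paired case channel 0 ends up entirely below zero, because its values are smaller than the mean of the group it shares with channel 1.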

code

### 4. Implement group_norm and verify it against the API

# Call the group_norm API (num_groups must divide embedding_dim)

group_norm_op = nn.GroupNorm(num_groups=num_groups, num_channels=embedding_dim)

group_y = group_norm_op(inputx.transpose(-1, -2)).transpose(-1, -2)

# Handwritten group_norm: split the channels into groups, then normalize each group per sample

group_inputxs = torch.split(inputx, split_size_or_sections=embedding_dim // num_groups, dim=-1)

results = []

for g_inputx in group_inputxs:

    gn_mean = g_inputx.mean(dim=(1, 2), keepdim=True)

    gn_var = g_inputx.var(dim=(1, 2), keepdim=True, unbiased=False)

    gn_result = (g_inputx - gn_mean) / (gn_var + 1e-5).sqrt()  # eps is added to the variance, inside the square root

    results.append(gn_result)

verify_gn_y = torch.cat(results, dim=-1)

print(group_y)

print(verify_gn_y)

Summary of the four normalization methods

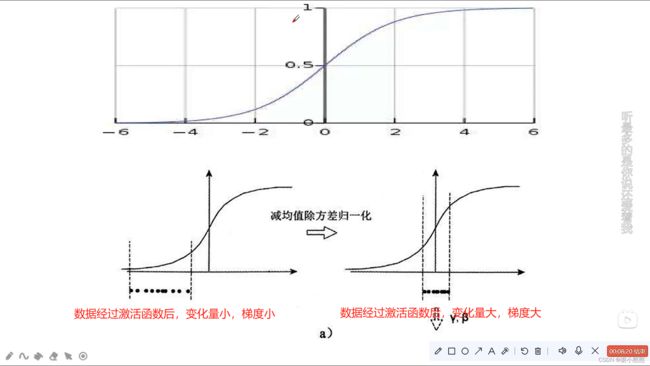

What normalization does

There are two explanations:

- From “自由时有船”

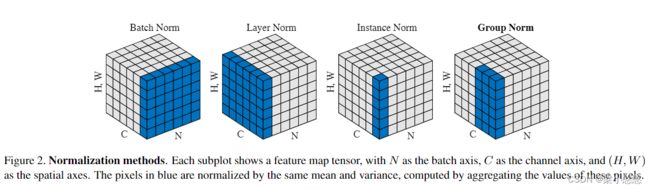

Differences between the four normalization methods

Wu and He, “Group Normalization”, arXiv:1803.08494 [cs.CV]
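The difference between the four methods comes down to which elements share one mean and variance. The plain-Python sketch below counts, for an input of shape (N, C, L), how many separate statistics each method computes and how many elements go into each. The sizes and the `rules` table are assumptions for illustration. LayerNorm appears twice: once over C and L per sample, and once over C only per position, which is the variant used with `normalized_shape=embedding_dim` earlier in these notes.

```python
from collections import Counter
from itertools import product

N, C, L, G = 2, 4, 3, 2  # batch size, channels, length, number of groups (hypothetical)
group_size = C // G

# Each rule maps an element index (n, c, t) to the statistic it contributes to.
rules = {
    "BatchNorm": lambda n, c, t: c,
    "LayerNorm (over C and L)": lambda n, c, t: n,
    "LayerNorm (over C only)": lambda n, c, t: (n, t),
    "InstanceNorm": lambda n, c, t: (n, c),
    "GroupNorm": lambda n, c, t: (n, c // group_size),
}

summary = {}
for name, rule in rules.items():
    counts = Counter(rule(n, c, t) for n, c, t in product(range(N), range(C), range(L)))
    # Every statistic of a given method covers the same number of elements.
    summary[name] = (len(counts), next(iter(counts.values())))
    print(f"{name}: {summary[name][0]} statistics, {summary[name][1]} elements each")
```

Only the BatchNorm rule ignores the sample index n, which is why it is the only one of the four whose statistics depend on the batch.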

torch.nn.utils.weight_norm

API explanation

Official API documentation:

https://pytorch.org/docs/stable/generated/torch.nn.utils.weight_norm.html?highlight=weight%20norm#torch.nn.utils.weight_norm

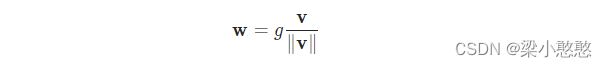

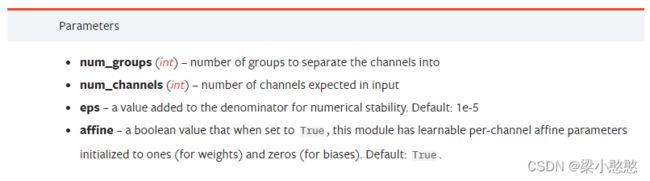

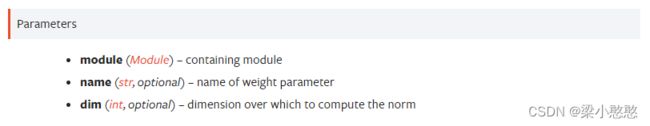

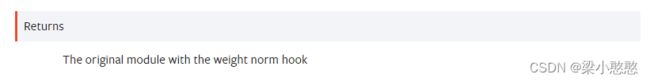

Applies weight normalization to a parameter in the given module.

Weight normalization is a reparameterization that decouples the magnitude of a weight tensor from its direction. This replaces the parameter specified by name (e.g. ‘weight’ ) with two parameters: one specifying the magnitude (e.g. ‘weight_g’ ) and one specifying the direction (e.g. ‘weight_v’ ). Weight normalization is implemented via a hook that recomputes the weight tensor from the magnitude and direction before every forward() call.

By default, with dim=0, the norm is computed independently per output channel/plane. To compute a norm over the entire weight tensor, use dim=None.
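The decoupling of magnitude and direction can be written as w = g * v / ||v||. Here is a minimal plain-Python sketch for a 2-D weight with one norm per output row (the dim=0 case); the helper name `weight_from_g_v` and the toy weight are assumptions, and the hook machinery of the real function is not modeled.

```python
import math


def row_norm(row):
    """Euclidean norm of one weight row."""
    return math.sqrt(sum(e * e for e in row))


def weight_from_g_v(g, v):
    """Rebuild the weight row by row: w_i = g_i * v_i / ||v_i||."""
    return [[g_i * e / row_norm(v_i) for e in v_i] for g_i, v_i in zip(g, v)]


weight = [[3.0, 4.0], [6.0, 8.0], [0.0, 2.0]]  # shape (out_features=3, in_features=2)

# One valid decomposition: v is the weight itself, g holds one norm per output row.
v = [row[:] for row in weight]
g = [row_norm(row) for row in weight]

rebuilt = weight_from_g_v(g, v)  # reproduces the original weight

# Changing g rescales a row without changing its direction.
rescaled = weight_from_g_v([1.0, 10.0, 2.0], v)
print(rescaled[0])  # first row now has unit length, same direction as [3, 4]

# dim=None corresponds to a single norm over the whole tensor.
g_all = math.sqrt(sum(e * e for row in weight for e in row))
print(g, g_all)
```

Note that rows 0 and 1 of the toy weight point in the same direction and differ only in g. As far as I know, recent PyTorch releases mark `torch.nn.utils.weight_norm` as deprecated in favor of `torch.nn.utils.parametrizations.weight_norm`, so check the documentation for the version you use.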

code

### 5. Implement weight_norm and verify it against the API

# Call the weight_norm API

linear = nn.Linear(in_features=embedding_dim, out_features=3, bias=False)

wn_linear = torch.nn.utils.weight_norm(module=linear)

wn_linear_output = wn_linear(inputx)

print('wn_linear_output: ', wn_linear_output)

# Handwritten weight_norm: weight = magnitude * direction, with one norm per output row

print('linear.weight.shape: ', linear.weight.shape)

weight_direction = linear.weight / linear.weight.norm(dim=1, keepdim=True)  # unit-norm rows

print('weight_direction.shape: ', weight_direction.shape)

weight_magnitude = linear.weight.norm(dim=1, keepdim=True)

print('weight_magnitude.shape: ', weight_magnitude.shape)

# output = (x @ direction^T) * magnitude^T, with the magnitude broadcast over the output features

verify_wn_linear_output = (inputx @ weight_direction.transpose(-1, -2)) * weight_magnitude.transpose(-1, -2)

print('verify_wn_linear_output: ', verify_wn_linear_output)

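References

45. Principles of five normalization methods, with line-by-line handwritten PyTorch implementations (BatchNorm/LayerNorm/InsNorm/GroupNorm/WeightNorm)

Common interview question 2: normalization (BN, LN, IN, GN)

28 Batch Normalization [Dive into Deep Learning v2]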

https://pytorch.org/docs/stable/index.html