论文阅读:Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

文章目录

-

-

- 1、论文总述

- 2、Why does batch normalization work

- 3、BN加到卷积层之后的原因

- 4、加入BN之后,训练时数据分布的变化

- 5、与BN配套的一些操作

- 参考文献

-

1、论文总述

本篇论文提出了一个对CNN发展影响深远的操作:BN。BN是对CNN中间层feature map在激活函数前进行归一化操作,让他们的分布不至于那么散,这样的数据分布经过激活函数之后更加有效,不至于进入到Tanh和 Sigmoid的饱和区, 至于RELU 激活函数也有一定的效果。

论文的动机是为了改善CNN中的Internal Covariate Shift (ICS)效应,就是不同中间层的feature map在训练过程由于浅层参数的不固定,它们的分布也会随着训练在一直变化,深层feature map受到的影响更大,这样CNN训练起来比较困难。

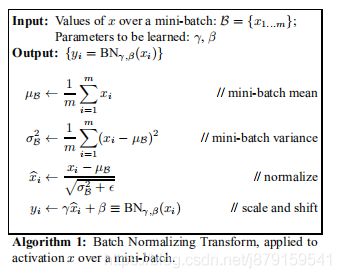

BN的归一化操作就是将BxNxHxW的数据中的BxHxW拿出来算均值和方差,通过算出来的均值和方差进行归一化操作,然后再通过可学习的两个参数beta和gamma进行尺度和平移缩放,beta和gamma的数据维度为通道个数。

We refer to the change in the distributions of internal

nodes of a deep network, in the course of training, as Internal Covariate Shift. Eliminating it offers a promise of faster training. We propose a new mechanism, which we call Batch Normalization, that takes a step towards reducing internal covariate shift, and in doing so dramatically accelerates the training of deep neural nets. It accomplishes this via a normalization step that fixes the

means and variances of layer inputs. Batch Normalization

also has a beneficial effect on the gradient flow through

the network, by reducing the dependence of gradients

on the scale of the parameters or of their initial values.

This allows us to use much higher learning rates without the risk of divergence. Furthermore, batch normalization regularizes the model and reduces the need for

Dropout (Srivastava et al., 2014). Finally, Batch Normalization makes it possible to use saturating nonlinearities

by preventing the network from getting stuck in the saturated modes.

2、Why does batch normalization work

(1) We know that normalizing input features can speed up learning, one

intuition is that doing same thing for hidden layers should also work.(2) solve the problem of covariance shift

Suppose you have trained your cat-recognizing network use black cat,

but evaluate on colored cats, you will see data distribution

changing(called covariance shift). Even there exist a true boundary

separate cat and non-cat, you can’t expect learn that boundary only

with black cat. So you may need to retrain the network.For a neural network, suppose input distribution is constant, so

output distribution of a certain hidden layer should have been

constant. But as the weights of that layer and previous layers

changing in the training phase, the output distribution will change,

this cause covariance shift from the perspective of layer after it.

Just like cat-recognizing network, the following need to re-train. To

recover this problem, we use batch normal to force a zero-mean and

one-variance distribution. It allow layer after it to learn

independently from previous layers, and more concentrate on its own

task, and so as to speed up the training process.(3) Batch normal as regularization(slightly)

In batch normal, mean and variance is computed on mini-batch, which

consist not too much samples. So the mean and variance contains noise.

Just like dropout, it adds some noise to hidden layer’s

activation(dropout randomly multiply activation by 0 or 1).This is an extra and slight effect, don’t rely on it as a regularizer.

注:现在关于BN work的原理解释还不是很明朗,这也只是论文里的初步解释,后续有相关的论文反驳这个ICS的解释。

BN的效果受到batch的限值,在分类网络中,Batch基本得8以上,才有提升效果,在目标检测中,由于网络模型比较大,对显卡显存要求比较高,目标检测的batch一般至少得2以上吧。

3、BN加到卷积层之后的原因

![]()

where W and b are learned parameters of the model, and

g(·) is the nonlinearity such as sigmoid or ReLU. This formulation covers both fully-connected and convolutional layers.

We add the BN transform immediately before the

nonlinearity, by normalizing x = Wu+ b. We could have

also normalized the layer inputs u, but since u is likely

the output of another nonlinearity, the shape of its distribution is likely to change during training, and constraining (加到卷积层之前,u变化太多)

its first and second moments would not eliminate the covariate shift. In contrast, Wu + b is more likely to have

a symmetric, non-sparse distribution, that is “more Gaussian” (Hyv¨arinen & Oja, 2000); normalizing it is likely to

produce activations with a stable distribution.

注意:加入BN层之后,Wu+ b中的Bias可以去掉了,原因如下:

b的作用被beta取代了!

4、加入BN之后,训练时数据分布的变化

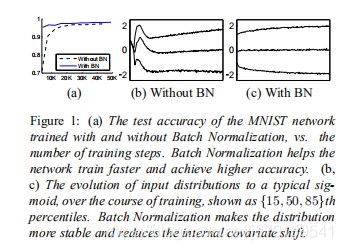

可以看到,比不加BN更早的稳定下来。

5、与BN配套的一些操作

Simply adding Batch Normalization to a network does not

take full advantage of our method. To do so, we further

changed the network and its training parameters, as follows:

Increase learning rate. In a batch-normalized model,

we have been able to achieve a training speedup from

higher learning rates, with no ill side effects (Sec. 3.3).

Remove Dropout. As described in Sec. 3.4, Batch Normalization fulfills some of the same goals as Dropout. Removing Dropout from Modified BN-Inception speeds up training, without increasing overfitting.

Reduce the L2 weight regularization. While in Inception an L2 loss on the model parameters controls overfitting, in Modified BN-Inception the weight of this loss is reduced by a factor of 5. We find that this improves the

accuracy on the held-out validation data.

Accelerate the learning rate decay. In training Inception, learning rate was decayed exponentially. Because our network trains faster than Inception, we lower the learning rate 6 times faster.

Remove Local Response Normalization While Inception and other networks (Srivastava et al., 2014) benefit

from it, we found that with Batch Normalization it is not

necessary.

Shuffle training examples more thoroughly. We enabled

within-shard shuffling of the training data, which prevents

the same examples from always appearing in a mini-batch

together. This led to about 1% improvements in the validation accuracy, which is consistent with the view of Batch Normalization as a regularizer (Sec. 3.4): the randomization inherent in our method should be most bene-

ficial when it affects an example differently each time it is

seen.

Reduce the photometric distortions. Because batchnormalized networks train faster and observe each training example fewer times, we let the trainer focus on more “real” images by distorting them less.

参考文献

[1] 来聊聊批归一化BN(Batch Normalization)层

[2] 【基础算法】六问透彻理解BN(Batch Normalization)