The Twists and Turns of Training Faster R-CNN on My Own Dataset (Windows + Caffe)

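When I trained on my own dataset under Windows I ran into a serious problem: the training loss converged nicely, yet the detection results were either a very low mAP (never above 0.5) or an mAP stuck at the same very low value every time.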

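At first I suspected the following causes:

1) Using Python 3, which does not match the version Faster R-CNN expects. Changing it did not help!

2) Poor dataset quality: too few images, insufficient resolution, image sizes that do not meet the requirements. Fixing these did not help either!

3) Files missing from my clone of Faster R-CNN. Nothing was missing!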

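This blog post completely saved me: https://blog.csdn.net/zhangzm0128/article/details/72593745

Its steps can be followed one by one and they all work. In case the post gets deleted, and to record the problems I ran into myself, I am writing everything down here.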

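Training on your own dataset:

I. Building your own dataset:

1. Requirements for the training images: whether you collect pictures online or reuse someone else's dataset, remember that the images must not be too small; width and height should preferably be at least 150 pixels. The images need to be JPEG. [This matters a lot and affects the quality of the trained model.]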
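The size and format requirement above can be checked mechanically before annotating anything. A minimal sketch, assuming Pillow is installed for the optional file check; `image_ok`, `check_file`, and the `MIN_SIDE` default are illustrative names built from the rule of thumb above, not part of py-faster-rcnn:

```python
MIN_SIDE = 150  # rule-of-thumb minimum from the text, not a hard limit


def image_ok(width, height, fmt, min_side=MIN_SIDE):
    """Return True if an image meets the size and JPEG requirements."""
    big_enough = width >= min_side and height >= min_side
    return big_enough and fmt.upper() in ("JPEG", "JPG")


def check_file(path):
    """Open an image with Pillow (assumed installed) and apply image_ok."""
    from PIL import Image  # imported lazily so image_ok works without Pillow
    with Image.open(path) as im:
        return image_ok(im.size[0], im.size[1], im.format or "")
```

Running `check_file` over every file in JPEGImages before training gives a quick list of images to replace or resize.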

2. Creating the .xml files:

1) I used LabelImg. [The dataset is my own and not large, so I annotated it by hand.]

2) The blog post above also includes code that generates the .xml files for large datasets. I have not tried it yet, but here it is:

    from lxml.etree import Element, SubElement, tostring  # pretty_print needs lxml


    def write_xml(bbox, w, h, iter):
        '''
        bbox: the class and coordinate records (dicts) saved for the current image
        w, h: width and height of the current image
        iter: index of the image (a string, used as the file name)
        '''
        root = Element("annotation")
        folder = SubElement(root, "folder")  # 1
        folder.text = "JPEGImages"
        filename = SubElement(root, "filename")  # 1
        filename.text = iter
        path = SubElement(root, "path")  # 1
        # change this path to your own
        path.text = 'D:\\py-faster-rcnn\\data\\VOCdevkit2007\\VOC2007\\JPEGImages' + '\\' + iter + '.jpg'
        source = SubElement(root, "source")  # 1
        database = SubElement(source, "database")  # 2
        database.text = "Unknown"
        size = SubElement(root, "size")  # 1
        width = SubElement(size, "width")  # 2
        height = SubElement(size, "height")  # 2
        depth = SubElement(size, "depth")  # 2
        width.text = str(w)
        height.text = str(h)
        depth.text = '3'
        segmented = SubElement(root, "segmented")  # 1
        segmented.text = '0'
        for i in bbox:
            obj = SubElement(root, "object")  # 1
            name = SubElement(obj, "name")  # 2
            name.text = i['cls']
            pose = SubElement(obj, "pose")  # 2
            pose.text = "Unspecified"
            truncated = SubElement(obj, "truncated")  # 2
            truncated.text = '0'
            difficult = SubElement(obj, "difficult")  # 2
            difficult.text = '0'
            bndbox = SubElement(obj, "bndbox")  # 2
            xmin = SubElement(bndbox, "xmin")  # 3
            ymin = SubElement(bndbox, "ymin")  # 3
            xmax = SubElement(bndbox, "xmax")  # 3
            ymax = SubElement(bndbox, "ymax")  # 3
            xmin.text = str(i['xmin'])
            ymin.text = str(i['ymin'])
            xmax.text = str(i['xmax'])
            ymax.text = str(i['ymax'])
        xml_bytes = tostring(root, pretty_print=True)
        # change this path to your own as well; 'wb' because tostring returns bytes
        with open('D:/py-faster-rcnn/data/VOCdevkit2007/VOC2007/Annotations/' + iter + '.xml', 'wb') as f:
            f.write(xml_bytes)
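Whichever way the annotations are produced, it can help to read one back and confirm that every box lies inside the image before training. A minimal sketch using only the standard library; `check_annotation` is a hypothetical helper, not something from the original post or from py-faster-rcnn, and it assumes integer coordinates in the tags written above:

```python
import xml.etree.ElementTree as ET


def check_annotation(xml_text):
    """Return (class name, box) pairs whose box falls outside the image."""
    root = ET.fromstring(xml_text)
    w = int(root.findtext("size/width"))
    h = int(root.findtext("size/height"))
    problems = []
    for obj in root.findall("object"):
        name = obj.findtext("name")
        box = [int(obj.findtext("bndbox/" + key))
               for key in ("xmin", "ymin", "xmax", "ymax")]
        xmin, ymin, xmax, ymax = box
        # a valid box is non-empty and stays within the image bounds
        if not (0 <= xmin < xmax <= w and 0 <= ymin < ymax <= h):
            problems.append((name, box))
    return problems
```

An empty list means the file passed; anything else names the class and the offending coordinates.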

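3. Splitting into training, test, and validation sets: [the blog post gives MATLAB code, but I do not have MATLAB installed, so I used the Python code below]: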

    import os
    import random

    trainval_percent = 0.7  # fraction of all samples that go into trainval
    train_percent = 0.7     # fraction of trainval that goes into train

    # ..\\ refers to the directory where your Faster R-CNN is stored
    xmlfilepath = '..\\data\\VOCdevkit2007\\VOC2007\\Annotations'
    txtsavepath = '..\\data\\VOCdevkit2007\\VOC2007\\ImageSets\\Main'

    total_xml = os.listdir(xmlfilepath)
    num = len(total_xml)
    indices = range(num)
    tv = int(num * trainval_percent)
    tr = int(tv * train_percent)
    trainval = random.sample(indices, tv)
    train = random.sample(trainval, tr)

    ftrainval = open(os.path.join(txtsavepath, 'trainval.txt'), 'w')
    ftest = open(os.path.join(txtsavepath, 'test.txt'), 'w')
    ftrain = open(os.path.join(txtsavepath, 'train.txt'), 'w')
    fval = open(os.path.join(txtsavepath, 'val.txt'), 'w')

    for i in indices:
        name = total_xml[i][:-4] + '\n'  # strip the '.xml' extension
        if i in trainval:
            ftrainval.write(name)
            if i in train:
                ftrain.write(name)
            else:
                fval.write(name)
        else:
            ftest.write(name)

    ftrainval.close()
    ftrain.close()
    fval.close()
    ftest.close()

4. Where the dataset goes: under ..\data\VOCdevkit2007\VOC2007\

| Folder | Contents |
| --- | --- |
| Annotations | .xml files |
| ImageSets->Main | (trainval, train, val, test).txt files |
| JPEGImages | .jpg files |
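Since the three folders have to agree with each other, a quick consistency check can catch a missing image or annotation before Caffe does. A minimal sketch, assuming the layout in the table above; `missing_files` is a hypothetical helper name:

```python
import os


def missing_files(voc_root, split="trainval"):
    """List image ids in ImageSets/Main/<split>.txt lacking an .xml or .jpg."""
    list_path = os.path.join(voc_root, "ImageSets", "Main", split + ".txt")
    with open(list_path) as f:
        ids = [line.strip() for line in f if line.strip()]
    missing = []
    for image_id in ids:
        xml_path = os.path.join(voc_root, "Annotations", image_id + ".xml")
        jpg_path = os.path.join(voc_root, "JPEGImages", image_id + ".jpg")
        if not (os.path.isfile(xml_path) and os.path.isfile(jpg_path)):
            missing.append(image_id)
    return missing
```

Calling it once per split (`trainval`, `train`, `val`, `test`) with the VOC2007 folder as `voc_root` should return an empty list each time.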

II. Adapting the training code to your dataset [using vgg_cnn_m_1024 as the example]

1. The prototxt files [open: models\pascal_voc\VGG_CNN_M_1024\faster_rcnn_end2end\]

1) train.prototxt [four places: input-data, roi-data, cls_score, bbox_pred]

    layer {
      name: 'input-data'
      type: 'Python'
      top: 'data'
      top: 'im_info'
      top: 'gt_boxes'
      python_param {
        module: 'roi_data_layer.layer'
        layer: 'RoIDataLayer'
        param_str: "'num_classes': 3"  # change to your number of classes + 1
      }
    }

    layer {
      name: 'roi-data'
      type: 'Python'
      bottom: 'rpn_rois'
      bottom: 'gt_boxes'
      top: 'rois'
      top: 'labels'
      top: 'bbox_targets'
      top: 'bbox_inside_weights'
      top: 'bbox_outside_weights'
      python_param {
        module: 'rpn.proposal_target_layer'
        layer: 'ProposalTargetLayer'
        param_str: "'num_classes': 3"  # change to your number of classes + 1
      }
    }

    layer {
      name: "cls_score"
      type: "InnerProduct"
      bottom: "fc7"
      top: "cls_score"
      param {
        lr_mult: 1
      }
      param {
        lr_mult: 2
      }
      inner_product_param {
        num_output: 3  # change to your number of classes + 1
        weight_filler {
          type: "gaussian"
          std: 0.01
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }

    layer {
      name: "bbox_pred"
      type: "InnerProduct"
      bottom: "fc7"
      top: "bbox_pred"
      param {
        lr_mult: 1
      }
      param {
        lr_mult: 2
      }
      inner_product_param {
        num_output: 12  # change to (your number of classes + 1) * 4
        weight_filler {
          type: "gaussian"
          std: 0.001
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }
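The four values in train.prototxt all follow from the number of classes, so they can be computed rather than edited by hand. A small sketch of that arithmetic; `layer_sizes` and its dictionary keys are illustrative labels, not Caffe or py-faster-rcnn identifiers:

```python
def layer_sizes(class_names):
    """Return the values the four train.prototxt fields should hold.

    class_names lists only the foreground classes; background is added here.
    """
    n = len(class_names) + 1            # +1 for '__background__'
    return {
        "input-data num_classes": n,
        "roi-data num_classes": n,
        "cls_score num_output": n,
        "bbox_pred num_output": 4 * n,  # four box coordinates per class
    }
```

With two foreground classes this gives 3 and 12, the values in the listing above; the stock 20-class PASCAL VOC setup gives 21 and 84.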

2) test.prototxt

    layer {
      name: "relu7"
      type: "ReLU"
      bottom: "fc7"
      top: "fc7"
    }

    layer {
      name: "cls_score"
      type: "InnerProduct"
      bottom: "fc7"
      top: "cls_score"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      inner_product_param {
        num_output: 3  # change to your number of classes + 1
        weight_filler {
          type: "gaussian"
          std: 0.01
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }

    layer {
      name: "bbox_pred"
      type: "InnerProduct"
      bottom: "fc7"
      top: "bbox_pred"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      inner_product_param {
        num_output: 12  # change to (your number of classes + 1) * 4
        weight_filler {
          type: "gaussian"
          std: 0.001
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }

2. imdb.py [open: lib\datasets\], two changes

    def append_flipped_images(self):
        num_images = self.num_images
        widths = [PIL.Image.open(self.image_path_at(i)).size[0]
                  for i in xrange(num_images)]  # changed here
        for i in xrange(num_images):
            boxes = self.roidb[i]['boxes'].copy()
            oldx1 = boxes[:, 0].copy()
            oldx2 = boxes[:, 2].copy()
            boxes[:, 0] = widths[i] - oldx2 - 1
            boxes[:, 2] = widths[i] - oldx1 - 1
            for b in range(len(boxes)):
                if boxes[b][2] < boxes[b][0]:
                    boxes[b][0] = 0  # changed here
            assert (boxes[:, 2] >= boxes[:, 0]).all()
            entry = {'boxes': boxes,
                     'gt_overlaps': self.roidb[i]['gt_overlaps'],
                     'gt_classes': self.roidb[i]['gt_classes'],
                     'flipped': True}
            self.roidb.append(entry)
        self._image_index = self._image_index * 2
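The flip in this function is plain index arithmetic on the x coordinates. A per-box sketch in pure Python, without NumPy, that mirrors the loop above including the fallback that resets the left edge; `flip_box` is an illustrative name, not a function in imdb.py:

```python
def flip_box(box, width):
    """Mirror an (x1, y1, x2, y2) box horizontally in an image of given width."""
    x1, y1, x2, y2 = box
    new_x1 = width - x2 - 1
    new_x2 = width - x1 - 1
    if new_x2 < new_x1:
        # same fallback as the patch above: reset the left edge to 0
        new_x1 = 0
    return (new_x1, y1, new_x2, y2)
```

For a well-formed box, flipping twice returns the original coordinates, which is a cheap sanity check on annotation data.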

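3. Modify the 5 RPN layer files

In the files in the directory shown below, change every param_str_ to param_str. [If you skip this you will get errors anyway; you can also fix them one at a time wherever the errors point, it is just more tedious QAQ]

[Screenshot of the directory; image not available]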

4. Modify pascal_voc.py:

1) In self._classes, '__background__' is the background class; leave it alone. Replace the entries below it with your own labels.

    def __init__(self, image_set, year, devkit_path=None):
        imdb.__init__(self, 'voc_' + year + '_' + image_set)
        self._year = year
        self._image_set = image_set
        self._devkit_path = self._get_default_path() if devkit_path is None \
                            else devkit_path
        self._data_path = os.path.join(self._devkit_path, 'VOC' + self._year)
        self._classes = ('__background__',  # always index 0
                         'face')  # change the classes here
        self._class_to_ind = dict(zip(self.classes, xrange(self.num_classes)))
        self._image_ext = '.jpg'
        self._image_index = self._load_image_set_index()
        # Default to roidb handler
        self._roidb_handler = self.selective_search_roidb
        self._salt = str(uuid.uuid4())
        self._comp_id = 'comp4'

2) Modify the following two functions, otherwise the test stage is certain to fail.

    def _get_voc_results_file_template(self):
        # VOCdevkit/results/VOC2007/Main/_det_test_aeroplane.txt
        filename = self._get_comp_id() + '_det_' + self._image_set + '_{:s}.txt'
        path = os.path.join(
            self._devkit_path,
            'VOC' + self._year,
            'Main',
            '{}' + '_test.txt')
        return path

    def _write_voc_results_file(self, all_boxes):
        for cls_ind, cls in enumerate(self.classes):
            if cls == '__background__':
                continue
            print 'Writing {} VOC results file'.format(cls)
            filename = self._get_voc_results_file_template().format(cls)
            with open(filename, 'w+') as f:
                for im_ind, index in enumerate(self.image_index):
                    dets = all_boxes[cls_ind][im_ind]
                    if dets == []:
                        continue
                    # the VOCdevkit expects 1-based indices
                    for k in xrange(dets.shape[0]):
                        f.write('{:s} {:.3f} {:.1f} {:.1f} {:.1f} {:.1f}\n'.
                                format(index, dets[k, -1],
                                       dets[k, 0] + 1, dets[k, 1] + 1,
                                       dets[k, 2] + 1, dets[k, 3] + 1))

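5. Modify config.py: [this file also lets you change other settings, such as how many iterations pass between model snapshots]

Change the proposal method for both training and testing to gt:

    # Train using these proposals
    __C.TRAIN.PROPOSAL_METHOD = 'gt'

    # Test using these proposals
    __C.TEST.PROPOSAL_METHOD = 'gt'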

III. Start training:

1. Delete the cache files: before every training run, delete the files in data\cache and data\VOCdevkit2007\annotations_cache.

[I have never actually seen annotations_cache.]
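Because the cache has to be cleared before every run, the step is easy to script. A minimal sketch, assuming it is pointed at the py-faster-rcnn root and that the two folder paths are exactly the ones named above; `clear_caches` is a hypothetical helper, and a folder that does not exist (as with annotations_cache in my case) is simply skipped:

```python
import os
import shutil

CACHE_DIRS = (
    os.path.join("data", "cache"),
    os.path.join("data", "VOCdevkit2007", "annotations_cache"),
)


def clear_caches(repo_root):
    """Delete everything inside the cache folders; return the folders emptied."""
    emptied = []
    for rel in CACHE_DIRS:
        path = os.path.join(repo_root, rel)
        if not os.path.isdir(path):
            continue  # nothing to clear if the folder was never created
        for entry in os.listdir(path):
            full = os.path.join(path, entry)
            if os.path.isdir(full):
                shutil.rmtree(full)
            else:
                os.remove(full)
        emptied.append(rel)
    return emptied
```

The folders themselves are kept so that the next training run can write fresh cache files into them.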

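2. Start training: open Git Bash in the py-faster-rcnn root directory [you need Git installed] and run:

    ./experiments/scripts/faster_rcnn_end2end.sh 0 VGG_CNN_M_1024 pascal_voc

If you see output like the following, training has started successfully! [You can also tune some of the training parameters in experiments\scripts\faster_rcnn_end2end.sh, or train with the VGG16 or ZF models instead.]

[Screenshot of the training output; image not available]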

IV. Testing

1. Create your own demo.py: list the images you want to test in im_names and put them in the data\demo folder.

    CLASSES = ('__background__',
               'face')

    NETS = {'vgg16': ('VGG16',
                      'VGG16_faster_rcnn_final.caffemodel'),
            'vgg1024': ('VGG_CNN_M_1024',  # this is my own model
                        'vgg_cnn_m_1024_faster_rcnn_iter_1500.caffemodel'),
            'zf': ('ZF',
                   'ZF_faster_rcnn_final.caffemodel')}

    if __name__ == '__main__':
        cfg.TEST.HAS_RPN = True  # Use RPN for proposals

        args = parse_args()

        # change this to the prototxt file we actually use
        prototxt = os.path.join(cfg.MODELS_DIR, NETS[args.demo_net][0],
                                'faster_rcnn_end2end', 'test.prototxt')
        caffemodel = os.path.join(cfg.DATA_DIR, 'faster_rcnn_models',
                                  NETS[args.demo_net][1])

        if not os.path.isfile(caffemodel):
            raise IOError(('{:s} not found.\nDid you run ./data/script/'
                           'fetch_faster_rcnn_models.sh?').format(caffemodel))

        if args.cpu_mode:
            caffe.set_mode_cpu()
        else:
            caffe.set_mode_gpu()
            caffe.set_device(args.gpu_id)
            cfg.GPU_ID = args.gpu_id
        net = caffe.Net(prototxt, caffemodel, caffe.TEST)

        print '\n\nLoaded network {:s}'.format(caffemodel)

        # Warmup on a dummy image
        im = 128 * np.ones((300, 500, 3), dtype=np.uint8)
        for i in xrange(2):
            _, _ = im_detect(net, im)

        # the images you want to run detection on
        im_names = ['000542.jpg', '001150.jpg', '004545(1).jpg', '004545.jpg']
        for im_name in im_names:
            print '~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~'
            print 'Demo for data/demo/{}'.format(im_name)
            demo(net, im_name)

        plt.show()

2. Testing your own model:

Copy the caffemodel you just trained from output\ to data\faster_rcnn_models, then run your own demo.py.
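The copy step can also be scripted so that the newest snapshot is always the one tested. A rough sketch; `copy_latest_snapshot` is a hypothetical helper, the `_iter_N.caffemodel` naming is inferred from the model file name used in demo.py above, and the exact subfolder of output\ that holds the snapshots is left for you to pass in:

```python
import os
import re
import shutil


def copy_latest_snapshot(snapshot_dir, models_dir):
    """Copy the .caffemodel with the highest iteration number into models_dir."""
    pattern = re.compile(r"_iter_(\d+)\.caffemodel$")
    candidates = []
    for name in os.listdir(snapshot_dir):
        match = pattern.search(name)
        if match:
            candidates.append((int(match.group(1)), name))
    if not candidates:
        raise IOError("no *_iter_N.caffemodel files in " + snapshot_dir)
    _, latest = max(candidates)  # highest iteration count wins
    if not os.path.isdir(models_dir):
        os.makedirs(models_dir)
    shutil.copy2(os.path.join(snapshot_dir, latest),
                 os.path.join(models_dir, latest))
    return latest
```

The returned file name is the value to put in the NETS entry of demo.py.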

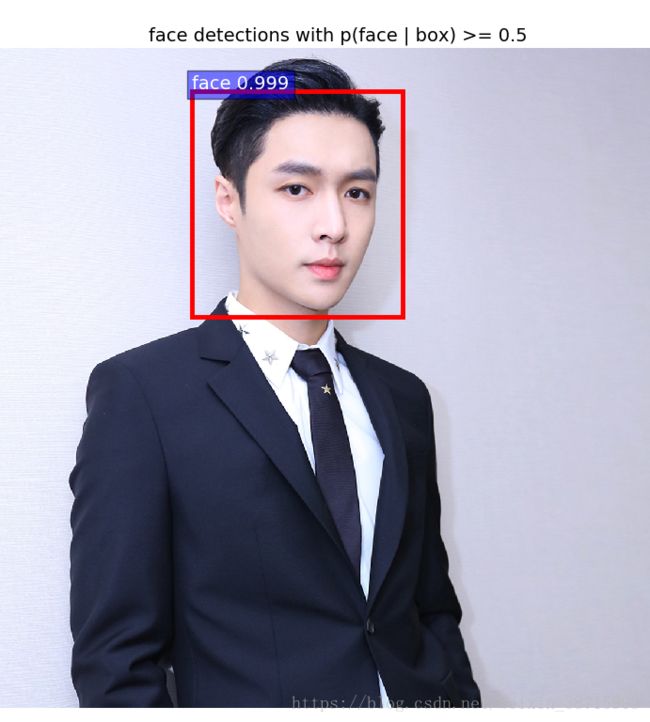

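V. Results

Here is my result. This is a dataset I found casually and used for face detection: [this is Zhang Yixing, hahaha, my idol]

One thing to note: my threshold here is 0.5 because I changed it while hunting for the bug and never changed it back. The threshold can be tuned freely anyway.

[The box looks a bit too large. I only used 200 images, and as the model name shows, training ran for only about 1500 iterations. It is also possible that I drew the annotation boxes a bit large. All of this can be adjusted.]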

VI. Problems I ran into

1. AttributeError: 'module' object has no attribute 'text_format'

Solution: add the line import google.protobuf.text_format to ../lib/fast_rcnn/train.py.

2. F0615 14:53:28.416858 4384 smooth_L1_loss_layer.cpp:24] Check failed: bottom[0]->channels() == bottom[1]->channels() (12 vs. 84)

Solution: this is usually caused by class counts in the end2end train.prototxt that were not updated correctly.

Check the following in train.prototxt, where n is the number of classes you train plus 1 (the 1 is the background):

input-data layer, num_classes: n

roi-data layer, num_classes: n

cls_score layer, num_output: n

bbox_pred layer, num_output: 4*n
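The check above can be automated with a few regular expressions instead of reading the file by eye. A minimal sketch that treats the prototxt as plain text (it is not a real protobuf parser) and assumes the `layer { ... }` syntax used in the listings earlier; both function names are hypothetical:

```python
import re


def num_output_of(prototxt_text, layer_name):
    """Return num_output of the named layer, or None if it is not found."""
    # Split on "layer {" so each chunk holds one layer definition.
    for chunk in re.split(r"\blayer\s*\{", prototxt_text):
        if re.search(r"name:\s*[\"']%s[\"']" % re.escape(layer_name), chunk):
            match = re.search(r"num_output:\s*(\d+)", chunk)
            return int(match.group(1)) if match else None
    return None


def heads_consistent(prototxt_text):
    """True when bbox_pred has exactly four outputs per cls_score output."""
    n_cls = num_output_of(prototxt_text, "cls_score")
    n_box = num_output_of(prototxt_text, "bbox_pred")
    return n_cls is not None and n_box == 4 * n_cls
```

A file that still carries the stock value of 84 for bbox_pred next to a cls_score of 3 fails this check, which is the mismatch the error message reports.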

3. F0615 14:58:38.421589 7596 net.cpp:757] Cannot copy param 0 weights from layer ‘cls_score’; shape mismatch. Source param shape is 21 4096 (86016); target param shape is 3 4096 (12288). To learn this layer’s parameters from scratch rather than copying from a saved net, rename the layer.

Solution: I hit this during training, so I renamed the corresponding cls_score layer in train.prototxt to cls_score1.

The bbox_pred layer is handled the same way: just rename it.

4. TypeError: 'numpy.float64' object cannot be interpreted as an index

This is caused by a newer NumPy version. I prefer changing the code over downgrading NumPy.
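The root cause is that a floating-point value is being used as an index or slice bound. A tiny pure-Python sketch of the same failure and the same cure; plain Python floats are used here as a stand-in for numpy.float64, and the names are illustrative only:

```python
def slice_with(count, items):
    """Return items[:count]; a float count is rejected by Python."""
    return items[:count]


rois = list(range(8))
fg_count = 0.25 * len(rois)  # 2.0, a float, like the result of np.round

try:
    slice_with(fg_count, rois)
    float_failed = False
except TypeError:
    float_failed = True      # floats are not accepted as slice indices

fg_rois = slice_with(int(fg_count), rois)  # the integer cast is the whole fix
```

Every edit listed for this error is an instance of this pattern: convert the rounded value to an integer before it reaches an index.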

1) /home/xxx/py-faster-rcnn/lib/roi_data_layer/minibatch.py

Line 26: fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image)

Change to: fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image).astype(np.int)

2) /home/xxx/py-faster-rcnn/lib/datasets/ds_utils.py

Line 12: hashes = np.round(boxes * scale).dot(v)

Change to: hashes = np.round(boxes * scale).dot(v).astype(np.int)

3) /home/xxx/py-faster-rcnn/lib/fast_rcnn/test.py

Line 129: hashes = np.round(blobs['rois'] * cfg.DEDUP_BOXES).dot(v)

Change to: hashes = np.round(blobs['rois'] * cfg.DEDUP_BOXES).dot(v).astype(np.int)

4) /home/xxx/py-faster-rcnn/lib/rpn/proposal_target_layer.py

Line 60: fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image)

Change to: fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image).astype(np.int)

(On NumPy 1.24 and later the np.int alias no longer exists; use the built-in int in these calls instead.)

5. TypeError: slice indices must be integers or None or have an index method

This is again a NumPy version problem, and again I recommend changing the code:

Edit /home/lzx/py-faster-rcnn/lib/rpn/proposal_target_layer.py and go to line 123. The original code is:

    for ind in inds:
        cls = clss[ind]
        start = 4 * cls
        end = start + 4
        bbox_targets[ind, start:end] = bbox_target_data[ind, 1:]
        bbox_inside_weights[ind, start:end] = cfg.TRAIN.BBOX_INSIDE_WEIGHTS
    return bbox_targets, bbox_inside_weights

Change it to:

    for ind in inds:
        ind = int(ind)
        cls = clss[ind]
        start = int(4 * cls)
        end = int(start + 4)
        bbox_targets[ind, start:end] = bbox_target_data[ind, 1:]
        bbox_inside_weights[ind, start:end] = cfg.TRAIN.BBOX_INSIDE_WEIGHTS
    return bbox_targets, bbox_inside_weights

That is everything, all done! Hooray!