Screen capture with push and pull streaming based on FFmpeg and RTSP

Main features

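The demo I wanted to build works like this: one machine captures its screen and sends the captured video to another machine. The environment is as follows:

- Windows: screen capture and push

- RTSP streaming server on a virtual machine (CentOS 7)

- Windows: pull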

Implementation

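About the line const char* url = "rtsp://192.168.83.129:8554/zyx"; in the code:

- 192.168.83.129 is the IP address of my virtual machine

- The trailing /zyx is an arbitrary stream name, but the push program and the pull program must use the same one

- :8554 is the default RTSP port of rtsp-simple-server (the server set up in step 2), so it does not need to be changed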
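The three parts of the URL can be checked mechanically. Below is a minimal C++17 sketch. It assumes simple URLs of the form rtsp://host[:port]/path, with no credentials, IPv6 literals or query strings. RtspUrl, parseRtspUrl and sameStream are hypothetical names. If the port is omitted, an RTSP client falls back to the protocol's default port 554, not 8554, so the :8554 has to stay in both URLs.

```cpp
#include <cassert>
#include <optional>
#include <string>

// Components of an RTSP URL such as "rtsp://192.168.83.129:8554/zyx".
struct RtspUrl {
    std::string host;
    int port = 554;    // RTSP's default port when the URL gives none
    std::string path;  // e.g. "/zyx"; pusher and puller must use the same one
};

// Minimal parser for "rtsp://host[:port]/path".
// Assumption: no user:password@, no IPv6 literals, no query strings.
std::optional<RtspUrl> parseRtspUrl(const std::string& url) {
    const std::string scheme = "rtsp://";
    if (url.compare(0, scheme.size(), scheme) != 0) return std::nullopt;

    const std::string rest = url.substr(scheme.size());
    const auto slash = rest.find('/');
    const std::string authority = rest.substr(0, slash);

    RtspUrl out;
    out.path = (slash == std::string::npos) ? "/" : rest.substr(slash);

    const auto colon = authority.find(':');
    out.host = authority.substr(0, colon);
    if (colon != std::string::npos) {
        const std::string digits = authority.substr(colon + 1);
        if (digits.empty() || digits.size() > 5 ||
            digits.find_first_not_of("0123456789") != std::string::npos)
            return std::nullopt;
        out.port = std::stoi(digits);
        if (out.port < 1 || out.port > 65535) return std::nullopt;
    }
    if (out.host.empty()) return std::nullopt;
    return out;
}

// Pusher and puller address the same stream only if host, port and path all match.
bool sameStream(const RtspUrl& a, const RtspUrl& b) {
    return a.host == b.host && a.port == b.port && a.path == b.path;
}
```

For example, you can parse the pusher's and the puller's URLs and call sameStream on the results. That catches a mismatched path such as /zyx versus /zyx2 before any connection is attempted.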

1 Windows screen capture and push (VS2019)
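A screen-capture pusher built on FFmpeg's libraries has to give each encoded frame a presentation timestamp (PTS) in the output stream's time base. The following is a minimal sketch of that arithmetic. It assumes a fixed capture rate and the 90 kHz clock (time base 1/90000) that RTP video commonly uses. The function name framePts is hypothetical; in real FFmpeg code the same conversion is usually done with av_rescale_q.

```cpp
#include <cassert>
#include <cstdint>

// PTS (in ticks of a tbNum/tbDen-second time base) of the frameIndex-th frame
// captured at a constant `fps`. Rounds to nearest, like FFmpeg's
// av_rescale_q(frameIndex, {1, fps}, {tbNum, tbDen}) does by default.
// Assumption: 90 kHz time base (1/90000), the clock RTP video commonly uses.
int64_t framePts(int64_t frameIndex, int fps, int tbNum = 1, int tbDen = 90000) {
    assert(frameIndex >= 0 && fps > 0 && tbNum > 0 && tbDen > 0);
    // ticks = (frameIndex / fps) seconds * (tbDen / tbNum) ticks per second
    const int64_t num = frameIndex * tbDen;
    const int64_t den = static_cast<int64_t>(fps) * tbNum;
    return (num + den / 2) / den;  // round half up (frameIndex is non-negative)
}
```

At 12 frames per second, consecutive frames are 7500 ticks apart, and frame 12 lands exactly on 90000, one second.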

2 Setting up the RTSP streaming server on the virtual machine (CentOS 7)

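In this setup the two machines do not stream to each other directly: the screen is first pushed to the RTSP server on the virtual machine, and the other Windows machine then pulls the stream from that server.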

For the RTSP server, an open-source project from GitHub can be used as-is. Here is the approach I used:

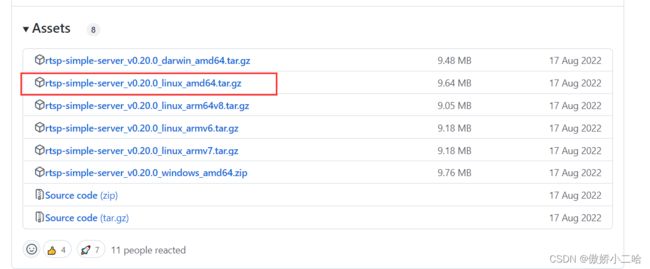

First, download the release package to the CentOS 7 machine from:

https://github.com/aler9/rtsp-simple-server/releases

tar zxvf rtsp-simple-server_v0.20.0_linux_amd64.tar.gz # extract

./rtsp-simple-server # run

If the clients cannot connect, stop the firewall on the virtual machine:

systemctl stop firewalld.service
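Before turning the firewall off entirely, it can help to check whether the RTSP port is reachable at all. Below is a minimal POSIX sketch for Linux, for example run from the VM or another Linux host; Windows would need the Winsock equivalents. tcpPortOpen is a hypothetical helper. It only tests that a TCP connection to the port succeeds, not that the RTSP server behind it answers correctly.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <cstdint>
#include <netinet/in.h>
#include <string>
#include <sys/socket.h>
#include <unistd.h>

// Returns true if a TCP connection to ipv4:port can be established.
// POSIX only. The connect() call is blocking: if a firewall silently drops
// packets instead of rejecting them, it returns only after the OS timeout.
bool tcpPortOpen(const std::string& ipv4, int port) {
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_port = htons(static_cast<std::uint16_t>(port));
    if (inet_pton(AF_INET, ipv4.c_str(), &addr.sin_addr) != 1) return false;  // not a dotted IPv4 address

    const int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return false;
    const bool ok =
        connect(fd, reinterpret_cast<const sockaddr*>(&addr), sizeof addr) == 0;
    close(fd);
    return ok;
}
```

Suppose tcpPortOpen("192.168.83.129", 8554) returns false while the server is visibly running on the VM. That points at the network or firewall rather than at the push or pull code.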

3 Windows pull and display
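The display window on the pulling side may not match the size of the captured screen. Scaling the decoded frames to fit while keeping their aspect ratio avoids stretching. Below is a minimal sketch of the target-rectangle arithmetic, independent of the rendering API (SDL, GDI or anything else). Rect and fitKeepAspect are hypothetical names. The resulting width and height could, for instance, serve as the destination size for sws_scale.

```cpp
#include <cassert>
#include <cstdint>

struct Rect { int x, y, w, h; };

// Largest rectangle with the source aspect ratio that fits inside the window,
// centred so the leftover space becomes bars on two sides.
Rect fitKeepAspect(int srcW, int srcH, int winW, int winH) {
    assert(srcW > 0 && srcH > 0 && winW > 0 && winH > 0);
    int64_t w, h;
    if (int64_t(winW) * srcH <= int64_t(winH) * srcW) {
        // Window is relatively narrower than the source: use full width, bars top and bottom.
        w = winW;
        h = int64_t(winW) * srcH / srcW;
    } else {
        // Window is relatively wider than the source: use full height, bars left and right.
        h = winH;
        w = int64_t(winH) * srcW / srcH;
    }
    return Rect{int((winW - w) / 2), int((winH - h) / 2), int(w), int(h)};
}
```

For example, a 1920x1080 capture shown in an 800x800 window becomes an 800x450 image placed 175 pixels from the top.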

Result (screenshot)

At this point all the functionality is in place. What follows is extra material.

Setting up FFmpeg on CentOS 7 and pushing from the command line

Installing FFmpeg on CentOS 7

yum install epel-release

rpm -v --import http://li.nux.ro/download/nux/RPM-GPG-KEY-nux.ro

rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-5.el7.nux.noarch.rpm

yum install ffmpeg ffmpeg-devel

ffmpeg -version # if version information is printed, the installation succeeded

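Capture the screen and push it directly from the command line (x11grab grabs an X11 display, so the VM needs a running graphical session):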

ffmpeg -f x11grab -s 800x600 -framerate 12 -i :0.0+100,200 -preset ultrafast -s 800x600 -threads 10 -codec:v h264 -f rtsp rtsp://localhost:8554/zyx
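In this command, -i :0.0+100,200 selects display 0, screen 0 of the local X server. It also offsets the grab area by 100 pixels horizontally and 200 vertically. The first -s 800x600, given before -i, sets the size of the grabbed area. Below is a minimal C++17 sketch that splits such an input string into its parts. It follows the [hostname]:display_number.screen_number[+x_offset,y_offset] form described in FFmpeg's x11grab documentation and also accepts an omitted screen number. X11GrabInput and parseX11GrabInput are hypothetical names.

```cpp
#include <cassert>
#include <optional>
#include <stdexcept>
#include <string>

// Parts of an x11grab input string such as ":0.0+100,200".
struct X11GrabInput {
    std::string hostname;  // empty = local X server
    int display = 0;
    int screen = 0;
    int offsetX = 0;       // left edge of the grab area, in pixels
    int offsetY = 0;       // top edge of the grab area, in pixels
};

// Parses "[hostname]:display_number[.screen_number][+x_offset,y_offset]".
std::optional<X11GrabInput> parseX11GrabInput(const std::string& spec) {
    const auto colon = spec.find(':');
    if (colon == std::string::npos) return std::nullopt;

    X11GrabInput in;
    in.hostname = spec.substr(0, colon);
    std::string rest = spec.substr(colon + 1);
    try {
        const auto plus = rest.find('+');
        if (plus != std::string::npos) {
            const std::string offsets = rest.substr(plus + 1);
            const auto comma = offsets.find(',');
            if (comma == std::string::npos) return std::nullopt;
            in.offsetX = std::stoi(offsets.substr(0, comma));
            in.offsetY = std::stoi(offsets.substr(comma + 1));
            rest = rest.substr(0, plus);
        }
        const auto dot = rest.find('.');
        in.display = std::stoi(rest.substr(0, dot));
        if (dot != std::string::npos) in.screen = std::stoi(rest.substr(dot + 1));
    } catch (const std::logic_error&) {  // std::stoi: invalid_argument / out_of_range
        return std::nullopt;
    }
    return in;
}
```

Parsing ":0.0+100,200" yields display 0, screen 0 and offset (100, 200). Combined with -s 800x600, the captured region spans x 100 to 899 and y 200 to 799.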