Opencv——双目标定

Opencv——双目标定

双目标定

双目视觉标定就是通过求解实际三维空间中坐标点和摄像机二维图像坐标点的对应关系,在双目视觉中,三维空间坐标系一般是以左相机坐标系作为基准坐标系。利用棋盘板获取到的用于计算的二维图像坐标和三维空间的物理坐标,再通过一定的算法,求解出变换矩阵,则解决了基础的双目视觉标定的过程。

矩阵

从物体到相机坐标系统的转换:物体上的点P对应图像平面的点p,我们通过使用旋转矩阵R和平移向量T将点p和点P关联起来。

旋转矩阵R:是在乘以一个向量的时候有改变向量的方向但不改变大小的效果并保持了手性的矩阵。

平移向量T:用来表示如何将一个坐标系统移到另一个坐标系统,,其原点也移动到另一个位置;换句话说,平移向量是第一个坐标系统的原点到第二个坐标系统的原点的偏移量;如:从以物体中心的原点的坐标系到以相机中心为原点的坐标系,相应的平移向量为T。

本征矩阵E:包含了关于物理空间中两个摄像头的平移和旋转信息,在坐标系中第二个摄像头相对于第一个摄像头的位置。

基本矩阵F:除了包含了E中的相同信息外,还包含了两个摄像头的内参数;将图像坐标系(像素)中一个摄像头的像平面上的点和另一个摄像头的像平面上的点相互关联。

标定方法

Hartley校正算法:非标定立体校正

Hartley校正算法只使用基本矩阵F来生成非标定立体视觉;Hartley算法可以通过单个摄像头记录的运动推导出立体结构,但是单个摄像头会比Bouguet标定算法产生更多的畸变图像。

Hartley校正算法旨在找到最小化两幅立体图像的计算视差时将对极点映射到无穷远处的单应矩阵,这可通过匹配两幅图像之间的对应点简单完成。因此,就可以绕过计算两个摄像头的内参。所以我们只需要计算基础矩阵,它可以通过cvFindFundamentalMat()函数获得两个场景视图上的7个以上的匹配点来获得,或者,通过立体标定cvStereoCalibrate()函数求解获得。

Hartley校正算法有点是,在线立体标定可以简单的通过场景的观察点来完成,缺点是场景的图像比例未知。

Bouguet校正算法:标定立体校正

Bouguet校正算法是使用立体图像间的旋转矩阵R和平移矩阵T来标定立体视觉。而旋转矩阵R和平移矩阵T可以通过立体标定cvStereoCalibrate()函数求解获得。

用到的函数

//查找棋盘角点

findChessboardCorners( InputArray image, Size patternSize, OutputArray corners,

int flags = CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE );

// 亚像素角点

void cornerSubPix( InputArray image, InputOutputArray corners,

Size winSize, Size zeroZone,

TermCriteria criteria );

//显示角点结果

void drawChessboardCorners( InputOutputArray image, Size patternSize,

InputArray corners, bool patternWasFound );

以上函数详情在上一节中

//双目标定

double stereoCalibrate( InputArrayOfArrays objectPoints, //物体目标点向量

InputArrayOfArrays imagePoints1, //第一个摄像头的图像的角点向量

InputArrayOfArrays imagePoints2, //第二个摄像头的图像的角点向量

InputOutputArray cameraMatrix1, //第一个摄像头的相机矩阵

InputOutputArray distCoeffs1, //第一个摄像头的畸变矩阵

InputOutputArray cameraMatrix2, //第二个摄像头的相机矩阵

InputOutputArray distCoeffs2, //第二个摄像头的畸变矩阵

Size imageSize, // 图像大小

OutputArray R, // 旋转矩阵

OutputArray T, // 平移矩阵

OutputArray E, // 本质矩阵

OutputArray F, // 基本矩阵

int flags = CALIB_FIX_INTRINSIC, //标志位

TermCriteria criteria = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 30, 1e-6) //终止条件

);

//立体矫正

void stereoRectify( InputArray cameraMatrix1, //第一个摄像头的相机矩阵

InputArray distCoeffs1,//第一个摄像头的畸变矩阵

InputArray cameraMatrix2, //第二个摄像头的相机矩阵

InputArray distCoeffs2,//第二个摄像头的畸变矩阵

Size imageSize, // 图像大小

InputArray R, // 旋转矩阵

InputArray T,// 平移矩阵

OutputArray R1,//第一摄像机的旋转矩阵

OutputArray R2,//第二摄像机的旋转矩阵

OutputArray P1,//第一个相机坐标系中的投影矩阵

OutputArray P2,//第二个相机坐标系中的投影矩阵

OutputArray Q, //重影矩阵

int flags = CALIB_ZERO_DISPARITY,

double alpha = -1, Size newImageSize = Size(),

CV_OUT Rect* validPixROI1 = 0, CV_OUT Rect* validPixROI2 = 0 );

为了提高精确度,可以先对左右相机进行单目标定,再用单目标定的参数再双目标定

代码

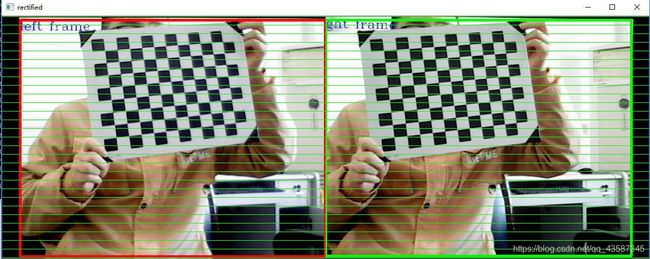

#include结果:

在使用摄像头时,建议使用一般的摄像头,不建议使用自动对焦的自能摄像头

如有错误,请批评指正