Text Classification: One-Hot Encoding, Bag of Words, N-gram, and TF-IDF

1. One-hot encoding

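One-hot encoding is generally applied to labels. For example, with the five classes cat: 0, dog: 1, person: 2, boat: 3, car: 4, the encodings are: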

cat: [1,0,0,0,0]

dog: [0,1,0,0,0]

person: [0,0,1,0,0]

boat: [0,0,0,1,0]

car: [0,0,0,0,1]

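```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# sparse_output=False returns a dense NumPy array
# (this argument was called `sparse` before scikit-learn 1.2)
enc = OneHotEncoder(sparse_output=False)

labels = [0, 1, 2, 3, 4]
# OneHotEncoder expects a 2-D array of shape (n_samples, n_features)
labels = np.array(labels).reshape(-1, 1)

ans = enc.fit_transform(labels)
```

Result:

```
array([[1., 0., 0., 0., 0.],
       [0., 1., 0., 0., 0.],
       [0., 0., 1., 0., 0.],
       [0., 0., 0., 1., 0.],
       [0., 0., 0., 0., 1.]])
```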
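For integer labels like these, the same matrix can also be built without scikit-learn. A minimal NumPy sketch, assuming the labels are already integers in the range 0 to n_classes - 1 (the helper name `one_hot` is hypothetical): indexing the identity matrix with the label array picks out one row per label, and `argmax` reverses the encoding.

```python
import numpy as np

def one_hot(labels, n_classes):
    """Return a (len(labels), n_classes) float matrix with a single 1 per row.

    Hypothetical helper; assumes labels are integers in [0, n_classes).
    """
    labels = np.asarray(labels)
    # Row i of the identity matrix is the one-hot vector for class i
    return np.eye(n_classes)[labels]

labels = [0, 1, 2, 3, 4]  # cat, dog, person, boat, car
encoded = one_hot(labels, 5)
print(encoded)

# Decoding: the position of the 1 in each row recovers the label
decoded = encoded.argmax(axis=1)
print(decoded)  # [0 1 2 3 4]
```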

2. Bag of Words

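Bag of words represents each sentence by counting how many times each vocabulary word occurs in it; word order is ignored.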

```python
import re

# An alternative corpus that can be swapped in:
# corpus = [
#     'This is the first document.',
#     'This document is the second document.',
#     'And this is the third one.',
#     'Is this the first document?',
# ]

corpus = [
    'Bob likes to play basketball, Jim likes too.',
    'Bob also likes to play football games.'
]

# List of all distinct words, in order of first appearance
words = []
for sentence in corpus:
    # Lowercase and strip punctuation
    sentence = re.sub(r'[^\w\s]', '', sentence.lower())
    # Split the sentence into words
    for word in sentence.split():
        if word not in words:
            words.append(word)

# Map each word to its index, then sort the (word, index) pairs by index
word2idx = {word: i for i, word in enumerate(words)}
word2idx = sorted(word2idx.items(), key=lambda x: x[1])

BOW = []
for sentence in corpus:
    sentence = re.sub(r'[^\w\s]', '', sentence.lower())
    print(sentence)
    tmp = [0] * len(word2idx)
    # Look each word up in the vocabulary and increment its count
    for word in sentence.split():
        for k, v in word2idx:
            if k == word:
                tmp[v] += 1
    BOW.append(tmp)

print(word2idx)
print(BOW)
```

Output:

```
bob likes to play basketball jim likes too
bob also likes to play football games
[('bob', 0), ('likes', 1), ('to', 2), ('play', 3), ('basketball', 4), ('jim', 5), ('too', 6), ('also', 7), ('football', 8), ('games', 9)]
[[1, 2, 1, 1, 1, 1, 1, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 1, 1, 1]]
```

Note that the counts are driven by the vocabulary: each word of a sentence is looked up in `word2idx`, and the count at that word's index is incremented.

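The same counts with scikit-learn:

```python
from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()
vectorizer.fit_transform(corpus).toarray()
```

Result:

```
array([[0, 1, 1, 0, 0, 1, 2, 1, 1, 1],
       [1, 0, 1, 1, 1, 0, 1, 1, 1, 0]])
```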

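The columns come out in a different order from the hand-written version because CountVectorizer sorts its vocabulary alphabetically, whereas the code above keeps words in order of first appearance; the counts themselves are the same.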
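To check that only the column order differs, the counts can be recomputed by hand over an alphabetically sorted vocabulary. The sketch below approximates CountVectorizer's default tokenization (lowercasing, and keeping only tokens of two or more word characters, which is what its default `token_pattern` matches); it is an illustration, not scikit-learn's own code, and `bag_of_words` is a hypothetical name.

```python
import re
from collections import Counter

def bag_of_words(corpus):
    """Count vocabulary words per document, with the vocabulary sorted alphabetically.

    Hypothetical helper approximating CountVectorizer's defaults:
    lowercase the text and keep tokens of 2+ word characters.
    """
    tokenized = [re.findall(r"\b\w\w+\b", doc.lower()) for doc in corpus]
    # Alphabetical vocabulary, matching how CountVectorizer numbers its columns
    vocab = sorted({tok for doc in tokenized for tok in doc})
    index = {word: i for i, word in enumerate(vocab)}
    rows = []
    for doc in tokenized:
        row = [0] * len(vocab)
        for word, count in Counter(doc).items():
            row[index[word]] = count
        rows.append(row)
    return vocab, rows

corpus = [
    'Bob likes to play basketball, Jim likes too.',
    'Bob also likes to play football games.'
]
vocab, rows = bag_of_words(corpus)
print(vocab)
print(rows)
```

The rows it prints match the CountVectorizer result, column for column.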

3. N-gram

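Core idea: a sliding window. A window of N consecutive words moves across the sentence one word at a time, and each window becomes a feature, so the features capture some of the context around each word.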
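The sliding window itself takes only a few lines. A minimal sketch (the function name `ngrams` is hypothetical): each window of n consecutive tokens, joined by a space, becomes one feature.

```python
import re

def ngrams(text, n=2):
    """Return the word n-grams of text, produced by a window of size n.

    Hypothetical helper: lowercases, extracts word tokens, then slides
    the window forward one token at a time.
    """
    tokens = re.findall(r"\b\w+\b", text.lower())
    # A sentence of k tokens yields k - n + 1 windows (none if k < n)
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

print(ngrams('Bob likes to play basketball, Jim likes too.'))
# ['bob likes', 'likes to', 'to play', 'play basketball',
#  'basketball jim', 'jim likes', 'likes too']
```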

Implementation with scikit-learn:

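```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = [
    'Bob likes to play basketball, Jim likes too.',
    'Bob also likes to play football games.'
]

# ngram_range=(2, 2): use bigrams only
# decode_error="ignore": skip characters that cannot be decoded
# token_pattern: split into words (unlike the default, also keeps 1-character words)
ngram_vectorizer = CountVectorizer(ngram_range=(2, 2), decode_error="ignore",
                                   token_pattern=r'\b\w+\b', min_df=1)

x1 = ngram_vectorizer.fit_transform(corpus)
print(x1)
```

Output:

```
(0, 3)    1
(0, 6)    1
(0, 10)   1
(0, 8)    1
(0, 1)    1
(0, 5)    1
(0, 7)    1
(1, 6)    1
(1, 10)   1
(1, 2)    1
(1, 0)    1
(1, 9)    1
(1, 4)    1
```

In the printed sparse matrix, each pair is (sentence index, index of the bigram in the vocabulary), followed by the count. As with bag of words, the value is the number of times that window occurs in the sentence. As a dense array (`x1.toarray()`):

```
[[0 1 0 1 0 1 1 1 1 0 1]
 [1 0 1 0 1 0 1 0 0 1 1]]
```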
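The dense matrix can be reproduced by counting bigrams per sentence over an alphabetically sorted bigram vocabulary, which is how CountVectorizer numbers its columns. A minimal sketch, assuming the same word tokenization as the `token_pattern` in the scikit-learn code; `bigram_counts` is a hypothetical name and this is not scikit-learn's implementation.

```python
import re
from collections import Counter

def bigram_counts(corpus):
    """Return (vocabulary dict, count matrix) for word bigrams.

    Hypothetical helper; lowercases and extracts word tokens before pairing them.
    """
    docs = []
    for text in corpus:
        tokens = re.findall(r"\b\w+\b", text.lower())
        # Slide a window of 2 tokens and count each bigram
        docs.append(Counter(" ".join(tokens[i:i + 2]) for i in range(len(tokens) - 1)))
    # Columns are numbered in alphabetical order of the bigrams
    vocab = {gram: i for i, gram in enumerate(sorted(set().union(*docs)))}
    matrix = [[doc.get(gram, 0) for gram in vocab] for doc in docs]
    return vocab, matrix

corpus = [
    'Bob likes to play basketball, Jim likes too.',
    'Bob also likes to play football games.'
]
vocab, matrix = bigram_counts(corpus)
print(matrix)
```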

The vocabulary learned by the vectorizer:

```python
# Inspect the generated vocabulary
print(ngram_vectorizer.vocabulary_)
```

```
{
  'bob likes': 3,
  'likes to': 6,
  'to play': 10,
  'play basketball': 8,
  'basketball jim': 1,
  'jim likes': 5,
  'likes too': 7,
  'bob also': 2,
  'also likes': 0,
  'play football': 9,
  'football games': 4
}
```

4. TF-IDF

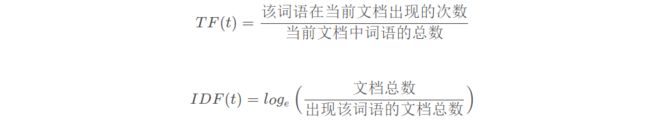

The TF-IDF score is made up of two parts: the term frequency (TF) and the inverse document frequency (IDF).
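One common textbook formulation multiplies the two parts, where $n_{t,d}$ is the count of term $t$ in document $d$, $N$ is the number of documents, and $\mathrm{df}(t)$ is the number of documents containing $t$:

$$\mathrm{tfidf}(t, d) = \mathrm{tf}(t, d) \times \mathrm{idf}(t), \qquad \mathrm{tf}(t, d) = \frac{n_{t,d}}{\sum_{t'} n_{t',d}}, \qquad \mathrm{idf}(t) = \ln \frac{N}{\mathrm{df}(t)}$$

Variants exist: scikit-learn's TfidfVectorizer, for instance, uses raw counts for tf, a smoothed idf of $\ln\frac{1 + N}{1 + \mathrm{df}(t)} + 1$ by default, and then L2-normalizes each row. The sketch below implements only the plain formula above, with no smoothing or normalization; `tf_idf` is a hypothetical helper name.

```python
import math
import re
from collections import Counter

def tf_idf(corpus):
    """Return one {term: score} dict per document.

    Hypothetical helper: tf = count / document length, idf = ln(N / df),
    with no smoothing or normalization.
    """
    docs = [re.findall(r"\b\w+\b", text.lower()) for text in corpus]
    n_docs = len(docs)
    # df(t): number of documents in which term t appears at least once
    df = Counter(term for doc in docs for term in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores

corpus = [
    'Bob likes to play basketball, Jim likes too.',
    'Bob also likes to play football games.'
]
for doc_scores in tf_idf(corpus):
    print(sorted(doc_scores.items(), key=lambda kv: -kv[1]))
```

Words shared by both sentences (bob, likes, to, play) score 0 because $\ln(2/2) = 0$, while a word found in only one sentence, such as basketball, scores $(1/8) \times \ln 2 \approx 0.087$.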