深度学习 — VOC数据集 & 处理工具类

文章目录

- 深度学习 — VOC 数据集 & 处理工具类

-

- 一、数据集简介

- 二、数据集内容

-

- 1. Annotations

-

- 1) VOC 数据集类别统计

- 2) VOC 标注文件解析

- 2. ImageSets

-

- 1) VOC数据集划分

- 3. JPEGImages

- 4. SegmentationClass

- 5. SegmentationObject

- 三 VOC 数据集工具类

- 四、参考资料

- 转载请注明原文出处:https://blog.csdn.net/pentiumCM/article/details/109750329

深度学习 — VOC 数据集 & 处理工具类

一、数据集简介

-

VOC数据集官网

-

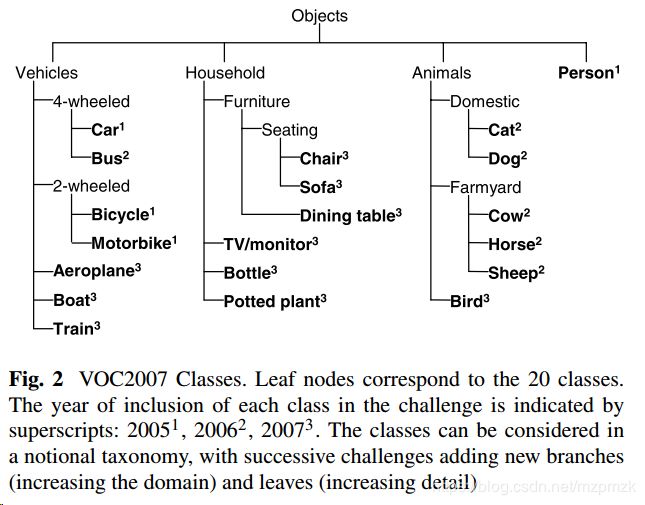

PASCAL VOC 2007 和 2012 数据集总共分 4 个大类:vehicle、household、animal、person,总共 20 个小类(加背景 21 类),预测的时候是只输出下图中黑色粗体的类别:

二、数据集内容

-

目录结构:

以VOC2012数据集为例,数据集下载后解压得到一个名为VOCdevkit的文件夹,该文件夹结构如下:

. └── VOCdevkit #根目录 └── VOC2012 #不同年份的数据集,这里只列举了2012的,还有2007等其它年份的 ├── Annotations # 存放的是数据集标签文件,xml格式,与JPEGImages中的图片一一对应 ├── ImageSets # 数据集的划分文件,txt格式,txt文件中每一行包含一个图片的名称,末尾会加上±1表示正负样本 │ ├── Action │ ├── Layout │ ├── Main │ └── Segmentation ├── JPEGImages # 存放的是数据集图片 ├── SegmentationClass # 存放的是图片,语义分割相关 └── SegmentationObject # 存放的是图片,实例分割相关

以下将介绍每个文件夹的内容。

1. Annotations

-

简介:

Annotation文件夹存放的是 xml 格式的标签文件,每个 xml 文件都对应于 JPEGImages 文件夹的一张同名的图片。

其中 xml 主要介绍了对应图片的基本信息,如来自那个文件夹、文件名、来源、图像尺寸以及图像中包含哪些目标以及目标的信息等等,内容如下:<annotation> <folder>VOC2012folder> <filename>2007_000027.jpgfilename> <source> <database>The VOC2007 Databasedatabase> <annotation>PASCAL VOC2007annotation> <image>flickrimage> source> <size> <width>486width> <height>500height> <depth>3depth> size> <segmented>0segmented> <object> <name>personname> <pose>Unspecifiedpose> <truncated>0truncated> <difficult>0difficult> <bndbox> <xmin>174xmin> <ymin>101ymin> <xmax>349xmax> <ymax>351ymax> bndbox> <part> <name>headname> <bndbox> <xmin>169xmin> <ymin>104ymin> <xmax>209xmax> <ymax>146ymax> bndbox> part> <part> <name>handname> <bndbox> <xmin>278xmin> <ymin>210ymin> <xmax>297xmax> <ymax>233ymax> bndbox> part> <part> <name>footname> <bndbox> <xmin>273xmin> <ymin>333ymin> <xmax>297xmax> <ymax>354ymax> bndbox> part> <part> <name>footname> <bndbox> <xmin>319xmin> <ymin>307ymin> <xmax>340xmax> <ymax>326ymax> bndbox> part> object> annotation>

1) VOC 数据集类别统计

-

python代码:

# !/usr/bin/env python # encoding: utf-8 ''' @Author : pentiumCM @Email : [email protected] @Software: PyCharm @File : voc_label_statistics.py @Time : 2020/12/4 23:56 @desc : VOC 数据集类别统计 ''' import xml.dom.minidom as xmldom import os def voc_label_statistics(annotation_path): ''' VOC 数据集类别统计 :param annotation_path: voc数据集的标签文件夹 :return: {'class1':'count',...} ''' count = 0 annotation_names = [os.path.join(annotation_path, i) for i in os.listdir(annotation_path)] labels = dict() for names in annotation_names: names_arr = names.split('.') file_type = names_arr[-1] if file_type != 'xml': continue file_size = os.path.getsize(names) if file_size == 0: continue count = count + 1 print('process:', names) xmlfilepath = names domobj = xmldom.parse(xmlfilepath) # 得到元素对象 elementobj = domobj.documentElement # 获得子标签 subElementObj = elementobj.getElementsByTagName("object") for s in subElementObj: label = s.getElementsByTagName("name")[0].firstChild.data label_count = labels.get(label, 0) labels[label] = label_count + 1 print('文件标注个数:', count) return labels if __name__ == '__main__': annotation_path = "F:/develop_code/python/ssd-pytorch/VOCdevkit/VOC2007/Annotations" label = voc_label_statistics(annotation_path) print(label)

2) VOC 标注文件解析

-

简介:

将 VOC 数据集的标注汇总到 txt 中,(train.txt,val.txt,test.txt — 分别对应训练集,验证集,测试集)

如下图所示:每一行代表 一张图片的路径 以 及 对应的标注框(xyxy)和 label

-

python代码:

#!/usr/bin/env python # encoding: utf-8 ''' @Author : pentiumCM @Email : [email protected] @Software: PyCharm @File : voc_annotation.py @Time : 2020/11/25 16:54 @desc : VOC 标注文件处理 ''' import os import xml.etree.ElementTree as ET def convert(size, box): """ VOC 的标注转为 yolo的标注,即 xyxy -> xywh :param size: 图片尺寸 (w, h) :param box: 标注框(xyxy) :return: """ dw = 1. / size[0] dh = 1. / size[1] # 中心点坐标 x = (box[0] + box[2]) / 2.0 y = (box[1] + box[3]) / 2.0 # 宽高 w = box[2] - box[0] h = box[3] - box[1] # 归一化 x = x * dw w = w * dw y = y * dh h = h * dh return (x, y, w, h) def xml_annotation(Annotations_path, image_id, out_file, classes, label_type): """ 解析 VOC 的 xml 标注文件,汇总到 txt 文件中 :param Annotations_path 数据集标注文件夹 :param image_id: 对应于每张图片标注的索引 :param out_file: 汇总数据集标注 的输出文件 :param classes: 数据集类别 :param label_type: 标注解析方式:xyxy / xywh :return: """ # 做该校验,为了方便对背景进行训练 annotation_filepath = os.path.join(Annotations_path, image_id + '.xml') file_size = os.path.getsize(annotation_filepath) if file_size > 0: in_file = open(os.path.join(Annotations_path, '%s.xml' % image_id), encoding='utf-8') tree = ET.parse(in_file) root = tree.getroot() size = root.find('size') w = int(size.find('width').text) h = int(size.find('height').text) for obj in root.iter('object'): difficult = obj.find('difficult').text cls = obj.find('name').text if cls not in classes or int(difficult) == 1: continue cls_id = classes.index(cls) xmlbox = obj.find('bndbox') b = ( int(float(xmlbox.find('xmin').text)), int(float(xmlbox.find('ymin').text)), int(float(xmlbox.find('xmax').text)), int(float(xmlbox.find('ymax').text))) b_box = b if label_type == 'xywh': # 标注格式:x,y,w,h,label b_box = convert((w, h), b) elif label_type == 'xyxy': # 标注格式:x,y,x,y,label pass # yolo: out_file.write(str(cls_id) + " " + " ".join([str(a) for a in b_box]) + '\n') out_file.write(" " + ",".join([str(a) for a in b_box]) + ',' + str(cls_id)) def convert_annotation(dataset_path, classes, label_type='xyxy'): """ 2. 处理标注结果:汇总数据集标注到 txt :param dataset_path VOC 数据集路径 :param classes: VOC 数据集标注类别 :param label_type: 标注解析方式:xyxy / xywh :return: """ JPEGImages_path = os.path.join(dataset_path, 'JPEGImages') Annotations_path = os.path.join(dataset_path, 'Annotations') ImageSets_path = os.path.join(dataset_path, 'ImageSets') assert os.path.exists(JPEGImages_path) assert os.path.exists(Annotations_path) assert os.path.exists(ImageSets_path) sets = ['train', 'test', 'val'] for image_set in sets: # 数据集划分文件:ImageSets/Main/train.txt ds_divide_file = os.path.join(ImageSets_path, 'Main', '%s.txt' % (image_set)) # (训练,验证,测试集)数据集索引 image_ids = open(ds_divide_file).read().strip().split() list_file = open(os.path.join(dataset_path, '%s.txt' % (image_set)), 'w', encoding='utf-8') for image_id in image_ids: list_file.write(os.path.join(JPEGImages_path, '%s.jpg' % (image_id))) xml_annotation(Annotations_path, image_id, list_file, classes, label_type) list_file.write('\n') list_file.close() if __name__ == '__main__': # 数据集路径 dataset_path = 'mgq_ds' classes = ["aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"] label_type = 'xyxy' convert_annotation(dataset_path='F:/develop_code/python/ssd-pytorch/VOCdevkit/VOC2007', classes=classes, label_type=label_type)

2. ImageSets

-

简介:

ImageSets文件夹下存放了三个文件,分别是 Layout,Main,Segmentation

- Main:

ImageSets里Main文件夹,存放训练的索引文件,用到4个文件:- train.txt: 是用来训练的图片文件的文件名列表 (训练集)

- val.txt: 是用来验证的图片文件的文件名列表 (验证集)

- trianval.txt:是用来训练和验证的图片文件的文件名列表 (训练集 + 验证集)

- test.txt:是用来测试的图片文件的文件名列表 (测试集)

- Main:

1) VOC数据集划分

-

完整代码:

(训练集,验证集,测试集)

# !/usr/bin/env python # encoding: utf-8 ''' @Author : pentiumCM @Email : [email protected] @Software: PyCharm @File : dataset_divide.py @Time : 2020/11/25 16:51 @desc : 生成:trainval.txt,train.txt,val.txt,test.txt 为ImageSets文件夹下面Main子文件夹中的训练集+验证集、训练集、验证集、测试集的划分 ''' import os import random def ds_partition(annotation_filepath, ds_divide_save__path, train_percent=0.8, trainval_percent=1): """ 数据集划分:训练集,验证集,测试集。 在ImageSets/Main/生成 train.txt,val.txt,trainval.txt,test.txt :param annotation_filepath: 标注文件的路径 Annotations :param ds_divide_save__path: 数据集划分保存的路径 ImageSets/Main/ :param train_percent: 训练集占(训练集+验证集)的比例 :param trainval_percent: (训练集+验证集)占总数据集的比例。测试集所占比例为:1-trainval_percent :return: """ if not os.path.exists(ds_divide_save__path): os.makedirs(ds_divide_save__path) assert os.path.exists(annotation_filepath) assert os.path.exists(ds_divide_save__path) # train_percent:训练集占(训练集+验证集)的比例 # train_percent = 0.8 # trainval_percent:(训练集+验证集)占总数据集的比例。测试集所占比例为:1-trainval_percent # trainval_percent = 1 temp_xml = os.listdir(annotation_filepath) total_xml = [] for xml in temp_xml: if xml.endswith(".xml"): total_xml.append(xml) num = len(total_xml) list = range(num) tv_len = int(num * trainval_percent) tr_len = int(tv_len * train_percent) test_len = int(num - tv_len) trainval = random.sample(list, tv_len) train = random.sample(trainval, tr_len) print("train and val size:", tv_len) print("train size:", tr_len) print("test size:", test_len) ftrainval = open(os.path.join(ds_divide_save__path, 'trainval.txt'), 'w') ftest = open(os.path.join(ds_divide_save__path, 'test.txt'), 'w') ftrain = open(os.path.join(ds_divide_save__path, 'train.txt'), 'w') fval = open(os.path.join(ds_divide_save__path, 'val.txt'), 'w') for i in list: name = total_xml[i][:-4] + '\n' if i in trainval: ftrainval.write(name) if i in train: ftrain.write(name) else: fval.write(name) else: ftest.write(name) ftrainval.close() ftrain.close() fval.close() ftest.close() if __name__ == '__main__': # 数据集根路径 dataset_path = r'F:/develop_code/python/ssd-pytorch/VOCdevkit/VOC2007' annotation_filepath = os.path.join(dataset_path, 'Annotations') divide_save_path = os.path.join(dataset_path, 'ImageSets/Main') ds_partition(annotation_filepath, divide_save_path)

3. JPEGImages

-

简介:

JPEGImages文件夹下存放的是数据集图片,包含训练和测试图片。

4. SegmentationClass

5. SegmentationObject

三 VOC 数据集工具类

-

工具类简介:

- 工具类包含:

- VOC 数据集类别统计

- VOC数据集划分:【(训练集,验证集),测试集】

- VOC标注结果处理,包括:

- VOC 的标注转为 yolo的标注,即 xyxy -> xywh

- 解析 VOC 的 xml 标注文件

- 汇总数据集标注到 txt

- 工具类包含:

-

完整代码:

#!/usr/bin/env python # encoding: utf-8 ''' @Author : pentiumCM @Email : [email protected] @Software: PyCharm @File : voc_data_util.py @Time : 2021/6/30 21:31 @desc : VOC 数据集工具类 ''' import os import random import xml.dom.minidom as xmldom import xml.etree.ElementTree as ET class VOCDataUtil: """ VOC 数据集工具类 """ def __init__(self, dataset_path, JPEGImages_dir='JPEGImages', annotation_dir='Annotations', ImageSets_dir='ImageSets', label_type='xyxy'): """ VOC 格式数据集初始化 :param dataset_path: 数据集文件夹根路径 :param JPEGImages_dir: 图片文件夹 :param annotation_dir: 标注文件夹 :param ImageSets_dir: 数据集划分文件夹 :param label_type: 解析标注方式 xyxy(ssd)/ xywh(yolo) """ self.dataset_path = dataset_path self.JPEGImages = os.path.join(self.dataset_path, JPEGImages_dir) self.Annotations = os.path.join(self.dataset_path, annotation_dir) self.ImageSets = os.path.join(self.dataset_path, ImageSets_dir) self.label_type = label_type assert os.path.exists(self.JPEGImages) assert os.path.exists(self.Annotations) def fill_blanklabel(self): """ 填充 空白(背景)标注文件 :return: """ JPEGImages_names = [i.split('.')[0] for i in os.listdir(self.JPEGImages)] Annotations_names = [i.split('.')[0] for i in os.listdir(self.Annotations)] background_list = list(set(JPEGImages_names).difference(set(Annotations_names))) # 前面有而后面中没有的 for item in background_list: file_name = item + '.xml' file_path = os.path.join(self.Annotations, file_name) open(file_path, "w") print('process:', file_path) def remove_blanklabel(self): """ 删除 空白(背景)标注文件 :return: """ Annotations_names = [os.path.join(self.Annotations, i) for i in os.listdir(self.Annotations)] for item in Annotations_names: file_size = os.path.getsize(item) if file_size == 0: os.remove(item) print('process:', item) def voc_label_statistics(self): ''' voc数据集类别统计 :return: {'class1':'count',...} ''' count = 0 annotation_names = [os.path.join(self.Annotations, i) for i in os.listdir(self.Annotations)] labels = dict() for names in annotation_names: names_arr = names.split('.') file_type = names_arr[-1] if file_type != 'xml': continue file_size = os.path.getsize(names) if file_size == 0: continue count = count + 1 print('process:', names) xmlfilepath = names domobj = xmldom.parse(xmlfilepath) # 得到元素对象 elementobj = domobj.documentElement # 获得子标签 subElementObj = elementobj.getElementsByTagName("object") for s in subElementObj: label = s.getElementsByTagName("name")[0].firstChild.data label_count = labels.get(label, 0) labels[label] = label_count + 1 print('文件标注个数:', count) return labels def dataset_divide(self, train_percent=0.8, trainval_percent=1): """ 1. 划分数据集:【(训练集,验证集),测试集】 在ImageSets/Main/生成 trainval.txt,train.txt,val.txt,test.txt :param train_percent: train_percent = 训练集 / (训练集 + 验证集) :param trainval_percent: trainval_percent = (训练集 + 验证集) / 总数据集。测试集所占比例为:1-trainval_percent :return: """ # 数据集划分保存的路径 ImageSets/Main/ ds_divide_save__path = os.path.join(self.ImageSets, 'Main') if not os.path.exists(ds_divide_save__path): os.makedirs(ds_divide_save__path) # train_percent:训练集占(训练集+验证集)的比例 # train_percent = 0.8 # trainval_percent:(训练集+验证集)占总数据集的比例。测试集所占比例为:1-trainval_percent # trainval_percent = 1 temp_xml = os.listdir(self.Annotations) total_xml = [] for xml in temp_xml: if xml.endswith(".xml"): total_xml.append(xml) num = len(total_xml) list = range(num) tv_len = int(num * trainval_percent) tr_len = int(tv_len * train_percent) test_len = int(num - tv_len) trainval = random.sample(list, tv_len) train = random.sample(trainval, tr_len) print("train and val size:", tv_len) print("train size:", tr_len) print("test size:", test_len) ftrainval = open(os.path.join(ds_divide_save__path, 'trainval.txt'), 'w') ftest = open(os.path.join(ds_divide_save__path, 'test.txt'), 'w') ftrain = open(os.path.join(ds_divide_save__path, 'train.txt'), 'w') fval = open(os.path.join(ds_divide_save__path, 'val.txt'), 'w') for i in list: name = total_xml[i][:-4] + '\n' if i in trainval: ftrainval.write(name) if i in train: ftrain.write(name) else: fval.write(name) else: ftest.write(name) ftrainval.close() ftrain.close() fval.close() ftest.close() def convert(self, size, box): """ VOC 的标注转为 yolo的标注,即 xyxy -> xywh :param size: 图片尺寸 (w, h) :param box: 标注框(xyxy) :return: """ dw = 1. / size[0] dh = 1. / size[1] # 中心点坐标 x = (box[0] + box[2]) / 2.0 y = (box[1] + box[3]) / 2.0 # 宽高 w = box[2] - box[0] h = box[3] - box[1] # 归一化 x = x * dw w = w * dw y = y * dh h = h * dh return (x, y, w, h) def xml_annotation(self, image_id, out_file, classes): """ 解析 voc 的 xml 标注文件 :param image_id: 对应于每张图片标注的索引 :param out_file: 汇总数据集标注 的输出文件 :param classes: 数据集类别 :return: """ # 做该校验,为了方便对背景进行训练 annotation_filepath = os.path.join(self.Annotations, image_id + '.xml') file_size = os.path.getsize(annotation_filepath) if file_size > 0: in_file = open(os.path.join(self.Annotations, '%s.xml' % image_id), encoding='utf-8') tree = ET.parse(in_file) root = tree.getroot() size = root.find('size') w = int(size.find('width').text) h = int(size.find('height').text) for obj in root.iter('object'): difficult = obj.find('difficult').text cls = obj.find('name').text if cls not in classes or int(difficult) == 1: continue cls_id = classes.index(cls) xmlbox = obj.find('bndbox') b = ( int(float(xmlbox.find('xmin').text)), int(float(xmlbox.find('ymin').text)), int(float(xmlbox.find('xmax').text)), int(float(xmlbox.find('ymax').text))) b_box = b if self.label_type == 'xywh': # 标注格式:x,y,w,h,label b_box = self.convert((w, h), b) elif self.label_type == 'xyxy': # 标注格式:x,y,x,y,label pass # yolo: out_file.write(str(cls_id) + " " + " ".join([str(a) for a in b_box]) + '\n') out_file.write(" " + ",".join([str(a) for a in b_box]) + ',' + str(cls_id)) def convert_annotation(self, classes): """ 2. 处理标注结果:汇总数据集标注到 txt :param classes: 数据集类别 :return: """ sets = ['train', 'test', 'val'] for image_set in sets: # 数据集划分文件:ImageSets/Main/train.txt ds_divide_file = os.path.join(self.ImageSets, 'Main', '%s.txt' % (image_set)) # (训练,验证,测试集)数据集索引 image_ids = open(ds_divide_file).read().strip().split() list_file = open(os.path.join(self.dataset_path, '%s.txt' % (image_set)), 'w', encoding='utf-8') for image_id in image_ids: list_file.write(os.path.join(self.JPEGImages, '%s.jpg' % (image_id))) self.xml_annotation(image_id, list_file, classes) list_file.write('\n') list_file.close() if __name__ == '__main__': # VOC_CLASS = [ # "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", # "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor" # ] vocutil = VOCDataUtil(dataset_path='E:/project/nongda/code/ssd-keras-nd/VOCdevkit/VOC2007', label_type='xyxy') # 1. 汇总数据集标注类别 label_dict = vocutil.voc_label_statistics() cus_classes = [] for key in label_dict.keys(): cus_classes.append(key) # 按照字符串进行正序排序 cus_classes.sort() # 2. 划分数据集 vocutil.dataset_divide() # 3. 处理标注结果 vocutil.convert_annotation(cus_classes)

四、参考资料

- https://blog.csdn.net/mzpmzk/article/details/88065416