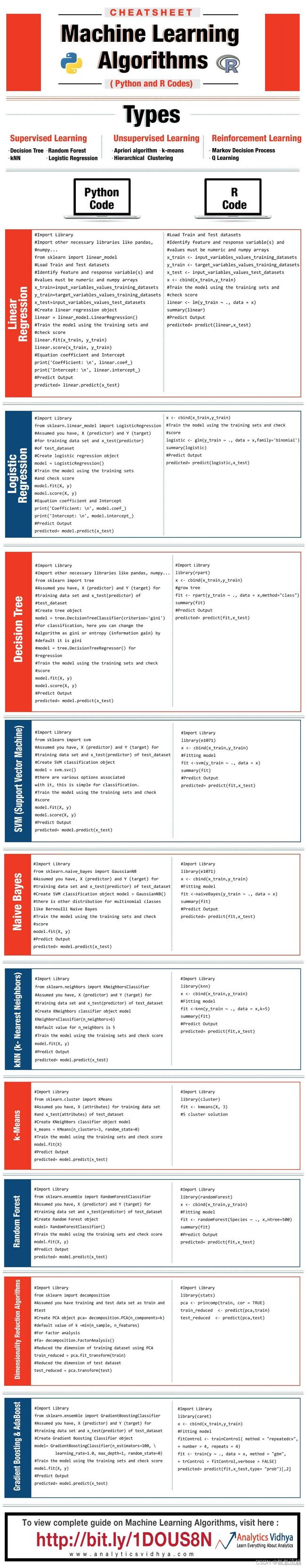

Commonly Used Machine Learning Algorithms (with Python and R Code)

A side-by-side comparison of Python and R code for the 10 most commonly used machine learning algorithms:

① Linear Regression

② Logistic Regression

③ Decision Tree

④ Support Vector Machine (SVM)

⑤ Naive Bayes

⑥ k-Nearest Neighbors (kNN)

⑦ k-Means

⑧ Random Forest

⑨ Principal Component Analysis (PCA)

⑩ Gradient Boosting

- GBM

- XGBoost

- LightGBM

- CatBoost

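I. Linear Regression

There are two main types of linear regression: simple linear regression and multiple linear regression. Simple linear regression uses a single independent variable. Multiple linear regression, as the name suggests, uses more than one independent variable. When searching for the best-fit line, you can also fit a polynomial or a curve instead of a straight line; these variants are called polynomial or curvilinear regression.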
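The following minimal NumPy sketch makes the distinction concrete. It is not the scikit-learn implementation. It fits all three variants by ordinary least squares on small synthetic, noise-free datasets. The generating coefficients (y = 2 + 3x, and so on) are arbitrary assumptions. Polynomial regression is still linear in its coefficients: it simply adds x² as an extra feature.

```python
import numpy as np

def fit_least_squares(X, y):
    """Return (intercept, coefficients) that minimise the squared error."""
    X = np.asarray(X, dtype=float)
    # Prepend a column of ones so the intercept is estimated together with the slopes
    design = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[0], beta[1:]

rng = np.random.default_rng(0)

# Simple linear regression: one independent variable, y = 2 + 3x
x = rng.uniform(0, 10, size=50)
b0, b = fit_least_squares(x.reshape(-1, 1), 2 + 3 * x)
print("simple:", round(b0, 3), np.round(b, 3))

# Multiple linear regression: two independent variables, y = 1 + 2*x1 - 0.5*x2
X2 = rng.uniform(0, 10, size=(50, 2))
y2 = 1 + 2 * X2[:, 0] - 0.5 * X2[:, 1]
b0_m, b_m = fit_least_squares(X2, y2)
print("multiple:", round(b0_m, 3), np.round(b_m, 3))

# Polynomial regression: x and x**2 are used as two features of a linear model
y_poly = 1 - x + 0.5 * x ** 2
b0_p, b_p = fit_least_squares(np.column_stack([x, x ** 2]), y_poly)
print("polynomial:", round(b0_p, 3), np.round(b_p, 3))
```

On real data with noise, the recovered coefficients will only approximate the true ones.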

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# read the train and test datasets
# (assumes all feature columns are already numeric, e.g. categoricals encoded)
train_data = pd.read_csv('train.csv')
test_data = pd.read_csv('test.csv')
print(train_data.head())

# shape of the dataset
print('\nShape of training data :', train_data.shape)
print('\nShape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Item_Outlet_Sales

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Item_Outlet_Sales'])
train_y = train_data['Item_Outlet_Sales']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Item_Outlet_Sales'])
test_y = test_data['Item_Outlet_Sales']

# Create the object of the Linear Regression model.
# You can also add other parameters and test your code here,
# e.g. fit_intercept and positive (`normalize` was removed in scikit-learn 1.2).
# Documentation of sklearn LinearRegression:
# https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html
model = LinearRegression()

# fit the model with the training data
model.fit(train_x, train_y)

# coefficients of the trained model
print('\nCoefficient of model :', model.coef_)

# intercept of the model
print('\nIntercept of model', model.intercept_)

# predict the target on the training dataset
predict_train = model.predict(train_x)
print('\nItem_Outlet_Sales on training data', predict_train)

# Root Mean Squared Error on training dataset
rmse_train = mean_squared_error(train_y, predict_train) ** 0.5
print('\nRMSE on train dataset : ', rmse_train)

# predict the target on the testing dataset
predict_test = model.predict(test_x)
print('\nItem_Outlet_Sales on test data', predict_test)

# Root Mean Squared Error on testing dataset
rmse_test = mean_squared_error(test_y, predict_test) ** 0.5
print('\nRMSE on test dataset : ', rmse_test)
```

2. R code

```r
# Load train and test datasets
# Identify feature and response variable(s); values must be numeric.
# The right-hand sides below are placeholders for your own data frames.
x_train <- input_variables_values_training_datasets
y_train <- target_variables_values_training_datasets
x_test <- input_variables_values_test_datasets
x <- cbind(x_train, y_train)

# Train the model using the training sets and check score
linear <- lm(y_train ~ ., data = x)
summary(linear)

# Predict output (x_test must have the same column names as x_train)
predicted <- predict(linear, newdata = x_test)
```

II. Logistic Regression

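Don't be confused by its name! It is a classification algorithm, not a regression algorithm. It estimates discrete values (binary values such as 0/1, yes/no, true/false) from a given set of independent variables. Put simply, it predicts the probability that an event occurs by fitting the data to a logit function, which is why it is also called logit regression. Because it predicts a probability, its output always lies between 0 and 1.

Again, let's try to understand this with a simple example.

Suppose a friend gives you a puzzle to solve. There are only two possible outcomes: either you solve it or you don't. Now imagine you are given a wide range of puzzles and quizzes to find out which subjects you are good at. The result of this study might look like this: if you are given a tenth-grade trigonometry problem, you have a 70% chance of solving it; if it is a fifth-grade history question, the probability of getting the answer is only 30%. This is what logistic regression gives you.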
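The link between the logit function and a probability can be shown in a short NumPy sketch. It fits a one-feature logistic regression by plain batch gradient descent on the log-loss. This is a simple stand-in, not the solver scikit-learn uses. The data, learning rate and iteration count are illustrative assumptions. The sketch checks that every predicted probability stays strictly between 0 and 1 and that the log-odds of each prediction equal the fitted linear score.

```python
import numpy as np

def sigmoid(z):
    # Inverse of the logit function: maps any real score to (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Batch gradient descent on the average log-loss."""
    X = np.column_stack([np.ones(len(X)), X])   # intercept column
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ w)
        grad = X.T @ (p - y) / len(y)           # gradient of the average log-loss
        w -= lr * grad
    return w

def predict_proba(w, X):
    return sigmoid(np.column_stack([np.ones(len(X)), X]) @ w)

# Synthetic one-feature data: larger x makes the event (y = 1) more likely
x = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0])
y = np.array([0, 0, 0, 1, 0, 1, 0, 1, 1, 1])
w = fit_logistic(x.reshape(-1, 1), y)

probs = predict_proba(w, x.reshape(-1, 1))
print("weights:", np.round(w, 3))
print("P(y=1):", np.round(probs, 3))
# logit(p) = log(p / (1 - p)) recovers the linear score w0 + w1 * x
print("log-odds equal the linear score:",
      np.allclose(np.log(probs / (1 - probs)), w[0] + w[1] * x))
```

A probability threshold, typically 0.5, then turns these outputs into the 0/1 class labels that `model.predict` returns.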

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')
print(train_data.head())

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the Logistic Regression model.
# You can also add other parameters and test your code here,
# e.g. fit_intercept and penalty.
# Documentation of sklearn LogisticRegression:
# https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html
model = LogisticRegression()

# fit the model with the training data
model.fit(train_x, train_y)

# coefficients of the trained model
print('Coefficient of model :', model.coef_)

# intercept of the model
print('Intercept of model', model.intercept_)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('Target on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('accuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('Target on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('accuracy_score on test dataset : ', accuracy_test)
```

2. R code

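```r
# x_train, y_train and x_test are loaded as in the linear regression example;
# y_train should be 0/1 (or a two-level factor)
x <- cbind(x_train, y_train)

# Train the model using the training sets and check score
logistic <- glm(y_train ~ ., data = x, family = 'binomial')
summary(logistic)

# Predict output; type = "response" returns probabilities instead of log-odds
predicted <- predict(logistic, newdata = x_test, type = "response")
```

III. Decision Tree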
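This section goes straight to code. The following plain-Python sketch illustrates one step of what `DecisionTreeClassifier` and `rpart` do at every node: try each candidate threshold on a feature and keep the one with the lowest weighted Gini impurity. Gini is scikit-learn's default criterion. This covers a single split only, not a full tree. The ages and labels are invented toy data.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(values, labels):
    """Return (threshold, weighted_gini) of the best 'value <= threshold' split."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, gini(labels))          # baseline: no split at all
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue                      # cannot split between equal values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best

# Toy feature (e.g. a passenger's age) and binary target
ages = [22, 38, 26, 35, 54, 2, 27, 14, 4, 58]
survived = [0, 1, 1, 1, 0, 1, 0, 1, 1, 0]
print("root impurity:", gini(survived))
print("best split:", best_split(ages, survived))
```

A full tree repeats this search over every feature and recurses into both child nodes until a stopping rule such as `max_depth` is reached.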

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the Decision Tree model.
# You can also add other parameters and test your code here,
# e.g. max_depth and max_features.
# Documentation of sklearn DecisionTreeClassifier:
# https://scikit-learn.org/stable/modules/generated/sklearn.tree.DecisionTreeClassifier.html
model = DecisionTreeClassifier()

# fit the model with the training data
model.fit(train_x, train_y)

# depth of the decision tree
print('Depth of the Decision Tree :', model.get_depth())

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('Target on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('accuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('Target on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('accuracy_score on test dataset : ', accuracy_test)
```

2. R code

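```r
library(rpart)
x <- cbind(x_train, y_train)

# grow tree; method = "class" builds a classification tree
fit <- rpart(y_train ~ ., data = x, method = "class")
summary(fit)

# Predict output; type = "class" returns labels instead of class probabilities
predicted <- predict(fit, newdata = x_test, type = "class")
```

IV. Support Vector Machine (SVM)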

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the Support Vector Classifier model.
# You can also add other parameters and test your code here,
# e.g. kernel and degree.
# Documentation of sklearn Support Vector Classifier:
# https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html
model = SVC()

# fit the model with the training data
model.fit(train_x, train_y)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('Target on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('accuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('Target on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('accuracy_score on test dataset : ', accuracy_test)
```

2. R code

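```r
library(e1071)
x <- cbind(x_train, y_train)

# svm() performs classification only when the response is a factor
x$y_train <- as.factor(x$y_train)

# Fitting model
fit <- svm(y_train ~ ., data = x)
summary(fit)

# Predict output
predicted <- predict(fit, x_test)
```

V. Naive Bayes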
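The following plain-Python sketch illustrates what `GaussianNB` models. For each class it fits a prior probability plus a mean and variance for every feature. It then scores a new row by adding the per-feature Gaussian log-likelihoods to the log prior. Adding the features independently is the "naive" conditional-independence assumption. The fixed `var_floor` of 1e-9 is a simplified stand-in for `GaussianNB`'s `var_smoothing`, which scales with the data. The data points are made up.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y, var_floor=1e-9):
    """For each class: prior probability plus per-feature mean and variance."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    model = {}
    for label, rows in groups.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        variances = [sum((v - m) ** 2 for v in col) / n + var_floor
                     for col, m in zip(columns, means)]
        model[label] = (n / len(y), means, variances)
    return model

def predict_gaussian_nb(model, row):
    """Return the class with the highest log prior + summed Gaussian log-likelihoods."""
    def log_posterior(label):
        prior, means, variances = model[label]
        score = math.log(prior)
        # "naive" step: features are treated as independent given the class
        for v, m, var in zip(row, means, variances):
            score += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        return score
    return max(model, key=log_posterior)

X = [[1.0, 2.1], [1.2, 1.9], [0.8, 2.0], [3.9, 0.9], [4.1, 1.1], [4.0, 1.0]]
y = [0, 0, 0, 1, 1, 1]
nb = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(nb, [1.1, 2.0]), predict_gaussian_nb(nb, [3.8, 1.2]))  # prints 0 1
```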

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the Naive Bayes model.
# You can also add other parameters and test your code here,
# e.g. var_smoothing.
# Documentation of sklearn GaussianNB:
# https://scikit-learn.org/stable/modules/generated/sklearn.naive_bayes.GaussianNB.html
model = GaussianNB()

# fit the model with the training data
model.fit(train_x, train_y)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('Target on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('accuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('Target on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('accuracy_score on test dataset : ', accuracy_test)
```

2. R code

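```r
library(e1071)
x <- cbind(x_train, y_train)

# naiveBayes() expects a categorical (factor) response
x$y_train <- as.factor(x$y_train)

# Fitting model
fit <- naiveBayes(y_train ~ ., data = x)
summary(fit)

# Predict output
predicted <- predict(fit, x_test)
```

VI. k-Nearest Neighbors (kNN)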
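kNN has no real training step: a prediction is a vote among the closest stored examples. The following minimal plain-Python sketch assumes Euclidean distance and unweighted majority voting. These match the defaults of `KNeighborsClassifier`, whose `n_neighbors` also defaults to 5. The two toy clusters are invented.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=5):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every stored training point
    neighbours = sorted(
        (math.dist(row, query), label) for row, label in zip(train_X, train_y)
    )
    k_labels = [label for _, label in neighbours[:k]]
    return Counter(k_labels).most_common(1)[0][0]

train_X = [[1, 1], [1, 2], [2, 1], [2, 2], [6, 6], [6, 7], [7, 6], [7, 7]]
train_y = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(knn_predict(train_X, train_y, [1.5, 1.5]))  # prints a
print(knn_predict(train_X, train_y, [6.5, 6.5]))  # prints b
```

Every prediction scans all training points. Libraries such as scikit-learn speed this up with tree structures (KD-tree, ball tree), which is what its `leaf_size` parameter tunes.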

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the K-Nearest Neighbor model.
# You can also add other parameters and test your code here,
# e.g. n_neighbors, leaf_size.
# Documentation of sklearn K-Neighbors Classifier:
# https://scikit-learn.org/stable/modules/generated/sklearn.neighbors.KNeighborsClassifier.html
model = KNeighborsClassifier()

# fit the model with the training data
model.fit(train_x, train_y)

# Number of neighbors used to predict the target
print('\nThe number of neighbors used to predict the target : ', model.n_neighbors)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('\nTarget on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('accuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('Target on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('accuracy_score on test dataset : ', accuracy_test)
```

2. R code

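```r
# knn() lives in the 'class' package (there is no 'knn' package)
library(class)

# class::knn() has no formula interface and no separate fit/predict step:
# it classifies the rows of x_test directly from the training data
predicted <- knn(train = x_train, test = x_test, cl = y_train, k = 5)
```

VII. k-Means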
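k-means (Lloyd's algorithm) alternates two steps until the centroids stop moving. First it assigns each point to its nearest centroid. Then it moves each centroid to the mean of its assigned points. The NumPy sketch below implements that loop. To keep the example deterministic, it seeds the centroids by farthest-point selection. This is a simple stand-in for the k-means++ initialisation scikit-learn uses by default. The three Gaussian blobs are synthetic.

```python
import numpy as np

def farthest_point_init(X, k):
    """Start at X[0], then repeatedly add the point farthest from all chosen centroids."""
    centroids = [X[0]]
    for _ in range(1, k):
        dist_to_nearest = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[dist_to_nearest.argmax()])
    return np.array(centroids)

def kmeans(X, k, max_iter=100):
    """Lloyd's algorithm: alternate assignment and update steps until centroids settle."""
    centroids = farthest_point_init(X, k)
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its points (keep it if the cluster is empty)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Synthetic data: three well-separated blobs of 20 points each
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(center, 0.3, size=(20, 2)) for center in [(0, 0), (5, 5), (0, 5)]])
labels, centroids = kmeans(X, k=3)
print("cluster sizes:", np.bincount(labels))
print("centroids:\n", np.round(centroids, 2))
```

With random initialisation, Lloyd's algorithm can converge to a poor local optimum. This is why scikit-learn's `KMeans` can run several initialisations (`n_init`) and keep the best one.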

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.cluster import KMeans

# read the train and test datasets
# (assumes all columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to divide the training data into different clusters
# and predict which cluster each data point belongs to.

# Create the object of the K-Means model.
# You can also add other parameters and test your code here,
# e.g. n_clusters and max_iter.
# Documentation of sklearn KMeans:
# https://scikit-learn.org/stable/modules/generated/sklearn.cluster.KMeans.html
model = KMeans()

# fit the model with the training data
model.fit(train_data)

# Number of clusters (8 by default)
print('\nDefault number of Clusters : ', model.n_clusters)

# predict the clusters on the train dataset
predict_train = model.predict(train_data)
print('\nClusters on train data', predict_train)

# predict the clusters on the test dataset
predict_test = model.predict(test_data)
print('Clusters on test data', predict_test)

# Now, we will train a model with n_clusters = 3
model_n3 = KMeans(n_clusters=3)

# fit the model with the training data
model_n3.fit(train_data)

# Number of clusters
print('\nNumber of Clusters : ', model_n3.n_clusters)

# predict the clusters on the train dataset
predict_train_3 = model_n3.predict(train_data)
print('\nClusters on train data', predict_train_3)

# predict the clusters on the test dataset
predict_test_3 = model_n3.predict(test_data)
print('Clusters on test data', predict_test_3)
```

2. R code

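```r
# kmeans() is part of base R's stats package, so no extra library is needed
# X is a placeholder for a numeric matrix or data frame of features
fit <- kmeans(X, centers = 3)  # 3-cluster solution
```

VIII. Random Forest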

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# view the top 3 rows of the dataset
print(train_data.head(3))

# shape of the dataset
print('\nShape of training data :', train_data.shape)
print('\nShape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the Random Forest model.
# You can also add other parameters and test your code here,
# e.g. n_estimators and max_depth.
# Documentation of sklearn RandomForestClassifier:
# https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html
model = RandomForestClassifier()

# fit the model with the training data
model.fit(train_x, train_y)

# number of trees used
print('Number of Trees used : ', model.n_estimators)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('\nTarget on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('\naccuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('\nTarget on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('\naccuracy_score on test dataset : ', accuracy_test)
```

2. R code

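```r
library(randomForest)
x <- cbind(x_train, y_train)

# a factor response makes randomForest() build a classification forest
x$y_train <- as.factor(x$y_train)

# Fitting model
fit <- randomForest(y_train ~ ., data = x, ntree = 500)
summary(fit)

# Predict output
predicted <- predict(fit, x_test)
```

IX. Principal Component Analysis (PCA)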
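PCA finds orthogonal directions of maximum variance in the mean-centred data. One common way to compute them, also used by scikit-learn's `PCA`, is a singular value decomposition. The minimal NumPy class below (the name `SimplePCA` is hypothetical) mirrors the fit/transform split. The mean and principal axes are learned from the training data only and then reused unchanged for the test data. This is why test data should go through `transform`, not `fit_transform`. The 5-feature dataset is synthetic, generated from 2 hidden factors.

```python
import numpy as np

class SimplePCA:
    """PCA via SVD of the mean-centred training data."""
    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X):
        self.mean_ = X.mean(axis=0)
        # Rows of vt are the principal axes, ordered by decreasing variance
        _, s, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        self.explained_variance_ = (s ** 2 / (len(X) - 1))[: self.n_components]
        return self

    def transform(self, X):
        # Centre with the *training* mean, project onto the *training* axes
        return (X - self.mean_) @ self.components_.T

rng = np.random.default_rng(0)
# Synthetic data: 5 features that are noisy mixtures of 2 hidden factors
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
data = latent @ mixing + 0.01 * rng.normal(size=(200, 5))
train, test = data[:150], data[150:]

pca = SimplePCA(n_components=2).fit(train)
new_train = pca.transform(train)
new_test = pca.transform(test)   # transform only: never refit on test data
print(new_train.shape, new_test.shape)
print("explained variance:", np.round(pca.explained_variance_, 3))
```

Because the data really has only two underlying factors, two components keep almost all of its variance. On real data, `explained_variance_ratio_` in scikit-learn helps choose `n_components`.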

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train.csv')
test_data = pd.read_csv('test.csv')

# view the top 3 rows of the dataset
print(train_data.head(3))

# shape of the dataset
print('\nShape of training data :', train_data.shape)
print('\nShape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Item_Outlet_Sales

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Item_Outlet_Sales'])
train_y = train_data['Item_Outlet_Sales']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Item_Outlet_Sales'])
test_y = test_data['Item_Outlet_Sales']

print('\nTraining model with {} dimensions.'.format(train_x.shape[1]))

# create object of model
model = LinearRegression()

# fit the model with the training data
model.fit(train_x, train_y)

# predict the target on the train dataset
predict_train = model.predict(train_x)

# RMSE on train dataset
rmse_train = mean_squared_error(train_y, predict_train) ** 0.5
print('\nRMSE on train dataset : ', rmse_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)

# RMSE on test dataset
rmse_test = mean_squared_error(test_y, predict_test) ** 0.5
print('\nRMSE on test dataset : ', rmse_test)

# Create the object of the PCA (Principal Component Analysis) model
# and reduce the dimensions of the data to 12.
# You can also add other parameters and test your code here,
# e.g. svd_solver, iterated_power.
# Documentation of sklearn PCA:
# https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.PCA.html
model_pca = PCA(n_components=12)

# fit PCA on the training data only, then apply the same projection to the test data
new_train = model_pca.fit_transform(train_x)
new_test = model_pca.transform(test_x)

print('\nTraining model with {} dimensions.'.format(new_train.shape[1]))

# create object of model
model_new = LinearRegression()

# fit the model with the training data
model_new.fit(new_train, train_y)

# predict the target on the new train dataset
predict_train_pca = model_new.predict(new_train)

# RMSE on train dataset
rmse_train_pca = mean_squared_error(train_y, predict_train_pca) ** 0.5
print('\nRMSE on new train dataset : ', rmse_train_pca)

# predict the target on the new test dataset
predict_test_pca = model_new.predict(new_test)

# RMSE on test dataset
rmse_test_pca = mean_squared_error(test_y, predict_test_pca) ** 0.5
print('\nRMSE on new test dataset : ', rmse_test_pca)
```

2. R code

```r
library(stats)

# train and test are placeholders for numeric feature data (target column excluded)
pca <- princomp(train, cor = TRUE)
train_reduced <- predict(pca, train)
test_reduced <- predict(pca, test)
```

X. Gradient Boosting
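All four libraries below build the same kind of model: an additive ensemble in which each new tree is fit to the errors of the trees before it. For squared-error loss, those errors are simply the residuals, which are the negative gradient of the loss. The NumPy sketch below uses one-feature regression stumps and a learning rate of 0.1. Both are illustrative assumptions; the libraries use deeper trees, classification losses and many refinements. The sketch shows the training error falling as stumps are added.

```python
import numpy as np

def fit_stump(x, r):
    """Least-squares regression stump (one threshold) for targets r on feature x."""
    best = None
    for t in np.unique(x)[:-1]:          # the largest value would leave the right side empty
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_val, right_val = best
    return lambda z: np.where(z <= t, left_val, right_val)

def gradient_boost(x, y, n_estimators=50, learning_rate=0.1):
    """Boosting for squared loss: each stump is fit to the current residuals."""
    base = y.mean()                      # initial constant prediction
    pred = np.full(len(y), base)
    stumps = []
    for _ in range(n_estimators):
        residuals = y - pred             # negative gradient of 0.5 * (y - pred) ** 2
        stump = fit_stump(x, residuals)
        pred = pred + learning_rate * stump(x)
        stumps.append(stump)
    return lambda z: base + learning_rate * sum(s(z) for s in stumps)

x = np.linspace(0, 6, 60)
y = np.sin(x)
baseline = float(np.mean((y - y.mean()) ** 2))
mse_10 = float(np.mean((y - gradient_boost(x, y, n_estimators=10)(x)) ** 2))
mse_100 = float(np.mean((y - gradient_boost(x, y, n_estimators=100)(x)) ** 2))
print("MSE of constant model:", round(baseline, 4))
print("MSE after 10 stumps:  ", round(mse_10, 4))
print("MSE after 100 stumps: ", round(mse_100, 4))
```

The learning rate shrinks each tree's contribution. Smaller values usually need more trees (`n_estimators`) but tend to generalise better; this is the same trade-off exposed by `learning_rate` and `n_estimators` in the examples below.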

- GBM

1. Python code

```python
# importing required libraries
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the GradientBoosting Classifier model.
# You can also add other parameters and test your code here,
# e.g. learning_rate, n_estimators.
# Documentation of sklearn GradientBoosting Classifier:
# https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingClassifier.html
model = GradientBoostingClassifier(n_estimators=100, max_depth=5)

# fit the model with the training data
model.fit(train_x, train_y)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('\nTarget on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('\naccuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('\nTarget on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('\naccuracy_score on test dataset : ', accuracy_test)
```

2. R code

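```r
library(caret)
x <- cbind(x_train, y_train)

# Fitting model with 4-fold cross-validation repeated 4 times
# (y_train should be a factor for classification)
fitControl <- trainControl(method = "repeatedcv", number = 4, repeats = 4)
fit <- train(y_train ~ ., data = x, method = "gbm", trControl = fitControl, verbose = FALSE)

# class probabilities; column 2 is the probability of the second class level
predicted <- predict(fit, x_test, type = "prob")[, 2]
```

- XGBoost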

1. Python code

```python
# importing required libraries
import pandas as pd
from xgboost import XGBClassifier
from sklearn.metrics import accuracy_score

# read the train and test datasets
# (assumes all feature columns are already numeric)
train_data = pd.read_csv('train-data.csv')
test_data = pd.read_csv('test-data.csv')

# shape of the dataset
print('Shape of training data :', train_data.shape)
print('Shape of testing data :', test_data.shape)

# Now, we need to predict the target variable for the test data
# target variable - Survived

# separate the independent and target variables on the training data
train_x = train_data.drop(columns=['Survived'])
train_y = train_data['Survived']

# separate the independent and target variables on the testing data
test_x = test_data.drop(columns=['Survived'])
test_y = test_data['Survived']

# Create the object of the XGBoost model.
# You can also add other parameters and test your code here,
# e.g. max_depth and n_estimators.
# Documentation of xgboost:
# https://xgboost.readthedocs.io/en/latest/
model = XGBClassifier()

# fit the model with the training data
model.fit(train_x, train_y)

# predict the target on the train dataset
predict_train = model.predict(train_x)
print('\nTarget on train data', predict_train)

# Accuracy score on train dataset
accuracy_train = accuracy_score(train_y, predict_train)
print('\naccuracy_score on train dataset : ', accuracy_train)

# predict the target on the test dataset
predict_test = model.predict(test_x)
print('\nTarget on test data', predict_test)

# Accuracy score on test dataset
accuracy_test = accuracy_score(test_y, predict_test)
print('\naccuracy_score on test dataset : ', accuracy_test)
```

2. R code

```r
require(caret)
x <- cbind(x_train, y_train)

# Fitting model with 10-fold cross-validation repeated 4 times
TrainControl <- trainControl(method = "repeatedcv", number = 10, repeats = 4)
model <- train(y_train ~ ., data = x, method = "xgbLinear", trControl = TrainControl, verbose = FALSE)

# OR, to boost trees instead of linear models:
# model <- train(y_train ~ ., data = x, method = "xgbTree", trControl = TrainControl, verbose = FALSE)

predicted <- predict(model, x_test)
```

- LightGBM

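1. Python code

```python
import numpy as np
import lightgbm as lgb

data = np.random.rand(500, 10)  # 500 entities, each contains 10 features
label = np.random.randint(2, size=500)  # binary target
train_data = lgb.Dataset(data, label=label)

# validation set built from random in-memory data;
# create_valid() also accepts a file path (e.g. a LibSVM file)
val_data = np.random.rand(100, 10)
val_label = np.random.randint(2, size=100)
test_data = train_data.create_valid(val_data, label=val_label)

param = {'num_leaves': 31, 'objective': 'binary'}
param['metric'] = 'auc'
num_round = 100  # number of boosting rounds (trees)
bst = lgb.train(param, train_data, num_round, valid_sets=[test_data])
bst.save_model('model.txt')

# 7 entities, each contains 10 features
data = np.random.rand(7, 10)
ypred = bst.predict(data)  # predicted probability of class 1
```

2. R code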

```r
# RLightGBM is a third-party wrapper; the official R package is `lightgbm`
library(RLightGBM)
data(example.binary)  # example data providing x, y, x.test and y.test used below

# Parameters
num_iterations <- 100
config <- list(objective = "binary", metric = "binary_logloss,auc", learning_rate = 0.1,
               num_leaves = 63, tree_learner = "serial", feature_fraction = 0.8,
               bagging_freq = 5, bagging_fraction = 0.8, min_data_in_leaf = 50,
               min_sum_hessian_in_leaf = 5.0)

# Create data handle and booster
handle.data <- lgbm.data.create(x)
lgbm.data.setField(handle.data, "label", y)
handle.booster <- lgbm.booster.create(handle.data, lapply(config, as.character))

# Train for num_iterations iterations and eval every 5 steps
lgbm.booster.train(handle.booster, num_iterations, 5)

# Predict
pred <- lgbm.booster.predict(handle.booster, x.test)

# Test accuracy
sum(y.test == (pred > 0.5)) / length(y.test)

# Save model (can be loaded again via lgbm.booster.load(filename))
lgbm.booster.save(handle.booster, filename = "/tmp/model.txt")

# Alternatively, train RLightGBM through caret
require(caret)
require(RLightGBM)
data(iris)
model <- caretModel.LGBM()
fit <- train(Species ~ ., data = iris, method = model, verbosity = 0)
print(fit)
y.pred <- predict(fit, iris[, 1:4])

# caret with a sparse feature matrix
library(Matrix)
model.sparse <- caretModel.LGBM.sparse()
# Generate a sparse matrix
mat <- Matrix(as.matrix(iris[, 1:4]), sparse = TRUE)
fit <- train(data.frame(idx = 1:nrow(iris)), iris$Species, method = model.sparse, matrix = mat, verbosity = 0)
print(fit)
```

- CatBoost

1. Python code

```python
import numpy as np
import pandas as pd
from catboost import CatBoostRegressor
from sklearn.model_selection import train_test_split

# Read training and testing files
train = pd.read_csv("train.csv")
test = pd.read_csv("test.csv")

# Imputing missing values for both train and test
train.fillna(-999, inplace=True)
test.fillna(-999, inplace=True)

# Creating a training set for modeling and a validation set to check model performance
X = train.drop(['Item_Outlet_Sales'], axis=1)
y = train.Item_Outlet_Sales
X_train, X_validation, y_train, y_validation = train_test_split(X, y, train_size=0.7, random_state=1234)

# treat every non-float column as categorical (np.float was removed in NumPy 1.24)
categorical_features_indices = np.where(X.dtypes != float)[0]

# building model
model = CatBoostRegressor(iterations=50, depth=3, learning_rate=0.1, loss_function='RMSE')
# plot=True draws the training curves inside a Jupyter notebook
model.fit(X_train, y_train, cat_features=categorical_features_indices,
          eval_set=(X_validation, y_validation), plot=True)

# test.csv is assumed to have the same feature columns as X (and no target column)
submission = pd.DataFrame()
submission['Item_Identifier'] = test['Item_Identifier']
submission['Outlet_Identifier'] = test['Outlet_Identifier']
submission['Item_Outlet_Sales'] = model.predict(test)
```

2. R code

```r
set.seed(1)
require(titanic)
require(caret)
require(catboost)

# keep only the complete rows of the Titanic training data
tt <- titanic::titanic_train[complete.cases(titanic::titanic_train), ]
data <- as.data.frame(as.matrix(tt), stringsAsFactors = TRUE)

# drop identifiers, free text and the target from the feature set
drop_columns <- c("PassengerId", "Survived", "Name", "Ticket", "Cabin")
x <- data[, !(names(data) %in% drop_columns)]
y <- data[, c("Survived")]

# 4-fold cross-validation with class probabilities
fit_control <- trainControl(method = "cv", number = 4, classProbs = TRUE)

# hyperparameter grid searched by caret
grid <- expand.grid(depth = c(4, 6, 8), learning_rate = 0.1, iterations = 100,
                    l2_leaf_reg = 1e-3, rsm = 0.95, border_count = 64)

# make.names() turns the 0/1 labels into valid R names, as classProbs = TRUE requires
report <- train(x, as.factor(make.names(y)), method = catboost.caret,
                verbose = TRUE, preProc = NULL, tuneGrid = grid, trControl = fit_control)
print(report)

importance <- varImp(report, scale = FALSE)
print(importance)
```