(9) Multiclass Classification with Logistic Regression

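Logistic regression is a classification algorithm. It is best suited to binary classification, but it can also handle multiclass problems. The two examples below both apply it to multiclass classification.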

I. The Iris Example

import numpy as np

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

iris = datasets.load_iris()

X = iris.data

y = iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

1.1 OvR: One vs Rest

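All classes are split into two groups: one particular class, and everything that is not that class (all the other classes). For a new sample, the probability of belonging to each of these two groups is computed.

For example, for the sample to be predicted, compute the probability that it is class A versus not class A; the probability that it is class B versus not class B; the probability that it is class C versus not class C; and so on.

The new sample is then assigned to the class with the highest score. In this way the idea of binary classification is used to implement multiclass classification.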
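The OvR procedure described above can be sketched by hand. This is a minimal illustration, not how scikit-learn implements it internally: it assumes the same iris split as the article, and the names `binary_models`, `scores`, and `ovr_pred` are introduced here only for the example. `max_iter=1000` is an assumed setting to help the default solver converge.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

classes = np.unique(y_train)

# One binary model per class: "this class" (1) versus "all other classes" (0).
binary_models = []
for c in classes:
    clf = LogisticRegression(max_iter=1000)
    clf.fit(X_train, (y_train == c).astype(int))
    binary_models.append(clf)

# Column k holds the probability of "is class k" from the k-th binary model.
scores = np.column_stack(
    [m.predict_proba(X_test)[:, 1] for m in binary_models]
)

# Assign each sample to the class whose binary model gives the highest score.
ovr_pred = classes[np.argmax(scores, axis=1)]
ovr_accuracy = np.mean(ovr_pred == y_test)
print(ovr_accuracy)
```

The per-class scores come from independent models, so each row of `scores` does not have to sum to 1; only the ranking within a row is used.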

"""

scikit-learn's OneVsRestClassifier wrapper can apply any binary classification algorithm to a multiclass problem.

For example:

from sklearn.multiclass import OneVsRestClassifier

lgr1 = LogisticRegression()

ovr = OneVsRestClassifier(lgr1)

ovr.fit(X_train, y_train)

"""

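# multi_class='ovr' fits one binary classifier per class (the multi_class parameter is deprecated as of scikit-learn 1.5)
lgr1 = LogisticRegression(multi_class='ovr', solver='liblinear')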

lgr1.fit(X_train, y_train)

lgr1.score(X_test, y_test)

"""

The trained model is:

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='ovr', n_jobs=None, penalty='l2',

random_state=None, solver='liblinear', tol=0.0001, verbose=0,

warm_start=False)

"""

# 0.9473684210526315

1.2 OvO: One vs One

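For the new sample, every pair of classes is compared, and the final class is decided by voting.

For example, for a given sample, compare class A against class B, class B against class C, and class A against class C, then assign the sample to the class that wins the most of these pairwise comparisons.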
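The pairwise voting described above can be sketched by hand as follows. This is an illustrative sketch under the same assumptions as the article's iris split; the names `pair_models`, `votes`, and `ovo_pred` are hypothetical, and ties are broken simply by taking the first class with the most votes.

```python
from itertools import combinations

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

classes = np.unique(y_train)

# One binary model per pair of classes, trained only on samples of that pair.
pair_models = {}
for a, b in combinations(classes, 2):
    mask = (y_train == a) | (y_train == b)
    clf = LogisticRegression(max_iter=1000)
    clf.fit(X_train[mask], y_train[mask])
    pair_models[(a, b)] = clf

# Each pairwise model casts one vote per test sample.
votes = np.zeros((len(X_test), len(classes)), dtype=int)
for clf in pair_models.values():
    winners = clf.predict(X_test)  # each entry is one of the pair's two labels
    for k, c in enumerate(classes):
        votes[:, k] += (winners == c)

# The class with the most votes wins (argmax picks the first class on a tie).
ovo_pred = classes[np.argmax(votes, axis=1)]
ovo_accuracy = np.mean(ovo_pred == y_test)
print(ovo_accuracy)
```

With three iris classes there are three pairs, so every test sample receives exactly three votes.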

"""

scikit-learn's OneVsOneClassifier wrapper can apply any binary classification algorithm to a multiclass problem.

For example:

from sklearn.multiclass import OneVsOneClassifier

lgr2 = LogisticRegression()

ovo = OneVsOneClassifier(lgr2)

ovo.fit(X_train, y_train)

"""

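# Note: multi_class='multinomial' fits a single softmax (multinomial) model, not pairwise OvO classifiers;
# for a strict OvO scheme, use the OneVsOneClassifier wrapper shown above.
lgr2 = LogisticRegression(multi_class='multinomial', solver='newton-cg')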

lgr2.fit(X_train, y_train)

lgr2.score(X_test, y_test)

"""

The trained model is:

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='multinomial', n_jobs=None, penalty='l2',

random_state=None, solver='newton-cg', tol=0.0001, verbose=0,

warm_start=False)

"""

# 1.0

Summary: in this test, the second model (labeled OvO here) achieved higher accuracy than OvR (1.0 versus about 0.947).
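To compare the strategies more directly, the two wrapper classes mentioned earlier can be scored side by side with a plain LogisticRegression. This is a rough sketch rather than a benchmark: `max_iter=1000` is an assumed setting, the dictionary names are made up for the example, and the third entry relies on the library default, which in recent scikit-learn versions is expected to fit a multinomial (softmax) model for three or more classes. Exact scores depend on the installed version and solver, so none are quoted here.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

models = {
    # Explicit one-vs-rest: one binary model per class.
    "OvR wrapper": OneVsRestClassifier(LogisticRegression(max_iter=1000)),
    # Explicit one-vs-one: one binary model per pair of classes.
    "OvO wrapper": OneVsOneClassifier(LogisticRegression(max_iter=1000)),
    # Library default for multiclass input (assumed to be multinomial).
    "single LogisticRegression": LogisticRegression(max_iter=1000),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)
    print(f"{name}: {scores[name]:.4f}")
```

A single train/test split on 150 samples is a small basis for ranking the strategies; cross-validation would give a steadier comparison.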

II. The MNIST Handwritten Digit Dataset

import numpy as np

from sklearn.datasets import fetch_openml

mnist = fetch_openml("mnist_784")

x = mnist['data']

y = mnist['target']

print(x.shape)

print(y.shape)

x_train = np.array(x[:60000], dtype=float)

y_train = np.array(y[:60000], dtype=float)

x_test = np.array(x[60000:], dtype=float)

y_test = np.array(y[60000:], dtype=float)

from sklearn.linear_model import LogisticRegression

2.1 OvR

%%time

lgr1 = LogisticRegression(multi_class='ovr', solver='liblinear')

lgr1.fit(x_train, y_train)

lgr1.score(x_test, y_test)

# 0.9176

2.2 OvO

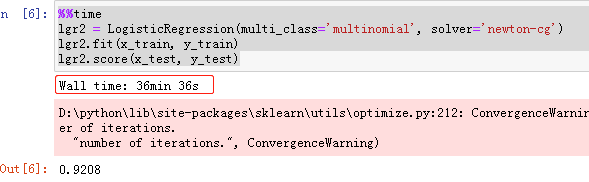

%%time

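# As in section 1.2, multi_class='multinomial' fits a single softmax model rather than pairwise classifiers.
lgr2 = LogisticRegression(multi_class='multinomial', solver='newton-cg')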

lgr2.fit(x_train, y_train)

lgr2.score(x_test, y_test)

# 0.9208
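Since both runs above are timed, it may help to see how the number of binary classifiers grows under each scheme: OvR needs one per class, while a strict OvO scheme needs one per pair of classes. The small sketch below just counts them; `classifier_counts` is a helper made up for this illustration.

```python
from itertools import combinations


def classifier_counts(n_classes):
    """Return (OvR count, OvO count) of binary classifiers for n_classes."""
    ovr = n_classes
    # One classifier per unordered pair of classes: n * (n - 1) / 2.
    ovo = len(list(combinations(range(n_classes), 2)))
    return ovr, ovo


for n in (3, 10):  # 3 iris species, 10 MNIST digits
    ovr, ovo = classifier_counts(n)
    print(f"{n} classes: OvR trains {ovr}, OvO trains {ovo}")
```

For the 10 digit classes of MNIST this gives 10 classifiers for OvR and 45 for OvO, although each OvO classifier sees only the samples of its two classes.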