【mmdetection系列】mmdetection之neck讲解

目录

1.configs

2.具体实现

3.如何调用的?

3.1 注册

3.2 调用

类似于backbone部分。

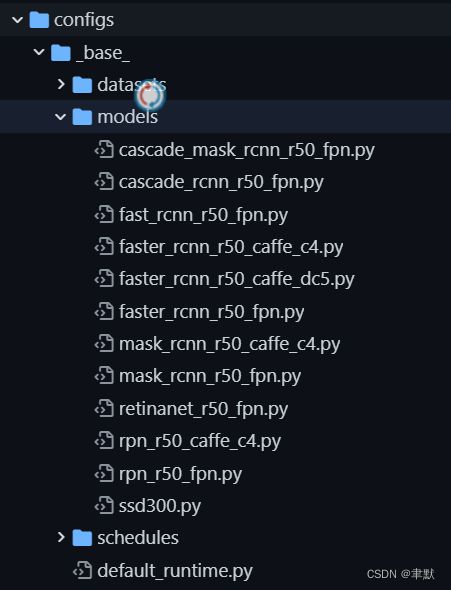

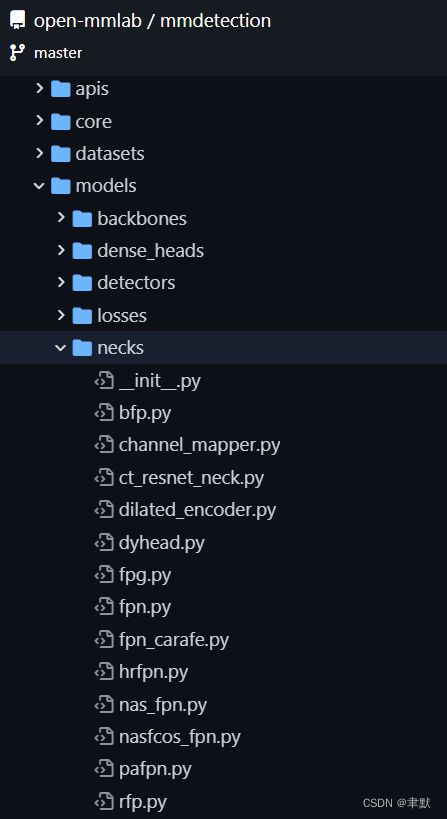

配置部分在configs/_base_/models目录下,具体实现在mmdet/models/necks目录下。

1.configs

我们看下yolox的neck吧。https://github.com/open-mmlab/mmdetection/blob/master/configs/yolox/yolox_s_8x8_300e_coco.py

neck=dict(

type='YOLOXPAFPN',

in_channels=[128, 256, 512],

out_channels=128,

num_csp_blocks=1)2.具体实现

neck作用:一般是收集到不同阶段的特征图的网络层,并将其传递到预测层。

我们可以找到YOLOXPAFPN类的具体实现:

https://github.com/open-mmlab/mmdetection/blob/master/mmdet/models/necks/yolox_pafpn.py#L14

@NECKS.register_module()

class YOLOXPAFPN(BaseModule):

"""Path Aggregation Network used in YOLOX.

Args:

in_channels (List[int]): Number of input channels per scale.

out_channels (int): Number of output channels (used at each scale)

num_csp_blocks (int): Number of bottlenecks in CSPLayer. Default: 3

use_depthwise (bool): Whether to depthwise separable convolution in

blocks. Default: False

upsample_cfg (dict): Config dict for interpolate layer.

Default: `dict(scale_factor=2, mode='nearest')`

conv_cfg (dict, optional): Config dict for convolution layer.

Default: None, which means using conv2d.

norm_cfg (dict): Config dict for normalization layer.

Default: dict(type='BN')

act_cfg (dict): Config dict for activation layer.

Default: dict(type='Swish')

init_cfg (dict or list[dict], optional): Initialization config dict.

Default: None.

"""

def __init__(self,

in_channels,

out_channels,

num_csp_blocks=3,

use_depthwise=False,

upsample_cfg=dict(scale_factor=2, mode='nearest'),

conv_cfg=None,

norm_cfg=dict(type='BN', momentum=0.03, eps=0.001),

act_cfg=dict(type='Swish'),

init_cfg=dict(

type='Kaiming',

layer='Conv2d',

a=math.sqrt(5),

distribution='uniform',

mode='fan_in',

nonlinearity='leaky_relu')):

super(YOLOXPAFPN, self).__init__(init_cfg)

self.in_channels = in_channels

self.out_channels = out_channels

conv = DepthwiseSeparableConvModule if use_depthwise else ConvModule

# build top-down blocks

self.upsample = nn.Upsample(**upsample_cfg)

self.reduce_layers = nn.ModuleList()

self.top_down_blocks = nn.ModuleList()

for idx in range(len(in_channels) - 1, 0, -1):

self.reduce_layers.append(

ConvModule(

in_channels[idx],

in_channels[idx - 1],

1,

conv_cfg=conv_cfg,

norm_cfg=norm_cfg,

act_cfg=act_cfg))

self.top_down_blocks.append(

CSPLayer(

in_channels[idx - 1] * 2,

in_channels[idx - 1],

num_blocks=num_csp_blocks,

add_identity=False,

use_depthwise=use_depthwise,

conv_cfg=conv_cfg,

norm_cfg=norm_cfg,

act_cfg=act_cfg))

# build bottom-up blocks

self.downsamples = nn.ModuleList()

self.bottom_up_blocks = nn.ModuleList()

for idx in range(len(in_channels) - 1):

self.downsamples.append(

conv(

in_channels[idx],

in_channels[idx],

3,

stride=2,

padding=1,

conv_cfg=conv_cfg,

norm_cfg=norm_cfg,

act_cfg=act_cfg))

self.bottom_up_blocks.append(

CSPLayer(

in_channels[idx] * 2,

in_channels[idx + 1],

num_blocks=num_csp_blocks,

add_identity=False,

use_depthwise=use_depthwise,

conv_cfg=conv_cfg,

norm_cfg=norm_cfg,

act_cfg=act_cfg))

self.out_convs = nn.ModuleList()

for i in range(len(in_channels)):

self.out_convs.append(

ConvModule(

in_channels[i],

out_channels,

1,

conv_cfg=conv_cfg,

norm_cfg=norm_cfg,

act_cfg=act_cfg))

def forward(self, inputs):

"""

Args:

inputs (tuple[Tensor]): input features.

Returns:

tuple[Tensor]: YOLOXPAFPN features.

"""

assert len(inputs) == len(self.in_channels)

# top-down path

inner_outs = [inputs[-1]]

for idx in range(len(self.in_channels) - 1, 0, -1):

feat_heigh = inner_outs[0]

feat_low = inputs[idx - 1]

feat_heigh = self.reduce_layers[len(self.in_channels) - 1 - idx](

feat_heigh)

inner_outs[0] = feat_heigh

upsample_feat = self.upsample(feat_heigh)

inner_out = self.top_down_blocks[len(self.in_channels) - 1 - idx](

torch.cat([upsample_feat, feat_low], 1))

inner_outs.insert(0, inner_out)

# bottom-up path

outs = [inner_outs[0]]

for idx in range(len(self.in_channels) - 1):

feat_low = outs[-1]

feat_height = inner_outs[idx + 1]

downsample_feat = self.downsamples[idx](feat_low)

out = self.bottom_up_blocks[idx](

torch.cat([downsample_feat, feat_height], 1))

outs.append(out)

# out convs

for idx, conv in enumerate(self.out_convs):

outs[idx] = conv(outs[idx])

return tuple(outs)3.如何调用的?

3.1 注册

注册创建类名字典。

@NECKS.register_module()

3.2 调用

需要从对应的YOLOX类:

mmdetection/yolox.py at master · open-mmlab/mmdetection · GitHub

然后找到实例化还是在其基类中:

mmdetection/single_stage.py at 31c84958f54287a8be2b99cbf87a6dcf12e57753 · open-mmlab/mmdetection · GitHub

self.neck = build_neck(neck)完整代码:

# Copyright (c) OpenMMLab. All rights reserved.

import warnings

import torch

from mmdet.core import bbox2result

from ..builder import DETECTORS, build_backbone, build_head, build_neck

from .base import BaseDetector

@DETECTORS.register_module()

class SingleStageDetector(BaseDetector):

"""Base class for single-stage detectors.

Single-stage detectors directly and densely predict bounding boxes on the

output features of the backbone+neck.

"""

def __init__(self,

backbone,

neck=None,

bbox_head=None,

train_cfg=None,

test_cfg=None,

pretrained=None,

init_cfg=None):

super(SingleStageDetector, self).__init__(init_cfg)

if pretrained:

warnings.warn('DeprecationWarning: pretrained is deprecated, '

'please use "init_cfg" instead')

backbone.pretrained = pretrained

self.backbone = build_backbone(backbone)

if neck is not None:

self.neck = build_neck(neck)

bbox_head.update(train_cfg=train_cfg)

bbox_head.update(test_cfg=test_cfg)

self.bbox_head = build_head(bbox_head)

self.train_cfg = train_cfg

self.test_cfg = test_cfg

def extract_feat(self, img):

"""Directly extract features from the backbone+neck."""

x = self.backbone(img)

if self.with_neck:

x = self.neck(x)

return x

def forward_dummy(self, img):

"""Used for computing network flops.

See `mmdetection/tools/analysis_tools/get_flops.py`

"""

x = self.extract_feat(img)

outs = self.bbox_head(x)

return outs

def forward_train(self,

img,

img_metas,

gt_bboxes,

gt_labels,

gt_bboxes_ignore=None):

"""

Args:

img (Tensor): Input images of shape (N, C, H, W).

Typically these should be mean centered and std scaled.

img_metas (list[dict]): A List of image info dict where each dict

has: 'img_shape', 'scale_factor', 'flip', and may also contain

'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.

For details on the values of these keys see

:class:`mmdet.datasets.pipelines.Collect`.

gt_bboxes (list[Tensor]): Each item are the truth boxes for each

image in [tl_x, tl_y, br_x, br_y] format.

gt_labels (list[Tensor]): Class indices corresponding to each box

gt_bboxes_ignore (None | list[Tensor]): Specify which bounding

boxes can be ignored when computing the loss.

Returns:

dict[str, Tensor]: A dictionary of loss components.

"""

super(SingleStageDetector, self).forward_train(img, img_metas)

x = self.extract_feat(img)

losses = self.bbox_head.forward_train(x, img_metas, gt_bboxes,

gt_labels, gt_bboxes_ignore)

return losses

def simple_test(self, img, img_metas, rescale=False):

"""Test function without test-time augmentation.

Args:

img (torch.Tensor): Images with shape (N, C, H, W).

img_metas (list[dict]): List of image information.

rescale (bool, optional): Whether to rescale the results.

Defaults to False.

Returns:

list[list[np.ndarray]]: BBox results of each image and classes.

The outer list corresponds to each image. The inner list

corresponds to each class.

"""

feat = self.extract_feat(img)

results_list = self.bbox_head.simple_test(

feat, img_metas, rescale=rescale)

bbox_results = [

bbox2result(det_bboxes, det_labels, self.bbox_head.num_classes)

for det_bboxes, det_labels in results_list

]

return bbox_results

def aug_test(self, imgs, img_metas, rescale=False):

"""Test function with test time augmentation.

Args:

imgs (list[Tensor]): the outer list indicates test-time

augmentations and inner Tensor should have a shape NxCxHxW,

which contains all images in the batch.

img_metas (list[list[dict]]): the outer list indicates test-time

augs (multiscale, flip, etc.) and the inner list indicates

images in a batch. each dict has image information.

rescale (bool, optional): Whether to rescale the results.

Defaults to False.

Returns:

list[list[np.ndarray]]: BBox results of each image and classes.

The outer list corresponds to each image. The inner list

corresponds to each class.

"""

assert hasattr(self.bbox_head, 'aug_test'), \

f'{self.bbox_head.__class__.__name__}' \

' does not support test-time augmentation'

feats = self.extract_feats(imgs)

results_list = self.bbox_head.aug_test(

feats, img_metas, rescale=rescale)

bbox_results = [

bbox2result(det_bboxes, det_labels, self.bbox_head.num_classes)

for det_bboxes, det_labels in results_list

]

return bbox_results

def onnx_export(self, img, img_metas, with_nms=True):

"""Test function without test time augmentation.

Args:

img (torch.Tensor): input images.

img_metas (list[dict]): List of image information.

Returns:

tuple[Tensor, Tensor]: dets of shape [N, num_det, 5]

and class labels of shape [N, num_det].

"""

x = self.extract_feat(img)

outs = self.bbox_head(x)

# get origin input shape to support onnx dynamic shape

# get shape as tensor

img_shape = torch._shape_as_tensor(img)[2:]

img_metas[0]['img_shape_for_onnx'] = img_shape

# get pad input shape to support onnx dynamic shape for exporting

# `CornerNet` and `CentripetalNet`, which 'pad_shape' is used

# for inference

img_metas[0]['pad_shape_for_onnx'] = img_shape

if len(outs) == 2:

# add dummy score_factor

outs = (*outs, None)

# TODO Can we change to `get_bboxes` when `onnx_export` fail

det_bboxes, det_labels = self.bbox_head.onnx_export(

*outs, img_metas, with_nms=with_nms)

return det_bboxes, det_labels