Monitoring nginx logs with Grafana

First, here is the finished Grafana dashboard as a full-screen view.

It looks pretty impressive. So how do you build a dashboard like this? Let's break it down step by step.

Components used: nginx, Filebeat, Logstash, Elasticsearch, Grafana

The data flow is: nginx access logs → Filebeat → Logstash → Elasticsearch → Grafana.

1. Configure the nginx proxy log format

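```nginx
# Custom log format named elklog; the grok pattern in the Logstash filter must match it field for field
log_format elklog '$remote_addr - $remote_user [$time_local] "$host" $server_port "$request" '
                  '$status $body_bytes_sent $bytes_sent "$http_referer" '
                  '"$http_user_agent" "$http_x_forwarded_for" $request_time '
                  '"$upstream_response_time" "$upstream_addr" "$upstream_status" ';
# Reference the format from an access_log directive, e.g.: access_log <path> elklog;
```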
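To see how one line in the elklog format breaks into named fields (the same names the grok pattern in step 3 extracts), here is a rough Python sketch. The regex is a hand-written approximation of that grok pattern, not grok itself, and the sample line (IP, host, paths) is made up for illustration.

```python
import re
from typing import Dict, Optional

# Hand-written approximation of the grok pattern in the Logstash filter (step 3).
# grok's DATA is roughly a lazy ".*?"; IP/NUMBER are simplified to \S+ / \d+ here.
ELKLOG_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<host>.*?)" (?P<server_port>\d+) '
    r'"(?P<request_method>\w+) (?P<request>.*?) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<status>\d+) (?P<body_bytes_sent>\d+) (?P<bytes_sent>\d+) '
    r'"(?P<http_referer>.*?)" "(?P<http_user_agent>.*?)" "(?P<http_x_forwarded_for>.*?)" '
    r'(?P<request_time>\S+) "(?P<upstream_response_time>.*?)" '
    r'"(?P<upstream_addr>.*?)" "(?P<upstream_status>.*?)"'
)


def parse_elklog(line: str) -> Optional[Dict[str, str]]:
    """Return the named fields of one elklog line, or None if it does not match."""
    m = ELKLOG_RE.match(line)
    return m.groupdict() if m else None


# Made-up sample line in the elklog format (note the trailing space the format emits).
sample = (
    '203.0.113.7 - - [01/May/2023:20:00:00 +0800] "www.example.com" 443 '
    '"GET /api/items?page=2&size=10 HTTP/1.1" 200 512 745 "-" '
    '"Mozilla/5.0" "-" 0.012 "0.010" "10.0.0.5:8080" "200" '
)

fields = parse_elklog(sample)
print(fields["status"], fields["request"], fields["upstream_addr"])
```

One thing worth checking against your own traffic: for requests that never reach an upstream (static files, for example), nginx writes `-` for `$upstream_status`, which `%{NUMBER:upstream_status}` in the grok pattern will not match. As far as I can tell, such lines end up tagged `_grokparsefailure` and are dropped by the second filter block.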

2. Filebeat ships the nginx logs to Logstash. Edit filebeat.yml:

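```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /opt/servers/nginx/logs/access.portal.*.log
      - /opt/servers/nginx/logs/access.*.log
    fields:
      filetype: nginx   # both the key and the value on this line can be anything you choose
    # Keep custom fields grouped under "fields" so Logstash can test them as [fields][filetype];
    # with fields_under_root: true the field would sit at the top level as [filetype] instead
    fields_under_root: false

output.logstash:
  hosts: ["logstash001:5044"]
```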
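A file that matches none of the globs is simply never collected, so it can help to check candidate file names against the patterns. Below is a small Python sketch using `fnmatchcase` as a stand-in; Filebeat itself uses Go glob matching, which differs in edge cases (for instance, fnmatch's `*` also matches `/`). The file names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import List

# The two glob patterns from the paths section of filebeat.yml.
PATTERNS = [
    "/opt/servers/nginx/logs/access.portal.*.log",
    "/opt/servers/nginx/logs/access.*.log",
]


def matching_patterns(path: str) -> List[str]:
    """Return every pattern that matches path (fnmatch stands in for Filebeat's Go globbing)."""
    return [p for p in PATTERNS if fnmatchcase(path, p)]


# Hypothetical file names, for illustration only.
for name in ["access.log", "access.portal.2023-05-01.log", "access.api.log", "error.log"]:
    path = "/opt/servers/nginx/logs/" + name
    print(name, "->", matching_patterns(path) or "not collected")
```

Two things this shows: a plain `access.log` matches neither pattern, because `access.*.log` needs something between the two dots; and every file the first pattern matches is also matched by the second one.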

3. Logstash parses the nginx logs and writes them to Elasticsearch

```conf
input {
  beats {
    port => 5044   # dedicated port for receiving logs from Filebeat
  }
}

filter {
  # nginx logs
  if ([fields][filetype] == "nginx") or ([fields][filetype] == "nginx-records") {
    grok {
      match => {
        "message" => "%{IP:remote_addr} - (?:%{DATA:remote_user}|-) \[%{HTTPDATE:timestamp}\] \"%{DATA:host}\" %{NUMBER:server_port} \"%{WORD:request_method} %{DATA:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:status} %{NUMBER:body_bytes_sent} %{NUMBER:bytes_sent} \"(?:%{DATA:http_referer}|-)\" \"%{DATA:http_user_agent}\" \"(?:%{DATA:http_x_forwarded_for}|-)\" (?:%{DATA:request_time}|-) \"%{DATA:upstream_response_time}\" \"%{DATA:upstream_addr}\" \"%{NUMBER:upstream_status}\""
      }
    }

    urldecode {
      field => "request"
    }

    # Parse the request: first copy its value into a temporary field
    mutate {
      add_field => { "http_request" => "%{request}" }
    }

    # Split http_request at "?"
    mutate {
      split => [ "http_request", "?" ]
      add_field => [ "url", "%{[http_request][0]}" ]   # first array element (the path) becomes the new field url
    }

    # If [http_request][1] (the query string) exists, parse its parameters
    if [http_request][1] {
      mutate {
        add_field => [ "args", "%{[http_request][1]}" ]   # second array element becomes the new field args
      }

      kv {
        source => "args"
        field_split => "&"
        target => "query"
      }

      # Remove the args field
      mutate {
        remove_field => [ "args" ]
      }
    }

    # Remove the temporary http_request field
    mutate {
      remove_field => [ "http_request" ]
    }

    geoip {
      #multiLang => "zh-CN"
      target => "geoip"
      source => "remote_addr"
      database => "/home/hadoop/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-filter-geoip-6.0.5-java/vendor/GeoLite2-City.mmdb"   # location of the GeoIP database
      add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
      add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
      # Drop the geoip fields we do not need
      remove_field => ["[geoip][latitude]", "[geoip][longitude]", "[geoip][country_code]", "[geoip][country_code2]", "[geoip][country_code3]", "[geoip][timezone]", "[geoip][continent_code]", "[geoip][region_code]"]
    }

    mutate {
      # Rename fields
      rename => { "remote_addr" => "client_ip" }
      rename => { "http_x_forwarded_for" => "xff" }
      # rename => { "host" => "domain" }
      rename => { "http_referer" => "referer" }
      rename => { "request_time" => "responsetime" }
      rename => { "upstream_response_time" => "upstreamtime" }
      rename => { "body_bytes_sent" => "size" }
      rename => { "upstream_addr" => "upstreamhost" }
      # Copy fields
      copy => { "host" => "domain" }   # copy host into a new field domain
      copy => { "client_ip" => "server_ip" }
    }

    mutate {
      convert => [ "size", "integer" ]
      convert => [ "status", "integer" ]
      convert => [ "responsetime", "float" ]
      convert => [ "upstreamtime", "float" ]
      convert => [ "[geoip][coordinates]", "float" ]
      # Drop unneeded Filebeat fields. Choose carefully: a field removed here never reaches
      # Elasticsearch and can no longer be used in conditionals
      remove_field => [ "ecs", "agent", "cloud", "@version", "input", "logs_type", "message" ]
    }

    # Derive the client OS, browser and version from http_user_agent
    useragent {
      source => "http_user_agent"
      target => "ua"
      # Drop the useragent fields we do not need
      remove_field => [ "[ua][minor]","[ua][major]","[ua][build]","[ua][patch]","[ua][os_minor]","[ua][os_major]" ]
    }

    # Build the date string for the index name, in the server's local time
    # (change the 0 in "+ 0*60*60" to shift it by that many hours)
    ruby {
      code => "event.set('index_day', (event.get('@timestamp').time.localtime + 0*60*60).strftime('%Y.%m.%d'))"
    }
  }
}
```
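The request handling in the filter above (urldecode, split at `?`, then kv on `&`) can be approximated in plain Python to see what ends up in `url` and `query`. This is a sketch of the same steps, not the Logstash plugins themselves; in particular, duplicate keys are handled differently (Logstash's kv can collect them into an array, a Python dict keeps the last one). The request strings are made up.

```python
from typing import Dict, Optional, Tuple
from urllib.parse import unquote


def parse_request(request: str) -> Tuple[str, Optional[Dict[str, str]]]:
    """Approximate urldecode -> split("?") -> kv(field_split "&", value_split "=")."""
    decoded = unquote(request)           # urldecode { field => "request" }
    parts = decoded.split("?")           # mutate { split => [ "http_request", "?" ] }
    url = parts[0]                       # [http_request][0] -> url
    if len(parts) < 2 or not parts[1]:   # if [http_request][1] (Ruby's split drops a trailing empty piece)
        return url, None
    query: Dict[str, str] = {}
    for pair in parts[1].split("&"):     # kv { field_split => "&" }
        key, sep, value = pair.partition("=")
        if key and sep:                  # pieces without "=" are skipped in this sketch
            query[key] = value
    return url, query


print(parse_request("/api/items?page=2&size=10"))
print(parse_request("/search?q=a%26b&page=2"))
```

The second call illustrates a side effect of decoding before splitting: the encoded `%26` turns into a literal `&`, so the value `a&b` is cut in two and, in this sketch, the `b` part is lost. Running kv first and decoding the values afterwards would avoid that, at the cost of a slightly longer pipeline.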

```conf
filter {
  # Drop events the grok pattern could not parse
  if "_grokparsefailure" in [tags] {
    drop {}
  }
}

output {
  if [fields][filetype] == "nginx" {
    elasticsearch {
      hosts => ["elasticsearch001:9200"]
      index => "logstash-nginx-log-%{index_day}"
    }

    # file {
    #   path => "/home/hadoop/logstash-nginx-log-%{+YYYY.MM.dd}"
    #   path => "/home/hadoop/logstash-nginx-log-%{index_day}"
    # }
  }
}
```
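Why compute `index_day` in a ruby filter instead of using `%{+YYYY.MM.dd}` directly? `@timestamp` is stored in UTC, and as far as I know the `%{+...}` form formats it in UTC, so daily indices would roll over at UTC midnight. The ruby code converts to the server's local time first. A Python sketch of the same calculation, assuming (hypothetically) a server in UTC+8:

```python
from datetime import datetime, timedelta, timezone

# Assumption: the Logstash host runs in UTC+8; replace with your server's offset.
SERVER_TZ = timezone(timedelta(hours=8))


def index_day(ts_utc: datetime, extra_hours: int = 0) -> str:
    """Mirror (@timestamp.time.localtime + extra_hours*60*60).strftime('%Y.%m.%d')."""
    local = ts_utc.astimezone(SERVER_TZ) + timedelta(hours=extra_hours)
    return local.strftime("%Y.%m.%d")


def index_name(ts_utc: datetime) -> str:
    """Build the index name used by the elasticsearch output."""
    return "logstash-nginx-log-" + index_day(ts_utc)


ts = datetime(2023, 5, 1, 20, 0, tzinfo=timezone.utc)
print(index_name(ts))              # local date, already the next day in UTC+8
print(ts.strftime("%Y.%m.%d"))     # the plain UTC date, for comparison
```

Note that no `date` filter maps the grok `timestamp` field onto `@timestamp` in this pipeline, so `@timestamp` (and therefore the index date) is the time Filebeat read the line rather than the time nginx logged the request; the two are usually close, but not identical.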

4. View the Elasticsearch data in Kibana

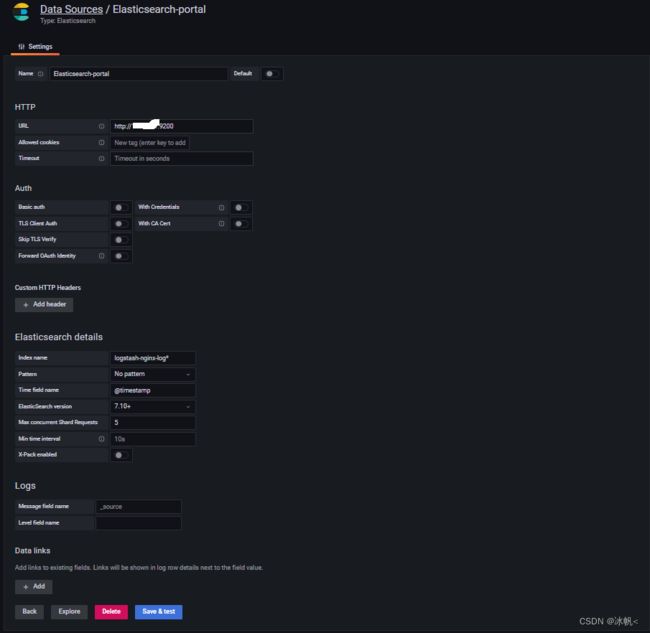

5. Configure Elasticsearch as a data source in Grafana

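Note that the latest version of the dashboard requires selecting Elasticsearch version 7.10+ in the data source settings. Then save the data source.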


Look up the dashboard ID on the Grafana Labs page "AKA ES Nginx Logs": 11190.

Import the dashboard into Grafana using this ID.

Done.

I enjoy exploring new technologies and have extensive big data experience; I'd be glad to discuss technical topics with everyone.