YOLOv5 semi-automatic annotation (tested, works well)

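The result: detection runs over every image in a folder and writes one txt label file per image in batch. The txt files are then converted to xml, which can be opened directly in labelImg for visual fine-tuning. This gives you batch annotation.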
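The core of the txt→xml step is arithmetic. A YOLO label line stores `class x_center y_center width height`, normalized to 0-1. A VOC xml box stores pixel corners. Below is a minimal sketch of that conversion, assuming one label line and a known image size. `yolo_to_voc` is a hypothetical helper, not part of YOLOv5. It omits the +1 pixel offset that the conversion script later in this post adds.

```python
def yolo_to_voc(line, img_w, img_h):
    """Convert one YOLO label line 'cls xc yc w h' (normalized 0-1)
    into (cls, xmin, ymin, xmax, ymax) in pixels."""
    cls, xc, yc, w, h = line.split()[:5]  # ignore an optional 6th confidence column
    xc, yc = float(xc) * img_w, float(yc) * img_h  # box center in pixels
    w, h = float(w) * img_w, float(h) * img_h      # box size in pixels
    return (cls,
            int(round(xc - w / 2)), int(round(yc - h / 2)),
            int(round(xc + w / 2)), int(round(yc + h / 2)))


print(yolo_to_voc("0 0.5 0.5 0.2 0.4", 640, 480))  # → ('0', 256, 144, 384, 336)
```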

Download the official YOLOv5 code, then modify detect.py as follows.

```python
import argparse
import os
import sys
from pathlib import Path

from dataread import MyData  # helper class defined in dataread.py (see below)

import cv2
import numpy as np
import torch
import torch.backends.cudnn as cudnn
import threading

FILE = Path(__file__).resolve()
ROOT = FILE.parents[0]  # YOLOv5 root directory
if str(ROOT) not in sys.path:
    sys.path.append(str(ROOT))  # add ROOT to PATH
ROOT = Path(os.path.relpath(ROOT, Path.cwd()))  # relative

from models.common import DetectMultiBackend
from utils.datasets import IMG_FORMATS, VID_FORMATS, LoadImages, LoadStreams
from utils.general import (LOGGER, check_file, check_img_size, check_imshow, check_requirements, colorstr,
                           increment_path, non_max_suppression, print_args, scale_coords, strip_optimizer, xyxy2xywh)
from utils.plots import Annotator, colors, save_one_box
from utils.torch_utils import select_device, time_sync

# Per-image counters for each class
mask_num = nomask_num = person = 0


@torch.no_grad()
def run(weights=ROOT / 'yolov5s.pt',  # model.pt path(s)
        source=ROOT / 'data/images',  # file/dir/URL/glob, 0 for webcam
        imgsz=640,  # inference size (pixels)
        conf_thres=0.25,  # confidence threshold
        iou_thres=0.45,  # NMS IOU threshold
        max_det=1000,  # maximum detections per image
        device='0',  # cuda device, i.e. 0 or 0,1,2,3 or cpu
        view_img=True,  # show results
        save_txt=False,  # save results to *.txt (enable with --save-txt)
        save_conf=False,  # save confidences in --save-txt labels
        save_crop=False,  # save cropped prediction boxes
        nosave=False,  # do not save images/videos
        classes=None,  # filter by class: --class 0, or --class 0 2 3
        agnostic_nms=False,  # class-agnostic NMS
        augment=False,  # augmented inference
        visualize=False,  # visualize features
        update=False,  # update all models
        project=ROOT / 'runs/detect',  # save results to project/name
        name='exp',  # save results to project/name
        exist_ok=False,  # existing project/name ok, do not increment
        line_thickness=3,  # bounding box thickness (pixels)
        hide_labels=False,  # hide labels
        hide_conf=False,  # hide confidences
        half=False,  # use FP16 half-precision inference
        dnn=False,  # use OpenCV DNN for ONNX inference
        ):
    global mask_num, nomask_num, person  # the counters are updated and reset inside this function
    source = str(source)  # force the source to a string
    save_img = not nosave and not source.endswith('.txt')  # save annotated images unless disabled
    is_file = Path(source).suffix[1:] in (IMG_FORMATS + VID_FORMATS)  # True if the suffix (e.g. jpg) is a known image/video format
    is_url = source.lower().startswith(('rtsp://', 'rtmp://', 'http://', 'https://'))  # lowercase, then check for a network address
    webcam = source.isnumeric() or source.endswith('.txt') or (is_url and not is_file)  # a numeric source such as 0 means a webcam
    if is_url and is_file:
        source = check_file(source)  # remote file: download it

    # Directories
    # Changed: exist_ok=True, so results always go to runs/detect/exp instead of exp2, exp3, ...
    save_dir = increment_path(Path(project) / name, exist_ok=True)
    (save_dir / 'labels' if save_txt else save_dir).mkdir(parents=True, exist_ok=True)  # make dir if missing

    # Load model
    device = select_device(device)  # select device
    model = DetectMultiBackend(weights, device=device, dnn=dnn)  # load model
    stride, names, pt, jit, onnx = model.stride, model.names, model.pt, model.jit, model.onnx  # read model attributes
    imgsz = check_img_size(imgsz, s=stride)  # check the image size and round it to a multiple of the stride

    # Half
    half &= pt and device.type != 'cpu'  # half precision only supported by PyTorch on CUDA
    if pt:
        model.model.half() if half else model.model.float()

    # Dataloader
    if webcam:
        view_img = check_imshow()
        cudnn.benchmark = True  # set True to speed up constant image size inference
        dataset = LoadStreams(source, img_size=imgsz, stride=stride, auto=pt and not jit)
        bs = len(dataset)  # batch_size
    else:
        # print('Enter X1, Y1')
        # x1 = input()
        # x1 = int(x1)
        # y1 = input()
        # y1 = int(y1)
        # print('Enter X2, Y2')
        # x2 = input()
        # x2 = int(x2)
        # y2 = input()
        # y2 = int(y2)
        dataset = LoadImages(source, img_size=imgsz, stride=stride, auto=pt and not jit)  # input images
        bs = 1  # batch_size
    vid_path, vid_writer = [None] * bs, [None] * bs

    # Run inference
    if pt and device.type != 'cpu':
        model(torch.zeros(1, 3, *imgsz).to(device).type_as(next(model.model.parameters())))  # warmup
    dt, seen = [0.0, 0.0, 0.0], 0
    for path, im, im0s, vid_cap, s in dataset:
        # mask for certain region
        # Points 1, 2, 3, 4 are the top-left, top-right, bottom-right and bottom-left corners of the region.
        # The values below cover the whole image, i.e. nothing is masked.
        hl1 = 0 / 10  # distance of the corner from the image top, as a fraction of the height
        wl1 = 0 / 10  # distance of the corner from the image left, as a fraction of the width
        hl2 = 0 / 10  # fraction of the height
        wl2 = 10 / 10  # fraction of the width
        hl3 = 10 / 10  # fraction of the height
        wl3 = 10 / 10  # fraction of the width
        hl4 = 10 / 10  # fraction of the height
        wl4 = 0 / 10  # fraction of the width
        if webcam:
            pass
            # for b in range(0, im.shape[0]):
            #     mask = np.zeros([im[b].shape[1], im[b].shape[2]], dtype=np.uint8)
            #     # mask[round(img[b].shape[1] * hl1):img[b].shape[1], round(img[b].shape[2] * wl1):img[b].shape[2]] = 255
            #     pts = np.array([[int(im[b].shape[2] * wl1), int(im[b].shape[1] * hl1)],  # pts1
            #                     [int(im[b].shape[2] * wl2), int(im[b].shape[1] * hl2)],  # pts2
            #                     [int(im[b].shape[2] * wl3), int(im[b].shape[1] * hl3)],  # pts3
            #                     [int(im[b].shape[2] * wl4), int(im[b].shape[1] * hl4)]], np.int32)
            #     mask = cv2.fillPoly(mask, [pts], (255, 255, 255))
            #     imgc = im[b].transpose((1, 2, 0))
            #     imgc = cv2.add(imgc, np.zeros(np.shape(imgc), dtype=np.uint8), mask=mask)
            #     # cv2.imshow('1', imgc)
            #     im[b] = imgc.transpose((2, 0, 1))
        else:
            # im is CHW: build a single-channel polygon mask and zero every pixel outside it
            mask = np.zeros([im.shape[1], im.shape[2]], dtype=np.uint8)
            # mask[round(img.shape[1] * hl1):img.shape[1], round(img.shape[2] * wl1):img.shape[2]] = 255
            pts = np.array([[int(im.shape[2] * wl1), int(im.shape[1] * hl1)],  # pts1
                            [int(im.shape[2] * wl2), int(im.shape[1] * hl2)],  # pts2
                            [int(im.shape[2] * wl3), int(im.shape[1] * hl3)],  # pts3
                            [int(im.shape[2] * wl4), int(im.shape[1] * hl4)]], np.int32)
            mask = cv2.fillPoly(mask, [pts], (255, 255, 255))
            im = np.ascontiguousarray(im.transpose((1, 2, 0)))  # CHW -> HWC for OpenCV
            im = cv2.add(im, np.zeros(np.shape(im), dtype=np.uint8), mask=mask)  # keep only pixels inside the mask
            im = im.transpose((2, 0, 1))  # HWC -> CHW
        t1 = time_sync()
        im = torch.from_numpy(im).to(device)
        im = im.half() if half else im.float()  # uint8 to fp16/32
        im /= 255  # 0 - 255 to 0.0 - 1.0
        if len(im.shape) == 3:
            im = im[None]  # expand for batch dim
        t2 = time_sync()
        dt[0] += t2 - t1

        # Inference
        visualize = increment_path(save_dir / Path(path).stem, mkdir=True) if visualize else False
        pred = model(im, augment=augment, visualize=visualize)
        t3 = time_sync()
        dt[1] += t3 - t2

        # NMS
        pred = non_max_suppression(pred, conf_thres, iou_thres, classes, agnostic_nms, max_det=max_det)
        dt[2] += time_sync() - t3

        # Second-stage classifier (optional)
        # pred = utils.general.apply_classifier(pred, classifier_model, im, im0s)

        # Process predictions
        for i, det in enumerate(pred):  # per image
            seen += 1
            if webcam:  # batch_size >= 1 (webcam mode is disabled in this version)
                pass
                # p, s, im0, frame = path[i], f'{i}: ', im0s[i].copy(), dataset.count
                # cv2.putText(im0, "Detection_Region", (int(im0.shape[1] * wl1 - 5), int(im0.shape[0] * hl1 - 5)),
                #             cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 255, 0), 2, cv2.LINE_AA)
                #
                # pts = np.array([[int(im0.shape[1] * wl1), int(im0.shape[0] * hl1)],  # pts1
                #                 [int(im0.shape[1] * wl2), int(im0.shape[0] * hl2)],  # pts2
                #                 [int(im0.shape[1] * wl3), int(im0.shape[0] * hl3)],  # pts3
                #                 [int(im0.shape[1] * wl4), int(im0.shape[0] * hl4)]], np.int32)  # pts4
                # # pts = pts.reshape((-1, 1, 2))
                # zeros = np.zeros((im0.shape), dtype=np.uint8)
                # mask = cv2.fillPoly(zeros, [pts], color=(0, 165, 255))
                # im0 = cv2.addWeighted(im0, 1, mask, 0.2, 0)
                # cv2.polylines(im0, [pts], True, (255, 255, 0), 3)
                # # plot_one_box(dr, im0, label='Detection_Region', color=(0, 255, 0), line_thickness=2)
            else:
                p, s, im0, frame = path, '', im0s.copy(), getattr(dataset, 'frame', 0)
                # Draw the detection region on the output image
                cv2.putText(im0, "Detection_Region", (int(im0.shape[1] * wl1 - 5), int(im0.shape[0] * hl1 - 5)),
                            cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 255, 0), 2, cv2.LINE_AA)
                pts = np.array([[int(im0.shape[1] * wl1), int(im0.shape[0] * hl1)],  # pts1
                                [int(im0.shape[1] * wl2), int(im0.shape[0] * hl2)],  # pts2
                                [int(im0.shape[1] * wl3), int(im0.shape[0] * hl3)],  # pts3
                                [int(im0.shape[1] * wl4), int(im0.shape[0] * hl4)]], np.int32)  # pts4
                # pts = pts.reshape((-1, 1, 2))
                zeros = np.zeros((im0.shape), dtype=np.uint8)
                mask = cv2.fillPoly(zeros, [pts], color=(0, 165, 255))
                im0 = cv2.addWeighted(im0, 1, mask, 0.2, 0)  # translucent overlay of the region
                cv2.polylines(im0, [pts], True, (255, 255, 0), 3)

            p = Path(p)  # to Path
            save_path = str(save_dir / p.name)  # im.jpg
            txt_path = str(save_dir / 'labels' / p.stem) + ('' if dataset.mode == 'image' else f'_{frame}')  # im.txt
            s += '%gx%g ' % im.shape[2:]  # print string
            gn = torch.tensor(im0.shape)[[1, 0, 1, 0]]  # normalization gain whwh
            imc = im0.copy() if save_crop else im0  # for save_crop
            annotator = Annotator(im0, line_width=line_thickness, example=str(names))
            if len(det):
                # Rescale boxes from img_size to im0 size
                det[:, :4] = scale_coords(im.shape[2:], det[:, :4], im0.shape).round()

                # Print results
                for c in det[:, -1].unique():
                    n = (det[:, -1] == c).sum()  # detections per class
                    s += f"{n} {names[int(c)]}{'s' * (n > 1)}, "  # add to string

                # Write results
                for *xyxy, conf, cls in reversed(det):
                    if save_txt:  # Write to file
                        xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist()  # normalized xywh
                        line = (cls, *xywh, conf) if save_conf else (cls, *xywh)  # label format
                        # Append mode: delete old label files before re-running, or boxes are written twice
                        with open(txt_path + '.txt', 'a') as f:
                            f.write(('%g ' * len(line)).rstrip() % line + '\n')

                    if save_img or save_crop or view_img:  # Add bbox to image
                        c = int(cls)  # integer class
                        label = None if hide_labels else (names[c] if hide_conf else f'{names[c]} {conf:.2f}')
                        annotator.box_label(xyxy, label, color=colors(c, True))
                        # Count each class and print its box corners in original-image pixels
                        p1, p2 = (int(xyxy[0]), int(xyxy[1])), (int(xyxy[2]), int(xyxy[3]))
                        if names[c] == "with_mask":
                            mask_num += 1
                            print(f'Coordinates of masked person #{mask_num}')
                            print(f"Top-left corner: ({p1[0]},{p1[1]}), bottom-right corner: ({p2[0]},{p2[1]})")
                        if names[c] == "without_mask":
                            nomask_num += 1
                            print(f'Coordinates of unmasked person #{nomask_num}')
                            print(f"Top-left corner: ({p1[0]},{p1[1]}), bottom-right corner: ({p2[0]},{p2[1]})")
                        if names[c] == "person":
                            person += 1
                            print(f'Person #{person}')
                            print(f"Top-left corner: ({p1[0]},{p1[1]}), bottom-right corner: ({p2[0]},{p2[1]})")
                        if save_crop:
                            save_one_box(xyxy, imc, file=save_dir / 'crops' / names[c] / f'{p.stem}.jpg', BGR=True)

            # Print time (inference-only)
            LOGGER.info(f'{s}Done. ({t3 - t2:.3f}s)')

            # Stream results
            # im0 = annotator.result()
            # if view_img:
            #     cv2.imshow(str(p), im0)
            #     cv2.waitKey(1)  # 1 millisecond

            # Save results (image with detections)
            if save_img:
                total = person + mask_num + nomask_num
                print(f"With mask: {mask_num}, without mask: {nomask_num}, undetermined: {person}, total: {total}")
                if dataset.mode == 'image':
                    im0 = cv2.putText(im0, f"{nomask_num}no {mask_num}yes {person}cannot {total}all", (5, 50),
                                      cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)
                    # Alternative: delete source images with no detections
                    # (if you enable these lines, comment out the two active lines below)
                    # if mask_num == nomask_num == 0:
                    #     os.remove(path)  # delete images where nothing was detected
                    # cv2.imwrite(save_path, im0)
                    # mask_num = nomask_num = person = 0
                    cv2.imwrite(save_path, im0)
                    mask_num = nomask_num = person = 0  # reset the counters for the next image
                    # img1 = cv2.resize(im0, (1100, 700))
                    # cv2.imshow('img', img1)
                    # cv2.waitKey(100)
                # else:  # 'video' or 'stream'
                #     if vid_path[i] != save_path:  # new video
                #         vid_path[i] = save_path
                #         if isinstance(vid_writer[i], cv2.VideoWriter):
                #             vid_writer[i].release()  # release previous video writer
                #         if vid_cap:  # video
                #             fps = vid_cap.get(cv2.CAP_PROP_FPS)
                #             w = int(vid_cap.get(cv2.CAP_PROP_FRAME_WIDTH))
                #             h = int(vid_cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
                #         else:  # stream
                #             fps, w, h = 30, im0.shape[1], im0.shape[0]
                #             save_path += '.mp4'
                #         vid_writer[i] = cv2.VideoWriter(save_path, cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))
                #     vid_writer[i].write(im0)

    # Print results
    t = tuple(x / seen * 1E3 for x in dt)  # speeds per image
    LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {(1, 3, *imgsz)}' % t)
    if save_txt or save_img:
        s = f"\n{len(list(save_dir.glob('labels/*.txt')))} labels saved to {save_dir / 'labels'}" if save_txt else ''
        LOGGER.info(f"Results saved to {colorstr('bold', save_dir)}{s}")
    if update:
        strip_optimizer(weights)  # update model (to fix SourceChangeWarning)


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', nargs='+', type=str, default=ROOT / 'runs/train/exp4/exp4/weights/best.pt', help='model path(s)')  # your trained weights
    parser.add_argument('--source', type=str, default=ROOT / '1512629522879614977/1-1666145676282-5.jpg', help='file/dir/URL/glob, 0 for webcam')
    parser.add_argument('--imgsz', '--img', '--img-size', nargs='+', type=int, default=[640], help='inference size h,w')
    parser.add_argument('--conf-thres', type=float, default=0.25, help='confidence threshold')
    parser.add_argument('--iou-thres', type=float, default=0.45, help='NMS IoU threshold')
    parser.add_argument('--max-det', type=int, default=1000, help='maximum detections per image')
    parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--view-img', action='store_true', help='show results')
    parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')
    parser.add_argument('--save-conf', action='store_true', help='save confidences in --save-txt labels')
    parser.add_argument('--save-crop', action='store_true', help='save cropped prediction boxes')
    parser.add_argument('--nosave', action='store_true', help='do not save images/videos')
    parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --classes 0, or --classes 0 2 3')
    parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
    parser.add_argument('--augment', action='store_true', help='augmented inference')
    parser.add_argument('--visualize', action='store_true', help='visualize features')
    parser.add_argument('--update', action='store_true', help='update all models')
    parser.add_argument('--project', default=ROOT / 'runs/detect', help='save results to project/name')
    parser.add_argument('--name', default='exp', help='save results to project/name')
    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    parser.add_argument('--line-thickness', default=2, type=int, help='bounding box thickness (pixels)')
    parser.add_argument('--hide-labels', default=False, action='store_true', help='hide labels')
    parser.add_argument('--hide-conf', default=False, action='store_true', help='hide confidences')
    parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')
    parser.add_argument('--dnn', action='store_true', help='use OpenCV DNN for ONNX inference')
    opt = parser.parse_args()
    opt.imgsz *= 2 if len(opt.imgsz) == 1 else 1  # expand
    print_args(FILE.stem, opt)
    return opt


def main(opt):
    check_requirements(exclude=('tensorboard', 'thop'))
    run(**vars(opt))


# class MyThread(threading.Thread):
#     def run(self):
#         for i in range(5):
#             opt = parse_opt()
#             root_dir = ""  # root directory; leave empty if the folder is in the current directory
#             image = "runs/detect/Images" + str(i)  # image folder name; must be in the same directory as detect.py
#             img = MyData(root_dir, image)
#             for i in range(len(img)):
#                 opt.source = img[i]
#                 main(opt)  # multithreaded

# Command-line usage
# python detect.py --weights runs/train/exp_yolov5s/weights/best.pt --source data/images/fishman.jpg  # webcam

if __name__ == "__main__":
    # for i in range(5):
    #     t = MyThread()
    #     t.start()  # multithreaded
    opt = parse_opt()
    root_dir = ""  # root directory; leave empty if the folder is in the current directory
    image = "runs/detect/Images0"  # image folder name; must be in the same directory as detect.py
    img = MyData(root_dir, image)
    # Run detection once per image (note: main() reloads the model on every call)
    for i in range(len(img)):
        opt.source = img[i]
        main(opt)

    # opt = parse_opt()
    # main(opt)  # single image
```

Next, create a new file named dataread.py:

```python
import os

from torch.utils.data import Dataset


class MyData(Dataset):
    """Lists the files in root_dir/image_dir and returns their paths by index."""

    def __init__(self, root_dir, image_dir):
        self.root_dir = root_dir
        self.image_dir = image_dir
        self.image_path = os.path.join(self.root_dir, self.image_dir)
        self.image_list = os.listdir(self.image_path)
        self.image_list.sort()

    def __getitem__(self, idx):
        img_name = self.image_list[idx]
        img_item_path = os.path.join(self.root_dir, self.image_dir, img_name)
        # str.replace returns a new string, so the result must be assigned back
        img_item_path = img_item_path.replace("\\", "/")
        return img_item_path

    def __len__(self):
        return len(self.image_list)


if __name__ == '__main__':
    root_dir = ""  # root directory; leave empty if the folder is in the current directory
    image_ants = "1512629522879614977"  # image folder name; must be in the same directory as detect.py
    ants_dataset = MyData(root_dir, image_ants)
    print(ants_dataset[1])
```
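`MyData` returns every entry in the folder. A stray non-image file (a `.txt`, a `Thumbs.db`, and so on) would therefore be passed to detect.py as a source. Below is a sketch of an image-only listing, assuming the extension set shown is enough for your data. `list_images` and `IMAGE_EXTS` are hypothetical names, not part of YOLOv5.

```python
import os

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set; extend as needed


def list_images(folder):
    """Return sorted, forward-slash paths of the image files in folder."""
    names = sorted(n for n in os.listdir(folder)
                   if os.path.splitext(n)[1].lower() in IMAGE_EXTS)  # case-insensitive extension check
    return [os.path.join(folder, n).replace("\\", "/") for n in names]
```

Its result can replace `img` in the `__main__` loop of detect.py, since the loop only needs `len()` and indexing.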

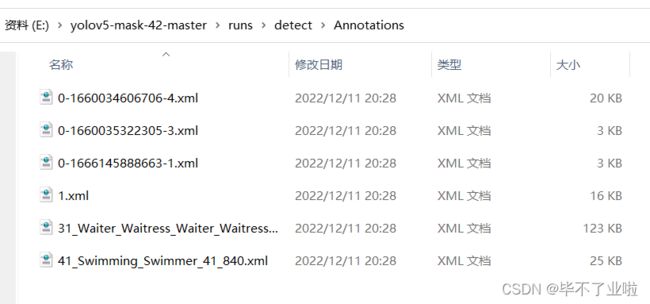

I put the images in runs/detect/Images0; if that directory does not exist, create it yourself. Then also create runs/detect/exp/labels and runs/detect/Annotations.
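Creating the three folders can also be scripted. This is a minimal sketch using `pathlib`, run from the YOLOv5 root directory, with the paths taken from the sentence above:

```python
from pathlib import Path

# Input images, YOLO txt labels, VOC xml output (paths used in this post)
dirs = ["runs/detect/Images0", "runs/detect/exp/labels", "runs/detect/Annotations"]
for d in dirs:
    Path(d).mkdir(parents=True, exist_ok=True)  # creates parents; no error if it already exists
```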

Then run `python detect.py --save-txt` in a terminal to start automatic labeling.
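With `--save-txt`, each detection is appended to `runs/detect/exp/labels/<image name>.txt` as `class x_center y_center width height`. The values are normalized by the image width and height; that is what the `xyxy2xywh(...) / gn` line in detect.py does. Below is a plain-Python sketch of the same computation for checking a label by hand. `xyxy_to_yolo_line` is a hypothetical helper, not a YOLOv5 function.

```python
def xyxy_to_yolo_line(cls, xyxy, img_w, img_h):
    """Format a pixel box (x1, y1, x2, y2) as a normalized YOLO label line."""
    x1, y1, x2, y2 = xyxy
    xc = (x1 + x2) / 2 / img_w  # normalized center x
    yc = (y1 + y2) / 2 / img_h  # normalized center y
    w = (x2 - x1) / img_w       # normalized width
    h = (y2 - y1) / img_h       # normalized height
    return ('%g ' * 5).rstrip() % (cls, xc, yc, w, h)  # same '%g' formatting as detect.py


print(xyxy_to_yolo_line(0, (256, 144, 384, 336), 640, 480))  # → 0 0.5 0.5 0.2 0.4
```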

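Converting txt to xml

Create another Python file. In the code, change the class-name mapping to the label names you want the boxes to get.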

```python
# Convert YOLO txt label files to VOC xml label files.
# Edit the classes in the dict below, plus the xml, txt and jpg paths.
import os
from xml.dom.minidom import Document

import cv2

# 'person','head','helmet','lifejacket'


def makexml(txtPath, xmlPath, picPath):  # txt label dir, xml output dir, dataset image dir
    # Map YOLO class ids to your own label names
    class_dict = {'2': "person",
                  '0': "with_mask",
                  '1': "without_mask",
                  '3': "with_mask"
                  }
    files = os.listdir(txtPath)
    for name in files:
        if not name.endswith(".txt"):
            continue
        xmlBuilder = Document()
        annotation = xmlBuilder.createElement("annotation")  # create the <annotation> root
        xmlBuilder.appendChild(annotation)
        with open(txtPath + name) as txtFile:
            txtList = txtFile.readlines()
        img = cv2.imread(picPath + name[0:-4] + ".jpg")
        if img is None:  # no matching .jpg for this label file
            print("Image not found for", name)
            continue
        Pheight, Pwidth, Pdepth = img.shape

        # <folder>, <filename> and <size> describe the whole image, so write them once per file
        folder = xmlBuilder.createElement("folder")  # <folder>
        folderContent = xmlBuilder.createTextNode("VOC2007")
        folder.appendChild(folderContent)
        annotation.appendChild(folder)

        filename = xmlBuilder.createElement("filename")  # <filename>
        filenameContent = xmlBuilder.createTextNode(name[0:-4] + ".jpg")  # same extension as the image read above
        filename.appendChild(filenameContent)
        annotation.appendChild(filename)

        size = xmlBuilder.createElement("size")  # <size>
        width = xmlBuilder.createElement("width")  # <size> child <width>
        widthContent = xmlBuilder.createTextNode(str(Pwidth))
        width.appendChild(widthContent)
        size.appendChild(width)
        height = xmlBuilder.createElement("height")  # <size> child <height>
        heightContent = xmlBuilder.createTextNode(str(Pheight))
        height.appendChild(heightContent)
        size.appendChild(height)
        depth = xmlBuilder.createElement("depth")  # <size> child <depth>
        depthContent = xmlBuilder.createTextNode(str(Pdepth))
        depth.appendChild(depthContent)
        size.appendChild(depth)
        annotation.appendChild(size)

        # One <object> per line of the txt file
        for line in txtList:
            oneline = line.strip().split(" ")
            if len(oneline) < 5:  # skip blank or malformed lines
                continue
            obj = xmlBuilder.createElement("object")
            picname = xmlBuilder.createElement("name")
            nameContent = xmlBuilder.createTextNode(class_dict[oneline[0]])
            picname.appendChild(nameContent)
            obj.appendChild(picname)
            pose = xmlBuilder.createElement("pose")
            poseContent = xmlBuilder.createTextNode("Unspecified")
            pose.appendChild(poseContent)
            obj.appendChild(pose)
            truncated = xmlBuilder.createElement("truncated")
            truncatedContent = xmlBuilder.createTextNode("0")
            truncated.appendChild(truncatedContent)
            obj.appendChild(truncated)
            difficult = xmlBuilder.createElement("difficult")
            difficultContent = xmlBuilder.createTextNode("0")
            difficult.appendChild(difficultContent)
            obj.appendChild(difficult)

            # YOLO stores normalized (x_center, y_center, w, h); convert to pixel corners.
            # The +1 offset is kept from the original script.
            bndbox = xmlBuilder.createElement("bndbox")
            xmin = xmlBuilder.createElement("xmin")
            mathData = int(((float(oneline[1])) * Pwidth + 1) - (float(oneline[3])) * 0.5 * Pwidth)
            xminContent = xmlBuilder.createTextNode(str(mathData))
            xmin.appendChild(xminContent)
            bndbox.appendChild(xmin)
            ymin = xmlBuilder.createElement("ymin")
            mathData = int(((float(oneline[2])) * Pheight + 1) - (float(oneline[4])) * 0.5 * Pheight)
            yminContent = xmlBuilder.createTextNode(str(mathData))
            ymin.appendChild(yminContent)
            bndbox.appendChild(ymin)
            xmax = xmlBuilder.createElement("xmax")
            mathData = int(((float(oneline[1])) * Pwidth + 1) + (float(oneline[3])) * 0.5 * Pwidth)
            xmaxContent = xmlBuilder.createTextNode(str(mathData))
            xmax.appendChild(xmaxContent)
            bndbox.appendChild(xmax)
            ymax = xmlBuilder.createElement("ymax")
            mathData = int(((float(oneline[2])) * Pheight + 1) + (float(oneline[4])) * 0.5 * Pheight)
            ymaxContent = xmlBuilder.createTextNode(str(mathData))
            ymax.appendChild(ymaxContent)
            bndbox.appendChild(ymax)
            obj.appendChild(bndbox)
            annotation.appendChild(obj)

        with open(xmlPath + name[0:-4] + ".xml", 'w', encoding='utf-8') as f:
            xmlBuilder.writexml(f, indent='\t', newl='\n', addindent='\t', encoding='utf-8')


makexml("runs/detect/exp/labels/",  # txt folder
        "runs/detect/Annotations/",  # xml folder
        "runs/detect/Images0/")  # image folder
```

After running it, the annotation is done.
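If the script instead stops with a `KeyError`, a label file contains a class id that is missing from the dict in `makexml`. Here is a quick pre-check sketch that scans the label folder for such ids. `find_unmapped_ids` is a hypothetical helper.

```python
import os


def find_unmapped_ids(txt_dir, class_names):
    """Return the class ids found in YOLO txt files that have no entry in class_names."""
    seen = set()
    for name in os.listdir(txt_dir):
        if not name.endswith(".txt"):
            continue
        with open(os.path.join(txt_dir, name)) as f:
            for line in f:
                parts = line.split()
                if parts:  # skip blank lines
                    seen.add(parts[0])  # the first column is the class id
    return sorted(seen - set(class_names))  # ids present in labels but not in the dict keys
```

For example, `find_unmapped_ids("runs/detect/exp/labels", {'0': "with_mask", '1': "without_mask", '2': "person", '3': "with_mask"})` should return an empty list before you run the conversion.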

Open the results in labelImg to check them; you can see the automatic annotation worked.
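To spot-check a file without opening labelImg, you can read the boxes back with the standard library's `xml.etree.ElementTree`. This is a minimal sketch assuming the VOC layout written above. `read_voc_boxes` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_path):
    """Return [(name, xmin, ymin, xmax, ymax), ...] from one VOC xml file."""
    root = ET.parse(xml_path).getroot()
    boxes = []
    for obj in root.findall("object"):  # direct <object> children of <annotation>
        bb = obj.find("bndbox")
        corners = (int(float(bb.find(k).text)) for k in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.find("name").text, *corners))
    return boxes
```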

To delete one class of labels, create one more Python file:

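```python
import os
import xml.etree.ElementTree as ET

yuan_dir = 'runs/detect/Annotations'  # directory of the original xml labels
new_dir = 'runs/detect/Annotations'  # output directory; same as the source here, so files are overwritten in place

for filename in os.listdir(yuan_dir):
    if not filename.endswith('.xml'):
        continue
    file_path = os.path.join(yuan_dir, filename)
    new_path = os.path.join(new_dir, filename)
    dom = ET.parse(file_path)
    root = dom.getroot()
    # findall() returns a list, so removing objects inside the loop is safe
    # (removing while iterating root.iter() can skip the next object)
    for obj in root.findall('object'):  # check the <name> child of each <object>
        if obj.find('name').text == 'with_mask':
            root.remove(obj)
            # print("change %s to %s." % (yuan_name, new_name1))
        elif obj.find('name').text == 'a':
            root.remove(obj)
        # more classes can be removed with additional elif branches
    # save to the target file
    dom.write(new_path, encoding='utf-8', xml_declaration=True)
```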

You can see the labels have been removed automatically.
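To confirm the deletion across the whole folder, count the remaining labels per class. A removed class should no longer appear. This is a sketch using `collections.Counter`. `count_classes` is a hypothetical helper.

```python
import os
import xml.etree.ElementTree as ET
from collections import Counter


def count_classes(xml_dir):
    """Count <object><name> values over every xml file in xml_dir."""
    counts = Counter()
    for name in os.listdir(xml_dir):
        if name.endswith(".xml"):
            root = ET.parse(os.path.join(xml_dir, name)).getroot()
            counts.update(obj.findtext("name") for obj in root.findall("object"))
    return counts
```

For example, `count_classes("runs/detect/Annotations")["with_mask"]` should be 0 after the script above has run.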