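- [Getting Started with Image Processing] 12. Capstone Projects and Advanced Topics: Super-Resolution, Medical Segmentation, and Industrial Inspection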

小米玄戒Andrew

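Image Processing: From Beginner to Expert · image processing · artificial intelligence · deep learning · algorithms · python · computer vision · CV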

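Abstract: This week focuses on three high-value capstone projects that break down the barrier between traditional algorithms and deep learning. We use image super-resolution reconstruction to compare traditional methods with deep learning approaches, implement U-Net for medical image segmentation, and design a complete pipeline for industrial defect detection. Each project covers the underlying principles, a code implementation, and performance optimization, helping readers move from "applying techniques" to "designing systems". I. Project 1: Image Super-Resolution Reconstruction (the leap from blurry to sharp) 1. Technical background and core metrics: Super-resolution (SR) uses algorithms to restore a low-resolution (LR) image to a high-resolution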
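The snippet breaks off before it names its "core metrics." PSNR (peak signal-to-noise ratio) is one metric commonly reported for super-resolution. Below is a minimal sketch. It assumes 8-bit grayscale images held in `int[height][width]` arrays of identical size, and the class name `Psnr` is hypothetical.

```java
// Minimal PSNR sketch. Assumptions: 8-bit grayscale images stored as int[height][width]
// with values in 0..255, and both images have identical dimensions.
final class Psnr {
    static final double MAX_PIXEL = 255.0;

    // PSNR = 10 * log10(MAX^2 / MSE); higher means the reconstruction is closer to the reference.
    static double psnr(int[][] reference, int[][] reconstructed) {
        int h = reference.length;
        int w = reference[0].length;
        double sumSq = 0.0;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double d = reference[y][x] - reconstructed[y][x];
                sumSq += d * d;
            }
        }
        double mse = sumSq / ((double) h * w);
        if (mse == 0.0) {
            return Double.POSITIVE_INFINITY; // identical images
        }
        return 10.0 * Math.log10(MAX_PIXEL * MAX_PIXEL / mse);
    }

    public static void main(String[] args) {
        int[][] groundTruth = {{10, 20}, {30, 40}}; // toy "HR" image
        int[][] upscaled = {{12, 18}, {30, 40}};    // toy "SR" output
        System.out.printf("PSNR = %.2f dB%n", psnr(groundTruth, upscaled));
    }
}
```

PSNR only measures pixel-wise error. SR papers often report it together with perceptual or structural metrics.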

- Flower Image Classification with a DenseNet Model

深度学习乐园

classification · data mining · artificial intelligence

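See the end of the article for how to get the project source code! Materials for 600+ deep learning projects are available; join the community and learn with us. «------ Classic Past Recommendations ------» Projects: 1. [Radiology report generation based on CNN-RNN] 2. [Road detection in satellite images with a DeepLabV3Plus model] 3. [Anime avatar generation with a GAN model] 4. [MNIST handwritten digit recognition with a CNN model] 5. [Aircraft detection with a Faster R-CNN model] 6. [Residential electricity consumption forecasting with CNN-LSTM] 7. [VG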

- A Recommendation Algorithm Based on AFM (Attentional Factorization Machines)

深度学习乐园

deep learning hands-on projects · deep learning research projects · recommendation algorithms · algorithms · machine learning

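About the Deep Practice Community: We are an independent studio in the field of deep learning. Team members include master's graduates of USTC, New York University, and Zhejiang University, a PhD from East China University of Science and Technology, and others, who have worked as algorithm engineers/product managers at Tencent, Baidu, Deloitte, and other companies. We have 200,000+ followers across platforms and hold 2 national-level AI invention patents. Community highlights: hands-on deep learning and algorithm innovation. To get all complete project datasets, code, and video tutorials, visit the official website: zzgcz.com. Tutoring and Q&A for competitions/papers/graduation projects, v (WeChat): zzgcz_com 1. Project overview: Project A033 based on A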

- Deep Learning in Practice: Building AI Applications with Embedding Models

AIGC应用创新大全

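AI, Artificial Intelligence, and Big Data Application Development · MCP & Agent · cloud computing power networks · artificial intelligence · deep learning · ai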

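Deep Learning in Practice: Building AI Applications with Embedding Models. Keywords: embedding model, deep learning, vector space, semantic representation, AI application development, similarity search, transfer learning. Abstract: This article takes you from zero to mastery of the full workflow for building AI applications on embedding models. We use everyday analogies such as a "translator" and a "digital ID card" to break down the core principles of embedding models. Hands-on Python code (BERT/CLIP models) shows how text and images are converted into computable semantic vectors, and through "intelligent customer-service Q&A", "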
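Once text or images have been turned into vectors, similarity search typically ranks stored vectors by their cosine similarity to a query vector. Below is a minimal brute-force sketch. The three-dimensional vectors and their labels are made-up stand-ins for real BERT/CLIP embeddings, and `CosineSearch` is a hypothetical name.

```java
import java.util.List;

// Brute-force similarity search over embedding vectors using cosine similarity.
// The vectors are toy values; in practice they would come from an embedding model.
final class CosineSearch {
    static double cosine(double[] a, double[] b) {
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns the index of the stored vector most similar to the query.
    static int nearest(double[] query, List<double[]> corpus) {
        int best = -1;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < corpus.size(); i++) {
            double score = cosine(query, corpus.get(i));
            if (score > bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<double[]> corpus = List.of(
            new double[] {1.0, 0.0, 0.0},   // e.g. "refund policy"
            new double[] {0.0, 1.0, 0.2},   // e.g. "shipping time"
            new double[] {0.1, 0.1, 1.0});  // e.g. "account login"
        double[] query = {0.9, 0.1, 0.0};   // e.g. "how do I get my money back?"
        System.out.println("best match index: " + nearest(query, corpus));
    }
}
```

Brute force costs O(n·d) per query. Large collections usually switch to an approximate nearest-neighbor index. A zero vector makes the cosine undefined (NaN here), so such vectors are never selected.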

- Convolutional Neural Network (CNN)

不想秃头的程序

neural networks · speech recognition · artificial intelligence · deep learning · networks · convolutional neural networks

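A convolutional neural network (CNN) is a deep learning model designed for grid-structured data such as images and video. It extracts features from the data automatically through convolutional layers, and it uses spatial weight sharing and pooling layers to cut the number of parameters and the computational cost. This has made it a core technology in computer vision. A detailed introduction to CNNs follows. I. Core idea: The core goal of a CNN is to learn hierarchical features from images automatically, reducing the parameter count and computational cost through spatial weight sharing and translation invariance. Its key components include: convolutional layers (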
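Convolution and weight sharing can be sketched for a single channel with stride 1 and no padding. This is a simplification: real convolutional layers add multiple channels, a bias, stride, and padding. `Conv2d` is a hypothetical name. For an H×W input and a kh×kw kernel, the output is (H - kh + 1)×(W - kw + 1).

```java
import java.util.Arrays;

// "Valid" 2D convolution (stride 1, no padding) on a single-channel input.
// As in most deep learning libraries, this is technically cross-correlation: the kernel is not flipped.
final class Conv2d {
    static double[][] conv2dValid(double[][] input, double[][] kernel) {
        int ih = input.length, iw = input[0].length;
        int kh = kernel.length, kw = kernel[0].length;
        int oh = ih - kh + 1, ow = iw - kw + 1; // output size for stride 1, no padding
        double[][] out = new double[oh][ow];
        for (int y = 0; y < oh; y++) {
            for (int x = 0; x < ow; x++) {
                double acc = 0.0;
                // The same kernel weights are applied at every position (weight sharing),
                // so the parameter count depends only on the kernel size, not the image size.
                for (int dy = 0; dy < kh; dy++) {
                    for (int dx = 0; dx < kw; dx++) {
                        acc += input[y + dy][x + dx] * kernel[dy][dx];
                    }
                }
                out[y][x] = acc;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        double[][] image = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}};
        double[][] kernel = {{1, 0}, {0, -1}}; // toy diagonal-difference filter
        System.out.println(Arrays.deepToString(conv2dValid(image, kernel)));
    }
}
```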

- ResNet (Residual Network)

不想秃头的程序

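neural networks · speech recognition · artificial intelligence · deep learning · networks · residual networks · neural networks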

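ResNet (Residual Network) is a classic convolutional neural network (CNN) architecture in deep learning, proposed in 2015 by Kaiming He et al. at Microsoft Research. Its residual connections (skip connections) ease the training difficulties of very deep networks. These difficulties are commonly explained as vanishing gradients; the original paper calls them the degradation problem. As a result, extremely deep models (e.g., over a hundred layers) became trainable, and ResNet achieved breakthrough results in image classification, object detection, semantic segmentation, and other tasks. A detailed introduction to ResNet follows. I. Core idea: ResNet's core innovation is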
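The residual idea can be written as y = ReLU(F(x) + x). The block only has to learn the residual F(x), and if F outputs zero the block passes a non-negative input through unchanged. Below is a toy sketch. It assumes F is a single square linear map; real ResNet blocks use convolutions and batch normalization. `ResidualBlock` is a hypothetical name.

```java
import java.util.Arrays;

// Residual connection sketch: y = ReLU(F(x) + x). Here F is a hypothetical toy layer,
// F(x) = W x with a square W, so F(x) has the same shape as x and the two can be added.
final class ResidualBlock {
    private final double[][] weight;

    ResidualBlock(double[][] weight) {
        this.weight = weight;
    }

    // The residual branch F(x) = W x.
    double[] residual(double[] x) {
        double[] f = new double[x.length];
        for (int i = 0; i < x.length; i++) {
            for (int j = 0; j < x.length; j++) {
                f[i] += weight[i][j] * x[j];
            }
        }
        return f;
    }

    // The skip connection adds the input back before the activation.
    double[] forward(double[] x) {
        double[] f = residual(x);
        double[] y = new double[x.length];
        for (int i = 0; i < x.length; i++) {
            y[i] = Math.max(0.0, f[i] + x[i]);
        }
        return y;
    }

    public static void main(String[] args) {
        // With W = 0 the block reduces to ReLU(x): an identity mapping for non-negative inputs.
        ResidualBlock zero = new ResidualBlock(new double[2][2]);
        System.out.println(Arrays.toString(zero.forward(new double[] {1.0, 2.0})));
    }
}
```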

- P25: Exploring and Predicting Diabetes with an LSTM

Agony

lstm · artificial intelligence · rnn

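This post is a study log from the 365-Day Deep Learning Training Camp. Original author: K同学啊. I. Related techniques 1. LSTM basics: An LSTM (long short-term memory network) is a variant of the RNN (recurrent neural network). Its gating structures alleviate the vanishing gradient problem of traditional RNNs (exploding gradients are usually handled separately, e.g. with gradient clipping), which makes it particularly well suited to sequence data. Structure: Forget gate: decides which information to discard; a sigmoid function outputs a value between 0 and 1 indicating how much is kept or forgotten. Input gate: decides which information to update, likewise using a sigmoid function to control the update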
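These gate descriptions correspond to the standard LSTM update equations. The sketch below runs one step of a scalar cell (hidden size 1). The weight values, and the `{input weight, recurrent weight, bias}` layout per gate, are illustrative assumptions and do not come from the post. `LstmCell` is a hypothetical name.

```java
// One time step of a scalar LSTM cell (hidden size 1) to show how the gates interact.
// The weights are hypothetical: each gate has {inputWeight, recurrentWeight, bias}.
final class LstmCell {
    private final double[] forget, input, candidate, output;

    LstmCell(double[] forget, double[] input, double[] candidate, double[] output) {
        this.forget = forget;
        this.input = input;
        this.candidate = candidate;
        this.output = output;
    }

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    private static double affine(double[] p, double x, double h) {
        return p[0] * x + p[1] * h + p[2];
    }

    /** Returns {hNext, cNext} given input x, previous hidden state h, and previous cell state c. */
    double[] step(double x, double h, double c) {
        double f = sigmoid(affine(forget, x, h));      // forget gate: fraction of c to keep
        double i = sigmoid(affine(input, x, h));       // input gate: fraction of new info to write
        double g = Math.tanh(affine(candidate, x, h)); // candidate cell value in (-1, 1)
        double o = sigmoid(affine(output, x, h));      // output gate
        double cNext = f * c + i * g;
        double hNext = o * Math.tanh(cNext);
        return new double[] {hNext, cNext};
    }

    public static void main(String[] args) {
        LstmCell cell = new LstmCell(
            new double[] {0.5, 0.1, 1.0},  // positive forget-gate bias encourages remembering
            new double[] {0.8, 0.2, 0.0},
            new double[] {1.0, 0.3, 0.0},
            new double[] {0.6, 0.1, 0.0});
        double h = 0.0, c = 0.0;
        for (double x : new double[] {1.0, 0.5, -1.0}) {
            double[] hc = cell.step(x, h, c);
            h = hc[0];
            c = hc[1];
            System.out.printf("x=%5.2f  h=%.4f  c=%.4f%n", x, h, c);
        }
    }
}
```

The additive update `cNext = f * c + i * g` is the path through which information, and gradients, can persist across many steps when f stays close to 1.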

- Python Training Camp Check-in: DAY16 (2025.5.5)

cosine2025

Python training camp check-in · python · programming languages · machine learning

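Contents I. Notes on NumPy array basics 1. Understanding array dimensions 2. How NumPy arrays relate to deep learning tensors 3. One-dimensional arrays (1D array) 4. Two-dimensional arrays (2D array) 5. Creating arrays 5.1 Simple creation 5.2 Random creation 5.3 Iterating over arrays 5.4 Array operations 6. Array indexing 6.1 1D array indexing 6.2 2D array indexing 6.3 3D array indexing II. A deeper understanding of SHAP values III. Summary 1. Summary of NumPy array basics 2. SH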

- [Machine Learning & Deep Learning] The Backpropagation Mechanism

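Contents I. One-sentence definition II. An analogy III. Why does it matter? IV. An everyday example: a neural network as a cooking robot 4.1 Step 1: take a bite (forward propagation) 4.2 Step 2: reason backward to find the cause (backpropagation) V. The same process described in AI terms VI. A visual analogy: finding the mountain top (the optimal parameters) VII. A plain-language summary VIII. PyTorch code example: seeing each layer's gradients IX. Gradient = partial derivative of the loss function with respect to a parameter X. Summary by analogy. Backpropagation is the core mechanism of the neural network training process; it is like "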
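The "reason backward" step can be made concrete with the chain rule, which backpropagation applies from the loss back toward each parameter. Below is a minimal sketch on a toy two-weight scalar model (hypothetical; it is not the post's PyTorch example). It compares the chain-rule gradients with finite differences.

```java
// Backpropagation on a two-"layer" scalar model: h = w1 * x, yHat = w2 * h, L = (yHat - y)^2.
// The chain rule is applied from the loss backward, and the result is checked numerically.
final class TinyBackprop {
    static double loss(double w1, double w2, double x, double y) {
        double yHat = w2 * (w1 * x);
        return (yHat - y) * (yHat - y);
    }

    /** Returns {dL/dw1, dL/dw2}. */
    static double[] gradients(double w1, double w2, double x, double y) {
        // Forward pass: keep intermediate values for the backward pass.
        double h = w1 * x;
        double yHat = w2 * h;
        // Backward pass: multiply local derivatives from the output toward the input.
        double dYHat = 2.0 * (yHat - y); // dL/dyHat
        double dW2 = dYHat * h;          // dL/dw2 = dL/dyHat * dyHat/dw2
        double dH = dYHat * w2;          // dL/dh  = dL/dyHat * dyHat/dh
        double dW1 = dH * x;             // dL/dw1 = dL/dh * dh/dw1
        return new double[] {dW1, dW2};
    }

    public static void main(String[] args) {
        double w1 = 0.5, w2 = -1.5, x = 2.0, y = 1.0, eps = 1e-6;
        double[] g = gradients(w1, w2, x, y);
        // Central finite differences as an independent check.
        double num1 = (loss(w1 + eps, w2, x, y) - loss(w1 - eps, w2, x, y)) / (2 * eps);
        double num2 = (loss(w1, w2 + eps, x, y) - loss(w1, w2 - eps, x, y)) / (2 * eps);
        System.out.printf("dL/dw1: backprop=%.6f numeric=%.6f%n", g[0], num1);
        System.out.printf("dL/dw2: backprop=%.6f numeric=%.6f%n", g[1], num2);
    }
}
```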

- Face Recognition Algorithms Power Security Upgrades for Unstaffed Campus Supermarkets

智驱力人工智能

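algorithms · artificial intelligence · edge computing · face recognition · smart campus · smart construction site · smart coal mine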

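Face Recognition Algorithms Power Security Upgrades for Unstaffed Campus Supermarkets. Main text: In running unstaffed supermarkets on campuses, traditional security relies on manual patrols or basic surveillance equipment. These suffer from slow response, high false-alarm rates, and poor adaptability to the environment. This article covers four angles: technical background, implementation path, functional advantages, and application scenarios. It explains how face detection, intrusion detection, and fatigue detection algorithms can work together to build an efficient, precise intelligent security system. I. Technical background: the core support of visual analysis algorithms. Face recognition algorithms are based on deep learning convolutional neural network (CNN) models, which extract facial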

- [Python Deep Learning] Mastering PyTorch Pooling Layers from Scratch: The nn.MaxPool Methods

Mr数据杨

Python Deep Learning · python · deep learning · pytorch

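In deep learning, max pooling is a key operation that reduces the dimensionality of data while preserving important features. It is like picking, from a pile of photos, the one that best represents a scene. PyTorch provides several max pooling layers, including nn.MaxPool1d, nn.MaxPool2d, and nn.MaxPool3d, each suited to data of a different dimensionality. For audio signals (one-dimensional data), you would use nn.MaxPool1d. For images (two-dimensional data),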
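Below is a minimal sketch of what nn.MaxPool2d computes on one channel. It assumes a square k×k window with stride k and no padding, which matches PyTorch's defaults (stride defaults to kernel_size and ceil_mode to False). The Java class `MaxPool` is hypothetical and mirrors only the arithmetic, not PyTorch's API.

```java
import java.util.Arrays;

// Single-channel 2D max pooling with a k x k window and stride k (non-overlapping windows).
// Rows/columns left over when the size is not a multiple of k are dropped (floor behavior).
final class MaxPool {
    static double[][] maxPool2d(double[][] in, int k) {
        int oh = in.length / k, ow = in[0].length / k;
        double[][] out = new double[oh][ow];
        for (int y = 0; y < oh; y++) {
            for (int x = 0; x < ow; x++) {
                double best = Double.NEGATIVE_INFINITY;
                for (int dy = 0; dy < k; dy++) {
                    for (int dx = 0; dx < k; dx++) {
                        best = Math.max(best, in[y * k + dy][x * k + dx]);
                    }
                }
                out[y][x] = best; // keep only the strongest response in each window
            }
        }
        return out;
    }

    public static void main(String[] args) {
        double[][] featureMap = {
            {1, 3, 2, 4},
            {5, 6, 7, 8},
            {9, 2, 1, 0},
            {3, 4, 5, 6}};
        System.out.println(Arrays.deepToString(maxPool2d(featureMap, 2)));
    }
}
```

A 4×4 map pooled with k = 2 becomes 2×2, so each spatial dimension is halved and no parameters are learned.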

- Reading Notes (2) Single-Layer Networks: Regression

a2507283885

notes

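Reading Notes (2) Single-Layer Networks: Regression. These notes are Task02 of the DataWhale study-group program (reading the new "AI bible" together: Deep Learning: Foundations and Concepts). The content below reflects my personal understanding and may contain inaccuracies or omissions; please treat the textbook as authoritative. 1. Linear regression from a functional perspective: Remember the concept of a "basis" from linear algebra? A basis is a set of linearly independent vectors whose linear combinations span a vector space. In other words, any vector in that space can be written as a linear combination of the basis vectors. Functions can also be viewed as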
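The basis idea carries over directly to regression. The note is cut off before its own formulas, so the following uses the standard notation for linear basis-function models (an assumption). A linear regression model with M basis functions φ_j is

$$
y(\mathbf{x}, \mathbf{w}) = \sum_{j=0}^{M-1} w_j \, \phi_j(\mathbf{x}) = \mathbf{w}^{\top} \boldsymbol{\phi}(\mathbf{x}), \qquad \phi_0(\mathbf{x}) = 1
$$

where φ_0 absorbs the bias term. The model is linear in the weights w even when the φ_j are nonlinear in x. For example, the polynomial basis φ_j(x) = x^j gives ordinary polynomial curve fitting.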

- [Deep Learning Q&A] If You Implemented Sentiment Analysis or Text Classification with an RNN, How Would You Design the Data Input?

云博士的AI课堂

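Large Model Technology Development and Practice · A Harvard Postdoc Takes You Through Machine Learning and Deep Learning · deep learning · rnn · classification · artificial intelligence · machine learning · neural networks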

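The following is a complete technical plan for designing the data input when using an RNN for sentiment analysis/text classification: 1. Introduction and background: Sentiment analysis/text classification is a core NLP task whose goal is to map text to predefined categories (such as positive/negative sentiment). RNNs handle sequential data naturally, which made them a mainstream approach. The core challenge is converting unstructured text into numerical sequence inputs that an RNN can process. 2. Principles: The text-to-vector pipeline: raw text → tokenization → vocabulary construction → word-to-index mapping → embedding layer → sequence of vectors. Key mathematical representations: word embedding representation: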
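The pipeline above (tokenize, build a vocabulary, map words to indices, then feed an embedding layer) can be sketched up to the index step. Assumptions: whitespace tokenization (Chinese text would need a word segmenter), reserved ids 0 for `<pad>` and 1 for `<unk>`, and right-padding to a fixed length. The embedding lookup itself is omitted, and `TextToIndices` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

final class TextToIndices {
    static final int PAD = 0;
    static final int UNK = 1;

    // Whitespace tokenizer (an assumption; Chinese text would need a word segmenter).
    static List<String> tokenize(String s) {
        List<String> tokens = new ArrayList<>();
        for (String t : s.toLowerCase(Locale.ROOT).trim().split("\\s+")) {
            if (!t.isEmpty()) {
                tokens.add(t);
            }
        }
        return tokens;
    }

    // Build a vocabulary from training sentences; ids are assigned in first-seen order.
    static Map<String, Integer> buildVocab(List<String> sentences) {
        Map<String, Integer> vocab = new LinkedHashMap<>();
        vocab.put("<pad>", PAD);
        vocab.put("<unk>", UNK);
        for (String s : sentences) {
            for (String tok : tokenize(s)) {
                vocab.putIfAbsent(tok, vocab.size());
            }
        }
        return vocab;
    }

    // Map tokens to ids, then truncate or right-pad to maxLen so sequences can be batched.
    static int[] encode(String s, Map<String, Integer> vocab, int maxLen) {
        int[] ids = new int[maxLen]; // zero-initialized, i.e. already filled with PAD
        List<String> tokens = tokenize(s);
        for (int i = 0; i < Math.min(maxLen, tokens.size()); i++) {
            ids[i] = vocab.getOrDefault(tokens.get(i), UNK); // unseen words map to <unk>
        }
        return ids;
    }

    public static void main(String[] args) {
        Map<String, Integer> vocab = buildVocab(List.of("I love this movie", "I hate this movie"));
        System.out.println(vocab);
        System.out.println(Arrays.toString(encode("I love it", vocab, 5)));
    }
}
```

The resulting fixed-length id arrays are what an embedding layer would turn into the vector sequence the RNN consumes.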

- Deploying PyTorch Models on Android

python&java

pytorch · artificial intelligence · python

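PyTorch is a popular deep learning framework for algorithm development, and Android is a widely used operating system, mostly on mobile devices. Most current research focuses on the algorithms themselves, but I find putting algorithms into production very interesting. I therefore plan to share a series of articles on model deployment, possibly followed by WeChat mini-program deployment, PyQt deployment and exe packaging, ncnn deployment, TensorRT deployment, and MNN deployment. This article covers deploying PyTorch models on Android. Readers of this article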

- Artificial Intelligence Basics, Part 5: Modeling Approaches (Discriminative and Generative Models)

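Machine learning includes many modeling approaches, of which discriminative modeling and generative modeling are the two most common. Both can be implemented with deep learning techniques and used to build different kinds of models. Put simply, building a model requires a modeling approach suited to the requirements, and these approaches fall into two broad categories. The first is discriminative models, which produce a specific output for given input data. For example, to decide whether a picture shows a cat or a dog, a discriminative model directly learns the differences between "cat" and "dog" features (such as ear shape,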

- PyTorch Tutorial: Dynamic Quantization of an LSTM Language Model Explained

怀灏其Prudent

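PyTorch Tutorial: Dynamic Quantization of an LSTM Language Model Explained. tutorials: PyTorch tutorials. Project URL: https://gitcode.com/gh_mirrors/tuto/tutorials Preface: When deploying deep learning models, model size and inference speed are two crucial considerations. PyTorch's dynamic quantization can effectively reduce model size and speed up inference without significantly hurting accuracy. This article explains in depth how to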

- [Machine Learning] Math Fundamentals: Tensors (Beginner's Edition)

一叶千舟

Deep Learning [Theory] · machine learning · artificial intelligence

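Contents Preface I. Definition of a tensor 1. Scalar (0-D tensor) 2. Vector (1-D tensor) 3. Matrix (2-D tensor) 4. Higher-order tensors (3-D and above) II. Mathematical representation of tensors 2.1 Tensor notation examples III. Tensor operations 3.1 Common tensor operations IV. Tensors in deep learning 4.1 PyTorch example: tensors in a neural network V. Summary: the multidimensional world of tensors Further reading Preface: Tensors are a crucial concept in machine learning, deep learning, and physics. Whether in the neural networks of artificial intelligence or in advanced mathematics and phys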

- Five Iterations of a Backend Development Intern Resume, to Help You Land an Internship

今天不coding

resume · internship · backend · Java · big-tech summer internship

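Five iterations of a backend development intern resume, to help you land an internship. 1.0: My first resume, written when I started graduate school, mainly summarized my undergraduate projects. As an undergraduate I mostly did deep learning projects and competitions, learned relatively little development technology, and had never built a backend project. Even so, it landed me an algorithm internship. In my first year of grad school I still wanted to become an algorithm engineer; later I decided it wasn't for me and gave it up. 2.0: After several months of an algorithm internship and the torment of writing papers, I decided to switch to backend development, chose Java as my main language, and built a project by following tutorials on Bilibili,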

- [Machine Learning in Practice] Datawhale Summer Camp 2: A Review of Deep Learning

城主_全栈开发

Machine Learning · machine learning · deep learning · artificial intelligence

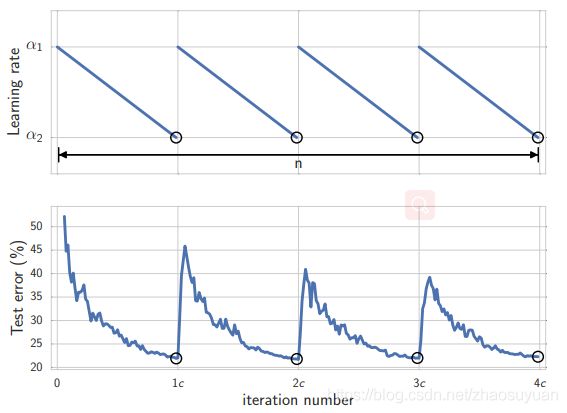

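#DataWhale Summer Camp# #AI Summer Camp# Contents 1. Definition of deep learning 1.1 Deep learning & graph neural networks 1.2 The relationship between machine learning and deep learning 2. The deep learning training process 2.1 Mathematical foundations 2.1.1 Gradient descent: basic principle, mathematical formulation, steps, learning rate α, variants of gradient descent 2.1.2 Neural networks and matrices: network structure representation, forward propagation, activation functions, backpropagation, batching, convolution, parameter updates, optimization algorithms, regularization, initialization 2.2 Activation functions: Sigmoid function, Tanh function, ReLU function (Rectified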
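The three activations named in the outline, plus the derivatives that backpropagation uses, fit in a few lines. Below is a minimal sketch. `Activations` is a hypothetical name, and the derivative of ReLU at 0 is set to 0 by convention.

```java
// Sigmoid, Tanh and ReLU, plus the derivatives used during backpropagation.
final class Activations {
    static double sigmoid(double z) { return 1.0 / (1.0 + Math.exp(-z)); } // range (0, 1)
    static double tanh(double z) { return Math.tanh(z); }                   // range (-1, 1)
    static double relu(double z) { return Math.max(0.0, z); }               // range [0, +inf)

    static double sigmoidGrad(double z) {
        double s = sigmoid(z);
        return s * (1.0 - s); // at most 0.25, so stacked sigmoids tend to shrink gradients
    }

    static double tanhGrad(double z) {
        double t = Math.tanh(z);
        return 1.0 - t * t; // at most 1, reached at z = 0
    }

    static double reluGrad(double z) {
        return z > 0 ? 1.0 : 0.0; // the value at z = 0 is a convention; 0 is used here
    }

    public static void main(String[] args) {
        for (double z : new double[] {-2.0, 0.0, 2.0}) {
            System.out.printf("z=%5.1f sigmoid=%.4f tanh=%.4f relu=%.1f%n",
                z, sigmoid(z), tanh(z), relu(z));
        }
    }
}
```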

- Deep Learning Explained: Understanding Machine Learning Basics Through an Example

beist

deep learning · machine learning · artificial intelligence

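Introduction: Machine learning (ML) and deep learning (DL) are two important concepts in modern artificial intelligence. Once a machine can learn, it can find a function from data automatically and apply it to tasks such as speech recognition, image recognition, and game playing. In these notes we walk step by step through the basics of machine learning using a simple example. 1.1 A machine learning case study 1.1.1 Regression and classification: In machine learning, tasks are divided according to the type of problem to be solved,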

- Large Model Quantization

需要重新演唱

large model quantization

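Large model quantization is an optimization technique that reduces the memory footprint of deep learning models and speeds up inference while preserving accuracy as much as possible. It works by converting the model's floating-point weights and activations into lower-precision representations. Below is a detailed overview of large model quantization: Contents 1. Quantization basics 1.1 Definition 1.2 Advantages 1.3 Challenges 2. Quantization methods 2.1 Quantization types 2.2 Quantization granularity 2.3 Quantization algorithms 3. Quantization in practice 3.1 Quantization workflow 3.2 Quantization tools 4. Quantization case studies 4.1 BERT quantization 4.2 GPT-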
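The conversion of floating-point weights to a lower-precision representation can be illustrated with symmetric per-tensor int8 quantization. This is one common scheme among several; asymmetric schemes add a zero-point. Below is a minimal sketch that assumes a single scale for the whole tensor. `Int8Quant` is a hypothetical name.

```java
import java.util.Arrays;

// Symmetric per-tensor int8 quantization (one common scheme, assumed here):
//   scale = max|w| / 127,  q = clamp(round(w / scale), -127, 127),  w' = q * scale.
// Storing q as int8 takes 1 byte per weight instead of 4 bytes for float32.
final class Int8Quant {
    static double scaleFor(double[] w) {
        double maxAbs = 0.0;
        for (double v : w) {
            maxAbs = Math.max(maxAbs, Math.abs(v));
        }
        return maxAbs == 0.0 ? 1.0 : maxAbs / 127.0; // avoid dividing by zero for all-zero tensors
    }

    static byte[] quantize(double[] w, double scale) {
        byte[] q = new byte[w.length];
        for (int i = 0; i < w.length; i++) {
            long r = Math.round(w[i] / scale);
            q[i] = (byte) Math.max(-127, Math.min(127, r)); // clamp to the int8 range used
        }
        return q;
    }

    static double[] dequantize(byte[] q, double scale) {
        double[] w = new double[q.length];
        for (int i = 0; i < q.length; i++) {
            w[i] = q[i] * scale;
        }
        return w;
    }

    public static void main(String[] args) {
        double[] weights = {0.5, -1.27, 0.0, 1.27, 0.333};
        double scale = scaleFor(weights);
        byte[] q = quantize(weights, scale);
        System.out.println("scale = " + scale);
        System.out.println("int8  = " + Arrays.toString(q));
        System.out.println("deq   = " + Arrays.toString(dequantize(q, scale)));
    }
}
```

The round-trip error per weight is at most scale / 2. A single outlier weight inflates the scale for the whole tensor, which is one reason finer granularities (per-channel, per-group) exist.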

- PyTorch Essentials: The Jacobian-Vector Product

AI大模型教程

pytorch · artificial intelligence · python · facebook · deep learning · machine learning · webpack

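Automatic differentiation is at the core of the PyTorch deep learning framework, so it deserves focused study. It also brings in another important mathematical concept: the Jacobian-vector product. Automatic differentiation and Jacobian-vector products in PyTorch: Automatic differentiation (AD) is one of the key technologies in deep learning frameworks, making model training simpler and more efficient. PyTorch, a widely used deep learning framework, has powerful built-in automatic differentiation. In this article, we will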

- A Complete Guide to Ascend AI Ecosystem Components: An In-Depth Comparison with the NVIDIA Ecosystem

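With the rapid development of artificial intelligence, the rise of domestic Chinese AI chips is reshaping the global computing industry. Huawei's Ascend series of AI processors, built on the in-house Da Vinci architecture, has formed a complete hardware and software ecosystem. This article compares the Ascend and NVIDIA ecosystems along two dimensions, core components and accelerator card performance, and analyzes their technical differences and suitable scenarios. I. Ascend core components versus their NVIDIA counterparts 1. Inference engine: MindIE vs TensorRT. Ascend MindIE 1.0.0 is a deep learning infer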

- An Image and Video Processing Solution for Intelligent Vehicles, with Smart Video Packaging and Creation Capabilities

美摄科技

automotive

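In this fast-changing intelligent era, every frame carries power beyond imagination. As autonomous driving advances rapidly, intelligent vehicles have become not only synonymous with future mobility but also a model of the fusion of technology and art. In this wave of change, 美摄科技 (Meishe Technology) has launched a leading image and video processing solution for intelligent vehicles. The company presents it as an unprecedented visual feast for the intelligent vehicle industry that redefines the visual experience of intelligent mobility. I. Intelligent reinvention, a new visual realm: Meishe Technology's intelligent vehicle image and video processing solution is based on deep learning, artificial intelligence, and big data process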

- Environment Setup for OpenMMLab, the Open-Source Deep Learning Computer Vision System (mmsegmentation, mmdetection, mmpose) [Detailed, Runnable]

nomoremorphine

deep learning · computer vision · open source

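OpenMMLab (mmsegmentation, mmdetection, mmpose) environment setup. Introduction to OpenMMLab. Advantages: I. Environment setup on Windows/Linux (using mmsegmentation v1.2.2, the latest version, as an example) 0. Confirm version information 1) Check your GPU version 2) Check the matching mmcv version 3) Confirm the versions 1. Install CUDA and cuDNN 2. Create a conda environment and install PyTorch 3. Install mmcv 4. Install MMS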

- Building OpenCV with CUDA Video Decoding Support

AI标书

python · opencv · cuda · nvidia · docker · build

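How to build OpenCV on Ubuntu with CUDA video decoding support (cudacodec). In high-performance computing fields such as deep learning and video processing, OpenCV's GPU acceleration is very important. Its cudacodec module in particular uses NVIDIA hardware directly for efficient video decoding, which greatly improves performance. Using an Ubuntu environment, this article walks through the whole process, from environment preparation to building and installing OpenCV with the cudacodec module enabled. The complete shell script and everything used for this build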

- Deep Learning: Gradient Descent

数字化与智能化

artificial intelligence · deep learning · deep learning · gradient descent

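I. The concept of the gradient (1) What is a gradient? A gradient is a vector. Its direction is the one in which the directional derivative of the function at that point is largest, i.e., the direction in which the function changes fastest there, and its magnitude is that maximum rate of change. For a multivariate function f(x1, x2, ..., xn), the gradient is the vector of the function's partial derivatives: ∇f = (∂f/∂x1, ∂f/∂x2, ..., ∂f/∂xn), where ∂f/∂xi denotes the partial derivative of f with respect to the i-th variable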
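The gradient definition leads directly to the gradient descent update x ← x - α∇f(x), which steps against the direction of fastest increase. Below is a minimal sketch on a hand-picked function, f(x1, x2) = (x1 - 3)² + 2(x2 + 1)². This f is an assumption, chosen because its gradient is easy to write and its minimum (3, -1) is known. `GradientDescent` is a hypothetical name.

```java
import java.util.Arrays;

// Plain (batch) gradient descent on f(x1, x2) = (x1 - 3)^2 + 2 * (x2 + 1)^2,
// whose gradient is (2 * (x1 - 3), 4 * (x2 + 1)) and whose minimum is at (3, -1).
final class GradientDescent {
    static double[] gradient(double[] x) {
        return new double[] {2.0 * (x[0] - 3.0), 4.0 * (x[1] + 1.0)};
    }

    // Update rule: x <- x - learningRate * grad f(x)
    static double[] minimize(double[] start, double learningRate, int steps) {
        double[] x = start.clone();
        for (int t = 0; t < steps; t++) {
            double[] g = gradient(x);
            for (int i = 0; i < x.length; i++) {
                x[i] -= learningRate * g[i]; // move against the gradient, i.e. downhill
            }
        }
        return x;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(minimize(new double[] {0.0, 0.0}, 0.1, 200)));
    }
}
```

With α = 0.1 the iterates converge to (3, -1). For this f, each step multiplies the x2 error by (1 - 4α), so any α above 0.5 makes that coordinate diverge, which shows why the learning rate has to be chosen with care.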

- C# vs Python: Which Is Better for Beginners? 5 Key Points for Mastering Linear Algebra in Deep Learning

墨瑾轩

Let's Learn C# Together [4] · c# · python · deep learning

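Follow 墨瑾轩 to explore the mysteries of programming! Super-cute tech guides to help you level up into a programming pro. A treasure trove of tech is ready and waiting for you to dig in. Subscribe to 墨瑾轩 for fun, smart learning; you are never alone. Set sail now and make your programming journey more fun. Hey, friends! Today we'll explore how to get started with deep learning in C#, with a special focus on linear algebra. You might wonder: "Why C# instead of Python?" Don't worry. We'll explain in detail, and by comparing the features of the two languages, show you why choosing C# for deep learning is not a bad idea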

- Risk Prevention and Control for Bank Intelligent Customer Service from a Compliance Perspective

AI 智能服务

intelligent customer service · artificial intelligence · AIGC · database · chatgpt

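1. Policy and technical background of AI-driven financial transformation. Policy direction: China's New Generation Artificial Intelligence Development Plan explicitly calls for developing intelligent finance. It requires building financial big-data platforms and improving multimedia data processing capabilities, innovating intelligent financial products and service models, promoting technologies such as intelligent customer service and monitoring, and establishing an intelligent risk-control early-warning system. Technical support: mature cloud computing and big-data technologies laid the foundation for AI development. Breakthroughs in deep learning algorithms then ignited the current wave of AI, significantly improving accuracy on complex tasks and in turn driving computer vision, machine learning, natural language processing (NL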

- Combining GRU and Transformer: A New Generation of Sequence Models

AI大模型应用工坊

gru · transformer · deep learning · ai

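Combining GRU and Transformer: a new generation of sequence models. Keywords: GRU, Transformer, sequence models, combination, deep learning. Abstract: This article takes an in-depth look at the new generation of sequence models formed by combining GRU and Transformer. It first introduces the core concepts and working principles of each, then explains why and how the two are combined and what the combination gains. A hands-on code example shows how to build the combined model, and the article also discusses practical applications in natural language processing, speech recognition, and other fields. Finally,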

- Understanding How Servlets Work, Part 2: GenericServlet and HttpServlet

周凡杨

java · HttpServlet · principles · GenericService · source code

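In the previous article, "Understanding How Servlets Work, Part 1," I mentioned two ways to implement the javax.servlet.Servlet interface (that is, to write your own servlet application). You can write a generic servlet that extends javax.servlet.GenericServlet, or an HTTP servlet that extends javax.servlet.http.HttpServlet (which is why our custom servlets usually exte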

- MySQL Performance Optimization

bijian1013

database · mysql

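Performance optimization means using effective methods to make MySQL run faster and use less disk space. It covers many areas, such as optimizing query speed, optimizing update speed, and optimizing the MySQL server. This article mainly covers the following methods: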

a. Optimizing queries

b. Optimizing the database structure

- Timed Retries with a ThreadPool

dai_lm

java · ThreadPool · thread · timer · timertask

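The project needs to run an HTTP request task whenever a certain event fires. Failed requests must be retried, the retry interval must grow as the number of failures increases, and tasks may run concurrently.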

Because these are time-consuming tasks, the first idea was to use threads, and to save resources a thread pool was chosen.

To handle retries at varying intervals, Timer and TimerTask were chosen.

package threadpool;

public class ThreadPoolTest {
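The post combines a thread pool with Timer/TimerTask. Below is a swapped-in alternative, not the author's code: a ScheduledExecutorService provides both the pool and the delayed scheduling in one object. The sketch assumes the HTTP request is wrapped as a `Callable<Boolean>` that returns true on success, and it uses a doubling backoff (the post only says the interval grows with the number of failures). `RetryScheduler` is a hypothetical name.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Retries a task on a scheduled thread pool, doubling the delay after each failure:
// attempt 1 runs immediately, then the waits between attempts are base, 2*base, 4*base, ...
final class RetryScheduler {
    private final ScheduledExecutorService pool;
    private final long baseDelayMs;
    private final int maxAttempts;

    RetryScheduler(int threads, long baseDelayMs, int maxAttempts) {
        this.pool = Executors.newScheduledThreadPool(threads);
        this.baseDelayMs = baseDelayMs;
        this.maxAttempts = maxAttempts;
    }

    // Completes with the number of the successful attempt, or exceptionally after maxAttempts failures.
    CompletableFuture<Integer> submit(Callable<Boolean> task) {
        CompletableFuture<Integer> result = new CompletableFuture<>();
        scheduleAttempt(task, 1, 0L, result);
        return result;
    }

    private void scheduleAttempt(Callable<Boolean> task, int attempt, long delayMs,
                                 CompletableFuture<Integer> result) {
        pool.schedule(() -> {
            boolean ok;
            try {
                ok = Boolean.TRUE.equals(task.call());
            } catch (Exception e) {
                ok = false; // treat exceptions (e.g. I/O errors) as failed attempts
            }
            if (ok) {
                result.complete(attempt);
            } else if (attempt >= maxAttempts) {
                result.completeExceptionally(
                    new IllegalStateException("gave up after " + attempt + " attempts"));
            } else {
                long nextDelay = baseDelayMs << (attempt - 1); // base * 2^(attempt - 1)
                scheduleAttempt(task, attempt + 1, nextDelay, result);
            }
        }, delayMs, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        pool.shutdown();
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        RetryScheduler retry = new RetryScheduler(2, 100, 5);
        // Hypothetical flaky HTTP call: fails twice, then succeeds.
        Callable<Boolean> fakeHttpRequest = () -> calls.incrementAndGet() >= 3;
        int attempt = retry.submit(fakeHttpRequest).join();
        System.out.println("succeeded on attempt " + attempt);
        retry.shutdown();
    }
}
```

Each retry is a new scheduled task rather than a sleeping thread, so concurrent tasks waiting to retry do not tie up pool threads.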

- Oracle: Viewing Database Connections

周凡杨

sql · oracle · connections

First of all, the system tables differ between database versions; you can query the data dictionary to see which tables your version provides.

select * from dict where table_name like '%SESSION%';

This lists several tables, from which you can then obtain session information:

select sid,serial#,status,username,schemaname,osuser,terminal,ma

- Class Inheritance

朱辉辉33

java

Class inheritance improves code reusability, reduces redundant code, and improves extensibility. The Java keyword for inheritance is extends.

Syntax: public class SubclassName extends SuperclassName { }

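A subclass inherits the superclass's non-private fields and ordinary methods, but not its constructors. A subclass can use the superclass's public and protected fields directly. Private fields still exist in the subclass object but can only be reached by calling the superclass's methods (e.g., getters).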

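Subclass methods can override superclass methods, but the overriding method must have the same return type as the superclass's (or, since Java 5, a subtype of it)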
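The inheritance rules above can be shown in a minimal runnable sketch. `Animal`, `Dog`, and `InheritanceDemo` are hypothetical example classes. The class with `main` comes first so the single-file source launcher picks it up.

```java
final class InheritanceDemo {
    public static void main(String[] args) {
        Animal pet = new Dog("Rex", 3);
        System.out.println(pet.describe()); // dynamic dispatch calls Dog.describe()
        System.out.println(pet.getAge());   // private field read through an inherited method
    }
}

class Animal {
    protected String name; // accessible in subclasses (and the same package)
    private int age;       // not accessible by name in subclasses

    Animal(String name, int age) {
        this.name = name;
        this.age = age;
    }

    int getAge() {
        return age;
    }

    String describe() {
        return name + " makes a sound";
    }
}

class Dog extends Animal {
    // Constructors are not inherited, so Dog declares its own and delegates with super(...).
    Dog(String name, int age) {
        super(name, age);
    }

    @Override
    String describe() { // same signature; the return type must match (or be a subtype)
        return name + " barks";
    }
}
```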

- Android Floating Window Effects

肆无忌惮_

android

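While developing a project recently, I needed an animation for a floating layer, similar to Alipay's falling-money animation. The difference is that the requirement was to pop up a window that then shrinks while moving toward a tab in the bottom-right corner of the screen. The effect is shown below: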

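At first I considered a custom View. However, moving it from a separate thread was very laggy, and ListView plus animations could not position it precisely at the target point.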

Later I decided to use a Dialog's dismiss animation instead.

After creating a custom Dialog, in styl

- Setting Up Hadoop in Pseudo-Distributed Mode

林鹤霄

hadoop

Four files need to be modified: 1: vim hadoop-env.sh (line 9) 2: vim core-site.xml <configuration>

- gdb调试命令

aigo

gdb

Original: http://blog.csdn.net/hanchaoman/article/details/5517362

I. Overview of common GDB commands

r (run): runs the program; use it before the program has started. c (continue)

- Socket编程的HelloWorld实例

alleni123

socket

public class Client

{

public static void main(String[] args)

{

Client c=new Client();

c.receiveMessage();

}

public void receiveMessage(){

Socket s=null;

BufferedRea

- Thread Synchronization and Asynchrony

百合不是茶

threads · synchronous · asynchronous

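Multithreading and synchronization: for processes or threads, synchronization can be understood as processes or threads A and B working together. When A reaches a certain point it depends on a result from B, so it stops and signals B to run; B runs as asked and passes the result to A; A then continues. Synchronous means that when a function call is made, the call does not return until it has a result, and meanwhile other threads cannot call this method either.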

Multithreading and asynchrony: multiple threads can do different things at the same time, which involves notifying threads.
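The description above mixes two ideas: a synchronous call that blocks until its result is ready, and mutual exclusion, which keeps other threads out of a method. Below is a minimal sketch that separates them. It uses `synchronized` for mutual exclusion and a `CompletableFuture` for an asynchronous call. The class and method names are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class SyncAsyncDemo {
    private int counter = 0;

    // Mutual exclusion: only one thread at a time may run a synchronized method on this object.
    synchronized void increment() {
        counter++;
    }

    synchronized int get() {
        return counter;
    }

    // Synchronous call: the caller blocks until the result is ready.
    static int slowSquare(int x) {
        try {
            Thread.sleep(50); // simulate slow work
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return x * x;
    }

    // Asynchronous call: returns a future immediately; the result is delivered later.
    static CompletableFuture<Integer> slowSquareAsync(int x, ExecutorService pool) {
        return CompletableFuture.supplyAsync(() -> slowSquare(x), pool);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        SyncAsyncDemo demo = new SyncAsyncDemo();
        CompletableFuture<?>[] jobs = new CompletableFuture<?>[1000];
        for (int i = 0; i < jobs.length; i++) {
            jobs[i] = CompletableFuture.runAsync(demo::increment, pool);
        }
        CompletableFuture.allOf(jobs).join();
        System.out.println("counter = " + demo.get()); // 1000, because increment() is synchronized

        CompletableFuture<Integer> pending = slowSquareAsync(7, pool);
        System.out.println("async call returned; the caller can keep working");
        System.out.println("async result = " + pending.join());
        System.out.println("sync result = " + slowSquare(6));
        pool.shutdown();
    }
}
```

Without `synchronized`, the concurrent `counter++` updates could be lost and the final count could fall below 1000.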


- Analyzing Garbled Chinese Characters in JSP

bijian1013

java · jsp · garbled Chinese text

Garbled Chinese characters are a common problem in JSP development.

First, let's look at where Java's Chinese-character problems come from:

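The Java core and class files are based on Unicode. This gives Java programs good cross-platform portability but also causes some trouble with garbled Chinese text. There are two main reasons,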
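One common way this goes wrong is that bytes written as UTF-8 get decoded as ISO-8859-1, the charset many servlet containers historically assumed when a request declared none. This is an illustration and not necessarily one of the two reasons the truncated post goes on to list. Below is a minimal sketch that garbles 中文 ("Chinese") this way and then repairs it. `MojibakeDemo` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;

// Reproduces a classic cause of garbled Chinese text: UTF-8 bytes decoded as ISO-8859-1.
// The string literal in main uses Unicode escapes for U+4E2D U+6587 ("Chinese"), so the
// demo does not depend on the encoding of the source file itself.
final class MojibakeDemo {
    static String garble(String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);    // 3 bytes per CJK character here
        return new String(utf8, StandardCharsets.ISO_8859_1); // wrong charset: one char per byte
    }

    // ISO-8859-1 maps each byte to exactly one char, so the original bytes can be recovered.
    static String repair(String garbled) {
        byte[] raw = garbled.getBytes(StandardCharsets.ISO_8859_1);
        return new String(raw, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String original = "\u4e2d\u6587";
        String broken = garble(original);
        System.out.println("garbled length: " + broken.length());
        System.out.println("repaired equals original: " + repair(broken).equals(original));
    }
}
```

The repair trick works only because ISO-8859-1 is lossless for bytes. The real fix is to declare the charset consistently, for example by setting the request/response encoding to UTF-8 before reading parameters.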

- Several Ways to Redirect a Page with JavaScript

bijian1013

JavaScript · redirect

There are several ways to redirect a page with JavaScript:

1. window.location.href

<script language="javascript" type="text/javascript">

window.location.href="http://www.baidu.c

- [Struts2, Part 3] Struts2 Action Forwarding (Result) Types

bit1129

struts2

In [Struts2, Part 1] Struts Hello World (http://bit1129.iteye.com/blog/2109365), a simple Action was configured as follows:

<!DOCTYPE struts PUBLIC

"-//Apache Software Foundation//DTD Struts Configurat

- [HBase, Part 11] Working with HBase through the Java API

bit1129

hbase

Comments on the main methods of the Admin class:

1. Creating a table

/**

* Creates a new table. Synchronous operation.

*

* @param desc table descriptor for table

* @throws IllegalArgumentException if the table name is res

- nginx gzip

ronin47

nginx gzip

Nginx GZip Compression

For details, see the Nginx GZip module documentation: http://wiki.nginx.org/HttpGzipModule

A commonly used configuration snippet:

gzip on;

gzip_comp_level 2; # compression level: higher levels compress more but take longer. The default is 1

gzip_types text/css text/javascript; # which file (MIME) types are compressed

gzip_disable

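- java-7. Microsoft Research Asia programming question: determine whether two linked lists intersect. Given the head pointers of two singly linked lists, e.g. h1 and h2, determine whether the two lists intersect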

bylijinnan

java

public class LinkListTest {

/**

* we deal with two main missions:

*

* A.

* 1.we create two joined-List(both have no loop)

* 2.whether list1 and list2 join

* 3.print the join
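Below is a minimal sketch of one standard approach. Like the post's comment, it assumes both lists are acyclic. If two such lists intersect, they share every node from the meeting point onward, so they must end at the same tail node. The `Node` class and method names are hypothetical and are not taken from the post's LinkListTest code.

```java
// Two acyclic singly linked lists that intersect share every node from the meeting point on,
// so they must end at the same tail node. That gives an O(len1 + len2), O(1)-space test.
final class ListIntersection {
    static final class Node {
        final int value;
        Node next;

        Node(int value) {
            this.value = value;
        }
    }

    static boolean intersects(Node h1, Node h2) {
        if (h1 == null || h2 == null) {
            return false;
        }
        return tail(h1) == tail(h2); // compare node identity, not values
    }

    // First shared node (or null): advance the longer list by the length difference,
    // then walk both lists in step until the references meet.
    static Node firstCommon(Node h1, Node h2) {
        int n1 = length(h1), n2 = length(h2);
        Node a = h1, b = h2;
        for (; n1 > n2; n1--) a = a.next;
        for (; n2 > n1; n2--) b = b.next;
        while (a != b) {
            a = a.next;
            b = b.next;
        }
        return a;
    }

    static Node tail(Node h) {
        Node p = h;
        while (p.next != null) p = p.next;
        return p;
    }

    static int length(Node h) {
        int n = 0;
        for (Node p = h; p != null; p = p.next) n++;
        return n;
    }

    // Helper to build a list from values; returns the head (null for no values).
    static Node chain(int... values) {
        Node head = null, last = null;
        for (int v : values) {
            Node node = new Node(v);
            if (head == null) head = node; else last.next = node;
            last = node;
        }
        return head;
    }

    public static void main(String[] args) {
        Node shared = chain(7, 8, 9);
        Node h1 = chain(1, 2);
        tail(h1).next = shared; // h1: 1 -> 2 -> 7 -> 8 -> 9
        Node h2 = chain(5);
        h2.next = shared;       // h2: 5 -> 7 -> 8 -> 9
        System.out.println("intersect: " + intersects(h1, h2));
        System.out.println("first common value: " + firstCommon(h1, h2).value);
    }
}
```

If either list may contain a cycle, this tail test no longer applies, and cycle detection (e.g. Floyd's algorithm) has to come first.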

- Spring Source Code Study: JdbcTemplate batchUpdate Batch Operations

bylijinnan

java · spring

Spring JdbcTemplate's batch operations ultimately rely on the methods JDBC provides; Spring only adapts and wraps them.

JDBC batch operations:

String sql = "INSERT INTO CUSTOMER " +

"(CUST_ID, NAME, AGE) VALUES (?, ?, ?)";

- [JWFD Open-Source Workflow] Latest Progress on a Storage Structure for Large-Scale Topology Matrices

comsci

workflow

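The generation and creation classes are complete. A matrix model with 1 million elements takes only 11 MB of storage. Please refer to my document on cnblogs, "An Attempt at Building a Next-Generation Workflow Storage Structure"; more detailed designs and code will be released gradually.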

Our competitors are very capable too, and I believe you will surely release a large-scale topology scanning and analysis system before we do...

- Base64 Encoding and URL Encoding

cuityang

base64 · url

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.PrintWriter;

import java.io.StringWriter;

import java.io.UnsupportedEncodingException;
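The imports above are cut off before any code. Below is a minimal sketch of both encodings using JDK classes: `java.util.Base64` (Java 8+) and the `Charset` overloads of `URLEncoder`/`URLDecoder` (Java 10+). This is not the author's implementation, and `EncodingDemo` is a hypothetical name.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

final class EncodingDemo {
    // Base64 turns arbitrary bytes into printable ASCII; it is an encoding, not encryption.
    static String toBase64(String text) {
        return Base64.getEncoder().encodeToString(text.getBytes(StandardCharsets.UTF_8));
    }

    static String fromBase64(String encoded) {
        return new String(Base64.getDecoder().decode(encoded), StandardCharsets.UTF_8);
    }

    // The URL-safe alphabet uses '-' and '_' instead of '+' and '/', which have meaning in URLs.
    static String toBase64Url(byte[] data) {
        return Base64.getUrlEncoder().withoutPadding().encodeToString(data);
    }

    // Form-style URL encoding (application/x-www-form-urlencoded): spaces become '+',
    // reserved characters and non-ASCII bytes become %XX escapes.
    static String urlEncode(String text) {
        return URLEncoder.encode(text, StandardCharsets.UTF_8);
    }

    static String urlDecode(String encoded) {
        return URLDecoder.decode(encoded, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(toBase64("hello"));                                  // aGVsbG8=
        System.out.println(urlEncode("a b&c=d"));                               // a+b%26c%3Dd
        System.out.println(toBase64Url(new byte[] {(byte) 0xFB, (byte) 0xFF})); // -_8
    }
}
```

Base64 output uses '+', '/' and '='. It therefore still needs URL encoding (or the URL-safe alphabet) before it is placed in a query string.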

- Session Persistence for Clustered Web Applications

dalan_123

session

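On storing sessions in memcached or Redis, or sharing them via a Terracotta server: Redis is recommended. It can persist cached content, it supports larger individual objects, and it has rich data types, so it can serve more purposes than caching sessions. 1. Storing sessions with a filter: this approach is recommended because it works with a wider range of servers, not only Tomcat, and its implementation principle is relatively simple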

- Database Operations in the Yii Framework Explained: [Create, Read, Update, and Delete Methods, 'AR (Active Record) Pattern']

dcj3sjt126com

database

public function getMinLimit () { $sql = "..."; $result = yii::app()->db->createCo

- Solr StatsComponent (Aggregate Statistics)

eksliang

solr · aggregate queries · solr stats

StatsComponent

Please credit the source when reposting: http://eksliang.iteye.com/blog/2169134

http://eksliang.iteye.com/ I. Overview

Solr can use StatsComponent to run database-style aggregate statistics queries, i.e., min, max, avg, count, and sum.

II. Parameters

- A Baidu Interview Question

greemranqq

bit manipulation · Baidu interview · finding odd occurrences · algorithms · bitmap algorithm

The other day a friend mentioned a Baidu interview question: given {1,1,2,3,3,4,4,4,5,5,5,5}, find the numbers that occur an odd number of times.

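I've copied the original wording; the order is of course not necessarily fixed. Many people's first reaction is to use a map, which certainly works but is not efficient.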

Others think some algorithm xxx should be used; I couldn't think of a good one...!

Others think you should sort first...

Still others think
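The tags mention a bitmap algorithm. Below is a minimal sketch of that idea with `java.util.BitSet`, assuming non-negative values. For contrast, the common XOR trick works only when exactly one value occurs an odd number of times. In this input both 2 (once) and 4 (three times) do, so XOR returns 2 ^ 4 = 6, which is neither. `OddOccurrences` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

final class OddOccurrences {
    // Bitmap approach: flip bit v for every occurrence of v. A bit that ends up set was
    // flipped an odd number of times, so its value occurs an odd number of times.
    // Assumes non-negative values (BitSet indices must be >= 0).
    static List<Integer> oddCounts(int[] values) {
        BitSet bits = new BitSet();
        for (int v : values) {
            bits.flip(v);
        }
        List<Integer> result = new ArrayList<>();
        for (int i = bits.nextSetBit(0); i >= 0; i = bits.nextSetBit(i + 1)) {
            result.add(i);
        }
        return result;
    }

    // XOR of everything: correct only if exactly one value occurs an odd number of times.
    static int xorAll(int[] values) {
        int acc = 0;
        for (int v : values) {
            acc ^= v;
        }
        return acc;
    }

    public static void main(String[] args) {
        int[] data = {1, 1, 2, 3, 3, 4, 4, 4, 5, 5, 5, 5};
        System.out.println("odd counts: " + oddCounts(data)); // [2, 4]
        System.out.println("xor of all: " + xorAll(data));    // 6 = 2 ^ 4, not a single answer
    }
}
```

The bitmap uses one bit per possible value and one pass over the input, which suits dense, bounded value ranges. For sparse or huge ranges, a hash-based count is the safer choice.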

- Spring: Using Spring JDBC in Development

ihuning

spring

There are two ways to use Spring JDBC in practice:

1. Add a JdbcTemplate property to the DAO and have Spring inject it;

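JdbcTemplate is designed to be thread-safe, so you can declare a single instance of it in the IoC container and inject that instance into all DAO instances. JdbcTemplate also takes advantage of Java 1.5 features (autoboxing, generics, variable-length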

- JSON API 1.0 Core Developer's Account | Technical Details You Didn't Know

justjavac

json

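In May 2013, Yehuda Katz completed the first draft of the JSON API (English, Chinese) specification. This happened right after RailsConf, where he and Steve Klabnik had a lively discussion about the technical details of a JSON prototype. In communicating between a single Rails server library, ActiveModel::Serializers, and a single JavaScript client library,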

- Overview of the Website Project Development Process

macroli

work

I. Concept

Website project management means completing website development tasks according to specific standards, within budget, and on time.

II. Requirements Analysis

Project initiation

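After we receive a client's business inquiry, the two parties keep in contact, get to know each other's needs, and hold a basic feasibility discussion. Once they reach a preliminary production agreement, the project needs to be formally initiated. A good practice is to set up a dedicated project team that includes all the necessary roles: project manager, web designer, programmer, tester, editor/documentation, and so on. The project follows a project-manager responsibility system.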

The client's requirements specification

The first step is

- AngularJS: Conditional Expressions with the Ternary Operator

qiaolevip

A little progress every day · Learning never ends · Observing all things · AngularJS

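Background: I needed to modify a single template containing two different pieces of content. The first uses a time difference and the second uses a different name, while the other filters and content are largely the same. I wanted to avoid clumsy if checks for deciding whether to show or hide elements, so I dug into the source code.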

var b = "{{",

a = "}}";

this.startSymbol = function(a) {

- Spark Operators: Counting the Elements in Each RDD Partition

superlxw1234

spark · spark operators · Spark RDD partition elements

Keywords: Spark operators, Spark RDD partitions, number of elements in Spark RDD partitions

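Spark RDDs are partitioned. When creating an RDD you can usually specify the number of partitions. If you don't, an RDD created from a collection defaults to the number of CPU cores allocated to the application, and an RDD created from an HDFS file defaults to the file's number of blocks.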

You can use the RDD's mapPartitionsWithInd

- Spring 3.2.x Support Ends on December 31, 2016

wiselyman

Spring 3

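The Spring team has announced that support for Spring Framework 3.2.x (including Tomcat 6.x) will end on December 31, 2016. Until then, the Spring team will continue to release 3.2.x maintenance versions.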

Please prepare in good time and upgrade to Spring

- fis-pure: A Pure Front-End Solution Built on FIS

zccst

JavaScript

Author: zccst

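Through plugin extensions, FIS can fully support modular front-end development. Using FIS's capacity for being repackaged, we built pure, a full-featured, purely front-end modular solution.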

1. Installing fis-pure

$ fis install -g fis-pure

$ pure -v

0.1.4

2. Download the demo locally

git clone https://github.com/hefangshi/f