1 Import modules

import torch
from torch.utils.data import DataLoader
import torch.nn as nn
import torchvision.datasets as Dataset
import torchvision.transforms as transforms
import sys

2 Load the FashionMNIST data

def data_iter(mnist_train, mnist_test, batch_size, num_workers=0):
    # Use the caller's num_workers, except on Windows, where
    # multi-process data loading is switched off.
    if sys.platform.startswith('win'):
        num_workers = 0
    train_iter = DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
    test_iter = DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
    return train_iter, test_iter

# ToTensor() converts each image to a float tensor of shape (1, 28, 28) in [0, 1].
mnist_train = Dataset.FashionMNIST('./datasets/FashionMNIST', train=True, transform=transforms.ToTensor(),
                                   download=True)
mnist_test = Dataset.FashionMNIST('./datasets/FashionMNIST', train=False, transform=transforms.ToTensor(),
                                  download=True)

batch_size = 256
train_iter, test_iter = data_iter(mnist_train, mnist_test, batch_size, num_workers=0)
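To see what the loaders yield without downloading anything, the sketch below swaps in a `TensorDataset` of random tensors with FashionMNIST's shapes (1×28×28 images, integer labels 0 to 9). The names `fake_images`, `fake_labels`, and `fake_dataset` and the sample count of 600 are illustrative assumptions, not part of the original code.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for FashionMNIST: 600 random "images" and labels.
fake_images = torch.rand(600, 1, 28, 28)
fake_labels = torch.randint(0, 10, (600,))
fake_dataset = TensorDataset(fake_images, fake_labels)

loader = DataLoader(fake_dataset, batch_size=256, shuffle=True, num_workers=0)

# Collect the shape of every batch the loader produces in one pass.
batch_shapes = [(tuple(X.shape), tuple(y.shape)) for X, y in loader]
for x_shape, y_shape in batch_shapes:
    print(x_shape, y_shape)
```

With 600 samples and a batch size of 256, the last batch is smaller than the others, which matters when averaging per-batch quantities over an epoch.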

3 Define the model

class Net(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super(Net, self).__init__()
        self.linear = nn.Linear(num_inputs, num_outputs)

    def forward(self, x):
        # Flatten each (1, 28, 28) image into a 784-element vector.
        return self.linear(x.view(x.shape[0], -1))

num_inputs = 28 * 28
num_outputs = 10
net = Net(num_inputs, num_outputs)
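The shape changes in `forward` can be traced on a small batch. This is a minimal sketch using a bare `nn.Linear` with the same sizes as the model; the batch of 4 random images is a hypothetical input.

```python
import torch
import torch.nn as nn

linear = nn.Linear(28 * 28, 10)   # same layer sizes as the model
X = torch.rand(4, 1, 28, 28)      # hypothetical batch of 4 images

flat = X.view(X.shape[0], -1)     # (4, 1, 28, 28) -> (4, 784)
logits = linear(flat)             # (4, 784) -> (4, 10)

print(flat.shape, logits.shape)
```

The layer outputs raw scores (logits); no softmax is applied in the model because `nn.CrossEntropyLoss` applies log-softmax internally.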

4 Initialize the model parameters

# Weights are drawn from N(0, 0.01^2); biases start at zero.
torch.nn.init.normal_(net.linear.weight, mean=0, std=0.01)
torch.nn.init.zeros_(net.linear.bias)

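5 Define the loss function and optimizer

# CrossEntropyLoss takes raw logits and returns the batch mean by default.
loss = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)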
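With its default settings, `nn.CrossEntropyLoss` applies log-softmax to the logits and averages the negative log-probability of the true class over the batch. The plain-Python sketch below reproduces that computation by hand; the helper names `softmax` and `cross_entropy` and the two-sample batch are illustrative assumptions.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(batch_logits, labels):
    # Mean over the batch of -log(probability assigned to the true class).
    losses = [-math.log(softmax(z)[y]) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)

# Hypothetical 2-sample, 3-class batch.
batch_logits = [[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]]
labels = [0, 2]
print(cross_entropy(batch_logits, labels))
```

For the second sample all logits are equal, so every class gets probability 1/3 and the per-sample loss is ln 3.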

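6 Define how the model is evaluated

def evaluate(data_iter, net):
    acc_sum = 0.0
    n = 0
    # No gradients are needed when only measuring accuracy.
    with torch.no_grad():
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n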
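The accuracy expression inside `evaluate` can be checked on a tiny example. The logits and labels below are made up for illustration.

```python
import torch

# Hypothetical logits for 4 samples over 3 classes.
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [0.2, 0.1, 3.0],
                       [0.3, 1.5, 0.2],
                       [1.0, 0.9, 0.8]])
labels = torch.tensor([0, 2, 0, 0])

predicted = logits.argmax(dim=1)   # index of the largest logit in each row
correct = (predicted == labels).float().sum().item()
accuracy = correct / labels.shape[0]
print(predicted.tolist(), accuracy)  # [0, 2, 1, 0] 0.75
```

Three of the four predictions match their labels, so the accuracy is 0.75.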

7训练模型的函数

def train(net, train_iter, test_iter, loss, num_epochs, batch_size, lr, optimizer):

train_l_sum = 0.0

train_acc_sum = 0.0

n = 0

for epoch in range(1, num_epochs + 1):

for X, y in train_iter:

y_hat = net(X)

l = loss(y_hat, y).sum()

optimizer.zero_grad()

l.backward()

optimizer.step()

train_l_sum += l.item()

train_acc_sum += (y_hat.argmax(dim=1) == y).float().sum().item()

n += y.shape[0]

test_acc = evaluate(test_iter, net)

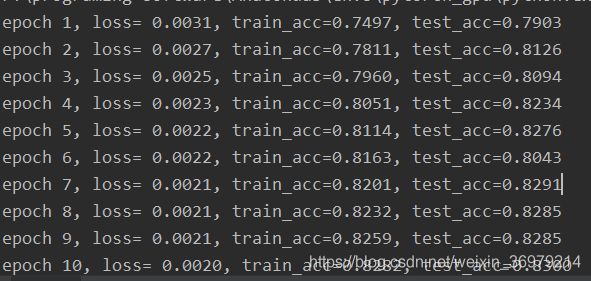

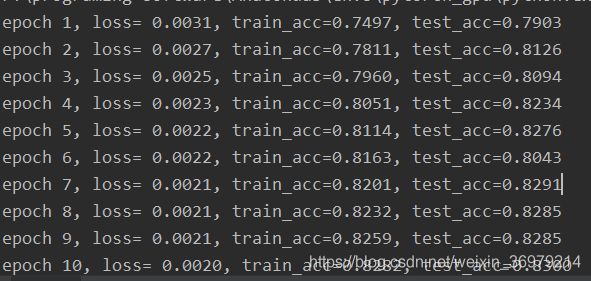

print('epoch %d, loss= %.4f, train_acc=%.4f, test_acc=%.4f' % (

epoch, train_l_sum / n, train_acc_sum / n, test_acc))

8训练模型

num_epochs = 10

lr = 0.1

train(net, train_iter, test_iter, loss, num_epochs, batch_size, lr, optimizer)

输出
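Because `nn.CrossEntropyLoss` returns the batch mean by default, and the final batch is usually smaller than the rest, a per-sample loss for the epoch comes from weighting each batch mean by its batch size. A minimal sketch of that arithmetic follows; the `(mean_loss, batch_size)` pairs are made-up numbers, not results from this model.

```python
# Hypothetical (mean_loss, batch_size) pairs for one epoch.
batches = [(0.80, 256), (0.60, 256), (0.50, 88)]

loss_sum = sum(mean_loss * size for mean_loss, size in batches)
n = sum(size for _, size in batches)

epoch_loss = loss_sum / n                                # per-sample average
naive = sum(m for m, _ in batches) / len(batches)        # ignores batch sizes
print(round(epoch_loss, 4), round(naive, 4))
```

The two values differ because the unweighted mean gives the short final batch the same weight as the full ones.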

10 Complete code

import torch
from torch.utils.data import DataLoader
import torch.nn as nn
import torchvision.datasets as Dataset
import torchvision.transforms as transforms
import sys

mnist_train = Dataset.FashionMNIST('./datasets/FashionMNIST', train=True, transform=transforms.ToTensor(),
                                   download=True)
mnist_test = Dataset.FashionMNIST('./datasets/FashionMNIST', train=False, transform=transforms.ToTensor(),
                                  download=True)

def data_iter(mnist_train, mnist_test, batch_size, num_workers=0):
    # Use the caller's num_workers, except on Windows, where
    # multi-process data loading is switched off.
    if sys.platform.startswith('win'):
        num_workers = 0
    train_iter = DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
    test_iter = DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
    return train_iter, test_iter

batch_size = 256
train_iter, test_iter = data_iter(mnist_train, mnist_test, batch_size, num_workers=0)

class Net(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super(Net, self).__init__()
        self.linear = nn.Linear(num_inputs, num_outputs)

    def forward(self, x):
        return self.linear(x.view(x.shape[0], -1))

num_inputs = 28 * 28
num_outputs = 10
net = Net(num_inputs, num_outputs)

torch.nn.init.normal_(net.linear.weight, mean=0, std=0.01)
torch.nn.init.zeros_(net.linear.bias)

loss = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)

num_epochs = 10
lr = 0.1

def evaluate(data_iter, net):
    acc_sum = 0.0
    n = 0
    # No gradients are needed when only measuring accuracy.
    with torch.no_grad():
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

def train(net, train_iter, test_iter, loss, num_epochs, batch_size, lr, optimizer):
    # batch_size and lr are unused here: the data loaders and the
    # optimizer already carry those settings.
    for epoch in range(1, num_epochs + 1):
        # Reset the running totals so each epoch reports its own metrics.
        train_l_sum = 0.0
        train_acc_sum = 0.0
        n = 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y)  # mean loss over the batch
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            # Weight the batch mean by the batch size so that
            # train_l_sum / n is a per-sample average.
            train_l_sum += l.item() * y.shape[0]
            train_acc_sum += (y_hat.argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
        test_acc = evaluate(test_iter, net)
        print('epoch %d, loss= %.4f, train_acc=%.4f, test_acc=%.4f' % (
            epoch, train_l_sum / n, train_acc_sum / n, test_acc))

train(net, train_iter, test_iter, loss, num_epochs, batch_size, lr, optimizer)