初入领域自适应DomainAdaptation

15年的文章:Unsupervised Domain Adaptation by Backpropagation

雷郭出品

-

- DomainAdatation的定义

- 本文的领域自适应的独特之处

- 本文方法的梗概

- 本文的citing达到接近1900的原因(截至2020年11月初)

- 本文的GRL的作用

- 本文方法详情介绍

- 本文的最终目标

- 本文的框架图

- pytorch实现代码

DomainAdatation的定义

Learning a discriminative classifier or other predictor in the presence of a shift between training and test distributions is known asdomain adaptation(DA).

在训练分布和测试分布之间发生变化的情况下学习判别分类器或其他预测因子称为域适应(DA)。

领域自适应和迁移学习的关系

本文的领域自适应的独特之处

Unlike most previous papers on domain adaptation that worked with fixed feature representations, we focus on combining domain adaptation and deep feature learning within one training process (deep domain adaptation). Our goal is to embed domain adaptation into the process of learning representation, so that the final classification decisions are made based on features that are both discriminative and invariant to the change of domains, i.e. have the same or very similar distributions in the source and the target domains. In this way, the obtained feed-forward network can be applicable to the target domain without being hindered by the shift between the two domains.

不同于以往大多数关于领域适应的论文都使用固定的特征表示,我们专注于在一个训练过程(深层域适应)中结合领域适应和深层特征学习。我们的目标是将领域适应嵌入到学习表示的过程中,使得最终的分类决策的做出是基于对域的变化既有区别又不变(感觉这要求有点高)的特征,即在源域和目标域中具有相同或非常相似的分布。这样,所得到的前馈网络可以应用于目标域,而不受两个域之间的转变的阻碍。

本文方法的梗概

We thus focus on learning features that combine (i) discriminativeness and (ii) domain-invariance.This is achieved by jointly optimizing the underlying features as well as two discriminative classifiers operating on these features: (i) the label predictor that predicts class labels and is used both during training and at test time and (ii) the domain classifier that discriminates between the source andthe target domains during training.

While the parameters of the classifiers are optimized in order to minimize their error on the training set, the parameters of the underlying deep feature mapping are optimized in order to minimize the loss of the label classifier and to maximize the loss of the domain classifier. The latter encourages domain-invariant features to emerge in the course of the optimization.

两个分类器:类别分类和领域分类

两个分类器的参数都是来最小化各自的损失

特征提取层的参数既要最小化类别损失,又要最大化领域损失

正是这个特征提取层的既又 使得领域不变性特征得以出现

个人感觉上面这一段好复杂(各种损失,各种最大最小),因为它是本文核心

本文的citing达到接近1900的原因(截至2020年11月初)

Crucially, we show that all three training processes(这里的三对应的是什么?有两种可能) can be embedded into an appropriately composed deep feed-forward network (Figure 1) that uses standard layers andloss functions, and can be trained using standard backpropagation algorithms based on stochastic gradient descent orits modifications (e.g. SGD with momentum).

Our approach is generic as it can be used to add domain adaptation to any existing feed-forward architecture that is trainable by backpropagation.

本文的算法很通用,可以嵌入到一般的神经网络(现在绝大多数的神经网络用的都是反向传播)当中

本文的GRL的作用

In practice, the only non-standard component of the proposed architecture is a rather trivial gradient reversal layer that leaves the input unchanged during forward propagation and reverses the gradient by multiplying it by a negative scalar during the back propagation.

GRL怎么做到前向传播保持输入不变?

后向传播反转梯度?

本文方法详情介绍

-

We now detail the proposed model for the domain adaptation. We assume that the model works with input samples x∈X, where X is some input space and certain labels (output) y from the label space Y. Below,we assume classification problems where Y is a finite set(Y={1,2,…L}), however our approach is generic and can handle any output label space that other deep feedforward models can handle. We further assume that there exist two distributions S(x,y) and T(x,y) on X⊗Y,which will be referred to as the source distribution and the target distribution (or the source domain and the target domain). Both distributions are assumed complex and unknown, and furthermore similar but different (in otherwords,S is “shifted” from T by some domain shift).

源域和目标域;既类似又不同(这个是对应前面的既又?) -

At training time,we have an access to a large set of training samples{x1,x2,…,xN} from both the source and the target domains distributed according to the marginal distributions S(x) and T(x) .

训练的时候源域和目标域的样本都得使用 -

We denote with di the binary variable (domain label) for the ith example, which indicates whetherxicome from the source distribution (xi∼S(x) if di=0) orfrom the target distribution (xi∼T(x) if di=1). For the examples from the source distribution (di=0) the corresponding labels yi∈Y are known at training time. For the examples from the target domains, we do not know the labels at training time, and we want to predict such labels at test time.

di的取值(0或者1):源域的样本di=0,目标域的样本di=1

但是由于目标域的样本的y标签不知道(因为本文的题目就是unsupervised domain adaptation)

(那目标域的样本在训练的时候可以用来干嘛,可用来算领域误差(因为目标域和源域都有di标签))

(我觉得目标域的标签肯定是有的,只是训练的时候不让网络知道,不然测试的时候怎么判断准确率) -

We now define a deep feed-forward architecture that for each input x predicts its label y∈Y and its domain label d∈ {0,1}.

我的疑问是:训练的时候y标签和d标签自然都需要算出来,那测试的时候d标签还需要吗

我暂时觉得不需要 -

We decompose such mapping into three parts.We assume that the input x is first mapped by a mapping Gf(a feature extractor) to a D-dimensional feature vector f ∈R^D. The feature mapping may also include several feed-forward layers and we denote the vector of parameters of all layers in this mapping as θf, i.e.f=Gf(x;θf).Then, the feature vector f is mapped by a mapping Gy(label predictor) to the label y, and we denote the parameters of this mapping with θy. Finally, the same feature vector f is mapped to the domain label d by a mapping Gd(domain classifier) with the parameters θd.

输入x经过特征提取层得到D维向量,然后D维向量分别输入两个分支,即分类和领域分支。

然后注意这里的Gf,Gy,Gd分别对应三个局部网络

θf,θy,θd分别对应三个局部网络的参数 -

重点内容来了

-

During the learning stage, we aim to minimize the label prediction loss on the annotated part (i.e. the source part)of the training set, and the parameters of both the feature extractor and the label predictor are thus optimized in order to minimize the empirical loss for the source domain samples. This ensures the discriminativeness of the features f and the overall good prediction performance of the combination of the feature extractor and the label predictor on the source domain.

优化特征提取器和类别分类器的参数从而最小化源域的类别标签预测损失 -

At the same time, we want to make the features f domain-invariant.That is, we want to make the distributions S(f) ={Gf(x;θf)|x∼S(x)} and T(f) ={Gf(x;θf)|x∼T(x)} to be similar.

我觉得上面的S(f)和T(f)就是分别指代源域和目标域的样本经过特征提取层得到的向量

训练的目标是希望得到的特征提取层对源域和目标域的映射是相似的。 -

Under the covariate shift assumption, this would make the label prediction accuracy on the target domain to be the same as on the sourcedomain (Shimodaira, 2000).

这里的covariate shift assumption不懂 -

Measuring the dissimilarity of the distributions S(f) and T(f) is however non-trivial,given that f is high-dimensional, and that the distributions themselves are constantly changing as learning progresses

non-trival的意思就是significant;

然后这里说到:学习过程中分布会一直变化,好像是这么回事,因为映射在变化,从a分布到b分布。 -

One way to estimate the dissimilarity is to look at the loss of the domain classifier Gd, provided that the parameters θd of the domain classifier have been trained to discriminate between the two feature distributions in an optimal way.

估计差异性的一种方法是观察域分类器Gd的损失,前提是域分类器的参数θd已被训练以最佳方式区分两个特征分布。(域分类器这里说要区分,我在别的博客中看到要混淆。。) -

This observation leads to our idea. At training time, in order to obtain domain-invariant features, we seek the parameters θf of the feature mapping that maximize the loss of the domain classifier (by making the two feature distributions as similar as possible(我觉得:当源域和目标域的样本经过特征提取层映射得到的向量分布相似的时候,由于领域标签是各自的0和1,所以此时的领域误差是最大的)), while simultaneously seeking the parameters θd of the domain classifier that minimize the loss of the domain classifier. (θd 要最小化领域损失的目的个人认为是训练出好的领域分类器,这里的θd 和θf的关系太像GAN中的生成器和辨别器;)In addition, we seek to minimize the loss of the label predictor.

(最后,类别分类器的损失自然是也是要最小化的) -

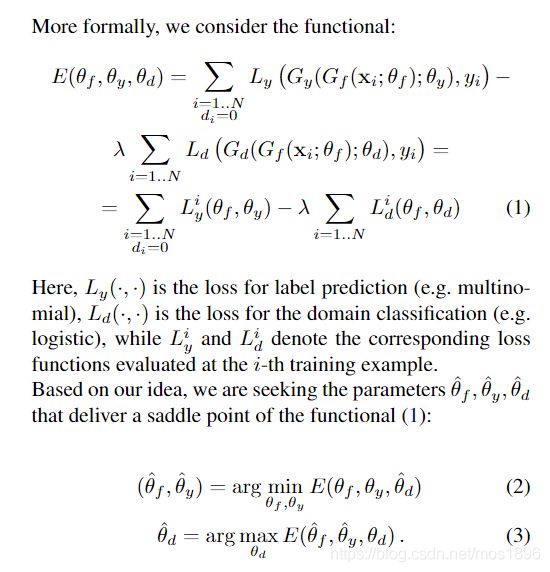

下面的这个公式劝退

分析一下(2)和(3)

为什么这里的θf和θy组队

而θd就一个人

先把这个问题放一边

接下来考虑argmin和argmax的问题

θf是既想minimize类别损失,又想maximize领域损失

θy是想minimize类别损失,

θd是想minimize领域损失

(从上面的分析来看,好像也只能这样分组了)

对于(2)式来说,当θd已经固定为θd^时,我们只考虑类别损失,此时类别损失最小就是E最小

对于(3)式来说,当θf和θy已经别固定为θf^ 和 θy^时,我们只考虑领域损失,此时领域损失最小就是E最大(因为有个负号)

论文原文如下:

At the saddle point, the parameters θd of the domain classifier θd minimize the domain classification loss (since it enters into (1) with the minus sign) while the parameters θy of the label predictor minimize the label prediction loss. The feature mapping parameters θf minimize the label prediction loss (i.e. the features are discriminative), while maximizing the domain classification loss (i.e. the features are domain-invariant). The parameter λ controls the trade-off between the two objectives that shape the features during learning.

我想说的是这里的trade-off对应的two objectives应该是上面的argmin和argmax,即式2和式3

又或者是跟 θf 的maximize和minimize相关

感觉是后者,因为two objectives,极其对应上一句的θf的两个优化目标

我又有一种想法:

从:

θf是既想minimize类别损失,又想maximize领域损失

θy是想minimize类别损失,

θd是想minimize领域损失

如果θf不作妖,即目的只有一个,那E中的领域损失没必要弄个负号,直接正号就行了

但是实际θf非要作妖

加了负号之后,可以将θf的maximize(领域)转化为minimize(E)

所以θf在E中的作用就统一为minimize

θy也是minimize

所以它俩一队

但是此时却将θd的minmize(领域)转换为maxmize(E)

所以θd只能一个人

那具体这两个小分队是怎么求解的?一个要argminE,一个要argmaxE?

从4,5,6式来看

前面分析过所以θf在E中的作用就统一为minimize

所以θf的更新可以直接套用梯度下降,即如4式所示,括号内是E对θf的偏导数

同理θy也是如此,其更新如5式所示

θd则比较特殊,因为其要最大化E,所以用梯度上升,即θd+u*(E对θd的偏导数)

整理的θd+u * (负的lamuta * Ld对θd的偏导),括号去掉加号就变成了减号

但是疑惑的点是lamuta不见了,我觉得就是u和u*lamuta的差别仅仅是大小不一样(即更新的程度不一样),所以为了简便可以去掉

(这篇文章很多内容都是我的初步理解,可能理解的完全不对,但是总给把自己的观点和看法提出来)

现在我们已经能确定4,5,6式的合理性,

那如何将其与我们常用的反向传播结合起来

先来看看论文原文:

The updates (4)-(6) are very similar to stochastic gradient descent (SGD) updates for a feed-forward deep model that comprises(包括) feature extractor fed into the label predictor and into the domain classifier. The difference is the−λ factor in (4) (the difference is important, as without such factor stochastic gradient descent would try to make features dissimilar across domains in order to minimize the domain classification loss).

非常像SGD,唯一的区别就是λ,λ至关重要于领域不变性,所以只需要处理这个λ,本文引入GRL模块

Although direct implementation of (4)-(6) as SGD is not possible, it is highly desirable to reduce the updates (4)-(6) to some form of SGD, since SGD (andits variants) is the main learning algorithm implemented inmost packages for deep learning.

直接当成SGD不行,那就适当变形

14.Fortunately, such reduction can be accomplished by introducing a special gradient reversal layer(GRL) defined as follows. The gradient reversal layer has no parameters associated with it (梯度反转层没有相关参数,感觉是很重要的知识点)(apart from the meta-parameter λ, which is not updated by back propagation). During the forward propagation, GRL acts as an identity transform. During the back propagation though, GRL takes the gradient from the subsequent level, multiplies it by −λ and passes it to the preceding layer. Implementing such layer using existing object-oriented packages for deep learning is simple(使用现有的面向对象的包来实现这种层很简单), as defining procedures for forwardprop (identity transform),backprop (multiplying by a constant), and parameter update (nothing) is trivial(简单).

之前的non-trivial我是翻译成significant,事实上还有复杂的意思。

The GRL as defined above is inserted between the feature extractor and the domain classifier, resulting in the architecture depicted in Figure 1. As the back propagation process passes through the GRL, the partial derivatives of the loss that is downstream the GRL (i.e.Ld) w.r.t. the layerparameters that are upstream the GRL (i.e.θf) get multiplied by−λ(GRL下游的loss关于GRL上游的θf的偏导数乘以−λ), i.e.∂Ld/∂θf is effectively replaced with−λ*∂Ld/∂θf.Therefore, running SGD in the resulting model implements the updates (4)-(6) and converges to a saddle point of (1).Mathematically, we can formally treat the gradient reversal layer as a “pseudo-function” Rλ(x) defined by two (incompatible) equations describing its forward- and back propagation behaviour:

where I is an identity matrix. We can then define the objective “pseudo-function” (跟前一段的应该不是同一个东西,只是都叫伪函数)of (θf,θy,θd) that is being optimized by the stochastic gradient descent within our method:

但是当我们把Rλ代入到9式,感觉是不是前向传播的时候少了−λ

我觉应该是上一段说的Rλ的前向和后向incompatible所导致的,

假设compatible的话,那代入Rλ就应该是前向后向都满足

而这里只满足后向(我的看法:Ly和Ld本身在前向的时候没有变化,而在反向传播时E对参数的偏导也没有变化,所以参数的更新就不会有变化,前向的最终目的是为了后向,我直接后向不变,你前向变不变跟我没关系,所以感觉等价)

(两个E不完全相同,修改后的带波浪线)

那从数学上如何解释呢??希望有人解惑。

- 小结

Running updates (4)-(6) can then be implemented as doing SGD for (9) and leads to the emergence of features that are domain-invariant and discriminative at the same time.After the learning, the label predictor y(x) =Gy(Gf(x;θf);θy) can be used to predict labels for samples from the target domain (as well as from the sourcedomain).

本文的最终目标

Our ultimate goal is to be able to predict labels y given the input x for the target distribution.

对于目标域也能做出正确的分类.

本文的框架图

注意上面图中的Otherwise,否则,也就是说实际不是这样。

pytorch实现代码

之后再添

言简意赅的博客

不明觉厉的知乎