如何快速制作一个垃圾分类图像识别器(卷积神经网络)

前言:本文分为四个部分,耐心阅读,会学到不少,另外,我会将代码和所需的文件供大家参考。

在制作这个垃圾分类图像识别器,不需要写很多代码,所以这篇文章完全适用于小白,我会教大家一步一步来学习。

第一部分(数据集的获取)

数据的来源通常从开源的网站或者爬虫获取,我总结了几个专门开源的数据集网站提供给大家参考,当然也可以自己用爬虫来爬取数据。

数据集网站:UCI机器学习库![]() https://archive.ics.uci.edu/ml/index.php

https://archive.ics.uci.edu/ml/index.php

Kaggle![]() https://www.kaggle.com/爬虫代码(爬取图片数据)

https://www.kaggle.com/爬虫代码(爬取图片数据)

import requests

import re

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.125 Safari/537.36'}

name = input('请输入要爬取的图片类别:')

num = 0

num_1 = 0

num_2 = 0

x = input('请输入要爬取的图片数量?(1等于60张图片,2等于120张图片):')

list_1 = []

for i in range(int(x)):

name_1 = os.getcwd()

name_2 = os.path.join(name_1, 'data/' + name)

url = 'https://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + name + '&pn=' + str(i * 30)

res = requests.get(url, headers=headers)

htlm_1 = res.content.decode()

a = re.findall('"objURL":"(.*?)",', htlm_1)

if not os.path.exists(name_2):

os.makedirs(name_2)

for b in a:

try:

b_1 = re.findall('https:(.*?)&', b)

b_2 = ''.join(b_1)

if b_2 not in list_1:

num = num + 1

img = requests.get(b)

f = open(os.path.join(name_1, 'data/' + name, name + str(num) + '.jpg'), 'ab')

print('---------正在下载第' + str(num) + '张图片----------')

f.write(img.content)

f.close()

list_1.append(b_2)

elif b_2 in list_1:

num_1 = num_1 + 1

continue

except Exception as e:

print('---------第' + str(num) + '张图片无法下载----------')

num_2 = num_2 + 1

continue

print('下载完成,总共下载{}张,成功下载:{}张,重复下载:{}张,下载失败:{}张'.format(num + num_1 + num_2, num, num_1, num_2))第二部分(数据的预处理)

数据的清洗:如果你用爬虫获取的数据,则会有有大量的数据相似,或者图片的质量较差,噪声大,这会影响到模型的过拟合或者欠拟合。数据的清洗有:重复观测处理,缺失值处理,异常值处理。详情的处理方法参考这篇文章: 常用的数据清洗方法![]() https://blog.csdn.net/m0_46298813/article/details/118946173

https://blog.csdn.net/m0_46298813/article/details/118946173

第三部分(训练模型)

划分数据集

在训练模型之前,要将数据进行划分,如果在开源网站上获取的数据,可能已经划分了训练集和测试集,验证集,如果自己爬取的数据,对其进行划分为训练集、测试集和验证集,下面是数据划分的代码。

import os

import random

from shutil import copy2

def data_set_split(src_data_folder, target_data_folder, train_scale=0.8, val_scale=0.2, test_scale=0.0):

'''

读取源数据文件夹,生成划分好的文件夹,分为trian、val、test三个文件夹进行

:param src_data_folder: 源文件夹 E:/biye/gogogo/note_book/torch_note/data/utils_test/data_split/src_data

:param target_data_folder: 目标文件夹 E:/biye/gogogo/note_book/torch_note/data/utils_test/data_split/target_data

:param train_scale: 训练集比例

:param val_scale: 验证集比例

:param test_scale: 测试集比例

:return:

'''

print("开始数据集划分")

class_names = os.listdir(src_data_folder)

# 在目标目录下创建文件夹

split_names = ['train', 'val', 'test']

for split_name in split_names:

split_path = os.path.join(target_data_folder, split_name)

if os.path.isdir(split_path):

pass

else:

os.mkdir(split_path)

# 然后在split_path的目录下创建类别文件夹

for class_name in class_names:

class_split_path = os.path.join(split_path, class_name)

if os.path.isdir(class_split_path):

pass

else:

os.mkdir(class_split_path)

# 按照比例划分数据集,并进行数据图片的复制

# 首先进行分类遍历

for class_name in class_names:

current_class_data_path = os.path.join(src_data_folder, class_name)

current_all_data = os.listdir(current_class_data_path)

current_data_length = len(current_all_data)

current_data_index_list = list(range(current_data_length))

random.shuffle(current_data_index_list)

train_folder = os.path.join(os.path.join(target_data_folder, 'train'), class_name)

val_folder = os.path.join(os.path.join(target_data_folder, 'val'), class_name)

test_folder = os.path.join(os.path.join(target_data_folder, 'test'), class_name)

train_stop_flag = current_data_length * train_scale

val_stop_flag = current_data_length * (train_scale + val_scale)

current_idx = 0

train_num = 0

val_num = 0

test_num = 0

for i in current_data_index_list:

src_img_path = os.path.join(current_class_data_path, current_all_data[i])

if current_idx <= train_stop_flag:

copy2(src_img_path, train_folder)

# print("{}复制到了{}".format(src_img_path, train_folder))

train_num = train_num + 1

elif (current_idx > train_stop_flag) and (current_idx <= val_stop_flag):

copy2(src_img_path, val_folder)

# print("{}复制到了{}".format(src_img_path, val_folder))

val_num = val_num + 1

else:

copy2(src_img_path, test_folder)

# print("{}复制到了{}".format(src_img_path, test_folder))

test_num = test_num + 1

current_idx = current_idx + 1

print("*********************************{}*************************************".format(class_name))

print(

"{}类按照{}:{}:{}的比例划分完成,一共{}张图片".format(class_name, train_scale, val_scale, test_scale, current_data_length))

print("训练集{}:{}张".format(train_folder, train_num))

print("验证集{}:{}张".format(val_folder, val_num))

print("测试集{}:{}张".format(test_folder, test_num))

if __name__ == '__main__':

src_data_folder = "C:/Users/dongg/Downloads/Compressed/archive" # todo 修改你的原始数据集路径

target_data_folder = "C:/Users/dongg/Downloads/Compressed/target" # todo 修改为你要存放的路径

data_set_split(src_data_folder, target_data_folder)

开发环境

本次教程需要大家实现配置好python的环境,和一些所需的包。

keras==2.8.0

Keras-Preprocessing==1.1.2

kiwisolver==1.4.2

libclang==14.0.1

Markdown==3.3.6

matplotlib==3.5.1

numpy==1.22.3

oauthlib==3.2.0

opencv-python==4.5.5.64

opt-einsum==3.3.0

packaging==21.3

Pillow==9.1.0

protobuf==3.20.1

pyasn1==0.4.8

pyasn1-modules==0.2.8

pycocotools==2.0.4

pyparsing==3.0.8

PyQt5==5.15.6

PyQt5-Qt5==5.15.2

PyQt5-sip==12.10.1

PyQt5-stubs==5.15.6.0

python-dateutil==2.8.2

requests==2.27.1

requests-oauthlib==1.3.1

rsa==4.8

scipy==1.8.0

six==1.16.0

tensorboard==2.8.0

tensorboard-data-server==0.6.1

tensorboard-plugin-wit==1.8.1

tensorflow==2.8.0

tensorflow-io-gcs-filesystem==0.25.0

termcolor==1.1.0

tf-estimator-nightly==2.8.0.dev2021122109

typing-extensions==4.2.0

urllib3==1.26.9

Werkzeug==2.1.2

wrapt==1.14.0

zipp==3.8.0

训练模型

下面的代码可以训练cnn模型(卷积神经网络模型)

import tensorflow as tf

import matplotlib.pyplot as plt

from time import *

# 数据集加载函数,指明数据集的位置并统一处理为imgheight*imgwidth的大小,同时设置batch

def data_load(data_dir, test_data_dir, img_height, img_width, batch_size):

# 加载训练集

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

data_dir,

label_mode='categorical',

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

# 加载测试集

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

test_data_dir,

label_mode='categorical',

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

class_names = train_ds.class_names

# 返回处理之后的训练集、验证集和类名

return train_ds, val_ds, class_names

# 构建CNN模型

def model_load(IMG_SHAPE=(224, 224, 3), class_num=12):

# 搭建模型

model = tf.keras.models.Sequential([

# 对模型做归一化的处理,将0-255之间的数字统一处理到0到1之间

tf.keras.layers.experimental.preprocessing.Rescaling(1. / 255, input_shape=IMG_SHAPE),

# 卷积层,该卷积层的输出为32个通道,卷积核的大小是3*3,激活函数为relu

tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),

# 添加池化层,池化的kernel大小是2*2

tf.keras.layers.MaxPooling2D(2, 2),

# Add another convolution

# 卷积层,输出为64个通道,卷积核大小为3*3,激活函数为relu

tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),

# 池化层,最大池化,对2*2的区域进行池化操作

tf.keras.layers.MaxPooling2D(2, 2),

# 将二维的输出转化为一维

tf.keras.layers.Flatten(),

# The same 128 dense layers, and 10 output layers as in the pre-convolution example:

tf.keras.layers.Dense(128, activation='relu'),

# 通过softmax函数将模型输出为类名长度的神经元上,激活函数采用softmax对应概率值

tf.keras.layers.Dense(class_num, activation='softmax')

])

# 输出模型信息

model.summary()

# 指明模型的训练参数,优化器为sgd优化器,损失函数为交叉熵损失函数

model.compile(optimizer='sgd', loss='categorical_crossentropy', metrics=['accuracy'])

# 返回模型

return model

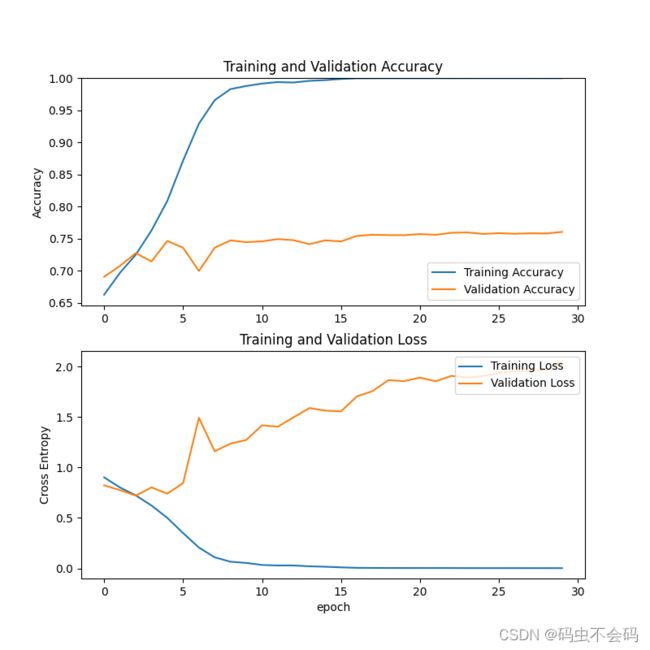

# 展示训练过程的曲线

def show_loss_acc(history):

# 从history中提取模型训练集和验证集准确率信息和误差信息

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

# 按照上下结构将图画输出

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.ylabel('Accuracy')

plt.ylim([min(plt.ylim()), 1])

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.ylabel('Cross Entropy')

plt.title('Training and Validation Loss')

plt.xlabel('epoch')

plt.savefig('results/results_cnn.png', dpi=100)

def train(epochs):

# 开始训练,记录开始时间

begin_time = time()

# todo 加载数据集, 修改为你的数据集的路径

train_ds, val_ds, class_names = data_load("C:/Users/dongg/Desktop/train/target/train",

"C:/Users/dongg/Desktop/train/target/val", 224, 224, 16)

print(class_names)

# 加载模型

model = model_load(class_num=len(class_names))

# 指明训练的轮数epoch,开始训练

history = model.fit(train_ds, validation_data=val_ds, epochs=epochs)

# todo 保存模型, 修改为你要保存的模型的名称

model.save("models/cnn_apple_leaf_disease.h5")

# 记录结束时间

end_time = time()

run_time = end_time - begin_time

print('该循环程序运行时间:', run_time, "s") # 该循环程序运行时间: 1.4201874732

# 绘制模型训练过程图

show_loss_acc(history)

if __name__ == '__main__':

train(epochs=30)

训练好的模型会保存在models文件夹中,训练过程图像会保存在results文件夹中。

第四部分(测试模型)

import tensorflow as tf

from PyQt5.QtGui import *

from PyQt5.QtCore import *

from PyQt5.QtWidgets import *

import sys

import cv2

from PIL import Image

import numpy as np

import shutil

class MainWindow(QTabWidget):

# 初始化

def __init__(self):

super().__init__()

self.setWindowIcon(QIcon('images/img_19335.jpg'))

self.setWindowTitle('Recycle') # todo 修改系统名称

# 模型初始化

self.model = tf.keras.models.load_model("models/cnn_laji_shibie_disease.h5") # todo 修改模型名称

self.to_predict_name = "images/tim9.jpeg" # todo 修改初始图片,这个图片要放在images目录下

self.class_names = ['其他垃圾', '厨余垃圾','可回收垃圾','有害垃圾'] # todo 修改类名,这个数组在模型训练的开始会输出

self.resize(900, 700)

self.initUI()

# 界面初始化,设置界面布局

def initUI(self):

main_widget = QWidget()

main_layout = QHBoxLayout()

font = QFont('楷体', 15)

# 主页面,设置组件并在组件放在布局上

left_widget = QWidget()

left_layout = QVBoxLayout()

img_title = QLabel("样本")

img_title.setFont(font)

img_title.setAlignment(Qt.AlignCenter)

self.img_label = QLabel()

img_init = cv2.imread(self.to_predict_name)

h, w, c = img_init.shape

scale = 400 / h

img_show = cv2.resize(img_init, (0, 0), fx=scale, fy=scale)

cv2.imwrite("images/show.png", img_show)

img_init = cv2.resize(img_init, (224, 224))

cv2.imwrite('images/target.png', img_init)

self.img_label.setPixmap(QPixmap("images/show.png"))

left_layout.addWidget(img_title)

left_layout.addWidget(self.img_label, 1, Qt.AlignCenter)

left_widget.setLayout(left_layout)

right_widget = QWidget()

right_layout = QVBoxLayout()

btn_change = QPushButton(" 上传图片 ")

btn_change.clicked.connect(self.change_img)

btn_change.setFont(font)

btn_predict = QPushButton(" 开始识别 ")

btn_predict.setFont(font)

btn_predict.clicked.connect(self.predict_img)

label_result = QLabel(' 识别结果 ')

self.result = QLabel("等待识别")

label_result.setFont(QFont('楷体', 16))

self.result.setFont(QFont('楷体', 24))

right_layout.addStretch()

right_layout.addWidget(label_result, 0, Qt.AlignCenter)

right_layout.addStretch()

right_layout.addWidget(self.result, 0, Qt.AlignCenter)

right_layout.addStretch()

right_layout.addStretch()

right_layout.addWidget(btn_change)

right_layout.addWidget(btn_predict)

right_layout.addStretch()

right_widget.setLayout(right_layout)

main_layout.addWidget(left_widget)

main_layout.addWidget(right_widget)

main_widget.setLayout(main_layout)

# 关于页面,设置组件并把组件放在布局上

about_widget = QWidget()

about_layout = QVBoxLayout()

about_title = QLabel('欢迎使用垃圾识别系统') # todo 修改欢迎词语

about_title.setFont(QFont('楷体', 18))

about_title.setAlignment(Qt.AlignCenter)

about_img = QLabel()

about_img.setPixmap(QPixmap('images/bj.jpg'))

about_img.setAlignment(Qt.AlignCenter)

label_super = QLabel("作者:recycle团队") # todo 更换作者信息

label_super.setFont(QFont('楷体', 12))

# label_super.setOpenExternalLinks(True)

label_super.setAlignment(Qt.AlignRight)

about_layout.addWidget(about_title)

about_layout.addStretch()

about_layout.addWidget(about_img)

about_layout.addStretch()

about_layout.addWidget(label_super)

about_widget.setLayout(about_layout)

# 添加注释

self.addTab(main_widget, '主页')

self.addTab(about_widget, '关于')

self.setTabIcon(0, QIcon('images/主页面.png'))

self.setTabIcon(1, QIcon('images/关于.png'))

# 上传并显示图片

def change_img(self):

openfile_name = QFileDialog.getOpenFileName(self, 'chose files', '',

'Image files(*.jpg *.png *jpeg)') # 打开文件选择框选择文件

img_name = openfile_name[0] # 获取图片名称

if img_name == '':

pass

else:

target_image_name = "images/tmp_up." + img_name.split(".")[-1] # 将图片移动到当前目录

shutil.copy(img_name, target_image_name)

self.to_predict_name = target_image_name

img_init = cv2.imread(self.to_predict_name) # 打开图片

h, w, c = img_init.shape

scale = 400 / h

img_show = cv2.resize(img_init, (0, 0), fx=scale, fy=scale) # 将图片的大小统一调整到400的高,方便界面显示

cv2.imwrite("images/show.png", img_show)

img_init = cv2.resize(img_init, (224, 224)) # 将图片大小调整到224*224用于模型推理

cv2.imwrite('images/target.png', img_init)

self.img_label.setPixmap(QPixmap("images/show.png"))

self.result.setText("等待识别")

# 预测图片

def predict_img(self):

img = Image.open('images/target.png') # 读取图片

img = np.asarray(img) # 将图片转化为numpy的数组

outputs = self.model.predict(img.reshape(1, 224, 224, 3)) # 将图片输入模型得到结果

result_index = int(np.argmax(outputs))

result = self.class_names[result_index] # 获得对应的水果名称

self.result.setText(result) # 在界面上做显示

# 界面关闭事件,询问用户是否关闭

def closeEvent(self, event):

reply = QMessageBox.question(self,

'退出',

"是否要退出程序?",

QMessageBox.Yes | QMessageBox.No,

QMessageBox.No)

if reply == QMessageBox.Yes:

self.close()

event.accept()

else:

event.ignore()

if __name__ == "__main__":

app = QApplication(sys.argv)

x = MainWindow()

x.show()

sys.exit(app.exec_())下面是我训练好的测试图:

感谢大家阅读,祝大家学习快乐 !

侵权必删。