Linear Regression and Its Python Implementation

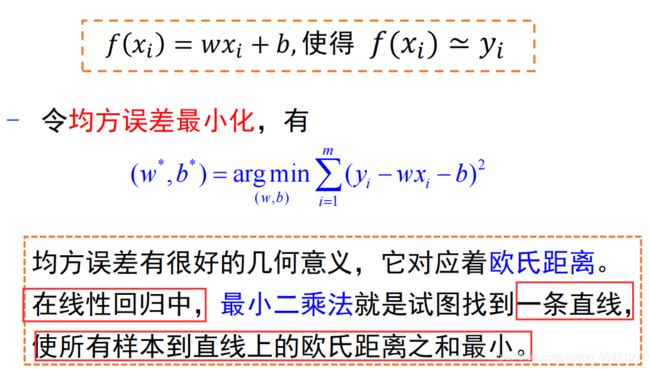

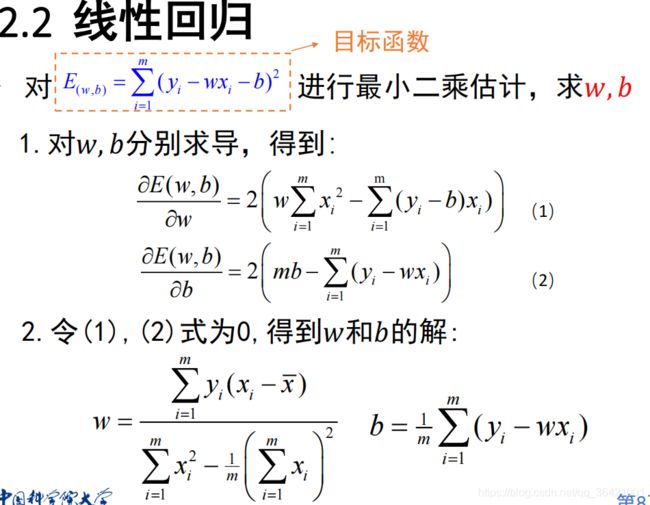

Linear regression

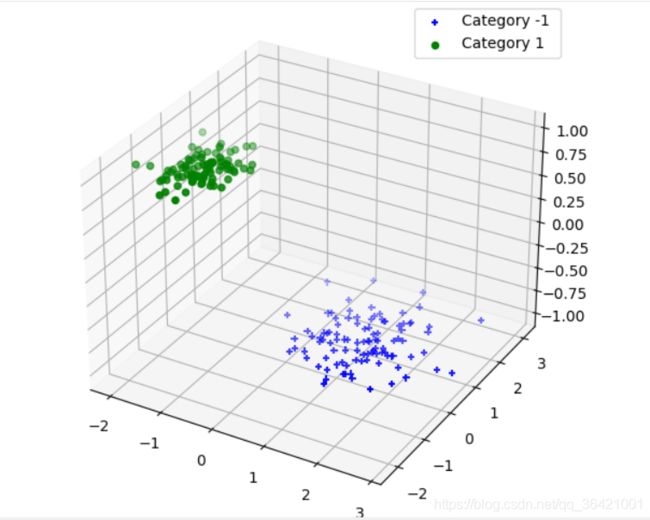

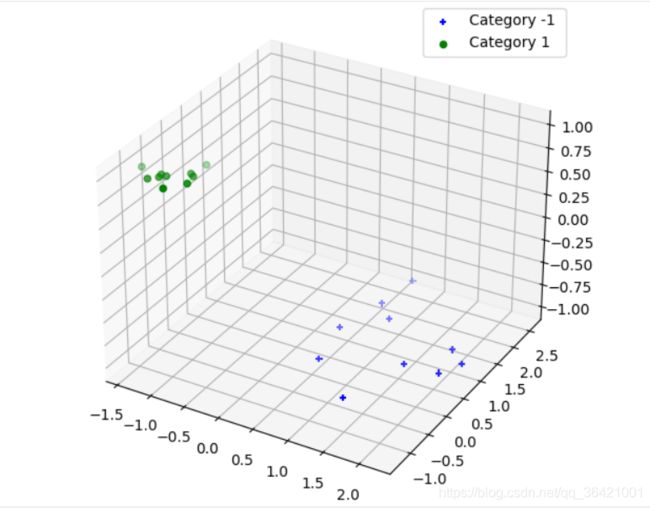

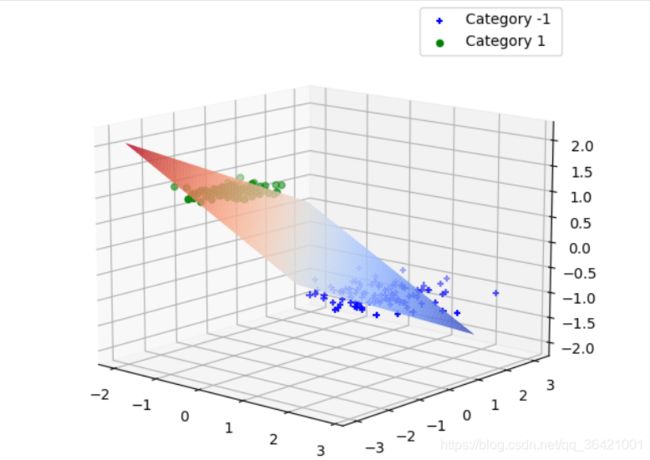

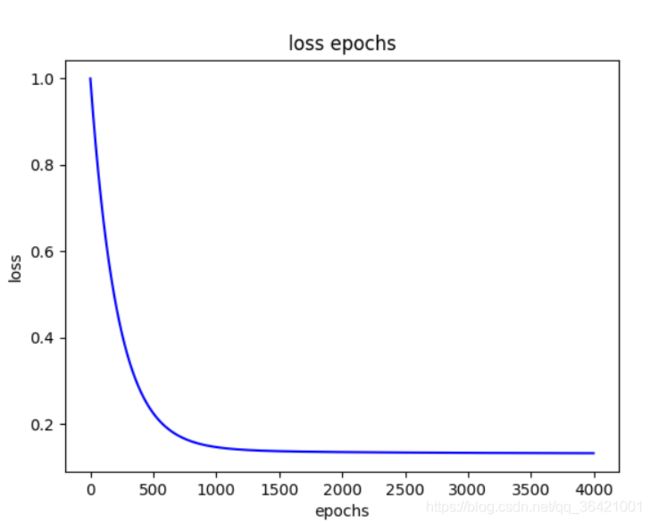
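For reference, here are the model, loss, and update rule that `linear_loss` and `linear_train` compute. The notation is assumed for this summary: X is the m×d matrix of training inputs (one sample per row, m = `num_train`), y is the column of labels, and α is `learning_rate`. The gradients computed in `linear_loss` are exactly those of the *half* mean squared error, because the factor 1/2 cancels the 2 that comes from differentiating the square.

$$\hat{y} = Xw + b$$

$$J(w, b) = \frac{1}{2m}\sum_{i=1}^{m}\left(\hat{y}_i - y_i\right)^2$$

$$\frac{\partial J}{\partial w} = \frac{1}{m}X^{\top}(\hat{y} - y), \qquad \frac{\partial J}{\partial b} = \frac{1}{m}\sum_{i=1}^{m}\left(\hat{y}_i - y_i\right)$$

$$w \leftarrow w - \alpha\,\frac{\partial J}{\partial w}, \qquad b \leftarrow b - \alpha\,\frac{\partial J}{\partial b}$$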

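Task: implement linear regression in code, where each label y_i is either -1 or 1. Save the learned parameters, plot the regression projection surface, and visualize the results.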

```python
import numpy as np
import matplotlib.pyplot as plt
# Registers the '3d' projection on older Matplotlib versions; harmless on newer ones
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401


class lr_model():
    def __init__(self):
        self.params = None    # learned parameters, filled in by linear_train
        self.loss_list = []   # per-epoch training loss, filled in by linear_train

    # Initialize the parameters: w as a zero column vector, b as a scalar
    def initialize_params(self, dims):
        w = np.zeros((dims, 1))
        b = 0.0
        return w, b

    def linear_loss(self, x, y, w, b):
        num_train = x.shape[0]
        # Model: y_hat = x·w + b
        y_hat = np.dot(x, w) + b
        # Loss: half mean squared error, so dw and db below are its exact gradients
        loss = np.sum((y_hat - y) ** 2) / (2 * num_train)
        # Partial derivatives of the loss with respect to the parameters
        dw = np.dot(x.T, (y_hat - y)) / num_train
        db = np.sum(y_hat - y) / num_train
        return y_hat, loss, dw, db

    # Model training based on gradient descent
    def linear_train(self, x, y, learning_rate, epochs):
        w, b = self.initialize_params(x.shape[1])
        loss_list = []
        for i in range(epochs):
            # Current predictions, loss, and parameter gradients
            y_hat, loss, dw, db = self.linear_loss(x, y, w, b)
            loss_list.append(loss)
            # Gradient-descent parameter update
            w -= learning_rate * dw
            b -= learning_rate * db
            # Print the epoch number and loss every 100 epochs
            if i % 100 == 0:
                print('epoch %d loss %f' % (i, loss))
        # Save the parameters
        params = {'w': w, 'b': b}
        # Save the gradients from the last epoch
        grads = {'dw': dw, 'db': db}
        # Keep results on the instance so the plotting methods don't rely on globals
        self.params = params
        self.loss_list = loss_list
        return loss_list, loss, params, grads

    # Prediction function for the test set
    def predict(self, x, params):
        w = params['w']
        b = params['b']
        y_pred = np.dot(x, w) + b
        return y_pred

    # Training data
    def get_train_data(self, data_size=100):
        data_label = np.zeros((2 * data_size, 1))
        # class 1: points around (1, 1)
        x1 = np.reshape(np.random.normal(1, 0.6, data_size), (data_size, 1))
        y1 = np.reshape(np.random.normal(1, 0.8, data_size), (data_size, 1))
        data_train = np.concatenate((x1, y1), axis=1)
        data_label[0:data_size, :] = -1  # label: negative class
        # class 2: points around (-1, -1)
        x2 = np.reshape(np.random.normal(-1, 0.3, data_size), (data_size, 1))
        y2 = np.reshape(np.random.normal(-1, 0.5, data_size), (data_size, 1))
        data_train = np.concatenate((data_train, np.concatenate((x2, y2), axis=1)), axis=0)
        data_label[data_size:2 * data_size, :] = 1  # label: positive class
        print('data_train=', data_train.shape)
        print('data_label=', data_label.shape)
        return data_train, data_label

    # Test data (same distributions as the training data)
    def get_test_data(self, data_size=10):
        testdata_label = np.zeros((2 * data_size, 1))
        # class 1
        x1 = np.reshape(np.random.normal(1, 0.6, data_size), (data_size, 1))
        y1 = np.reshape(np.random.normal(1, 0.8, data_size), (data_size, 1))
        data_test = np.concatenate((x1, y1), axis=1)
        testdata_label[0:data_size, :] = -1
        # class 2
        x2 = np.reshape(np.random.normal(-1, 0.3, data_size), (data_size, 1))
        y2 = np.reshape(np.random.normal(-1, 0.5, data_size), (data_size, 1))
        data_test = np.concatenate((data_test, np.concatenate((x2, y2), axis=1)), axis=0)
        testdata_label[data_size:2 * data_size, :] = 1
        print('data_test=', data_test.shape)
        print('testdata_label=', testdata_label.shape)
        return data_test, testdata_label

    # Plot the distribution of the training or test data
    def plot_lr(self, data_train, data_label, data_size):
        fig1 = plt.figure()
        # Axes3D(fig) no longer attaches itself to the figure in recent Matplotlib
        ax1 = fig1.add_subplot(projection='3d')
        ax1.set_title("Data Distribution")
        ax1.scatter(data_train[0:data_size, 0], data_train[0:data_size, 1], data_label[:data_size, 0],
                    color='blue', marker='+', label='Category -1')
        ax1.scatter(data_train[data_size:, 0], data_train[data_size:, 1], data_label[data_size:, 0],
                    color='green', marker='o', label='Category 1')
        ax1.legend()

    # Plot the projection surface (the fitted plane) together with the data
    def plot_projection(self, data_train, data_label, data_size, params=None):
        if params is None:
            params = self.params  # parameters saved by linear_train
        fig1 = plt.figure()
        ax2 = fig1.add_subplot(projection='3d')
        x1 = np.arange(-2, 2, 0.1)
        x2 = np.arange(-3, 3, 0.1)
        x1, x2 = np.meshgrid(x1, x2)
        y = params['w'][0] * x1 + params['w'][1] * x2 + params['b']
        ax2.scatter(data_train[0:data_size, 0], data_train[0:data_size, 1], data_label[:data_size, 0],
                    color='blue', marker='+', label='Category -1')
        ax2.scatter(data_train[data_size:, 0], data_train[data_size:, 1], data_label[data_size:, 0],
                    color='green', marker='o', label='Category 1')
        ax2.plot_surface(x1, x2, y, rstride=1, cstride=1, cmap=plt.cm.coolwarm)
        ax2.legend()

    # Plot the loss curve
    def plot_loss(self, loss_list=None):
        if loss_list is None:
            loss_list = self.loss_list  # losses saved by linear_train
        plt.figure()
        plt.plot(loss_list, color='blue')
        plt.title("loss epochs")
        plt.xlabel('epochs')
        plt.ylabel('loss')
        plt.show()
```
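To confirm that the analytic gradients in `linear_loss` really are the derivatives of the half mean squared error, a central finite-difference check can be run on random data. This is a minimal standalone sketch: `half_mse`, `grads`, and `numeric_grads` are hypothetical helpers that mirror the formulas in `linear_loss`; they are not part of the original code.

```python
import numpy as np

def half_mse(x, y, w, b):
    # Half mean squared error: sum((x·w + b - y)^2) / (2m)
    r = x @ w + b - y
    return np.sum(r ** 2) / (2 * x.shape[0])

def grads(x, y, w, b):
    # Same analytic gradients as linear_loss
    r = x @ w + b - y
    m = x.shape[0]
    return x.T @ r / m, np.sum(r) / m

def numeric_grads(x, y, w, b, eps=1e-6):
    # Central differences: perturb each entry of w, then b, in both directions
    dw = np.zeros_like(w)
    for j in range(w.shape[0]):
        step = np.zeros_like(w)
        step[j, 0] = eps
        dw[j, 0] = (half_mse(x, y, w + step, b) - half_mse(x, y, w - step, b)) / (2 * eps)
    db = (half_mse(x, y, w, b + eps) - half_mse(x, y, w, b - eps)) / (2 * eps)
    return dw, db

rng = np.random.default_rng(0)
x = rng.normal(size=(50, 2))
y = np.where(rng.random((50, 1)) < 0.5, -1.0, 1.0)  # labels in {-1, 1}
w = rng.normal(size=(2, 1))
b = 0.3

dw, db = grads(x, y, w, b)
ndw, ndb = numeric_grads(x, y, w, b)
print('max |dw - numeric dw|:', np.max(np.abs(dw - ndw)))
print('|db - numeric db|:', abs(db - ndb))
```

Differences far smaller than the gradients themselves indicate that the formulas agree; a missing factor (for example, a loss divided by m while the gradient assumes 2m) would show up as a mismatch of roughly a factor of 2.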

```python
if __name__ == '__main__':
    lr = lr_model()

    # 1. Generate the data and visualize it
    data_train, data_label = lr.get_train_data(data_size=100)
    data_test, testdata_label = lr.get_test_data(data_size=10)
    # Visualize the training data
    lr.plot_lr(data_train, data_label, data_size=100)
    # Visualize the test data
    lr.plot_lr(data_test, testdata_label, data_size=10)

    # 2. Linear regression
    loss_list, loss, params, grads = lr.linear_train(data_train, data_label, 0.001, 4000)
    print(params)
    # Run the trained model on the test data
    y_pred = lr.predict(data_test, params)
    print(y_pred)

    # 3. Plot the projection surface
    lr.plot_projection(data_train, data_label, data_size=100)
    # Plot the loss curve
    lr.plot_loss()
```
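The script prints raw regression outputs for the test set. Because the labels are -1 and 1, one common way to read these outputs as class predictions is to threshold them at 0 (take the sign), which makes the line w1·x1 + w2·x2 + b = 0 the decision boundary. The sketch below assumes that threshold. It regenerates data with the same distributions as `get_train_data`/`get_test_data` (via a hypothetical helper `make_data`), trains with the same gradient-descent settings, reports test accuracy, and compares the parameters with the closed-form least-squares solution from `np.linalg.lstsq`.

```python
import numpy as np

def make_data(n, rng):
    # Same distributions as get_train_data/get_test_data:
    # label -1 around (1, 1), label 1 around (-1, -1)
    neg = np.column_stack([rng.normal(1, 0.6, n), rng.normal(1, 0.8, n)])
    pos = np.column_stack([rng.normal(-1, 0.3, n), rng.normal(-1, 0.5, n)])
    x = np.vstack([neg, pos])
    y = np.vstack([-np.ones((n, 1)), np.ones((n, 1))])
    return x, y

rng = np.random.default_rng(42)
x_train, y_train = make_data(100, rng)
x_test, y_test = make_data(10, rng)

# Gradient descent with the script's settings (learning rate 0.001, 4000 epochs)
w, b = np.zeros((2, 1)), 0.0
m = len(x_train)
for _ in range(4000):
    r = x_train @ w + b - y_train
    w -= 0.001 * x_train.T @ r / m
    b -= 0.001 * np.sum(r) / m

# Threshold the regression output at 0 to get class labels in {-1, 1}
y_pred = x_test @ w + b
labels = np.where(y_pred >= 0, 1.0, -1.0)
accuracy = np.mean(labels == y_test)
print('test accuracy:', accuracy)

# Closed-form least squares on [x, 1]; the last coefficient plays the role of b
x_aug = np.hstack([x_train, np.ones((m, 1))])
theta, *_ = np.linalg.lstsq(x_aug, y_train, rcond=None)
print('gradient descent w, b:', w.ravel(), b)
print('least squares    w, b:', theta[:2].ravel(), theta[2, 0])
```

The two parameter sets need not match exactly: with such a small learning rate, 4000 steps may stop short of full convergence, and the least-squares solution shows where gradient descent is heading.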