VS2015+caffe+matlab+python+CPU

Test platform:

Win7 64-bit, VS 2015 (Professional), MATLAB 2016b (64-bit), Python 2.7.12, Eclipse IDE for Java Developers (Version: Neon.1a Release (4.6.1)), CMake 3.8.0,

protoc-3.1.0-windows-x86_64, boost_1_61_0-msvc-14.0-64

Downloads

Baidu Cloud:

Link: http://pan.baidu.com/s/1gfyvVQb Password: t9xw

All the files used below can be downloaded from this share.

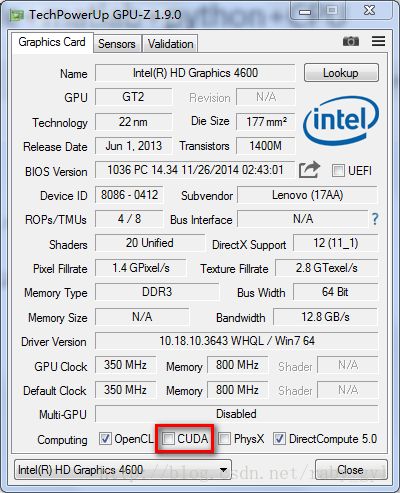

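GPU-Z: use it to check whether your computer's GPU supports CUDA.

caffe-windows download:

https://github.com/BVLC/caffe/tree/windows

caffe-master download:

https://github.com/Microsoft/caffe

How caffe-windows and caffe-master differ and relate:

Inside caffe-windows there is a windows folder organized like caffe-master, and the project notes that it is the old version and will be dropped in the future. So caffe-windows is the newer version of caffe-master. In addition, caffe-windows supports both VS2015 and VS2013, while caffe-master supports only VS2013. Therefore we only need to download caffe-windows.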

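Anaconda is a tool for managing and downloading packages for Python, R and Scala. Miniconda is its minimal version, containing only conda and Python. We use Miniconda to download Python packages. The Miniconda installer can add itself to the environment variables (PATH), so the conda command can then be used directly in a cmd prompt to download packages.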

Miniconda download:

https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/

conda downloads Python packages slowly, so we use the Tsinghua University mirror:

https://mirrors.tuna.tsinghua.edu.cn/help/anaconda/

Add the three mirror channels:

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/

To remove the Tsinghua mirrors, change add to remove in the commands above.

Show channel URLs:

conda config --set show_channel_urls yes

Reference: https://www.zhihu.com/question/38252144
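If you want to check the three Tsinghua channels from a script rather than by eye, here is a minimal sketch. It assumes `conda config --get channels` prints one line per channel in the form `--add channels 'URL'` (the format recent conda versions use; treat it as an assumption and adjust the regular expression if yours differs). `parse_channels` and `missing_channels` are hypothetical helpers.

```python
import os
import re
import subprocess

# The three Tsinghua mirror channels added with `conda config --add channels`.
TUNA_CHANNELS = [
    "https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/",
    "https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge",
    "https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/",
]

# Assumed output line format:  --add channels 'URL'   # optional priority comment
_CHANNEL_RE = re.compile(r"--add channels '([^']+)'")


def parse_channels(output):
    """Return the channel URLs listed in `conda config --get channels` output."""
    return _CHANNEL_RE.findall(output)


def missing_channels(output, expected=TUNA_CHANNELS):
    """Return the expected channels absent from the output (trailing '/' ignored)."""
    found = set(url.rstrip("/") for url in parse_channels(output))
    return [url for url in expected if url.rstrip("/") not in found]


if __name__ == "__main__":
    # On Windows conda may be a .bat wrapper, so go through the shell there.
    raw = subprocess.check_output(["conda", "config", "--get", "channels"],
                                  shell=(os.name == "nt"))
    print(missing_channels(raw.decode("utf-8", "replace")))
```

An empty list means all three mirrors are configured.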

You can check whether they were added with:

> conda config --get channels

Python packages available in the mirror (for Windows):

https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/win-64/

If a package is not there, go to the official site:

https://pypi.python.org/pypi

download the package you need, and install it with pip.exe.

Python interface dependencies:

> conda install --yes numpy scipy matplotlib scikit-image pip six

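> conda install --yes --channel willyd protobuf==3.1.0

Note:

If a single package fails while running the commands above, conda may roll everything back to the original state; in that case, install the libraries one at a time.

If protobuf downloads slowly, you can install it manually from:

https://pypi.python.org/pypi/protobuf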
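Because one failure can roll back the whole conda install, it helps to know which dependencies are still missing before installing them one by one. A minimal sketch that tries to import each package in the interpreter that runs it; the list maps the conda package names above to their import names (scikit-image imports as `skimage`, protobuf as `google.protobuf`). `missing_modules` is a hypothetical helper.

```python
import importlib

# Import names for the packages installed above.
REQUIRED_MODULES = ["numpy", "scipy", "matplotlib", "skimage", "six",
                    "google.protobuf"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be imported in this interpreter."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    for name in missing_modules():
        print("missing: " + name)
```

Run it with the same python.exe that Caffe will use, otherwise it checks the wrong site-packages.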

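Addendum:

Installing a .whl file: suppose the downloaded file is protobuf.whl. Install it with pip.exe: open a cmd prompt and change to:

D:\python\python2.7.12\Scripts

Then run:

pip install protobuf.whl

Remember: the command must include the .whl extension.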
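The same installation can be run as `python -m pip`, which installs the wheel into the interpreter that runs the command instead of whichever pip.exe comes first on PATH. A minimal sketch with a hypothetical helper `pip_install_wheel_cmd`. One caveat: pip reads the version and platform tags from the wheel's file name, so pass the file under the name it was downloaded with rather than renaming it.

```python
import os
import subprocess
import sys


def pip_install_wheel_cmd(wheel_path, python=sys.executable):
    """Build a command that installs a local wheel with `python -m pip`."""
    if not wheel_path.lower().endswith(".whl"):
        # Without the extension pip treats the argument as a package name
        # and looks it up on the package index instead of the local file.
        raise ValueError("expected a .whl file, got %r" % (wheel_path,))
    return [python, "-m", "pip", "install", os.path.abspath(wheel_path)]


if __name__ == "__main__" and len(sys.argv) > 1:
    subprocess.check_call(pip_install_wheel_cmd(sys.argv[1]))
```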

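Caffe installation

0. Preparation

Install VS 2015, MATLAB, Python, Eclipse and CMake, use Miniconda to download the Python dependencies listed above, and make sure VS, MATLAB, Python and CMake are all on the environment variables.

For example: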

Path=D:\python\python2.7.12;

E:\cmake-3.8.0-rc2-win64-x64\bin;

D:\Program Files\MATLAB\R2016b\bin;

D:\Program Files\MATLAB\R2016b\runtime\win64;

D:\Program Files\MATLAB\R2016b\polyspace\bin;

D:\Program Files (x86)\Microsoft Visual Studio 14.0\SDK;

D:\Program Files (x86)\Microsoft Visual Studio 14.0

VCROOT=D:\Program Files (x86)\Microsoft Visual Studio 14.0

VS140COMNTOOLS=D:\Program Files (x86)\Microsoft Visual Studio 14.0\Common7\Tools\

D:\Miniconda2

D:\Miniconda2\Scripts

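D:\Miniconda2\Library\bin

During the Miniconda installation I chose to let Miniconda provide its own Python, so Miniconda also has a Python interpreter and PATH now contains two of them. I am not sure whether they conflict later (this can be tested). With this setup it is enough to install only Miniconda: it ships its own Python, no separate Python installation is needed, and D:\python\python2.7.12 can be removed from the environment variables.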
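To test whether the two interpreters conflict, you can list every python.exe reachable through PATH in search order. A minimal sketch, assuming the usual Windows behavior that cmd runs the first match on PATH (after the current directory); `find_on_path` is a hypothetical helper.

```python
import os


def find_on_path(filename, path=None):
    """Return every PATH entry that contains `filename`, in PATH order.

    cmd.exe launches the first hit (after the current directory), so with
    more than one result the earlier interpreter shadows the later ones.
    """
    if path is None:
        path = os.environ.get("PATH", "")
    hits = []
    for directory in path.split(os.pathsep):
        directory = directory.strip().strip('"')
        if not directory:
            continue  # skip empty entries such as a trailing ';'
        candidate = os.path.join(directory, filename)
        if os.path.isfile(candidate):
            hits.append(candidate)
    return hits


if __name__ == "__main__":
    for rank, exe in enumerate(find_on_path("python.exe")):
        print("%d  %s" % (rank, exe))
```

If it prints two entries, entry 0 is the interpreter that typing `python` in cmd will start.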

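1. Editing build_win.cmd

Edit: E:\caffe-windows\scripts\build_win.cmd

Because I was not sure how the .cmd file executes, I also applied the settings shown in the figure above inside the if DEFINED APPVEYOR block, and set the Miniconda path as well: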

:: Set python 2.7 with conda as the default python

if !PYTHON_VERSION! EQU 2 (

set CONDA_ROOT=D:\Miniconda2

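)

Comment:

A more reasonable reading: the .cmd file has a comment :: Default values after the if DEFINED APPVEYOR block, so the settings inside if DEFINED APPVEYOR do not need to change; only the places marked in red in the screenshot and the Miniconda path need editing (every place that needs editing is commented in the .cmd file).

Run in a cmd prompt (make sure CMake is on PATH, e.g. E:\cmake-3.8.0-rc2-win64-x64\bin):

E:\caffe-windows\scripts\build_win.cmd

This command creates a build folder under E:\caffe-windows\scripts\ and downloads the extra dependency package libraries_v140_x64_py27_1.0.1.tar into it. Because the download from the command line is slow, you can interrupt the command, download the package into the build directory manually, and run the command again. When it finishes, Caffe.sln is generated in the build folder.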

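Notes:

Running build_win.cmd often runs into the following problems:

(1) The C compiler identification is unknown… First check the VS environment variables. If they are all set correctly, look in build/CMakeFiles/CMakeError.log for the cause, for example:

LINK : fatal error LNK1104: cannot open file "ucrtd.lib". Searching with Everything shows that the file is in the directory below; copy all 4 library files in it:

C:\Program Files (x86)\Windows Kits\10\Lib\10.0.10150.0\ucrt\x64

to:

C:\Program Files (x86)\Windows Kits\8.1\Lib\winv6.3\um\x64

and the problem is solved. You may also get "corecrt.h" not found; fix it the same way by copying all header files in:

C:\Program Files (x86)\Windows Kits\10\Include\10.0.10150.0\ucrt

to:

D:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\include

(2) The dependency target "pycaffe" of target "pytest" does not exist.

This happens because Python cannot find the numpy library. Check that the Miniconda path in build_win.cmd is configured correctly; alternatively, install numpy directly with pip.

After every change, remember to clean the files generated in the build folder before running build_win.cmd again.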
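A minimal sketch of that clean-up step that deletes everything in the build folder except the manually downloaded dependency package, so build_win.cmd does not have to download it again. The keep patterns (`libraries`, `libraries_v140_*`) are an assumption based on the file name mentioned above; check them against your own build folder before running it. `clean_build_dir` and the script name in the comment are hypothetical.

```python
import fnmatch
import os
import shutil
import sys

# Assumption: these entries hold the manually downloaded dependency package
# (and its extracted folder) and should survive a clean.
DEFAULT_KEEP = ["libraries", "libraries_v140_*"]


def clean_build_dir(build_dir, keep=DEFAULT_KEEP):
    """Delete every entry of build_dir that matches no `keep` pattern.

    Returns the names that were removed, in sorted order.
    """
    removed = []
    for name in sorted(os.listdir(build_dir)):
        if any(fnmatch.fnmatch(name, pattern) for pattern in keep):
            continue
        full = os.path.join(build_dir, name)
        if os.path.isdir(full) and not os.path.islink(full):
            shutil.rmtree(full)   # e.g. CMakeFiles
        else:
            os.remove(full)       # e.g. CMakeCache.txt, Caffe.sln
        removed.append(name)
    return removed


if __name__ == "__main__" and len(sys.argv) > 1:
    # usage: python clean_build.py E:\caffe-windows\scripts\build
    print(clean_build_dir(sys.argv[1]))
```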

(3) Could NOT find Matlab (missing: Matlab_MEX_EXTENSION) (found version "9.1"). On some computers this happens when MATLAB is installed on drive D; installing MATLAB on drive C solves it.

2. Adding environment variables

Using RapidEE, add the following three directories to PATH; they are needed when the programs run later:

E:\caffe-windows\scripts\build\libraries\bin

E:\caffe-windows\scripts\build\libraries\lib

E:\caffe-windows\scripts\build\libraries\x64\vc14\bin

After adding them, be sure to restart the computer. If you forget to add them, or do not restart, testing Caffe in MATLAB reports:

caffe_.mexw64: invalid MEX-file.

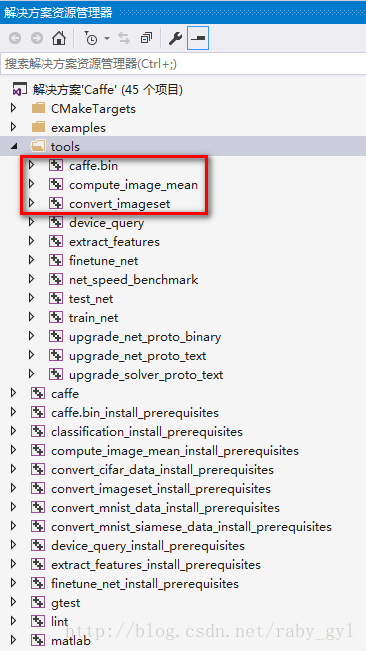
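After restarting, one way to confirm that the three directories are really visible is to check PATH from a freshly started process. A minimal sketch; the comparison ignores surrounding quotes, trailing backslashes and (on Windows) case. `missing_path_entries` is a hypothetical helper.

```python
import os

# The three directories added with RapidEE (paths from this post).
CAFFE_DLL_DIRS = [
    r"E:\caffe-windows\scripts\build\libraries\bin",
    r"E:\caffe-windows\scripts\build\libraries\lib",
    r"E:\caffe-windows\scripts\build\libraries\x64\vc14\bin",
]


def _norm(p):
    """Normalize a PATH entry: strip quotes/spaces, trailing separators, case."""
    return os.path.normcase(os.path.normpath(p.strip().strip('"')))


def missing_path_entries(required, path=None):
    """Return the directories in `required` that do not appear in PATH."""
    if path is None:
        path = os.environ.get("PATH", "")
    present = set(_norm(p) for p in path.split(os.pathsep) if p.strip())
    return [d for d in required if _norm(d) not in present]


if __name__ == "__main__":
    missing = missing_path_entries(CAFFE_DLL_DIRS)
    print("all present" if not missing else "missing: " + "; ".join(missing))
```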

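3. Building the caffe, matlab and pycaffe projects

Open Caffe.sln in the build folder with VS2015. Solution Explorer shows three projects: caffe, matlab and pycaffe. They build the Release configuration by default; build them one after another. Right-clicking a project and opening Properties shows its configuration and the path where its output is saved: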

E:\caffe-windows\scripts\build\lib\Release\caffe.lib

E:\caffe-windows\matlab\+caffe\private\Release\caffe_.mexw64

E:\caffe-windows\scripts\build\lib\Release\_caffe.pyd

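4. Using Caffe from MATLAB

The output paths above show that caffe_.mexw64 is in E:\caffe-windows\matlab\+caffe\private\Release. Copy it one level up, i.e. into the private directory. Then open MATLAB and set the current folder to:

E:\caffe-windows\matlab\demo, create a new test_caffe.m, paste the MATLAB test code below into it, and run it. (+caffe is a MATLAB package that wraps the interface.)

Note: after building, even when everything seems to be configured, MATLAB may still report:

Invalid MEX-file '*matlab/+caffe/private/.mexw64'. Open the .mexw64 file with depends.exe (Dependency Walker, available online); it shows what is missing, so you can then add the missing directory to PATH or provide the missing library.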

5. Using Caffe from Python

Copy the caffe folder under E:\caffe-windows\python into Python's site-packages folder, here:

D:\python\python2.7.12\Lib\site-packages

In fact, the compiled _caffe.pyd is also copied into E:\caffe-windows\python\caffe.
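Because Miniconda and a separately installed Python each have their own site-packages (see the addendum below), it is easy to copy the caffe folder into the wrong one. This sketch asks the interpreter that runs it for its site-packages directory through the standard `sysconfig` module and checks for `caffe\__init__.py` and `caffe\_caffe.pyd`. `site_packages_dir` and `has_pycaffe` are hypothetical helpers.

```python
import os
import sysconfig


def site_packages_dir():
    """Return the site-packages directory of the interpreter running this script."""
    return sysconfig.get_paths()["purelib"]


def has_pycaffe(site_dir):
    """True if site_dir holds a caffe package with the compiled _caffe.pyd."""
    pkg = os.path.join(site_dir, "caffe")
    return (os.path.isfile(os.path.join(pkg, "__init__.py"))
            and os.path.isfile(os.path.join(pkg, "_caffe.pyd")))


if __name__ == "__main__":
    target = site_packages_dir()
    print("%s -> %s" % (target, "ok" if has_pycaffe(target) else "caffe missing"))
```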

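Important: before running the MATLAB and Python tests, make sure the model files are in a directory the tests can access; for example, put bvlc_reference_caffenet.caffemodel in:

E:\caffe-windows\models\bvlc_reference_caffenet\

and put synset_words.txt (for MATLAB) in the same directory as test_caffe.m.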
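A small pre-flight check can catch a misplaced file before MATLAB or Python fails with a less obvious error. The list collects the files that the test code in this post opens, relative to caffe_root, and assumes test_caffe.m lives in matlab\demo as described above. `missing_files` is a hypothetical helper.

```python
import os

# Files opened by the MATLAB and Python test code in this post,
# relative to caffe_root (test_caffe.m assumed to live in matlab/demo).
REQUIRED_FILES = [
    "models/bvlc_reference_caffenet/deploy.prototxt",
    "models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel",
    "python/caffe/imagenet/ilsvrc_2012_mean.npy",
    "data/ilsvrc12/synset_words.txt",
    "examples/images/cat.jpg",
    "matlab/demo/synset_words.txt",
]


def missing_files(caffe_root, relpaths=REQUIRED_FILES):
    """Return the relative paths that do not exist as files under caffe_root."""
    return [rel for rel in relpaths
            if not os.path.isfile(os.path.join(caffe_root, *rel.split("/")))]


if __name__ == "__main__":
    for rel in missing_files("E:/caffe-windows/"):
        print("missing: " + rel)
```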

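6. Using Caffe from VS2015

Caffe provides a test example, classification.cpp, in E:\caffe-windows\examples\cpp_classification (adjust to your own Caffe location). To run the example, you need to set up its build and runtime environment:

1. Create a VS console application and, following the book "21 Days of Caffe" (caffe 21天), configure the project's include directories, library directories and environment variables. You can choose either Debug or Release mode.

2. Enter the command arguments:

deploy.prototxt bvlc_reference_caffenet.caffemodel imagenet_mean.binaryproto synset_words.txt cat.jpg

Note: any missing files can be found in the Baidu Cloud share above.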
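The five arguments are positional: network definition, trained weights, mean file, label file and the image to classify. If you prefer to launch the built example from a script rather than through the VS debugger settings, here is a minimal sketch. It assumes, hypothetically, that all five files have been gathered into one directory; `classification_argv` and the script name in the comment are made up for illustration.

```python
import os
import subprocess
import sys

# Argument order expected by the cpp_classification example:
# deploy.prototxt, trained weights, mean file, label file, image.
CLASSIFICATION_FILES = [
    "deploy.prototxt",
    "bvlc_reference_caffenet.caffemodel",
    "imagenet_mean.binaryproto",
    "synset_words.txt",
    "cat.jpg",
]


def classification_argv(exe, data_dir, files=CLASSIFICATION_FILES):
    """Return the command line for the example, with every file under data_dir."""
    return [exe] + [os.path.join(data_dir, name) for name in files]


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python run_classification.py path\to\classification.exe path\to\data
    subprocess.check_call(classification_argv(sys.argv[1], sys.argv[2]))
```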

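Addendum:

Python packages installed by Miniconda live in a separate directory; mine is:

C:\Users\***\Miniconda2\Lib\site-packages

In Eclipse, simply add this directory to the Python interpreter's paths.

MATLAB test code: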

% test_caffe.m

close all;clear all;

im = imread('../../examples/images/cat.jpg'); % read the image

figure; imshow(im); % display the image

[scores, maxlabel] = classification_demo(im, 0); % get the scores; the second argument is 0 for CPU, 1 for GPU

maxlabel % show the index of the top-scoring label

figure; plot(scores); % plot the scores

axis([0, 999, -0.1, 0.5]); % axis ranges

grid on % show grid lines

fid = fopen('synset_words.txt', 'r');

i = 0;

while ~feof(fid)

    i = i + 1;

    lin = fgetl(fid);

    lin = strtrim(lin);

    if (i == maxlabel)

        fprintf('the label of %d is %s\n', i, lin)

        break

    end

end

fclose(fid); % release the file handle
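The loop above walks synset_words.txt until it reaches line maxlabel. The same lookup in Python, as a minimal sketch with a hypothetical helper name. MATLAB's maxlabel is 1-based, while `output_prob.argmax()` in the Python test below is 0-based, so the equivalent call there would be `label_for(path, output_prob.argmax() + 1)`.

```python
def label_for(synset_path, index):
    """Return line `index` (1-based, like MATLAB's maxlabel) of synset_words.txt."""
    with open(synset_path) as f:
        for line_no, line in enumerate(f, 1):
            if line_no == index:
                return line.strip()  # same as strtrim in the MATLAB code
    raise IndexError("label index %d is out of range" % index)
```

With the standard synset_words.txt, index 282 should therefore match the tabby cat that the Python test reports as class 281.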

python测试code:

# coding=gbk

'''

Created on 2017-03-09

'''

# Requires Python, numpy and matplotlib

import numpy as np

import matplotlib.pyplot as plt

# Set default display parameters

plt.rcParams['figure.figsize'] = (10, 10) # size of displayed images

plt.rcParams['image.interpolation'] = 'nearest' # nearest-neighbor interpolation: show pixels as squares

plt.rcParams['image.cmap'] = 'gray' # use grayscale output rather than color

import sys

caffe_root = 'E:/caffe-windows/' # Caffe root directory; adjust this line to your own Caffe location

sys.path.insert(0, caffe_root + 'python')

import caffe

import os

if os.path.isfile(caffe_root + 'models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel'):

    print 'CaffeNet found.'

else:

    print 'Downloading pre-trained CaffeNet model...'

    # IPython shell escape; in a plain script, run this download command yourself:

    # !../scripts/download_model_binary.py ../models/bvlc_reference_caffenet

caffe.set_mode_cpu()

model_def = caffe_root + 'models/bvlc_reference_caffenet/deploy.prototxt'

model_weights = caffe_root + 'models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel'

net = caffe.Net(model_def, # defines the structure of the model

model_weights, # contains the trained weights

caffe.TEST) # use test mode (e.g., don't perform dropout)

# Load the mean ImageNet image (distributed with Caffe)

mu = np.load(caffe_root + 'python/caffe/imagenet/ilsvrc_2012_mean.npy')

mu = mu.mean(1).mean(1) # average over pixels to obtain the mean (BGR) pixel values

print 'mean-subtracted values:', zip('BGR', mu)

# Create a transformer for the input called 'data'

transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})

transformer.set_transpose('data', (2,0,1)) # move image channels to the outermost dimension

transformer.set_mean('data', mu) # subtract the dataset-mean value in each channel

transformer.set_raw_scale('data', 255) # rescale from [0, 1] to [0, 255]

transformer.set_channel_swap('data', (2,1,0)) # swap channels from RGB to BGR

# Set the size of the input

net.blobs['data'].reshape(50, # batch size

3, # 3-channel (BGR) images

227, 227) # image size is 227x227

image = caffe.io.load_image(caffe_root + 'examples/images/cat.jpg')

transformed_image = transformer.preprocess('data', image)

plt.imshow(image)

plt.show()

# Copy the image data into the memory allocated for the net

net.blobs['data'].data[...] = transformed_image

### Perform classification

output = net.forward()

output_prob = output['prob'][0] # the output probability vector for the first image in the batch

print 'predicted class is:', output_prob.argmax()

# Load ImageNet labels

labels_file = caffe_root + 'data/ilsvrc12/synset_words.txt'

# if not os.path.exists(labels_file):

# !../data/ilsvrc12/get_ilsvrc_aux.sh

labels = np.loadtxt(labels_file, str, delimiter='\t')

print 'output label:', labels[output_prob.argmax()]

Result:

CaffeNet found.

WARNING: Logging before InitGoogleLogging() is written to STDERR

W0309 15:43:33.012079 8740 _caffe.cpp:172] DEPRECATION WARNING - deprecated use of Python interface

W0309 15:43:33.012079 8740 _caffe.cpp:173] Use this instead (with the named "weights" parameter):

W0309 15:43:33.012079 8740 _caffe.cpp:175] Net('E:/caffe-windows/models/bvlc_reference_caffenet/deploy.prototxt', 1, weights='E:/caffe-windows/models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel')

I0309 15:43:33.026078 8740 net.cpp:53] Initializing net from parameters:

name: "CaffeNet"

state {

phase: TEST

level: 0

}

layer {

name: "data"

type: "Input"

top: "data"

input_param {

shape {

dim: 10

dim: 3

dim: 227

dim: 227

}

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm1"

type: "LRN"

bottom: "pool1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "norm1"

top: "conv2"

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "norm2"

type: "LRN"

bottom: "pool2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "norm2"

top: "conv3"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

inner_product_param {

num_output: 4096

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

inner_product_param {

num_output: 4096

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

inner_product_param {

num_output: 1000

}

}

layer {

name: "prob"

type: "Softmax"

bottom: "fc8"

top: "prob"

}

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer data

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer data

I0309 15:43:33.026078 8740 net.cpp:382] data -> data

I0309 15:43:33.026078 8740 net.cpp:124] Setting up data

I0309 15:43:33.026078 8740 net.cpp:131] Top shape: 10 3 227 227 (1545870)

I0309 15:43:33.026078 8740 net.cpp:139] Memory required for data: 6183480

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer conv1

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer conv1

I0309 15:43:33.026078 8740 net.cpp:408] conv1 <- data

I0309 15:43:33.026078 8740 net.cpp:382] conv1 -> conv1

I0309 15:43:33.026078 8740 net.cpp:124] Setting up conv1

I0309 15:43:33.026078 8740 net.cpp:131] Top shape: 10 96 55 55 (2904000)

I0309 15:43:33.026078 8740 net.cpp:139] Memory required for data: 17799480

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer relu1

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer relu1

I0309 15:43:33.026078 8740 net.cpp:408] relu1 <- conv1

I0309 15:43:33.026078 8740 net.cpp:369] relu1 -> conv1 (in-place)

I0309 15:43:33.026078 8740 net.cpp:124] Setting up relu1

I0309 15:43:33.026078 8740 net.cpp:131] Top shape: 10 96 55 55 (2904000)

I0309 15:43:33.026078 8740 net.cpp:139] Memory required for data: 29415480

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer pool1

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer pool1

I0309 15:43:33.026078 8740 net.cpp:408] pool1 <- conv1

I0309 15:43:33.026078 8740 net.cpp:382] pool1 -> pool1

I0309 15:43:33.026078 8740 net.cpp:124] Setting up pool1

I0309 15:43:33.026078 8740 net.cpp:131] Top shape: 10 96 27 27 (699840)

I0309 15:43:33.026078 8740 net.cpp:139] Memory required for data: 32214840

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer norm1

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer norm1

I0309 15:43:33.026078 8740 net.cpp:408] norm1 <- pool1

I0309 15:43:33.026078 8740 net.cpp:382] norm1 -> norm1

I0309 15:43:33.026078 8740 net.cpp:124] Setting up norm1

I0309 15:43:33.026078 8740 net.cpp:131] Top shape: 10 96 27 27 (699840)

I0309 15:43:33.026078 8740 net.cpp:139] Memory required for data: 35014200

I0309 15:43:33.026078 8740 layer_factory.cpp:58] Creating layer conv2

I0309 15:43:33.026078 8740 net.cpp:86] Creating Layer conv2

I0309 15:43:33.027079 8740 net.cpp:408] conv2 <- norm1

I0309 15:43:33.027079 8740 net.cpp:382] conv2 -> conv2

I0309 15:43:33.027079 8740 net.cpp:124] Setting up conv2

I0309 15:43:33.027079 8740 net.cpp:131] Top shape: 10 256 27 27 (1866240)

I0309 15:43:33.027079 8740 net.cpp:139] Memory required for data: 42479160

I0309 15:43:33.027079 8740 layer_factory.cpp:58] Creating layer relu2

I0309 15:43:33.027079 8740 net.cpp:86] Creating Layer relu2

I0309 15:43:33.027079 8740 net.cpp:408] relu2 <- conv2

I0309 15:43:33.027079 8740 net.cpp:369] relu2 -> conv2 (in-place)

I0309 15:43:33.027079 8740 net.cpp:124] Setting up relu2

I0309 15:43:33.027079 8740 net.cpp:131] Top shape: 10 256 27 27 (1866240)

I0309 15:43:33.027079 8740 net.cpp:139] Memory required for data: 49944120

I0309 15:43:33.027079 8740 layer_factory.cpp:58] Creating layer pool2

I0309 15:43:33.027079 8740 net.cpp:86] Creating Layer pool2

I0309 15:43:33.027079 8740 net.cpp:408] pool2 <- conv2

I0309 15:43:33.027079 8740 net.cpp:382] pool2 -> pool2

I0309 15:43:33.027079 8740 net.cpp:124] Setting up pool2

I0309 15:43:33.028079 8740 net.cpp:131] Top shape: 10 256 13 13 (432640)

I0309 15:43:33.028079 8740 net.cpp:139] Memory required for data: 51674680

I0309 15:43:33.028079 8740 layer_factory.cpp:58] Creating layer norm2

I0309 15:43:33.028079 8740 net.cpp:86] Creating Layer norm2

I0309 15:43:33.028079 8740 net.cpp:408] norm2 <- pool2

I0309 15:43:33.028079 8740 net.cpp:382] norm2 -> norm2

I0309 15:43:33.028079 8740 net.cpp:124] Setting up norm2

I0309 15:43:33.028079 8740 net.cpp:131] Top shape: 10 256 13 13 (432640)

I0309 15:43:33.028079 8740 net.cpp:139] Memory required for data: 53405240

I0309 15:43:33.028079 8740 layer_factory.cpp:58] Creating layer conv3

I0309 15:43:33.028079 8740 net.cpp:86] Creating Layer conv3

I0309 15:43:33.028079 8740 net.cpp:408] conv3 <- norm2

I0309 15:43:33.028079 8740 net.cpp:382] conv3 -> conv3

I0309 15:43:33.030079 8740 net.cpp:124] Setting up conv3

I0309 15:43:33.030079 8740 net.cpp:131] Top shape: 10 384 13 13 (648960)

I0309 15:43:33.030079 8740 net.cpp:139] Memory required for data: 56001080

I0309 15:43:33.030079 8740 layer_factory.cpp:58] Creating layer relu3

I0309 15:43:33.030079 8740 net.cpp:86] Creating Layer relu3

I0309 15:43:33.030079 8740 net.cpp:408] relu3 <- conv3

I0309 15:43:33.030079 8740 net.cpp:369] relu3 -> conv3 (in-place)

I0309 15:43:33.030079 8740 net.cpp:124] Setting up relu3

I0309 15:43:33.030079 8740 net.cpp:131] Top shape: 10 384 13 13 (648960)

I0309 15:43:33.030079 8740 net.cpp:139] Memory required for data: 58596920

I0309 15:43:33.030079 8740 layer_factory.cpp:58] Creating layer conv4

I0309 15:43:33.030079 8740 net.cpp:86] Creating Layer conv4

I0309 15:43:33.030079 8740 net.cpp:408] conv4 <- conv3

I0309 15:43:33.030079 8740 net.cpp:382] conv4 -> conv4

I0309 15:43:33.031080 8740 net.cpp:124] Setting up conv4

I0309 15:43:33.031080 8740 net.cpp:131] Top shape: 10 384 13 13 (648960)

I0309 15:43:33.031080 8740 net.cpp:139] Memory required for data: 61192760

I0309 15:43:33.031080 8740 layer_factory.cpp:58] Creating layer relu4

I0309 15:43:33.031080 8740 net.cpp:86] Creating Layer relu4

I0309 15:43:33.031080 8740 net.cpp:408] relu4 <- conv4

I0309 15:43:33.031080 8740 net.cpp:369] relu4 -> conv4 (in-place)

I0309 15:43:33.031080 8740 net.cpp:124] Setting up relu4

I0309 15:43:33.031080 8740 net.cpp:131] Top shape: 10 384 13 13 (648960)

I0309 15:43:33.031080 8740 net.cpp:139] Memory required for data: 63788600

I0309 15:43:33.031080 8740 layer_factory.cpp:58] Creating layer conv5

I0309 15:43:33.031080 8740 net.cpp:86] Creating Layer conv5

I0309 15:43:33.031080 8740 net.cpp:408] conv5 <- conv4

I0309 15:43:33.031080 8740 net.cpp:382] conv5 -> conv5

I0309 15:43:33.032079 8740 net.cpp:124] Setting up conv5

I0309 15:43:33.032079 8740 net.cpp:131] Top shape: 10 256 13 13 (432640)

I0309 15:43:33.032079 8740 net.cpp:139] Memory required for data: 65519160

I0309 15:43:33.032079 8740 layer_factory.cpp:58] Creating layer relu5

I0309 15:43:33.032079 8740 net.cpp:86] Creating Layer relu5

I0309 15:43:33.032079 8740 net.cpp:408] relu5 <- conv5

I0309 15:43:33.032079 8740 net.cpp:369] relu5 -> conv5 (in-place)

I0309 15:43:33.032079 8740 net.cpp:124] Setting up relu5

I0309 15:43:33.032079 8740 net.cpp:131] Top shape: 10 256 13 13 (432640)

I0309 15:43:33.032079 8740 net.cpp:139] Memory required for data: 67249720

I0309 15:43:33.032079 8740 layer_factory.cpp:58] Creating layer pool5

I0309 15:43:33.032079 8740 net.cpp:86] Creating Layer pool5

I0309 15:43:33.032079 8740 net.cpp:408] pool5 <- conv5

I0309 15:43:33.032079 8740 net.cpp:382] pool5 -> pool5

I0309 15:43:33.032079 8740 net.cpp:124] Setting up pool5

I0309 15:43:33.032079 8740 net.cpp:131] Top shape: 10 256 6 6 (92160)

I0309 15:43:33.032079 8740 net.cpp:139] Memory required for data: 67618360

I0309 15:43:33.032079 8740 layer_factory.cpp:58] Creating layer fc6

I0309 15:43:33.032079 8740 net.cpp:86] Creating Layer fc6

I0309 15:43:33.032079 8740 net.cpp:408] fc6 <- pool5

I0309 15:43:33.032079 8740 net.cpp:382] fc6 -> fc6

I0309 15:43:33.130084 8740 net.cpp:124] Setting up fc6

I0309 15:43:33.130084 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.130084 8740 net.cpp:139] Memory required for data: 67782200

I0309 15:43:33.130084 8740 layer_factory.cpp:58] Creating layer relu6

I0309 15:43:33.130084 8740 net.cpp:86] Creating Layer relu6

I0309 15:43:33.130084 8740 net.cpp:408] relu6 <- fc6

I0309 15:43:33.130084 8740 net.cpp:369] relu6 -> fc6 (in-place)

I0309 15:43:33.130084 8740 net.cpp:124] Setting up relu6

I0309 15:43:33.130084 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.130084 8740 net.cpp:139] Memory required for data: 67946040

I0309 15:43:33.130084 8740 layer_factory.cpp:58] Creating layer drop6

I0309 15:43:33.130084 8740 net.cpp:86] Creating Layer drop6

I0309 15:43:33.130084 8740 net.cpp:408] drop6 <- fc6

I0309 15:43:33.130084 8740 net.cpp:369] drop6 -> fc6 (in-place)

I0309 15:43:33.130084 8740 net.cpp:124] Setting up drop6

I0309 15:43:33.130084 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.130084 8740 net.cpp:139] Memory required for data: 68109880

I0309 15:43:33.130084 8740 layer_factory.cpp:58] Creating layer fc7

I0309 15:43:33.130084 8740 net.cpp:86] Creating Layer fc7

I0309 15:43:33.130084 8740 net.cpp:408] fc7 <- fc6

I0309 15:43:33.130084 8740 net.cpp:382] fc7 -> fc7

I0309 15:43:33.166087 8740 net.cpp:124] Setting up fc7

I0309 15:43:33.166087 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.166087 8740 net.cpp:139] Memory required for data: 68273720

I0309 15:43:33.166087 8740 layer_factory.cpp:58] Creating layer relu7

I0309 15:43:33.166087 8740 net.cpp:86] Creating Layer relu7

I0309 15:43:33.166087 8740 net.cpp:408] relu7 <- fc7

I0309 15:43:33.166087 8740 net.cpp:369] relu7 -> fc7 (in-place)

I0309 15:43:33.166087 8740 net.cpp:124] Setting up relu7

I0309 15:43:33.166087 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.166087 8740 net.cpp:139] Memory required for data: 68437560

I0309 15:43:33.166087 8740 layer_factory.cpp:58] Creating layer drop7

I0309 15:43:33.166087 8740 net.cpp:86] Creating Layer drop7

I0309 15:43:33.166087 8740 net.cpp:408] drop7 <- fc7

I0309 15:43:33.166087 8740 net.cpp:369] drop7 -> fc7 (in-place)

I0309 15:43:33.166087 8740 net.cpp:124] Setting up drop7

I0309 15:43:33.166087 8740 net.cpp:131] Top shape: 10 4096 (40960)

I0309 15:43:33.166087 8740 net.cpp:139] Memory required for data: 68601400

I0309 15:43:33.166087 8740 layer_factory.cpp:58] Creating layer fc8

I0309 15:43:33.166087 8740 net.cpp:86] Creating Layer fc8

I0309 15:43:33.166087 8740 net.cpp:408] fc8 <- fc7

I0309 15:43:33.166087 8740 net.cpp:382] fc8 -> fc8

I0309 15:43:33.175087 8740 net.cpp:124] Setting up fc8

I0309 15:43:33.175087 8740 net.cpp:131] Top shape: 10 1000 (10000)

I0309 15:43:33.176087 8740 net.cpp:139] Memory required for data: 68641400

I0309 15:43:33.176087 8740 layer_factory.cpp:58] Creating layer prob

I0309 15:43:33.176087 8740 net.cpp:86] Creating Layer prob

I0309 15:43:33.176087 8740 net.cpp:408] prob <- fc8

I0309 15:43:33.176087 8740 net.cpp:382] prob -> prob

I0309 15:43:33.176087 8740 net.cpp:124] Setting up prob

I0309 15:43:33.176087 8740 net.cpp:131] Top shape: 10 1000 (10000)

I0309 15:43:33.176087 8740 net.cpp:139] Memory required for data: 68681400

I0309 15:43:33.176087 8740 net.cpp:202] prob does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] fc8 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] drop7 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu7 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] fc7 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] drop6 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu6 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] fc6 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] pool5 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu5 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] conv5 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu4 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] conv4 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu3 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] conv3 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] norm2 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] pool2 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu2 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] conv2 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] norm1 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] pool1 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] relu1 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] conv1 does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:202] data does not need backward computation.

I0309 15:43:33.176087 8740 net.cpp:244] This network produces output prob

I0309 15:43:33.176087 8740 net.cpp:257] Network initialization done.

mean-subtracted values: [('B', 104.0069879317889), ('G', 116.66876761696767), ('R', 122.6789143406786)]

predicted class is: 281

output label: n02123045 tabby, tabby cat

I0309 15:43:43.999706 8740 upgrade_proto.cpp:44] Attempting to upgrade input file specified using deprecated transformation parameters: E:/caffe-windows/models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel

I0309 15:43:43.999706 8740 upgrade_proto.cpp:47] Successfully upgraded file specified using deprecated data transformation parameters.

W0309 15:43:43.999706 8740 upgrade_proto.cpp:49] Note that future Caffe releases will only support transform_param messages for transformation fields.

I0309 15:43:43.999706 8740 upgrade_proto.cpp:53] Attempting to upgrade input file specified using deprecated V1LayerParameter: E:/caffe-windows/models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel

I0309 15:43:44.619742 8740 upgrade_proto.cpp:61] Successfully upgraded file specified using deprecated V1LayerParameter

I0309 15:43:44.827754 8740 net.cpp:746] Ignoring source layer loss
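The Top shape lines in the log follow directly from the layer parameters in the printed network definition (the batch dimension 10 comes from deploy.prototxt; the script reshapes it to 50 only after the net has been created). A convolution's output size is floor((n + 2·pad − kernel) / stride) + 1, while Caffe's pooling layer rounds up instead; for CaffeNet every division happens to be exact, so both give the same result. Each "Memory required for data" line is a running total of 4 bytes × element count over the top blobs. A sketch that reproduces these numbers:

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a Caffe Convolution layer (rounds down)."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a Caffe Pooling layer (rounds up)."""
    return int(math.ceil(float(size + 2 * pad - kernel) / stride)) + 1


LAYERS = ("data", "conv1", "pool1", "conv2", "pool2",
          "conv3", "conv4", "conv5", "pool5")


def caffenet_sizes(size=227):
    """Trace the spatial size through CaffeNet with deploy.prototxt's params.

    ReLU, LRN and Dropout layers keep the size, so they are skipped.
    """
    s = {"data": size}
    s["conv1"] = conv_out(s["data"], 11, stride=4)
    s["pool1"] = pool_out(s["conv1"], 3, stride=2)
    s["conv2"] = conv_out(s["pool1"], 5, pad=2)
    s["pool2"] = pool_out(s["conv2"], 3, stride=2)
    s["conv3"] = conv_out(s["pool2"], 3, pad=1)
    s["conv4"] = conv_out(s["conv3"], 3, pad=1)
    s["conv5"] = conv_out(s["conv4"], 3, pad=1)
    s["pool5"] = pool_out(s["conv5"], 3, stride=2)
    return s


def blob_bytes(*shape):
    """Bytes used by a float32 blob of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return 4 * count


if __name__ == "__main__":
    sizes = caffenet_sizes()
    for name in LAYERS:
        print("%-6s %d x %d" % (name, sizes[name], sizes[name]))
```

For example, the data blob is 10 × 3 × 227 × 227 = 1545870 floats, i.e. 6183480 bytes, which is the first "Memory required for data" value in the log.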

Generated .exe applications:

caffe.exe compute_image_mean.exe convert_imageset.exe

References:

1. http://blog.csdn.net/jnulzl/article/details/52077915 [Caffe for Python official tutorial (translation)]