Datawhale12月组队学习【推荐系统】Task01

paddle入门与NeutralCF实现

-

- 数据处理

- Dataset

-

- Dataloader

- 定义模型结构

- 模型训练

-

- Train Pipeline

- 指标

-

- hit_rate

- NDCG

- 可视化

数据处理

- 筛选出活跃度大于20的用户

df = pd.read_csv(config['valid_path'])

df['user_count'] = df['user_id'].map(df['user_id'].value_counts())

df = df[df['user_count']>20].reset_index(drop=True)

- 构建正样本字典

to_dict()方法将DataFrame/Series转换成dict{ user_id : [ item_id, item_id ] }

pos_dict = df.groupby('user_id')['item_id'].apply(list).to_dict()

- 构造训练样本&测试样本

训练样本:

- 正样本:采用用户的前n-1个历史记录

- 负样本:全局采样,数量为该用户正样本数量的ratio倍(3倍)

测试样本:

- 采用用户最后一个历史记录作为正样本

- 负样本:全局采样,每个用户负样本数为 100

样本集合:[ user_id, item_id, label ]

Dataset

重写 __getitem__方法

#Dataset构造

class BaseDataset(Dataset):

def __init__(self,df):

self.df = df

self.feature_name = ['user_id','item_id']

#数据编码

self.enc_data()

def enc_data(self):

#使用enc_dict对数据进行编码

self.enc_data = defaultdict(dict)

for col in self.feature_name:

self.enc_data[col] = paddle.to_tensor(np.array(self.df[col])).squeeze(-1)

def __getitem__(self, index):

data = dict()

for col in self.feature_name:

data[col] = self.enc_data[col][index]

if 'label' in self.df.columns:

data['label'] = paddle.to_tensor([self.df['label'].iloc[index]],dtype="float32").squeeze(-1)

return data

def __len__(self):

return len(self.df)

Dataloader

通过random_split切分数据集,但是需要传入子集合长度数组, 总和等于原数组长度。

from paddle.io import random_split

train_dataset = BaseDataset(train_df)

train_dataset, val_dataset = random_split(train_dataset, [40164, 10000])

test_dataset = BaseDataset(test_df)

#dataloader

train_loader = DataLoader(train_dataset,batch_size=config['batch'],shuffle=True,num_workers=0)

test_loader = DataLoader(test_dataset,batch_size=config['batch'],shuffle=False,num_workers=0)

# 迭代获得每个batch

for data in tqdm(train_loader):

# data:{user_id:[batch], item_id:[batch], label:[batch]}

定义模型结构

-

init

- 嵌入层:

nn.Embedding(input_dim, output_dim)输出:[batch, 1, emb_dim] loss_func:nn.BCELoss()

- 嵌入层:

-

forward:

- MLP_output:

nn.Sequential(layers)输出:[batch, emb_dim] - GMF_output:

paddle.multiply(user_emb, item_emb)输出:`[batch, emb_dim]`` - ``MLP_output

与GMF_output进行paddle.concat后,通过output_layer,得到输出y_pred`

- MLP_output:

class NCF(paddle.nn.Layer):

def __init__(self,

embedding_dim = 16,

vocab_map = None,

loss_fun = 'nn.BCELoss()'):

super(NCF, self).__init__()

self.embedding_dim = embedding_dim

self.vocab_map = vocab_map

self.loss_fun = eval(loss_fun) # self.loss_fun = paddle.nn.BCELoss()

self.user_emb_layer = nn.Embedding(self.vocab_map['user_id'],

self.embedding_dim)

self.item_emb_layer = nn.Embedding(self.vocab_map['item_id'],

self.embedding_dim)

self.mlp = nn.Sequential(

nn.Linear(2*self.embedding_dim,self.embedding_dim),

nn.ReLU(),

nn.BatchNorm1D(self.embedding_dim),

# nn.Linear(self.embedding_dim,1),

# nn.Sigmoid()

)

self.output = nn.Sequential(

nn.Linear(2*self.embedding_dim,1),

nn.Sigmoid()

)

def forward(self,data):

user_emb = self.user_emb_layer(data['user_id']) # [batch, 1, emb]

item_emb = self.item_emb_layer(data['item_id']) # [batch, 1, emb]

GMF = paddle.multiply(user_emb, item_emb).squeeze(1)

mlp_input = paddle.concat([user_emb, item_emb],axis=-1).squeeze(1)

mlp_output = self.mlp(mlp_input)

y_pred = self.output(paddle.concat([GMF, mlp_output], axis=-1))

# y_pred = self.mlp(mlp_input)

if 'label' in data.keys():

loss = self.loss_fun(y_pred.squeeze(),data['label'])

output_dict = {'pred':y_pred,'loss':loss}

else:

output_dict = {'pred':y_pred}

return output_dict

模型训练

声明:

- 模型

- optimizer:需要传入模型参数

parameters=model.parameters()和学习率learning_rate=config['lr']

model = NCF(embedding_dim=64,vocab_map=vocab_map)

optimizer = paddle.optimizer.Adam(parameters=model.parameters(), learning_rate=config['lr'])

train_metric_list = []

#模型训练流程

for i in range(config['epoch']):

# 模型训练

train_metirc = train_model(model,train_loader,optimizer=optimizer)

train_metric_list.append(train_metirc)

# 模型验证

if i+1 % 5 == 0:

val_metric = valid_model(model,val_loader)

print('valid : ', val_metric)

val_metric_list.append(val_metric)

print("Train Metric:")

print(train_metirc)

Train Pipeline

进入训练模式

model.train()反向传播,计算梯度

loss.backward()更新模型参数

optimizer.step()梯度清零

optimizer.clear_grad()

#训练模型,返回metrics

def train_model(model, train_loader, optimizer, metric_list=['roc_auc_score','log_loss']):

model.train()

pred_list = []

label_list = []

pbar = tqdm(train_loader)

# data 代表每个batch的数据

for data in pbar:

output = model(data)

pred = output['pred']

loss = output['loss']

loss.backward()

optimizer.step()

optimizer.clear_grad()

pred_list.extend(pred.squeeze(-1).cpu().detach().numpy())

label_list.extend(data['label'].squeeze(-1).cpu().detach().numpy())

pbar.set_description("Loss {}".format(loss.numpy()[0]))

# 每个eopch的指标

res_dict = dict()

for metric in metric_list:

if metric =='log_loss':

res_dict[metric] = log_loss(label_list,pred_list, eps=1e-7)

else:

res_dict[metric] = eval(metric)(label_list,pred_list)

return res_dict

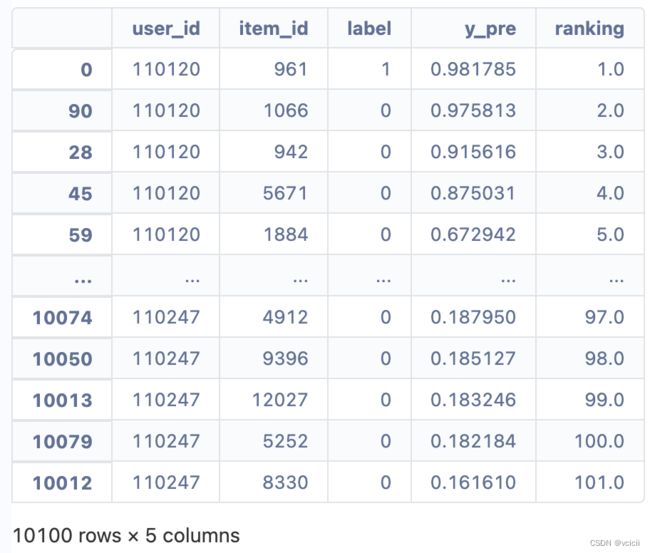

指标

测试样本中,每个用户只有一个正例,其他全为负样本。

按照模型计算的pred进行排序

y_pre = test_model(model,test_loader)

test_df['y_pre'] = y_pre

test_df['ranking'] = test_df.groupby(['user_id'])['y_pre'].rank(method='first', ascending=False)

test_df = test_df.sort_values(by=['user_id','ranking'],ascending=True)

hit_rate

只保留每个用户排名靠前的k个样本,进行求和,判断有多少正样本被成功召回

def hitrate(test_df,k=20):

user_num = test_df['user_id'].nunique()

test_gd_df = test_df[test_df['ranking']<=k].reset_index(drop=True)

return test_gd_df['label'].sum() / user_num

NDCG

计算公式:

NDCG = DCG / IDCG

idcg@k 一定为1

dcg@k 1/log_2(ranking+1) -> log(2)/log(ranking+1)

def ndcg(test_df,k=20):

user_num = test_df['user_id'].nunique()

test_gd_df = test_df[test_df['ranking']<=k].reset_index(drop=True)

# 取出所有正样本,根据正样本的rank计算DCG

test_gd_df = test_gd_df[test_gd_df['label']==1].reset_index(drop=True)

test_gd_df['ndcg'] = math.log(2) / np.log(test_gd_df['ranking']+1)

return test_gd_df['ndcg'].sum() / user_num

可视化

def plot_metric(metric_dict_list, metric_name):

epoch_list = [x for x in range(1,1+len(metric_dict_list))]

metric_list = [metric_dict_list[i][metric_name] for i in range(len(metric_dict_list))]

# 设置画布像素

plt.figure(dpi=100)

plt.plot(epoch_list, metric_list)

plt.xlabel('Epoch')

plt.ylabel(metric_name)

plt.title('Train Metric')

plt.show()

plot_metric(train_metric_list,'roc_auc_score')

plot_metric(train_metric_list,'log_loss')