Deploying PyTorch/MXNet Models with TensorRT

This post records the full workflow of deploying PyTorch/MXNet models with TensorRT, along with the pitfalls encountered on the way.

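TensorRT supports inference acceleration for TensorFlow UFF models, ONNX models, and custom-defined networks. For PyTorch there is a third-party project, torch2trt, but it requires the model to be defined in PyTorch first and then converted, so the model definition and the TensorRT conversion cannot be separated: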

import torch

from torch2trt import torch2trt

from torchvision.models.alexnet import alexnet

# create some regular pytorch model...

model = alexnet(pretrained=True).eval().cuda()

# create example data

x = torch.ones((1, 3, 224, 224)).cuda()

# convert to TensorRT feeding sample data as input

model_trt = torch2trt(model, [x])

Deployment with this approach still depends on the PyTorch environment, so I did not try it.

MXNet has an official interface for converting directly to TensorRT:

arg_params.update(aux_params)

all_params = dict([(k, v.as_in_context(mx.gpu(0))) for k, v in arg_params.items()])

executor = mx.contrib.tensorrt.tensorrt_bind(sym, ctx=mx.gpu(0), all_params=all_params,data=batch_shape, grad_req='null', force_rebind=True)

y_gen = executor.forward(is_train=False, data=input)

y_gen[0].wait_to_read()

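I did not try this either. The main goal is to decouple deployment so that only the TensorRT environment is required, without installing the whole deep-learning framework stack.

Both PyTorch and MXNet have official interfaces and documentation for exporting a model to ONNX, and they are simple to use.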

# MXNet to ONNX

import numpy as np

from mxnet.contrib import onnx as onnx_mxnet

sym = './resnet-50-symbol.json'

params = './resnet-50-0000.params'

input_shape = (1, 3, 224, 224)

onnx_file = './resnet-50.onnx'

converted_model_path = onnx_mxnet.export_model(sym, params, [input_shape], np.float32, onnx_file)

# PyTorch to ONNX

import torch

import torchvision

dummy_input = torch.randn(10, 3, 224, 224, device='cuda')

model = torchvision.models.alexnet(pretrained=True).cuda()

# Providing input and output names sets the display names for values

# within the model's graph. Setting these does not change the semantics

# of the graph; it is only for readability.

#

# The inputs to the network consist of the flat list of inputs (i.e.

# the values you would pass to the forward() method) followed by the

# flat list of parameters. You can partially specify names, i.e. provide

# a list here shorter than the number of inputs to the model, and we will

# only set that subset of names, starting from the beginning.

input_names = [ "actual_input_1" ] + [ "learned_%d" % i for i in range(16) ]

output_names = [ "output1" ]

torch.onnx.export(model, dummy_input, "alexnet.onnx", verbose=True, input_names=input_names, output_names=output_names)

Issues encountered when converting to ONNX

- The custom layer SegmentConsensus is not recognized

A custom layer has to be defined as a custom op when exporting from PyTorch to ONNX, and defined again when converting from ONNX to TensorRT. For a layer like this it is better to understand what it computes and re-implement it with basic operations. This layer is simple; I implemented it with mean and index_select.

- TracerWarning: There are 2 live references to the data region being modified when tracing in-place operator copy_ (possibly due to an assignment). This might cause the trace to be incorrect, because all other views that also reference this data will not reflect this change in the trace! On the other hand, if all other views use the same memory chunk, but are disjoint (e.g. are outputs of torch.split), this might still be safe

This warning says that the data being modified has two live references, so it cannot be traced reliably. The offending code is:

out[:, :-1, :fold] = x[:, 1:, :fold] # shift left

out[:, 1:, fold: 2 * fold] = x[:, :-1, fold: 2 * fold] # shift right

out[:, :, 2 * fold:] = x[:, :, 2 * fold:] # not shift

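From what I could find, the assignment target on the left is one reference and the slice is another, and the tracer cannot handle the two references. The fix is to replace the in-place slice assignment with out-of-place operations. My original note mentions index_select; the code below builds the result with torch.cat and zero padding: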

left_side = torch.cat((x[:, 1:, :fold], torch.zeros(1, 1, fold, h, w)), dim=1)

middle_side = torch.cat((torch.zeros(1, 1, fold, h, w), x[:, :n_segment - 1, fold: 2 * fold]), dim=1)

out = torch.cat((left_side, middle_side, x[:, :, 2 * fold:]), dim=2)
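To make the equivalence of the two versions easier to check, here is a minimal framework-free sketch. It uses plain nested lists of shape (T, C) instead of the real 5-D tensor, and the function names shift_inplace and shift_concat are hypothetical, introduced only for this illustration.

```python
def shift_inplace(x, fold):
    """Reference version: slice assignment into a zero-filled buffer.

    x is a list of T time steps, each a list of C channel values.
    """
    t, c = len(x), len(x[0])
    out = [[0.0] * c for _ in range(t)]
    for i in range(t - 1):
        out[i][:fold] = x[i + 1][:fold]                  # shift left in time
    for i in range(1, t):
        out[i][fold:2 * fold] = x[i - 1][fold:2 * fold]  # shift right in time
    for i in range(t):
        out[i][2 * fold:] = x[i][2 * fold:]              # not shifted
    return out


def shift_concat(x, fold):
    """Out-of-place version: build each channel group, then concatenate."""
    t = len(x)
    zeros = [0.0] * fold
    # Drop the first time step and pad one step of zeros at the end.
    left = [row[:fold] for row in x[1:]] + [zeros]
    # Pad one step of zeros at the start and drop the last time step.
    middle = [zeros] + [row[fold:2 * fold] for row in x[:t - 1]]
    rest = [row[2 * fold:] for row in x]
    # Concatenate the three groups along the channel axis.
    return [l + m + r for l, m, r in zip(left, middle, rest)]


x = [[float(10 * i + j) for j in range(8)] for i in range(4)]  # T=4, C=8
assert shift_inplace(x, fold=2) == shift_concat(x, fold=2)
```

The concatenation version never writes into an existing buffer, which is the property the tracer needs.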

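- Converting only part of a model to ONNX

The saved model may be a pretrained model of which only some layers are needed in practice. For MXNet you can select the output layer directly from the symbol and then convert (get_output_sym below is a helper whose definition is not shown in this post):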

sym, arg_params, aux_params = mx.model.load_checkpoint(pretrained, epoch)

sym = get_output_sym(sym, 'fc1_output')

arg_params.update(aux_params)

onnx_mx.export_model(sym, arg_params, input_shape, onnx_file_path=onnx_file_path, verbose=True)

For PyTorch you can subclass torch.nn.Module, pass the model in, and customize the forward pass yourself:

class ExtractFeature(torch.nn.Module):

    def __init__(self, cnn, frames=16):

        super().__init__()

        self.model = cnn

        self.num_segments = frames

        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    def forward(self, data):

        # st = time.time()

        # print('feature extracting start')

        n = self.model

        pool = torch.nn.MaxPool2d(3, 2)

        with torch.no_grad():

            input = data.view((-1, 3) + data.size()[-2:]).to(self.device)

            x = n.conv1(input)

            x = n.bn1(x)

            x = n.relu(x)

            x = n.maxpool(x)

            x = n.layer1(x)

            x = n.layer2(x)

            x = n.layer3(x)

            x = n.layer4(x)

            x = pool(x)

            x = x.flatten(start_dim=1)

            ndata = x

            data = ndata.view((-1, self.num_segments) + ndata.size()[1:])

        return data

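- The model is called through a method other than the default forward

A model inherits from torch.nn.Module, whose __call__ method lets an instance be called like a function; __call__ eventually dispatches to forward. How do you export to ONNX when the model is used through some other method, as in the following?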

out = net.forward_features(x)

Here forward_features is called explicitly. My first idea was to subclass the model and have forward delegate to that method, but it is simpler to rebind the method directly, like this:

OCR.forward = OCR.forward_ocr
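The effect of rebinding can be seen in a small sketch without PyTorch. The Module class below is a hypothetical stand-in that only mimics the dispatch from __call__ to forward; the real torch.nn.Module does more work (hooks, for example), so treat this as an illustration of the Python mechanism rather than of PyTorch internals.

```python
class Module:
    """Stand-in for torch.nn.Module: calling the instance dispatches to forward."""

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class OCR(Module):
    def forward(self, x):
        return ("forward", x)

    def forward_ocr(self, x):
        return ("forward_ocr", x)


net = OCR()
before = net(1)                # dispatches to the default forward

OCR.forward = OCR.forward_ocr  # rebind on the class, as in the line above
after = net(1)                 # the same call now runs forward_ocr

print(before, after)
```

Because the attribute is looked up on the class at call time, instances created before the rebinding are affected as well, so an exporter that simply calls the model ends up in forward_ocr.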

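- Exporting the operator GatherElements to ONNX opset version 9 is not supported

Opset 9 does not support this op, so raise the opset version. The highest version available at the time of writing was 12, and higher versions support more ops. The default opset_version was 9.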

torch.onnx.export(model, dummy_input, "alexnet.onnx", verbose=True, input_names=input_names, output_names=output_names,opset_version=11,)

- Dynamic input

Dynamic input covers the batch size as well as variable height and width. Use the dynamic_axes parameter to mark the variable dimensions:

torch.onnx.export(OCR, dummy_input ,onnx_ocr_forword_ocr_path,

input_names=['input'],

output_names=['segm_pred', 'segm_pred2', 'rbox', 'rbox2', 'angle', 'angle2', 'x'],

opset_version=11,

dynamic_axes={"input": {0: 'batch',2:'h', 3:'w'}})

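- Testing with onnxruntime

After conversion, check that the ONNX model produces the same output as the original model. I ran the model with onnxruntime first and hit the error can't load culib 10.1 at run time, meaning the CUDA library could not be found. The code and the official documentation state explicitly that only CUDA 10.1 is supported; if a different version is installed, install the matching one.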
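For the consistency check itself, a tolerance-based comparison is usually more useful than exact equality, because floating-point results differ slightly between runtimes. The sketch below is a framework-free illustration: it assumes both outputs have already been converted to nested Python lists (for example with tolist()), and the helper names and tolerance values are assumptions, not taken from the original workflow.

```python
def flatten(values):
    """Flatten arbitrarily nested lists/tuples of numbers into a flat list."""
    if isinstance(values, (list, tuple)):
        flat = []
        for item in values:
            flat.extend(flatten(item))
        return flat
    return [float(values)]


def outputs_match(reference, candidate, rtol=1e-3, atol=1e-5):
    """True if every element satisfies |cand - ref| <= atol + rtol * |ref|."""
    ref, cand = flatten(reference), flatten(candidate)
    if len(ref) != len(cand):
        return False
    return all(abs(c - r) <= atol + rtol * abs(r) for r, c in zip(ref, cand))


torch_out = [[0.1, 0.9]]          # hypothetical output of the original model
onnx_out = [[0.10001, 0.89999]]   # hypothetical output of the ONNX model
print(outputs_match(torch_out, onnx_out))
```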

Issues encountered with TensorRT

-

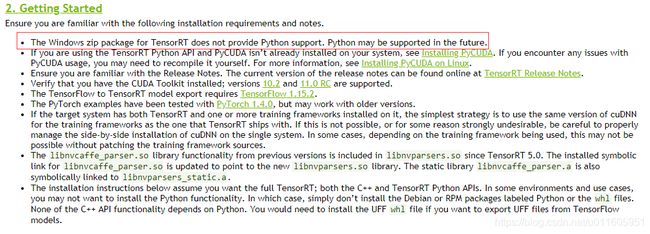

在tensorrt官网下载最新的tensorrt7.1版本,安装好后,配置环境变量,库里面都是so库,和一些c文件,无法import tensorrt,查看官网说明发现tensorrt 的Python接口是不支持Windows的,无法在Windows下用Python接口

- [TensorRT] ERROR: …/rtSafe/cuda/caskConvolutionRunner.cpp (290) - Cask Error in checkCaskExecError: 7 (Cask Convolution execution)

[TensorRT] ERROR: FAILED_EXECUTION: std::exception

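This happens because the engine was created in one thread and executed in another (multithreading was in use). Create and execute the engine in the same thread.

- [TensorRT] ERROR: …/rtSafe/cuda/cudaConvolutionRunner.cpp (303) - Cudnn Error in execute: 7 (CUDNN_STATUS_MAPPING_ERROR)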

[TensorRT] ERROR: FAILED_EXECUTION: std::exception

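After the engine has been created, do not use to(device) or cuda(). PyTorch and MXNet code moves the model and data to the GPU with such calls, and they must be removed.

- [TensorRT] WARNING: Explicit batch network detected and batch size specified, use execute without batch size instead.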

[TensorRT] ERROR: Parameter check failed at: engine.cpp::resolveSlots::1024, condition: allInputDimensionsSpecified(routine)

With a dynamic batch size, TensorRT cannot build the engine directly; an optimization profile has to be set up for the build:

profile = builder.create_optimization_profile()

profile.set_shape(

ModelData.INPUT_NAME,

ModelData.MIN_INPUT_SHAPE,

ModelData.OPT_INPUT_SHAPE,

ModelData.MAX_INPUT_SHAPE)

config.add_optimization_profile(profile)

engine = builder.build_engine(network,config)
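Before building, it can help to sanity-check the three shapes passed to set_shape. The sketch below encodes the constraints as I understand them: per dimension min <= opt <= max, and a dimension that is static in the network must have the same value in all three. The function name check_profile, the example shapes, and the requirement that sizes be at least 1 are assumptions of this sketch.

```python
def check_profile(network_shape, min_shape, opt_shape, max_shape):
    """Return True if (min, opt, max) form a consistent range for network_shape.

    network_shape uses -1 for dynamic dimensions.
    """
    shapes = (min_shape, opt_shape, max_shape)
    if any(len(s) != len(network_shape) for s in shapes):
        return False
    for dim, lo, opt, hi in zip(network_shape, min_shape, opt_shape, max_shape):
        if lo < 1 or not (lo <= opt <= hi):
            return False  # sizes must be ordered (and positive in this sketch)
        if dim != -1 and not (lo == opt == hi == dim):
            return False  # static dimensions may not vary across the profile
    return True


network_shape = (-1, 3, 224, 224)  # dynamic batch, hypothetical input
ok = check_profile(network_shape,
                   (1, 3, 224, 224), (8, 3, 224, 224), (32, 3, 224, 224))
bad = check_profile(network_shape,
                    (1, 3, 224, 224), (64, 3, 224, 224), (32, 3, 224, 224))
print(ok, bad)
```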

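- [TensorRT] ERROR: …/rtSafe/safeRuntime.cpp (25) - Cuda Error in allocate: 2 (out of memory)

This looks like GPU memory exhaustion, but watching memory usage live with nvidia-smi -l showed plenty of free memory. Debugging revealed that the buffer size being allocated was negative: with a dynamic batch size the first dimension becomes -1, so the computed size is negative and the allocation fails. Turn the negative value positive and multiply by batch_size when allocating the buffer: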

size = trt.volume(engine.get_binding_shape(binding)) * batch_size

if size < 0:

    size *= -1

dtype = trt.nptype(engine.get_binding_dtype(binding))

# Allocate host and device buffers

host_mem = cuda.pagelocked_empty(size, dtype)

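- Dynamic input: in object detection the input width and height are not fixed. As with the dynamic batch-size issue above, the profile must specify the minimum, typical, and maximum values of H/W. The width and height must also be passed in to allocate_buffers; otherwise those dimensions stay at -1 and the computed size is far too small. Sizes are computed and buffers allocated per binding, for inputs and outputs alike, so h_ and w_ have to be applied to the input and to the output separately: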

for binding in engine:

    bind = engine.get_binding_shape(binding)

    vol = trt.volume(bind)

    # size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

    if binding == 'input':

        size = trt.volume(engine.get_binding_shape(binding)) * batch_size * h_ * w_

    else:

        size = trt.volume(engine.get_binding_shape(binding)) * batch_size * math.ceil(h_ / 4) * math.ceil(w_ / 4)

    if size < 0:

        size *= -1

    dtype = trt.nptype(engine.get_binding_dtype(binding))

    # Allocate host and device buffers

    host_mem = cuda.pagelocked_empty(size, dtype)
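The arithmetic behind both buffer-size fixes can be checked without TensorRT. In the sketch below, volume computes the same product of dimensions that trt.volume does, and concrete_volume is a hypothetical helper that replaces every -1 with the runtime value instead of flipping the sign afterwards. The shapes are made up for illustration.

```python
def volume(shape):
    """Product of all dimensions (the same quantity trt.volume computes)."""
    result = 1
    for dim in shape:
        result *= dim
    return result


def concrete_volume(binding_shape, runtime_shape):
    """Element count for a binding once every -1 is replaced by its runtime value.

    binding_shape: shape reported for the binding, with -1 for dynamic dimensions.
    runtime_shape: the actual shape used for this inference call.
    """
    if len(binding_shape) != len(runtime_shape):
        raise ValueError("rank mismatch")
    for static, actual in zip(binding_shape, runtime_shape):
        if static != -1 and static != actual:
            raise ValueError("static dimension %d does not match %d" % (static, actual))
    return volume(runtime_shape)


# Dynamic batch only: the raw volume is negative, which is what broke the allocation.
assert volume((-1, 3, 224, 224)) == -150528

# Dynamic batch, height and width (hypothetical values).
batch_size, h_, w_ = 2, 640, 480
input_shape = (-1, 3, -1, -1)
size_from_post = abs(volume(input_shape)) * batch_size * h_ * w_
size_resolved = concrete_volume(input_shape, (batch_size, 3, h_, w_))
assert size_from_post == size_resolved == 1843200
```

Both approaches give the same element count here; resolving the shape explicitly has the advantage of failing loudly when a static dimension does not match.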

- [TensorRT] ERROR: instance normalization doesn’t support dynamic input

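Instance normalization does not support dynamic input, and because it is used in the object detection model it cannot be avoided. This can be treated as another unsupported-op problem: either define a custom op in ONNX and a matching plugin in TensorRT, or rewrite the op. I chose to rewrite the op, replacing the original instance_norm function in torch/onnx/symbolic_opset9.py with the following: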

@parse_args('v', 'v', 'v', 'v', 'v', 'i', 'f', 'f', 'i')

def instance_norm(g, input, weight, bias, running_mean, running_var, use_input_stats, momentum, eps,

                  cudnn_enabled):

    axes = [-i for i in range(2, 0, -1)]

    two_cst = g.op("Constant", value_t=torch.tensor(2.))

    eps_cst = g.op("Constant", value_t=torch.tensor(eps))

    mean = g.op("ReduceMean", input, axes_i=axes)

    numerator = sub(g, input, mean)

    # variance = e((x - e(x))^2), and (x - e(x)) is the numerator in the layer_norm formula

    variance = g.op("ReduceMean", pow(g, numerator, two_cst), axes_i=axes)

    denominator = sqrt(g, add(g, variance, eps_cst))

    inst_norm = div(g, numerator, denominator)

    if not (weight is None or weight.node().mustBeNone()):

        inst_norm = mul(g, inst_norm, weight)

    if not (bias is None or bias.node().mustBeNone()):

        inst_norm = add(g, inst_norm, bias)

    return inst_norm
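The symbolic function above decomposes instance normalization into a mean and a variance over H and W, followed by a per-channel scale and shift. As a reference for what the exported graph is meant to compute, here is a plain-Python sketch for a single sample stored as x[c][h][w]. The function and the sample values are illustrative assumptions, not part of the exporter.

```python
import math


def instance_norm_reference(x, weight, bias, eps=1e-5):
    """Instance normalization for one sample stored as x[c][h][w].

    Each channel is normalized with its own mean and variance over H and W,
    then scaled by weight[c] and shifted by bias[c].
    """
    out = []
    for c, channel in enumerate(x):
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        denominator = math.sqrt(variance + eps)
        out.append([[(v - mean) / denominator * weight[c] + bias[c] for v in row]
                    for row in channel])
    return out


x = [[[1.0, 2.0], [3.0, 4.0]],
     [[10.0, 10.0], [10.0, 30.0]]]
y = instance_norm_reference(x, weight=[1.0, 2.0], bias=[0.0, 5.0])

# After normalization each channel has mean bias[c].
channel_means = [sum(v for row in ch for v in row) / 4 for ch in y]
print(channel_means)
```

Note that weight and bias are indexed per channel, which is why their alignment with the channel axis matters in the exported graph.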

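- After rewriting instance_norm: mul elementwise dimension mismatch [1,256,4,8] and [1,1,1,256]

The multiplication fails on a dimension mismatch. The mul in question is the step that multiplies by the γ parameter after the mean and variance have been computed. Under PyTorch's broadcasting rules, tensors of different rank are brought to the same shape by expanding, but shapes are aligned from the trailing dimension: when the 1-D weight is promoted to four dimensions, the new dimensions are added in front, giving [1,1,1,256]. The last dimensions (8 and 256) differ and neither is 1, so they cannot be broadcast and the shapes do not match. The fix is to Unsqueeze the weight twice so that the channel ends up in the second dimension after broadcasting; the shapes then agree: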

@parse_args('v', 'v', 'v', 'v', 'v', 'i', 'f', 'f', 'i')

def instance_norm(g, input, weight, bias, running_mean, running_var, use_input_stats, momentum, eps,

                  cudnn_enabled):

    axes = [-i for i in range(2, 0, -1)]

    two_cst = g.op("Constant", value_t=torch.tensor(2.))

    eps_cst = g.op("Constant", value_t=torch.tensor(eps))

    mean = g.op("ReduceMean", input, axes_i=axes)

    numerator = sub(g, input, mean)

    # variance = e((x - e(x))^2), and (x - e(x)) is the numerator in the layer_norm formula

    variance = g.op("ReduceMean", pow(g, numerator, two_cst), axes_i=axes)

    denominator = sqrt(g, add(g, variance, eps_cst))

    inst_norm = div(g, numerator, denominator)

    if not (weight is None or weight.node().mustBeNone()):

        # [C] -> [C, 1, 1]; done inside the check so a missing weight is never unsqueezed

        weight = g.op("Unsqueeze", weight, axes_i=[-1])

        weight = g.op("Unsqueeze", weight, axes_i=[-1])

        inst_norm = mul(g, inst_norm, weight)

    if not (bias is None or bias.node().mustBeNone()):

        # [C] -> [C, 1, 1], same reasoning as for weight

        bias = g.op("Unsqueeze", bias, axes_i=[-1])

        bias = g.op("Unsqueeze", bias, axes_i=[-1])

        inst_norm = add(g, inst_norm, bias)

    return inst_norm
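The shape reasoning behind this fix can be reproduced with a few lines of plain Python. The sketch below implements trailing-dimension alignment as used by NumPy-style broadcasting; broadcast_shape is a hypothetical helper written for this illustration.

```python
def broadcast_shape(a, b):
    """Broadcast two shapes, aligning them from the trailing dimension.

    Raises ValueError when a pair of dimensions differs and neither is 1.
    """
    rank = max(len(a), len(b))
    a = (1,) * (rank - len(a)) + tuple(a)  # missing dimensions are added in front
    b = (1,) * (rank - len(b)) + tuple(b)
    out = []
    for x, y in zip(a, b):
        if x == y or y == 1:
            out.append(x)
        elif x == 1:
            out.append(y)
        else:
            raise ValueError("cannot broadcast %s with %s" % (a, b))
    return tuple(out)


feature = (1, 256, 4, 8)

# A 1-D weight of shape [256] is padded to [1, 1, 1, 256] and clashes with W=8.
try:
    broadcast_shape(feature, (256,))
    mismatch = False
except ValueError:
    mismatch = True

# After two Unsqueeze ops the weight is [256, 1, 1], i.e. [1, 256, 1, 1] once padded.
fixed = broadcast_shape(feature, (256, 1, 1))
print(mismatch, fixed)
```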