Detailed Tutorial: Adapting Spark 3 for CDH5 and Integrating Kyuubi



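- Preface
- References
- I. Build environment preparation
- II. Environment setup
  - 1. Maven environment (Java and Scala setup is not covered here)
  - 2. Upload and extract the files
  - 3. Configure settings.xml
    - 3.1 Configure the Maven local repository path
    - 3.2 Change the mirror to Aliyun
- III. Compiling the Spark source
  - 1. Download the source
  - 2. Modify incompatible code
    - 2.1 First change: the YARN module
    - 2.2 Second change: the Utils module
    - 2.3 Third change: the HttpSecurityFilter module
  - 3. Configure pom.xml
  - 4. Configure dev/make-distribution.sh
  - 5. Build Spark
    - 5.1 Build
    - 5.2 Verify
    - 5.3 Problems encountered and their fixes
- VI. Spark environment setup
- VII. Other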

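Preface

As Spark has evolved, the prebuilt packages on the official site no longer support older Hive versions. Our company needs Kyuubi, the open-source component from NetEase, but our production environment still runs CDH5 with Hive 1.1.0, so Spark 3 has to be recompiled to work with this older Hive.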

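References

Link: Self-compiling Spark 3.X with CDH 5.16.2 support (hadoop-2.6.0-cdh5.16.2) (自编译Spark3.X,支持CDH 5.16.2(hadoop-2.6.0-cdh5.16.2))

Link: Kyuubi in practice | Compiling Spark 3.1 for CDH5 and integrating Kyuubi (Kyuubi实践 | 编译Spark3.1以适配CDH5并集成Kyuubi)

Link: Compiling a CDH build of Spark with the Spark source scripts (使用spark源码脚本编译CDH版本spark)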

I. Build environment preparation

Versions used in this guide:

    java -version    # 1.8.0_181
    mvn -v           # Apache Maven 3.6.3
    scala -version   # 2.12.10
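To confirm the toolchain matches the versions above before starting a long build, the checks can be scripted. This is a minimal sketch, not part of the original procedure; it assumes GNU sort (for -V) and that each tool prints its usual version banner. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check the build tools against the versions listed above.
# Assumption: GNU sort (for -V), as on most Linux distributions.

# version_ge A B: succeed if version A >= version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Extract the bare version number from each tool's banner (read from stdin).
parse_java_version()  { sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1; }
parse_maven_version() { sed -n 's/.*Apache Maven \([0-9.]*\).*/\1/p' | head -n 1; }
parse_scala_version() { sed -n 's/.*version \([0-9.]*\).*/\1/p' | head -n 1; }

check_build_env() {
  local java_v mvn_v scala_v
  java_v=$(java -version 2>&1 | parse_java_version)
  mvn_v=$(mvn -v 2>/dev/null | parse_maven_version)
  scala_v=$(scala -version 2>&1 | parse_scala_version)
  echo "java=$java_v maven=$mvn_v scala=$scala_v"
  # Hard failure only for Maven; Java/Scala mismatches are reported as warnings.
  version_ge "$mvn_v" 3.6.3 || { echo "Maven 3.6.3+ expected" >&2; return 1; }
  case "$java_v" in 1.8.*) ;; *) echo "warning: JDK 8 expected" >&2 ;; esac
  case "$scala_v" in 2.12.*) ;; *) echo "warning: Scala 2.12 expected" >&2 ;; esac
}
```

After sourcing the file, run check_build_env; it prints the detected versions and returns non-zero if Maven is older than 3.6.3.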

II. Environment setup

1. Maven environment (Java and Scala setup is not covered here)

Download links:

- Maven: https://archive.apache.org/dist/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz
- Scala: https://downloads.lightbend.com/scala/2.12.10/scala-2.12.10.tgz

2. Upload and extract the files

Commands (example):

    mkdir -p /opt/inst
    # upload the archive, then extract it into this directory
    tar -zxvf apache-maven-3.6.3-bin.tar.gz -C /opt/inst/
    ln -s /opt/inst/apache-maven-3.6.3 /opt/inst/maven363
    vi /etc/profile

Add the following lines to /etc/profile, then save with :wq:

    export MAVEN_HOME=/opt/inst/maven363
    export PATH=$PATH:$MAVEN_HOME/bin
    # tune Maven's JVM options to avoid running out of memory during the build
    export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=1g"

Then reload the environment and check Maven:

    source /etc/profile
    mvn -v
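Re-running the profile edit by hand can leave duplicate export lines. A minimal sketch of an idempotent alternative, assuming it runs as root when the target is /etc/profile; append_once and setup_maven_profile are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: add the Maven environment lines to a profile file only if they are
# not already present, so re-running the setup does not duplicate them.

# append_once FILE LINE: append LINE to FILE unless an identical line exists.
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

setup_maven_profile() {
  local profile=${1:-/etc/profile}
  # Single quotes keep $PATH and $MAVEN_HOME literal in the written file.
  append_once "$profile" 'export MAVEN_HOME=/opt/inst/maven363'
  append_once "$profile" 'export PATH=$PATH:$MAVEN_HOME/bin'
  append_once "$profile" 'export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=1g"'
}
```

Usage: setup_maven_profile && source /etc/profile && mvn -v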

3. Configure settings.xml

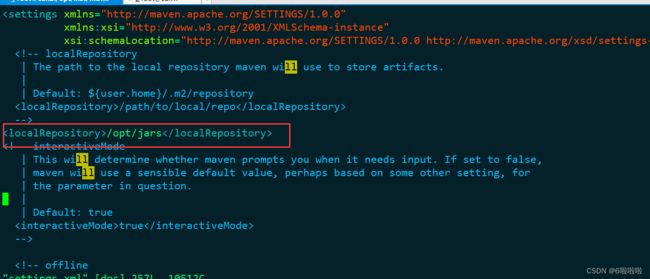

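3.1 Configure the Maven local repository path

    cd $MAVEN_HOME/conf
    vim settings.xml

Tip: press / in vim to search. Press /, type localRepository, press Enter, and add the following line:

    <localRepository>/opt/jars</localRepository>

The /opt/jars directory does not exist yet, so create it:

    mkdir -p /opt/jars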

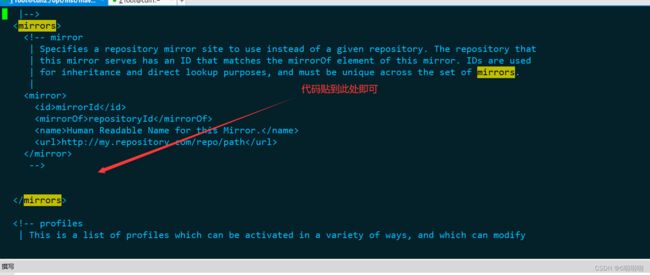

3.2 Change the mirror to Aliyun

Search the same way as in 3.1, this time for mirrors:

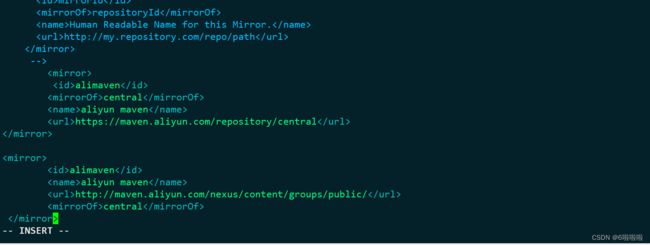

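Inside the mirrors section, add:

    <mirror>
      <id>alimaven</id>
      <mirrorOf>central</mirrorOf>
      <name>aliyun maven</name>
      <url>https://maven.aliyun.com/repository/central</url>
    </mirror>
    <mirror>
      <id>alimaven</id>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>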
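On a fresh Maven install, sections 3.1 and 3.2 can also be done by writing a minimal settings.xml instead of editing it in vim. A sketch, assuming the file has no other customizations (it is overwritten, so back up an existing one first) and keeping only the first Aliyun mirror above; write_maven_settings is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal Maven settings.xml with the local repository path
# (3.1) and the Aliyun central mirror (3.2). OVERWRITES the target file.

write_maven_settings() {
  local dest=$1 repo=${2:-/opt/jars}
  # Create the local repository directory and the settings directory.
  mkdir -p "$repo" "$(dirname "$dest")"
  cat > "$dest" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 https://maven.apache.org/xsd/settings-1.0.0.xsd">
  <localRepository>${repo}</localRepository>
  <mirrors>
    <mirror>
      <id>alimaven</id>
      <mirrorOf>central</mirrorOf>
      <name>aliyun maven</name>
      <url>https://maven.aliyun.com/repository/central</url>
    </mirror>
  </mirrors>
</settings>
EOF
}
```

Usage: cp $MAVEN_HOME/conf/settings.xml $MAVEN_HOME/conf/settings.xml.bak && write_maven_settings $MAVEN_HOME/conf/settings.xml /opt/jars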

III. Compiling the Spark source

1. Download the source

https://archive.apache.org/dist/spark/spark-3.1.3/spark-3.1.3.tgz

After downloading, extract the source:

    cd /home/ftp    # wherever you stored the downloaded file
    mkdir -p /bi_bigdata/user_shell/spark
    tar -zxvf spark-3.1.3.tgz -C /bi_bigdata/user_shell/spark/
    cd /bi_bigdata/user_shell/spark/spark-3.1.3
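A sketch that wraps the download and extraction so it can be re-run safely. It assumes curl is installed and the archive URL layout shown above, saves the tarball in the current directory, and skips all work when the extracted source directory already exists. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: download and unpack a Spark source release, skipping work already done.

# spark_src_url VERSION -> archive URL in the layout used above
spark_src_url() {
  echo "https://archive.apache.org/dist/spark/spark-$1/spark-$1.tgz"
}

fetch_spark_src() {
  local version=$1 dest=${2:-/bi_bigdata/user_shell/spark}
  local tarball="spark-$version.tgz"
  if [ -d "$dest/spark-$version" ]; then
    echo "already extracted: $dest/spark-$version"
    return 0
  fi
  mkdir -p "$dest"
  # Download only if the tarball is not already in the current directory.
  [ -f "$tarball" ] || curl -fSL -o "$tarball" "$(spark_src_url "$version")" || return 1
  tar -zxf "$tarball" -C "$dest"
}
```

Usage: cd /home/ftp && fetch_spark_src 3.1.3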

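2. Modify incompatible code

2.1 First change: the YARN module

    vim resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala

Search for the keyword sparkConf.get(ROLLED_LOG_INCLUDE_PATTERN).foreach. Comment out the original block through the end of the method, then add the replacement below it: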

    /* commented out
      sparkConf.get(ROLLED_LOG_INCLUDE_PATTERN).foreach { includePattern =>
        try {
          val logAggregationContext = Records.newRecord(classOf[LogAggregationContext])
          logAggregationContext.setRolledLogsIncludePattern(includePattern)
          sparkConf.get(ROLLED_LOG_EXCLUDE_PATTERN).foreach { excludePattern =>
            logAggregationContext.setRolledLogsExcludePattern(excludePattern)
          }
          appContext.setLogAggregationContext(logAggregationContext)
        } catch {
          case NonFatal(e) =>
            logWarning(s"Ignoring ${ROLLED_LOG_INCLUDE_PATTERN.key} because the version of YARN " +
              "does not support it", e)
        }
      }
      appContext.setUnmanagedAM(isClientUnmanagedAMEnabled)
      sparkConf.get(APPLICATION_PRIORITY).foreach { appPriority =>
        appContext.setPriority(Priority.newInstance(appPriority))
      }
      appContext
    }
    */

    /* replacement */
      sparkConf.get(ROLLED_LOG_INCLUDE_PATTERN).foreach { includePattern =>
        try {
          val logAggregationContext = Records.newRecord(classOf[LogAggregationContext])
          // These two methods were added in Hadoop 2.6.4, so we still need to use reflection to
          // avoid compile error when building against Hadoop 2.6.0 ~ 2.6.3.
          val setRolledLogsIncludePatternMethod =
            logAggregationContext.getClass.getMethod("setRolledLogsIncludePattern", classOf[String])
          setRolledLogsIncludePatternMethod.invoke(logAggregationContext, includePattern)
          sparkConf.get(ROLLED_LOG_EXCLUDE_PATTERN).foreach { excludePattern =>
            val setRolledLogsExcludePatternMethod =
              logAggregationContext.getClass.getMethod("setRolledLogsExcludePattern", classOf[String])
            setRolledLogsExcludePatternMethod.invoke(logAggregationContext, excludePattern)
          }
          appContext.setLogAggregationContext(logAggregationContext)
        } catch {
          case NonFatal(e) =>
            logWarning(s"Ignoring ${ROLLED_LOG_INCLUDE_PATTERN.key} because the version of YARN " +
              "does not support it", e)
        }
      }
      appContext
    }

2.2 Second change: the Utils module

    vim core/src/main/scala/org/apache/spark/util/Utils.scala

Replace the Hadoop import, then update the unpack method as follows:

    // comment out
    //import org.apache.hadoop.util.{RunJar, StringUtils}
    // replace with
    import org.apache.hadoop.util.RunJar

    def unpack(source: File, dest: File): Unit = {
      // StringUtils.toLowerCase cannot be resolved against Hadoop 2.6.0, so the StringUtils
      // import is dropped and the file name is lower-cased directly. Locale.ROOT keeps the
      // result independent of the JVM's default locale.
      // val lowerSrc = StringUtils.toLowerCase(source.getName)
      val lowerSrc = source.getName.toLowerCase(java.util.Locale.ROOT)
      if (lowerSrc.endsWith(".jar")) {
        RunJar.unJar(source, dest, RunJar.MATCH_ANY)
      } else if (lowerSrc.endsWith(".zip")) {
        FileUtil.unZip(source, dest)
      } else if (
        lowerSrc.endsWith(".tar.gz") || lowerSrc.endsWith(".tgz") || lowerSrc.endsWith(".tar")) {
        FileUtil.unTar(source, dest)
      } else {
        logWarning(s"Cannot unpack $source, just copying it to $dest.")
        copyRecursive(source, dest)
      }
    }

2.3 Third change: the HttpSecurityFilter module

    vim core/src/main/scala/org/apache/spark/ui/HttpSecurityFilter.scala

In parameterMap, pass stripXSS to map explicitly as a function; the first two comment lines quote the compiler message this change addresses:

    private val parameterMap: Map[String, Array[String]] = {
      super.getParameterMap().asScala.map { case (name, values) =>
        // Unapplied methods are only converted to functions when a function type is expected.
        // You can make this conversion explicit by writing `stripXSS _` or `stripXSS(_)` instead of `stripXSS`.
        // stripXSS(name) -> values.map(stripXSS)
        stripXSS(name) -> values.map(stripXSS(_))
      }.toMap
    }

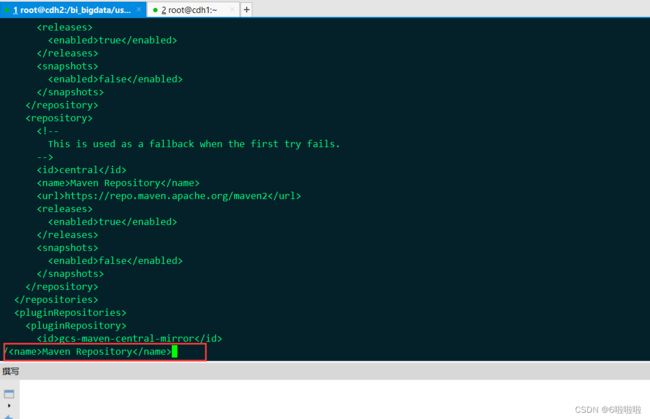
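Because a missed or unsaved edit only shows up deep into the build (see 5.3), a quick check before compiling can help. A sketch, run from the Spark source root; the marker strings are taken from the edited code above, and require_text and check_spark_patches are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: confirm the three source edits from section 2 are present before building.

# require_text FILE TEXT: fail with a message if TEXT does not occur in FILE.
require_text() {
  if ! grep -qF -- "$2" "$1"; then
    echo "MISSING in $1: $2" >&2
    return 1
  fi
}

check_spark_patches() {
  local root=${1:-.} rc=0
  # 2.1: reflection-based call in Client.scala
  require_text "$root/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala" \
    'getMethod("setRolledLogsIncludePattern", classOf[String])' || rc=1
  # 2.2: lower-casing without StringUtils in Utils.scala
  require_text "$root/core/src/main/scala/org/apache/spark/util/Utils.scala" \
    'source.getName.toLowerCase' || rc=1
  # 2.3: explicit function conversion in HttpSecurityFilter.scala
  require_text "$root/core/src/main/scala/org/apache/spark/ui/HttpSecurityFilter.scala" \
    'values.map(stripXSS(_))' || rc=1
  return $rc
}
```

Usage: cd /bi_bigdata/user_shell/spark/spark-3.1.3 && check_spark_patches && echo "all edits present"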

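3. Configure pom.xml

Open pom.xml from the source root:

    cd /bi_bigdata/user_shell/spark/spark-3.1.3
    vi pom.xml

Configure the central repository as follows:

    <repository>
      <id>central</id>
      <name>Maven Repository</name>
      <url>https://mvnrepository.com/repos/central</url>
      <releases>
        <enabled>true</enabled>
      </releases>
      <snapshots>
        <enabled>false</enabled>
      </snapshots>
    </repository>

Search for the keyword:

and add the following repositories:

    <repository>
      <id>aliyun</id>
      <name>Nexus Release Repository</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
      <releases>
        <enabled>true</enabled>
      </releases>
      <snapshots>
        <enabled>true</enabled>
      </snapshots>
    </repository>
    <repository>
      <id>cloudera</id>
      <name>cloudera Repository</name>
      <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
    </repository>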

Search for the keyword:

hadoop2.6.0-cdh5.13.1

hadoop2.6.0-cdh5.13.1

2.7.1

2.4

servlet-api

Save and quit with :wq.

4. Configure dev/make-distribution.sh

Open the script from the source root:

    cd /bi_bigdata/user_shell/spark/spark-3.1.3
    vi dev/make-distribution.sh

Search for SPARK_HADOOP_VERSION, hard-code the values below, and comment out the original lines that query Maven for them:

    # Spark version string, used to name the build output. The source tree is 3.1.3,
    # but the rest of this guide assumes 3.1.2, so keep the two consistent if you change it.
    VERSION=3.1.2
    # Scala version
    SCALA_VERSION=2.12
    # Hadoop version
    SPARK_HADOOP_VERSION=2.6.0-cdh5.13.1
    # enable Hive
    SPARK_HIVE=1

    # original lines, commented out
    #VERSION=$("$MVN" help:evaluate -Dexpression=project.version $@ \
    #    | grep -v "INFO"\
    #    | grep -v "WARNING"\
    #    | tail -n 1)
    #SCALA_VERSION=$("$MVN" help:evaluate -Dexpression=scala.binary.version $@ \
    #    | grep -v "INFO"\
    #    | grep -v "WARNING"\
    #    | tail -n 1)
    #SPARK_HADOOP_VERSION=$("$MVN" help:evaluate -Dexpression=hadoop.version $@ \
    #    | grep -v "INFO"\
    #    | grep -v "WARNING"\
    #    | tail -n 1)
    #SPARK_HIVE=$("$MVN" help:evaluate -Dexpression=project.activeProfiles -pl sql/hive $@ \
    #    | grep -v "INFO"\
    #    | grep -v "WARNING"\
    #    | fgrep --count "hive ";\
    #    # Reset exit status to 0, otherwise the script stops here if the last grep finds nothing\
    #    # because we use "set -o pipefail"
    #    echo -n)

Also replace the MVN definition so that the script uses the local Maven installation:

    # original line, commented out
    #MVN="$SPARK_HOME/build/mvn"
    # local Maven installation
    MVN=/opt/inst/maven363/bin/mvn
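With VERSION hard-coded and --name passed at build time, the output tarball name is predictable, which the later steps rely on. A sketch, assuming the script names the archive spark-$VERSION-bin-$NAME.tgz (consistent with its TARDIR_NAME line); dist_tarball and hardcoded_version are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: predict the tarball make-distribution.sh will produce.

# dist_tarball VERSION NAME -> expected archive file name
dist_tarball() {
  local version=$1 name=$2
  echo "spark-${version}-bin-${name}.tgz"
}

# hardcoded_version SCRIPT -> value of the first uncommented VERSION=x.y.z line
hardcoded_version() {
  sed -n 's/^VERSION=\([0-9][0-9.]*\)$/\1/p' "$1" | head -n 1
}
```

For example, TGZ=$(dist_tarball "$(hardcoded_version dev/make-distribution.sh)" 2.6.0-cdh5.13.1) yields spark-3.1.2-bin-2.6.0-cdh5.13.1.tgz with the settings above, so a later VERSION change cannot silently break the mv and tar commands in 5.2.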

5. Build Spark

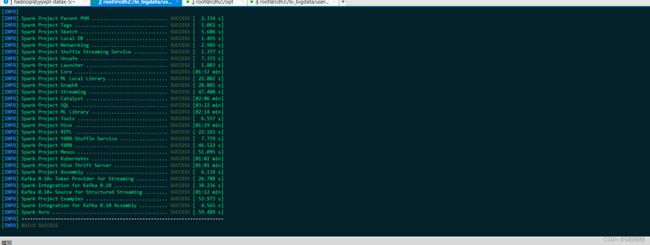

5.1 Build

    /bi_bigdata/user_shell/spark/spark-3.1.3/dev/make-distribution.sh \
      --name 2.6.0-cdh5.13.1 --tgz \
      -Phive -Phive-thriftserver -Pmesos -Pyarn -Pkubernetes \
      -Phadoop2.6.0-cdh5.13.1 -Dhadoop.version=2.6.0-cdh5.13.1 -Dscala.version=2.12.10

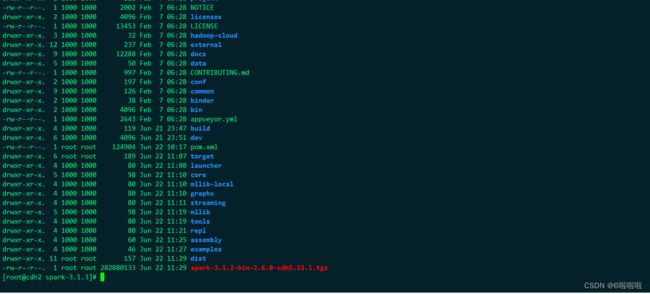

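When the build finishes, list the source directory sorted by modification time; the new .tgz should be the last entry:

    ll -rt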
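To avoid retyping the Hadoop version in four places, the build command can be wrapped so that every CDH-specific flag comes from one variable. A sketch with hypothetical variables SPARK_SRC, HADOOP_VER and DRY_RUN; the flags are exactly those of the command above, it assumes the pom.xml profile id follows the hadoop<version> pattern used there, and the log file name is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: run the build with all CDH-specific flags derived from one variable.
# DRY_RUN=1 prints the command instead of running it.

build_spark() {
  local src=${SPARK_SRC:-/bi_bigdata/user_shell/spark/spark-3.1.3}
  local hv=${HADOOP_VER:-2.6.0-cdh5.13.1}
  local -a cmd
  cmd=("$src/dev/make-distribution.sh" --name "$hv" --tgz
       -Phive -Phive-thriftserver -Pmesos -Pyarn -Pkubernetes
       "-Phadoop$hv" "-Dhadoop.version=$hv" -Dscala.version=2.12.10)
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
    return 0
  fi
  # Same JVM settings as in section II, unless MAVEN_OPTS is already set.
  export MAVEN_OPTS="${MAVEN_OPTS:--Xmx2g -XX:ReservedCodeCacheSize=1g}"
  # Keep the full output in a timestamped log; return the build's status, not tee's.
  "${cmd[@]}" 2>&1 | tee "build-$(date +%Y%m%d%H%M%S).log"
  return "${PIPESTATUS[0]}"
}
```

Run DRY_RUN=1 build_spark first to compare the printed command with the one above, then build_spark for the real build.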

5.2 Verify

    mv spark-3.1.2-bin-2.6.0-cdh5.13.1.tgz /home/ftp/
    mkdir -p /opt/soft
    tar -zxvf /home/ftp/spark-3.1.2-bin-2.6.0-cdh5.13.1.tgz -C /opt/soft
    cd /opt/soft/spark-3.1.2-bin-2.6.0-cdh5.13.1/jars/
    # check that the bundled Hadoop jars are the 2.6.0-cdh5.13.1 versions
    ll *hadoop*
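Instead of eyeballing the ll output, a sketch that checks every hadoop-*.jar in the jars directory carries the CDH version string. It assumes the usual hadoop-<module>-<version>.jar naming; check_hadoop_jars is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: verify that all bundled Hadoop jars match the expected CDH version.

check_hadoop_jars() {
  local jars_dir=$1 expected=${2:-2.6.0-cdh5.13.1}
  local all others
  all=$(find "$jars_dir" -maxdepth 1 -name 'hadoop-*.jar' | wc -l)
  # Hadoop jars whose names do not end in -<expected>.jar
  others=$(find "$jars_dir" -maxdepth 1 -name 'hadoop-*.jar' ! -name "hadoop-*-${expected}.jar")
  if [ "$all" -eq 0 ]; then
    echo "no hadoop-*.jar found in $jars_dir" >&2
    return 1
  fi
  if [ -n "$others" ]; then
    echo "jars not built for $expected:" >&2
    echo "$others" >&2
    return 1
  fi
  echo "all $all hadoop jars are $expected"
}
```

Usage: check_hadoop_jars /opt/soft/spark-3.1.2-bin-2.6.0-cdh5.13.1/jars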

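5.3 Problems encountered and their fixes

Problem 1:

1. Ruled out a pom.xml configuration problem.
2. Ruled out an environment-variable problem.
3. Solved. Cause: the incompatible-code changes had not been saved, or had been mistyped or left incomplete.

Problem 2 (solved): a jar failed to download, so download it manually:

    http://maven.aliyun.com/nexus/content/groups/public/net/bytebuddy/byte-buddy/1.8.15/byte-buddy-1.8.15.jar

After downloading, upload it to this directory in the local repository:

    /opt/jars/net/bytebuddy/byte-buddy/1.8.15/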
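The manual download above follows Maven's standard repository layout: the group ID with dots turned into directories, then the artifact ID, the version, and artifact-version.jar. A sketch that builds that path and downloads into the local repository from 3.1, assuming wget is installed and the Aliyun public URL above serves the artifact; depending on the failure, the matching .pom may be needed as well. LOCAL_REPO, REMOTE_BASE and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: put one artifact that Maven failed to download into the local repository.

# m2_jar_path GROUP_ID ARTIFACT_ID VERSION -> relative jar path in a Maven repository
m2_jar_path() {
  local group_path
  group_path=$(printf '%s' "$1" | tr . /)
  echo "$group_path/$2/$3/$2-$3.jar"
}

fetch_into_repo() {
  local repo=${LOCAL_REPO:-/opt/jars}
  local base=${REMOTE_BASE:-http://maven.aliyun.com/nexus/content/groups/public}
  local rel dir
  rel=$(m2_jar_path "$1" "$2" "$3")
  dir="$repo/$(dirname "$rel")"
  mkdir -p "$dir"
  # Maven can leave *.lastUpdated markers after a failed download; clear them so it retries.
  rm -f "$dir"/*.lastUpdated
  # Download to a temporary name so a failed transfer never leaves a broken .jar.
  if wget -q -O "$repo/$rel.part" "$base/$rel"; then
    mv "$repo/$rel.part" "$repo/$rel"
  else
    rm -f "$repo/$rel.part"
    return 1
  fi
}
```

Usage: fetch_into_repo net.bytebuddy byte-buddy 1.8.15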

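Problem 3: the error clearly shows that /bi_bigdata/user_shell/spark/spark-3.1.3/resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/Client.scala was modified incorrectly. Redo the source change as described in 2.1.

Problem 4: the build ran out of memory. Fix: give the build more memory. make-distribution.sh only applies its default MAVEN_OPTS when the variable is not already set (the ${MAVEN_OPTS:-...} form):

    export MAVEN_OPTS="${MAVEN_OPTS:--Xmx2g -XX:ReservedCodeCacheSize=512m}"
    BUILD_COMMAND=("$MVN" -T 1C clean package -DskipTests $@)
    TARDIR_NAME=spark-$VERSION-bin-$NAME

So set Maven's JVM options in the environment (for example in /etc/profile, as in section II) to avoid running out of memory:

    export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=1g"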

VI. Spark environment setup

Edit the profile:

    vi /etc/profile

Add the following lines:

    export SPARK_HOME=/opt/soft/spark-3.1.2-bin-2.6.0-cdh5.13.1
    export PATH=$PATH:$SPARK_HOME/bin

Then reload the environment:

    source /etc/profile
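Running Spark on YARN and reading Hive tables also needs the cluster's client configuration. A sketch, assuming the usual CDH client-config locations /etc/hadoop/conf and /etc/hive/conf (adjust them for your gateway node): Spark reads HADOOP_CONF_DIR or YARN_CONF_DIR to find YARN, and hive-site.xml in $SPARK_HOME/conf to find the metastore. setup_spark_conf is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: give Spark the cluster's Hadoop/YARN and Hive client configuration.

setup_spark_conf() {
  local spark_home=${1:-$SPARK_HOME}
  local hadoop_conf=${2:-/etc/hadoop/conf}
  local hive_conf=${3:-/etc/hive/conf}
  local env_file="$spark_home/conf/spark-env.sh"
  mkdir -p "$spark_home/conf"
  # Append the config dirs once; -s hides the error when spark-env.sh does not exist yet.
  grep -qs '^export HADOOP_CONF_DIR=' "$env_file" ||
    printf 'export HADOOP_CONF_DIR=%s\nexport YARN_CONF_DIR=%s\n' \
      "$hadoop_conf" "$hadoop_conf" >> "$env_file"
  # Spark SQL picks up the Hive metastore settings from hive-site.xml in $SPARK_HOME/conf.
  if [ -f "$hive_conf/hive-site.xml" ]; then
    cp "$hive_conf/hive-site.xml" "$spark_home/conf/"
  else
    echo "warning: $hive_conf/hive-site.xml not found" >&2
    return 1
  fi
}
```

Usage: setup_spark_conf "$SPARK_HOME"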

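Run the following test commands:

    # run spark-shell locally
    spark-shell
    # run spark-shell on YARN in client mode
    spark-shell --master yarn --deploy-mode client --executor-memory 1G --num-executors 2
    # run spark-sql on YARN; like spark-shell, spark-sql only supports client deploy mode
    spark-sql --master yarn --deploy-mode client --executor-memory 1G --num-executors 2
    # then run some Spark code against data in Hive tables; if it works, the setup is complete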
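For a non-interactive check, spark-sql's -e option runs a single SQL string and exits, so its exit status shows whether Spark reached YARN and the Hive metastore. A sketch; the query and resource sizes are placeholders, and SPARK_SQL is a hypothetical override for the binary.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive smoke test of Spark SQL on YARN (client mode).

smoke_test() {
  local query=${1:-"show databases;"}
  if "${SPARK_SQL:-spark-sql}" --master yarn --deploy-mode client \
       --executor-memory 1G --num-executors 2 -e "$query"; then
    echo "smoke test passed"
  else
    echo "smoke test FAILED" >&2
    return 1
  fi
}
```

Usage: smoke_test, or smoke_test "select count(*) from some_db.some_table;" with a table of your own.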

VII. Other

The Kyuubi setup will be added here later.