Spark之日志数据清洗及分析(详细解说)

日志数据清洗及分析

1、数据清洗

基本步骤:

- 按照

Tab切割数据 - 过滤掉字段数量少于8个的数据

- 按照第一列和第二列对数据进行去重

- 过滤掉状态码非200的数据

- 过滤掉

event_time为空的数据 - 将

url按照&以及=切割 - 保存数据:将数据写入

mysql表中

日志拆分字段:

- event_time

- url

- method

- status

- sip

- user_uip

- action_prepend

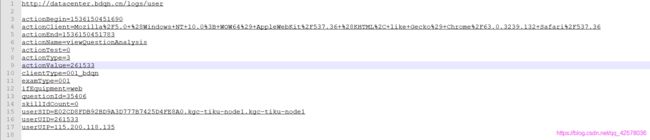

- action_client

如下是日志中的一条数据按照Tab分隔后的示例,每一行代表一个字段,分别以上一一对应

val spark: SparkSession = SparkSession.builder()

.master("local[*]")

.appName("clearDemo")

.getOrCreate()

val sc: SparkContext = spark.sparkContext

import spark.implicits._

val linesRdd: RDD[String] = sc.textFile("in/test.log")

1.2、切割数据生成RDD

按照分隔符Tab(即\t)切割日志中的每个字段,并过滤掉字段不等于8的数据

val rdd: RDD[Row] = linesRdd.map(x => x.split("\t"))

.filter(x => x.length == 8)

.map(x => Row(x(0).trim, x(1).trim, x(2).trim, x(3).trim, x(4).trim, x(5).trim, x(6).trim, x(7).trim))

1.3、拉取RDD中的每一个字段,再转成DataFrame

- 首先创建一个

schema(表结构),即每个字段名为表头 - 然后创建 DataFrame

val schema = StructType(

Array(

StructField("event_time", StringType),

StructField("url", StringType),

StructField("method", StringType),

StructField("status", StringType),

StructField("sip", StringType),

StructField("user_uip", StringType),

StructField("action_prepend", StringType),

StructField("action_client", StringType)

)

)

val orgDF: DataFrame = spark.createDataFrame(rdd,schema)

orgDF.show 的运行结果如下:

1.4、数据清洗过滤

- 按照第一列和第二列对数据进行去重

- 过滤掉状态码为非200的数据

- 过滤掉

event_time为空的数据

val ds1: Dataset[Row] = orgDF.dropDuplicates("event_time", "url")

.filter(x => x(3) == "200")

.filter(x => StringUtils.isNotEmpty(x(0).toString))

// .filter(x=>x(0).equals("")==false) //同上

1.5、拆分url列数据

- 将

url按照&以及=切割 - 注意 DataFrame 不能超过22列

val dfDetail: DataFrame = ds1.map((row: Row) => {

val urlArray: Array[String] = row.getAs[String]("url").split("\\?")

// val uelArray2: Array[String] = row(1).toString.split("\\?")

var map: Map[String, String] = Map("params" -> "null")

if (urlArray.length == 2) {

map = urlArray(1)

.split("&")

.map((x: String) => x.split("="))

.filter((_: Array[String]).length == 2)

.map((x: Array[String]) => (x(0), x(1)))

.toMap

}

(row.getAs[String]("event_time"),

map.getOrElse("actionBegin", ""),

map.getOrElse("actionClient", ""),

map.getOrElse("actionName", ""),

map.getOrElse("actionTest", ""),

map.getOrElse("actionType", ""),

map.getOrElse("actionValue", ""),

map.getOrElse("clientType", ""),

map.getOrElse("examType", ""),

map.getOrElse("ifEquipment", ""),

map.getOrElse("isFromContinue", ""),

map.getOrElse("skillIdCount",""),

map.getOrElse("skillLevel",""),

map.getOrElse("testType",""),

map.getOrElse("userSID", ""),

map.getOrElse("userUID", ""),

map.getOrElse("userUIP", ""),

row.getAs[String]("method"),

row.getAs[String]("status"),

row.getAs[String]("sip"),

// row.getAs[String]("user_uip"),

row.getAs[String]("action_prepend"),

row.getAs[String]("action_client"))

}).toDF()

1.6、数据清洗完成

- 现将上面的

dfDetail转成rdd - 创建表结构

detailschema - 最后生成

DataFrame完成数据清洗

val detailRdd: RDD[Row] = dfDetail.rdd

val detailschema = StructType(

Array(

StructField("event_time", StringType),

StructField("actionBegin", StringType),

StructField("actionClient", StringType),

StructField("actionName", StringType),

StructField("actionTest", StringType),

StructField("actionType", StringType),

StructField("actionValue", StringType),

StructField("clientType", StringType),

StructField("examType", StringType),

StructField("ifEquipment", StringType),

StructField("isFromContinue", StringType),

StructField("skillIdCount", StringType),

StructField("skillLevel", StringType),

StructField("testType", StringType),

StructField("userSID", StringType),

StructField("userUID", StringType),

StructField("userUIP", StringType),

StructField("method", StringType),

StructField("status", StringType),

StructField("sip", StringType),

// StructField("user_uip", StringType),

StructField("action_prepend", StringType),

StructField("action_client", StringType)

)

)

val detailDF: DataFrame = spark.createDataFrame(detailRdd,detailschema)

1.7、保存数据

- 将清理完成的数据保存到

mysql数据库中

//创建mysql连接

val url = "jdbc:mysql://192.168.8.99:3306/kb09db"

val prop = new Properties()

prop.setProperty("user","root")

prop.setProperty("password","ok")

prop.setProperty("driver","com.mysql.jdbc.Driver")

print("开始写入mysql")

//overwrite--->覆盖

//append--->追加

detailDF.write.mode("overwrite").jdbc(url,"logdetail",prop)

detailDF.write.mode("overwrite").jdbc(url,"orgDF",prop)

print("写入mysql完成")

2、数据分析

用户留存分析

计算用户的次日留存率

- 求当天新增用户总数n

- 求当天新增的用户ID与次日登录的用户ID的交集,得出新增用户次日登录总数m (次日留存数)

- m/n*100%

2.1、读取mysql数据

- 读取 mysql 中清洗好的数据表

val spark: SparkSession = SparkSession.builder()

.master("local[*]")

.appName("UserAnalysis")

.getOrCreate()

val sc: SparkContext = spark.sparkContext

import spark.implicits._

//创建mysql连接

val url = "jdbc:mysql://192.168.8.99:3306/kb09db"

val prop = new Properties()

prop.setProperty("user","root")

prop.setProperty("password","ok")

prop.setProperty("driver","com.mysql.jdbc.Driver")

//读取清洗好的数据表

val detailDF: DataFrame = spark.read.jdbc(url,"logdetail",prop)

2.2、自定义时间戳函数

- 先截取年月日部分,舍弃其余部分

- 再将截取的年月日转换成时间戳形式

val changeTime: UserDefinedFunction = spark.udf.register("changeTime", (x: String) => {

val time: Long = new SimpleDateFormat("yyyy-MM-dd")

.parse(x.substring(0, 10))

.getTime

time

})

2.3、注册用户信息

- 过滤出所有注册用户信息

- 利用时间戳函数将时间转换成时间戳

- 查询出行为

Registered的注册时间、userUID - 去重,即同一用户注册一次

val registerDF: DataFrame = detailDF.filter(detailDF("actionName") === "Registered")

.select("userUID","event_time", "actionName")

.withColumnRenamed("event_time","register_time")

.withColumnRenamed("userUID","regUID")

//时间戳转换,并去重

val registerDF2: DataFrame = registerDF.select($"regUID"

, changeTime($"register_time").as("register_date")

, $"actionName").distinct()

2.4、用户登录信息

- 过滤出所有用户登录信息

- 利用时间戳函数将时间转换成时间戳

- 查询出行为

Registered的注册时间、userUID - 去重,即计算留存率,同一用户在一天内的登录次数只能算一个用户

val signinDF: DataFrame = detailDF.filter(detailDF("actionName") === "Signin")

.select("userUID","event_time", "actionName")

.withColumnRenamed("event_time","signin_time")

.withColumnRenamed("userUID","sigUID")

.distinct()

val signinDF2: Dataset[Row] = signinDF.select($"sigUID",

changeTime($"signin_time").as("signin_date")

, $"actionName").distinct()

2.5、合并用户注册表和用户登录表

- 注意此处用

join默认连接

val joinDF: DataFrame = registerDF2

.join(signinDF2,signinDF2("sigUID")===registerDF2("regUID"))

2.6、次日留存人数

- 计算一日的时间戳:86400000=246060*1000

- 计算一日留存人数

- 修改列名

val frame: DataFrame = joinDF

.filter(joinDF("register_date") === joinDF("signin_date") - 86400000)

.groupBy($"register_date").count()

.withColumnRenamed("count","sigcount")

2.7、注册人数

- count 计算注册的人数

- 修改列名

val frame1: DataFrame = registerDF2.groupBy($"register_date").count()

.withColumnRenamed("count","regcount")

2.8、合并留存人数表和注册人数表

val frame2: DataFrame = frame.join(frame1, "register_date")

合并后的表如下:

+-------------+--------+--------+

|register_date|sigcount|regcount|

+-------------+--------+--------+

|1535990400000| 355| 381|

+-------------+--------+--------+

2.9、计算留存率

- 通过一日留存人数和注册人数计算留存率

- 注意数据类型,做除法计算是需要

.toDouble,将其转换为Double类型

frame2.map(x=>(x.getAs[Long]("register_date")

, x.getAs[Long]("sigcount")

, x.getAs[Long]("regcount")

, x.getAs[Long]("sigcount").toDouble/x.getAs[Long]("regcount")

))

最后结果:

+-------------+---+---+-----------------+

| _1| _2| _3| _4|

+-------------+---+---+-----------------+

|1535990400000|355|381|0.931758530183727|

+-------------+---+---+-----------------+