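- Exploring Ensemble Methods in Python: Stacking

Echo_Wish

Python notes, Python algorithms, python, programming language

In machine learning, stacking is an advanced ensemble learning method: the predictions of several base models are fed as new features into a meta-model, improving the overall model's performance and robustness. This article explains how stacking works, how it is implemented, and how to apply it in Python. What is stacking? Stacking, also known as stacked generalization, is a model ensembling method that, unlike bagging and boosting, does not directly…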

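- [Python] Stacking: A Powerful Ensemble Learning Method

音乐学家方大刚

Python, python, ensemble learning, programming language

"We've all found our angels / we promised not to hide what's on our minds / we do everything together, too happy for words / bad tempers turned into good communication / we've all found our angels / we agreed to look after each other's happiness / the sunny hillside, the future you sketched / how did you copy what was in my head" (Fiona Sit 薛凯琪, 《找到天使了》). In machine learning, the performance of a single model may be limited by its own weaknesses and by the data. To address this, we can use ensemble learning, which combines the predictions of multiple base models to improve the accuracy and robustness of the overall model. Stacki…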

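- The Stacking Algorithm: The Ultimate Weapon of Ensemble Learning

civilpy

algorithms, ensemble learning, machine learning

In the arena of machine learning, ensemble methods are known for their excellent performance. Among them, stacking (stacked generalization), an advanced ensemble technique, has been called "the ultimate weapon of ensemble learning". This article explains the principle and implementation of stacking and offers practical tips and best practices. 1. How stacking works: the core idea is to train several different base models and use their predictions as the input features of a new model, thereby…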

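- Ensemble Learning (Part 1): The Bagging Ensemble Method

万事可爱^

Machine learning cultivation journey, #supervised learning, ensemble learning, machine learning, artificial intelligence, Bagging, random forest

1. What is ensemble learning? In machine learning, no model is flawless. As in the parable of the blind men and the elephant, a single model can often capture only one aspect of the data. But when we pool the wisdom of several models, we can piece the whole picture back together like a jigsaw puzzle. Next we introduce one such "jigsaw" algorithm: ensemble learning. Ensemble learning is a machine learning technique that combines the predictions of multiple models (often called "weak learners" or "base models") to build a stronger, more accurate learner. The main idea of this approach is…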
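The bagging idea (train several base models on bootstrap resamples of the training set, then combine them by voting) can be sketched in a few lines of plain Python. This is an illustrative sketch, not the article's code: the 1-nearest-neighbour base learner, the helper names `bootstrap_sample`, `fit_1nn`, and `bagging_predict`, and the toy data are all assumptions.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) points with replacement (one bootstrap resample)."""
    return [rng.choice(data) for _ in data]

def fit_1nn(train):
    """Hypothetical base learner: 1-nearest-neighbour on a single numeric feature."""
    def predict(x):
        _feature, label = min(train, key=lambda point: abs(point[0] - x))
        return label
    return predict

def bagging_predict(data, x, n_models=15, seed=0):
    """Fit n_models base learners on bootstrap resamples, then majority-vote."""
    rng = random.Random(seed)
    models = [fit_1nn(bootstrap_sample(data, rng)) for _ in range(n_models)]
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy 1-D data: class "a" clusters near 0, class "b" near 10
data = [(0.0, "a"), (1.0, "a"), (2.0, "a"), (8.0, "b"), (9.0, "b"), (10.0, "b")]
print(bagging_predict(data, 1.5))  # a
print(bagging_predict(data, 9.5))  # b
```

Random forests follow the same pattern, but use decision trees as base learners and additionally sample a random subset of features at each split.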

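- [Ensemble Learning] Stacking: Principle and Python Implementation

Geeksongs

machine learning, python, deep learning, artificial intelligence, algorithms

Stacking is widely used in machine learning competitions and performs especially well in competitions on structured (tabular) data. Today we introduce the principle behind stacking, a heavy hitter of model blending; code is provided at the end of the post. In general, stacking is a form of learning on "labels" (the predictions of other models), with these characteristics. Usage: the models predict on the training set via cross-validation, enabling second-level learning. Advantage: different kinds of models can be combined. Disadvantage: it increases time overhead, and…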
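The "predict on the training set via cross-validation" step is what keeps the meta-model from learning on leaked predictions. A minimal pure-Python sketch under stated assumptions: two toy regressors (a mean predictor and a one-feature least-squares line) as base models, K-fold out-of-fold predictions as meta-features, and a least-squares linear blender as the meta-model. All function names are hypothetical; real projects would typically use a library implementation instead.

```python
def fit_mean(xs, ys):
    """Base model 1: always predicts the mean of the training targets."""
    m = sum(ys) / len(ys)
    return lambda x: m

def fit_line(xs, ys):
    """Base model 2: one-feature ordinary least squares, y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    return lambda x: a * x + (my - a * mx)

def kfold_indices(n, k):
    """Split range(n) into k contiguous folds of near-equal size."""
    size, rest = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        end = start + size + (1 if i < rest else 0)
        folds.append(list(range(start, end)))
        start = end
    return folds

def out_of_fold(fit, xs, ys, k):
    """Predict each training point with a model that was fitted without it."""
    preds = [0.0] * len(xs)
    for fold in kfold_indices(len(xs), k):
        held_out = set(fold)
        model = fit([x for i, x in enumerate(xs) if i not in held_out],
                    [y for i, y in enumerate(ys) if i not in held_out])
        for i in fold:
            preds[i] = model(xs[i])
    return preds

def fit_blender(f1, f2, ys):
    """Meta-model: least-squares weights (w1, w2) for y ~ w1*f1 + w2*f2,
    solved from the 2x2 normal equations (no intercept, for brevity)."""
    a11 = sum(p * p for p in f1)
    a12 = sum(p * q for p, q in zip(f1, f2))
    a22 = sum(q * q for q in f2)
    b1 = sum(p * y for p, y in zip(f1, ys))
    b2 = sum(q * y for q, y in zip(f2, ys))
    det = a11 * a22 - a12 * a12
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det

def stack(xs, ys, k=5):
    # Level 1: out-of-fold predictions become the meta-model's training features
    oof_mean = out_of_fold(fit_mean, xs, ys, k)
    oof_line = out_of_fold(fit_line, xs, ys, k)
    w1, w2 = fit_blender(oof_mean, oof_line, ys)
    # At prediction time: refit the base models on all data, then blend
    mean_model, line_model = fit_mean(xs, ys), fit_line(xs, ys)
    return lambda x: w1 * mean_model(x) + w2 * line_model(x)

xs = list(range(10))
ys = [2 * x + 1 for x in xs]          # toy data, exactly linear
model = stack(xs, ys)
print(round(model(10), 6))            # 21.0
```

If the base models predicted on points they had been trained on, the meta-model would learn to trust overfit predictions; the out-of-fold step avoids that, at the cost of fitting every base model K extra times.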

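- A Workaround for Using ssh-copy-id on Windows

爱编程的喵喵

Windows tips, windows, ssh, ssh-copy-id, solution

Hi, I'm 爱编程的喵喵. I hold bachelor's and master's degrees from "985" universities and currently work as a full-stack engineer, with a passion for applying data thinking to work and life. I work on machine learning and related front-end and back-end development, and have placed near the top several times in competitions run by Alibaba Cloud, iFLYTEK, CCF, and others. I am a CSDN blog expert and a recognized quality creator in the AI field. I enjoy summarizing what I learn through blogging, which both deepens my own understanding and helps newcomers get started quickly. This article mainly explains how to use ssh-copy-… on Windows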

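- YOLO Series: A Basic Understanding of YOLO

是十一月末

YOLO, python, programming language, yolo

Contents: 1 YOLO (1.1 Concept, 1.2 Algorithm); 2 Single-stage vs. multi-stage (2.1 FLOPs and FPS, 2.2 One-stage, 2.3 Two-stage). 1 YOLO. 1.1 Concept: YOLO (You Only Look Once) is a deep-learning-based object detection algorithm proposed by Joseph Redmon et al. in 2016. Its core idea is to turn object detection into a regression problem, using a single neural network to directly predict object classes and locations…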
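In YOLOv1 (the 2016 paper), the image is divided into an S × S grid; the cell containing an object's center is responsible for it, the box center is regressed as an offset within that cell, and width and height are regressed relative to the whole image. That target encoding is what "object detection as regression" boils down to for a single box. The sketch below assumes S = 7 and a 448 × 448 input as in YOLOv1; the function names are hypothetical, and confidence scores and class probabilities are omitted.

```python
def encode_box(cx, cy, w, h, img_w, img_h, S=7):
    """Map a pixel-space box (center cx, cy; size w, h) to YOLOv1-style targets.

    Returns (row, col, x_off, y_off, w_rel, h_rel): the grid cell responsible for
    the box, the center offset inside that cell (0..1), and the size relative to
    the whole image (0..1).
    """
    gx, gy = cx / img_w * S, cy / img_h * S      # center in grid units
    col = min(int(gx), S - 1)                     # clamp centers on the right/bottom edge
    row = min(int(gy), S - 1)
    return row, col, gx - col, gy - row, w / img_w, h / img_h

def decode_box(row, col, x_off, y_off, w_rel, h_rel, img_w, img_h, S=7):
    """Inverse of encode_box: recover the pixel-space center and size."""
    cx = (col + x_off) / S * img_w
    cy = (row + y_off) / S * img_h
    return cx, cy, w_rel * img_w, h_rel * img_h

target = encode_box(224, 100, 64, 128, img_w=448, img_h=448)
print(target[:2])                      # (1, 3): row 1, column 3 of the 7 x 7 grid
print(decode_box(*target, 448, 448))   # approximately (224, 100, 64, 128)
```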

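- PyTorch Basics (Part 1): A Complete Training Workflow Example

苏雨流丰

machine learning, pytorch, artificial intelligence, python, deep learning

Contents: Tutorial; 1. Data processing; 2. Defining the network model; 3. Loss function, optimization, training, and evaluation; 4. Saving, loading, and inference. Tutorial: most machine learning workflows involve working with data, creating models, optimizing model parameters, and saving the trained models. This tutorial introduces a complete ML workflow implemented in PyTorch, with links to learn more about each of these concepts. We will use the FashionMNIST dataset to train a neural network that predicts whether an input image belongs to one of the following…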

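- Bayesian Networks in Machine Learning: How to Build an Efficient Risk Prediction Model

AI天才研究院

DeepSeek R1 & big data, AI, large models, natural language processing, artificial intelligence, language models, programming practice, programming language, architecture design

Author: Zen and the Art of Computer Programming. Contents: 1. Background; 2. Basic concepts and terminology (2.1 Markov random fields; 2.2 Conditional random fields (CRF); 2.3 The variable elimination algorithm; 2.4 Bayesian networks); 3. Core algorithm principles, concrete steps, and mathematical formulas (3.1 Principles: 1. Bayesian network basics; 2. Building risk…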
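A Bayesian network factorises a joint distribution into one conditional probability table per node. The article's outline lists variable elimination; as a smaller illustration of the same factorisation, here is exact inference by plain enumeration on a hypothetical three-node chain (Smoker → Disease → Test). All probabilities are made up for illustration.

```python
from itertools import product

# Hypothetical conditional probability tables for a chain Smoker -> Disease -> Test
P_S = {1: 0.3, 0: 0.7}                                       # P(S = s)
P_D_GIVEN_S = {1: {1: 0.2, 0: 0.8}, 0: {1: 0.05, 0: 0.95}}   # P(D = d | S = s), indexed [s][d]
P_T_GIVEN_D = {1: {1: 0.9, 0: 0.1}, 0: {1: 0.1, 0: 0.9}}     # P(T = t | D = d), indexed [d][t]

def joint(s, d, t):
    """The network's factorisation: P(s, d, t) = P(s) * P(d | s) * P(t | d)."""
    return P_S[s] * P_D_GIVEN_S[s][d] * P_T_GIVEN_D[d][t]

def posterior_disease(t_observed):
    """P(D = 1 | T = t_observed): sum out the hidden variable S, then normalise over D."""
    unnormalised = {d: sum(joint(s, d, t_observed) for s in (0, 1)) for d in (0, 1)}
    return unnormalised[1] / (unnormalised[0] + unnormalised[1])

# Sanity check: the joint distribution sums to 1 over all 8 assignments
assert abs(sum(joint(s, d, t) for s, d, t in product((0, 1), repeat=3)) - 1.0) < 1e-12
print(round(posterior_disease(1), 4))   # 0.4858
```

Enumeration is exponential in the number of hidden variables; variable elimination computes the same posterior by summing variables out one at a time and caching intermediate factors.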

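- A Practical Guide to Growing Personal Wealth in the AI Era: The Complete Path from Zero Background to Mastery and Monetization

A达峰绮

artificial intelligence

(Drawing on how AI technology develops and on the underlying logic of the internet economy, this article builds a systematic AI application framework for ordinary practitioners.) 1. Build a foundation of AI understanding: grasp the technology and master the tools. Categories of technology: AI tools fall into four functional modules: natural language processing (text generation, conversational interaction), computer vision (image and video processing), data analysis (predictive modeling), and automation and control (process optimization). Beginners are advised to learn the basic operation of language tools first and then expand gradually to other areas. How the tools work: general-purpose AI tools usually consist of three core functional modules: an input interface…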

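- A Learning Roadmap for Large Language Models: From Beginner to Practice

大模型官方资料

language models, learning, artificial intelligence, product manager, natural language processing, search engines

In artificial intelligence, large language models (LLMs) are fast becoming a hot topic. This roadmap aims to give learners with basic Python programming and deep learning knowledge a clear, systematic guide to large models, helping you grow quickly in this field. The roadmap was last updated in February 2024; some content or tools may need updating later. Intended audience: learners who have mastered Python basics and have basic deep learning knowledge. Learning steps: this roadmap will, through four core…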

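- Deep Learning and Object Detection Series (6) (approx. 45,000 characters) | A Complete Reading and Reproduction of ResNet | PyTorch |

小酒馆燃着灯

deep learning, object detection, pytorch, artificial intelligence, ResNet, residual connections, residual networks

Contents: Reading: Abstract (translation, close reading, main points); Introduction (translation, close reading, background); Related Work: Residual Representations (translation, close reading, main points), Shortcut Connections (translation, close reading, main points); Deep Residual Learning: Residual Learning (translation, close reading; the goal of ResNet, earlier approaches, this paper's improvement, the essence…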

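- Deep Learning and Object Detection Series (3) (approx. 40,000 characters) | A Complete Reading and Reproduction of AlexNet | PyTorch |

小酒馆燃着灯

deep learning, object detection, pytorch, AlexNet, artificial intelligence

Contents: Reading: Abstract (translation, close reading, main points); 1. Introduction (translation, close reading; main points; main contributions of the paper); 2. The Dataset (translation, close reading; main points; an introduction to ImageNet; image preprocessing); 3. The Architecture: 3.1 ReLU Nonlinearity (translation, close reading; traditional methods and their shortcomings; the paper's improvement; results of the improvement); 3.2 Training on Multiple G…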
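When reading an architecture section, the spatial size of each feature map follows from one formula: output = floor((W − K + 2P) / S) + 1 for input width W, kernel K, padding P, and stride S. A quick check on AlexNet's first stages: the paper describes 224 × 224 inputs, but an 11 × 11 kernel with stride 4 only yields the reported 55 × 55 maps for a 227 × 227 input, which is why many reimplementations use 227. The padding of 2 for conv2 follows common reimplementations and is an assumption here.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1

# AlexNet's first stages: 11x11/4 conv, 3x3/2 overlapping max-pool, 5x5 conv, 3x3/2 max-pool
s = conv_output_size(227, 11, stride=4)        # conv1 -> 55
s = conv_output_size(s, 3, stride=2)           # pool1 -> 27
s = conv_output_size(s, 5, padding=2)          # conv2 -> 27
s = conv_output_size(s, 3, stride=2)           # pool2 -> 13
print(s)  # 13

print(conv_output_size(224, 11, stride=4))     # 54, not the 55 reported in the paper
```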

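- Tinyflow AI Workflow Orchestration Framework v0.0.7 Released

自不量力的A同学

artificial intelligence

There is currently no specific information about the release of version 0.0.7 of the Tinyflow AI workflow orchestration framework. Tinyflow is a lightweight orchestration solution for AI agent workflows whose design philosophy is "simple, flexible, and non-intrusive". It is built on Web Components: on the front end it can be integrated with React, Vue, or any other framework, and on the back end it supports Java, Node.js, Python, and other languages, helping traditional applications move to AI quickly. The codebase is lightweight and easy to learn, and it handles both simple task orchestration and complex multimodal reasoning…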

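- Managing and Using a Vector Database with Milvus

qahaj

milvus, databases, python

Technical background: in today's AI and machine learning applications, handling and managing large numbers of embedding vectors is a common need. Milvus is an open-source vector database built to store, index, and manage the large-scale embedding vectors produced by deep neural networks and other machine learning models. Its high performance and ease of use make it a strong choice for vector data. Core principles: Milvus's core capabilities lie in vector indexing and search. It supports multiple index algorithms, including IVF and HNSW, allowing efficient similarity search over large-scale vectors…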
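IVF (inverted file) indexes, one of the index types mentioned above, partition vectors into buckets around centroids and scan only the closest buckets at query time. A toy pure-Python sketch, not Milvus's API: the `IVFIndex` class and its methods are hypothetical, the centroids are supplied rather than trained with k-means as a real IVF index would do, and `nprobe` simply mirrors the name commonly used for this search parameter.

```python
import math

class IVFIndex:
    """Toy inverted-file (IVF) index over small float vectors."""

    def __init__(self, centroids):
        # Assumption: centroids are supplied; a real IVF index learns them with k-means
        self.centroids = centroids
        self.buckets = [[] for _ in centroids]   # one inverted list per centroid

    def _closest_centroids(self, vector, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: math.dist(vector, self.centroids[i]))
        return order[:n]

    def add(self, vec_id, vector):
        """Store the vector in the bucket of its nearest centroid."""
        self.buckets[self._closest_centroids(vector, 1)[0]].append((vec_id, vector))

    def search(self, query, k=1, nprobe=1):
        """Scan only the nprobe closest buckets and return the k nearest ids."""
        candidates = [item for c in self._closest_centroids(query, nprobe)
                      for item in self.buckets[c]]
        candidates.sort(key=lambda item: math.dist(query, item[1]))
        return [vec_id for vec_id, _ in candidates[:k]]

index = IVFIndex(centroids=[(0.0, 0.0), (10.0, 10.0)])
for vec_id, vec in {"a": (1.0, 0.0), "b": (0.0, 2.0), "c": (9.0, 9.0), "d": (11.0, 10.0)}.items():
    index.add(vec_id, vec)
print(index.search((10.0, 9.0), k=1))            # ['c']
print(index.search((0.5, 0.0), k=2, nprobe=2))   # ['a', 'b']
```

With `nprobe=1` the search is approximate: a true nearest neighbour that was assigned to a different bucket is missed. Raising `nprobe` trades speed for recall.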

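- Physics No Longer Exists? The Nobel Prize in Physics Goes to Artificial Intelligence

资讯新鲜事

artificial intelligence

On October 8, 2024, the Royal Swedish Academy of Sciences announced that the 2024 Nobel Prize in Physics would be awarded to John J. Hopfield, professor at Princeton University in the United States, and Geoffrey E. Hinton, professor at the University of Toronto in Canada, "for foundational discoveries and inventions that enable machine learning with artificial neural networks". In a phone interview, Hinton said: "I had no idea this would happen." To be honest, before the announcement nobody else saw it coming either, because in outside predictions, this year's Nobel Prize in Physics…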

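- Vector Retrieval, Retrieval-Augmented Generation (RAG), Large Language Models, and Related System Architectures: Typical Interview Questions with Brief Answers

快撑死的鱼

Algorithm engineer's handbook (essential for interviews and learning the latest tech), language models, system architecture, interviews

1. What is vector retrieval, and how does it differ from traditional keyword-based retrieval? Key points: vector retrieval maps data such as text, images, and audio to vectors and searches by similarity or distance in a high-dimensional vector space. Compared with traditional keyword-based retrieval (e.g., inverted indexes), vector retrieval focuses on "semantics" or "features" and can find content that is semantically similar without containing the same keywords. It is well suited to multimodal scenarios (e.g., searching images by image) and natural-language question answering (synonyms, contextual associations, etc.). 2. What is retrieval-augmented generation (RAG)? Core…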

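- Exploring Astra DB Integration with LangChain: From Vector Stores to Chat History

eahba

databases, langchain, python

Technical background: Astra DB is a serverless vector database from DataStax, built on Apache Cassandra® and exposed through an easy-to-use JSON API. What sets Astra DB apart is its strong vector storage capability, which is especially valuable for natural language processing tasks. Its integration with LangChain gives developers a powerful toolchain, from data storage to semantic caching to self-query retrieval, that simplifies complex data operations. Core principles: LangCha…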

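- Exploring Computer Vision: How Do Beauty SDKs Use Deep Learning to Improve Beautification and Filters?

美狐美颜sdk

beauty SDK, beauty API, live-streaming beauty SDK, computer vision, deep learning, live-streaming beauty SDK, beauty sdk, third-party beauty sdk, beauty api

Today, computer vision combined with deep learning is reshaping beautification technology: intelligent face detection, AI filters, deep skin smoothing, and real-time optimization make the results more natural, precise, and personalized. So how do beauty SDKs use deep learning to optimize beautification and filters? This article analyzes how AI is applied in beautification technology and discusses future trends. 1. How does deep learning empower beauty SDKs? 1. AI face detection and landmark recognition, precisely locating facial features: beautification first requires precise detection of the face position and facial landmarks so that the effect is not distorted. Deep lear…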

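- PHP Crawler in Practice: Scraping Taobao Product Detail Data

EcomDataMiner

php, web crawler, programming language

As internet technology develops, data scraping has increasingly become an important prerequisite skill in fields such as data analysis and machine learning, and crawler technology is indispensable to it. PHP, a widely used back-end language, is also widely used in crawling and has its own advantages there. This article uses scraping Douyu live-streaming data as an example to show PHP crawling in practice. Preparation: before crawling, some preparation is needed. First, set up a local server environment; integrated tools such as WAMP or XAMPP are recommended for deploying PHP easily. Second, we…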

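- A Panoramic Guide to Evaluating and Optimizing Deep Learning Model Performance

niuTaylor

deep learning, artificial intelligence

1. The compute-performance metrics system. 1. Comparison of core compute metrics:

| Metric | How it is calculated | Typical use | Hardware constraint |
|---|---|---|---|
| TOPS (Tera Operations Per Second) | Trillions of integer operations per second | Quantized-model inference | NVIDIA Jetson Nano supports only FP16/FP32 |
| TFLOPS (Tera FLoating-point OPerations per Second) | TFLOPS = Cores × FLOPs/Cycle × Frequen… | | |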
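The TFLOPS formula in the table is straightforward arithmetic. A sketch with illustrative numbers for a hypothetical GPU with 128 cores, each completing one fused multiply-add (counted as 2 FLOPs) per cycle at 921.6 MHz. The result is a theoretical peak; measured throughput on real models is typically lower because of memory bandwidth and utilization limits.

```python
def peak_tflops(cores, flops_per_cycle, clock_hz):
    """Theoretical peak: Cores x FLOPs/Cycle x Frequency, converted to TFLOPS."""
    return cores * flops_per_cycle * clock_hz / 1e12

# Illustrative: 128 cores, one fused multiply-add (2 FLOPs) per core per cycle, 921.6 MHz
fp32 = peak_tflops(128, 2, 921.6e6)
print(f"{fp32:.4f} TFLOPS")   # 0.2359 TFLOPS
```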

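- A High-Accuracy Handwritten Digit Recognition Solution with Python and Deep Learning: A Full Walkthrough from Data Preprocessing to Model Optimization

快撑死的鱼

Python algorithms explained, python, deep learning, programming language

Among the many application areas of artificial intelligence, handwritten digit recognition is a classic task with significant practical value. With the rapid development of deep learning, building and training neural network models has pushed recognition accuracy above 99%. Using Python as the main language together with core deep learning techniques, this article explains in detail how handwritten digit recognition is implemented and discusses how to further optimize the model to improve…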

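- Design and Application Examples of Deep Convolutional Neural Networks in Reinforcement Learning

数字扫地僧

computer vision, cnn, artificial intelligence, neural networks

I. Introduction: reinforcement learning (RL) is an important branch of machine learning that learns an optimal policy by interacting with an environment. Deep learning, and in particular deep convolutional neural networks (DCNNs), gives reinforcement learning a powerful tool for handling high-dimensional data. This article explores the design principles of deep convolutional neural networks in reinforcement learning and examples of their use in different scenarios. II. Deep convolutional neural networks in reinforcement learning…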

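- Tencent Cloud's LLM Knowledge Engine and DeepSeek: Building a Google Chrome Translation Extension for the Lazy

大富大贵7

Programmer knowledge base 1, Programmer knowledge base 2, Programmer knowledge base 3, Tencent Cloud, cloud computing

Abstract: with the rapid development of artificial intelligence, more and more cutting-edge technologies and tools have entered daily life. Translation tools, as bridges for cross-language communication, have always been at the forefront of technical innovation. This article explores combining Tencent Cloud's LLM knowledge engine and DeepSeek in a Google Chrome extension to give users a convenient and efficient translation experience. Using deep learning, natural language processing, and knowledge graph techniques, the extension not only translates web content in real time but also makes context-aware suggestions for precise contextual translation. This article describes its design approach and tech…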

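- Simulated Annealing: Principles, Applications, and Optimization Strategies

尹清雅

algorithms

Abstract: simulated annealing is a stochastic search algorithm modeled on the physical annealing process, with distinctive strengths on complex optimization problems. This article explains its principle in detail, analyzes its core elements, demonstrates its use in function optimization and the traveling salesman problem through case studies, and discusses optimization strategies and extensions, offering comprehensive theoretical and practical guidance for solving complex optimization problems and supporting efficient use and further development of the algorithm across fields. 1. Introduction: in modern science and engineering, complex optimization problems are everywhere, such as resource allocation, path planning, and tuning the parameters of machine learning models.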
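The core elements of simulated annealing (a random neighbour move, the Metropolis acceptance rule, and a cooling schedule) fit in a short sketch. This is a generic textbook version for minimising a one-dimensional function, not the article's implementation; the geometric cooling factor, step size, and iteration count are assumptions.

```python
import math
import random

def accept_probability(delta, temperature):
    """Metropolis criterion: always accept improvements, accept a worse move with exp(-delta / T)."""
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)

def simulated_annealing(f, x0, t0=10.0, cooling=0.995, steps=3000, step_size=1.0, seed=0):
    """Minimise a 1-D function f using geometric cooling T_{k+1} = cooling * T_k."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(steps):
        candidate = x + rng.uniform(-step_size, step_size)   # random neighbour
        fc = f(candidate)
        if rng.random() < accept_probability(fc - fx, t):
            x, fx = candidate, fc
            if fx < best_f:                                  # remember the best point seen
                best_x, best_f = x, fx
        t *= cooling
    return best_x, best_f

best_x, best_f = simulated_annealing(lambda x: (x - 3) ** 2, x0=-10.0)
print(best_x)   # close to 3
```

Early on, the high temperature lets the search accept uphill moves and escape local minima; as the temperature falls, it behaves more and more like greedy descent.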

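- 60-Day Advanced PyTorch Learning Plan - Day 28: Multimodal Models in Practice (Part 2)

凡人的AI工具箱

deep learning, pytorch, learning, AI programming, artificial intelligence, python

5. Application scenarios for cross-modal retrieval systems. 5.1 Real-world applications of image-text matching systems:

| Application area | Specific scenarios | Advantage |
|---|---|---|
| E-commerce | Product image search, visual shopping | Users can upload a picture to find similar products, or find products from a text description |
| Smart media | Content recommendation, image library search | Semantic understanding of content enables more precise recommendation and search |
| Social networks | Content-based post recommendation | Understands user interests to recommend more relevant content |
| Education technology | Multimodal teaching resource retrieval | Teachers and students can more… |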

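- 60-Day Advanced PyTorch Learning Plan - Day 28: Multimodal Models in Practice (Part 1)

凡人的AI工具箱

deep learning, pytorch, learning, AI programming, artificial intelligence, python

Introduction: crossing the boundaries of perception. Welcome to Day 28 of our PyTorch learning journey! Today we step into one of the most exciting areas of AI: multimodal learning. Imagine a model that can both "see" and "read" and understand the connections between images and text: what possibilities would that open up? Today we focus on building an image-text matching system and learning how to use CLIP (Contrastive Language…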
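CLIP trains an image encoder and a text encoder so that matching image-text pairs have high cosine similarity; within a batch, every non-matching pairing serves as a negative, and the loss is a symmetric cross-entropy over the similarity matrix. A pure-Python sketch of that loss on precomputed embeddings (the encoders are out of scope). The 0.07 temperature matches the value CLIP's learnable temperature is initialised to; the helper names and toy vectors are hypothetical.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """-log softmax(logits)[target], using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i (image i, text i) is the positive,
    every other image-text pairing in the batch is a negative."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # logits[i][j] = cosine similarity of image i and text j, scaled by 1 / temperature
    logits = [[sum(a * b for a, b in zip(im, tx)) / temperature for tx in txts] for im in imgs]
    n = len(logits)
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]   # transpose
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2

images = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]     # text j describes image j
swapped = [[0.1, 0.9], [0.9, 0.1]]     # captions assigned to the wrong images
print(clip_style_loss(images, matched) < clip_style_loss(images, swapped))   # True
```

At inference time, image-text matching reuses the same similarity matrix: for each image, pick the text with the highest score.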

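- 10.2 How Do You Extract Data from Complex PDF Files?

墨染辉

large language models, pdf

Solution: embedded table retrieval. Explanation: embedded table retrieval is a technique designed specifically for extracting data from tables in complex PDF files. It combines table recognition, parsing, and semantic understanding, making it possible to retrieve information from tables with complex structures. Steps: Table detection and recognition. Goal: accurately locate and recognize table regions on PDF pages. Method: use computer vision and deep learning techniques such as convolutional neural networks (CNNs) or other advanced image processing algorithms. Effect: can detect the page's…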

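- A Hands-On TensorFlow Deep Learning Project: From Beginner to Expert

点我头像干啥

Ai, deep learning, tensorflow, artificial intelligence

Introduction: deep learning, an important branch of artificial intelligence, has made remarkable progress in recent years. TensorFlow, Google's open-source deep learning framework, has become the tool of choice for many developers and researchers thanks to its powerful features and flexible architecture. This article walks through a hands-on project to help you understand how to use TensorFlow and master the basic deep learning workflow. 1. TensorFlow overview. 1.1 What is TensorFlow? TensorFlow is an open-source machine learning framework developed by Go…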

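- Using Large Language Model APIs in AI Applications

qq_37836323

artificial intelligence, language models, natural language processing, python

With the rapid development of artificial intelligence, large language models (LLMs) are used ever more widely in natural language processing (NLP). This article explains how to use LLM APIs to build some basic AI applications and provides a simple demo to help you understand and use these technologies. Introduction to LLM APIs: large language models (such as GPT-4) can understand and generate human-like text. They can be applied to many tasks, including text generation, translation, sentiment analysis, and dialogue systems. To make these powerful models easier to access for users in China…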

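- An Introduction to Design Patterns

tntxia

design patterns

Design patterns originated in the early work of the architect Christopher Alexander (http://en.wikipedia.org/wiki/Christopher_Alexander). He frequently published work summarizing his experience in solving design problems and how that knowledge related to patterns in cities and buildings. One day, Alexander realized that reusing these patterns allowed certain design constructs to achieve the results we hope for.

Alexander worked together with Sara Ishikawa and Murray Silverstein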

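- Using Advanced Android Widgets (Part 1)

百合不是茶

android, RatingBar, Spinner

1. AutoCompleteTextView

AutoCompleteTextView derives from EditText and is essentially a text edit box, but it adds one feature over a plain edit box: once the user has typed enough characters (set by the completion threshold, 2 by default), it shows a drop-down list of suggestions, and when the user picks an item, AutoCompleteTextView fills the box with that choice.

Using AutoCompleteTex…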

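- [Networking & Communications] The Router Market Has Plenty of Untapped Potential

comsci

networking

If domestic electronics and computer equipment makers feel the mobile phone market is getting saturated, they could consider entering the switch and router market...

The technology and know-how in this area are currently fairly open, which favors entry by small electronics companies of all kinds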

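- A Simple Self-Written Shell Script for Redis Memory Statistics

商人shang

Linux shell, statistics, Redis memory

```bash
#!/bin/bash
address="192.168.150.128:6666,192.168.150.128:6666"
# Replace every comma with a space, then split the result into an array
hosts=(${address//,/ })
sfile="staticts.log"
for hostitem in ${hosts[@]}
do
ipport=(${hostitem
```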
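The script above is truncated, so the following is not its continuation. It is a hypothetical Python sketch of the two parsing steps such a script needs: splitting the comma-separated `host:port` list, and turning the `key:value` lines printed by `redis-cli -h HOST -p PORT info memory` into a dictionary. The function names and the abbreviated sample output are assumptions.

```python
def parse_host_list(address):
    """Split 'ip:port,ip:port' into (ip, port) tuples, like ${address//,/ } plus a split on ':'."""
    hosts = []
    for item in address.split(","):
        host, _, port = item.rpartition(":")
        hosts.append((host, int(port)))
    return hosts

def parse_info(text):
    """Turn Redis INFO output ('# Section' headers plus 'key:value' lines) into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue                              # skip blank lines and section headers
        key, _, value = line.partition(":")
        info[key] = value
    return info

# Abbreviated, made-up sample of what `redis-cli ... info memory` prints
sample = "# Memory\r\nused_memory:1032672\r\nused_memory_human:1008.47K\r\n"
print(parse_host_list("192.168.150.128:6666,192.168.150.128:6666"))
print(int(parse_info(sample)["used_memory"]))     # 1032672
```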

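- The Singleton Pattern (Eager vs. Lazy Initialization)

oloz

singleton pattern

```java
package 单例模式;

/*
 * Use case: guarantee that only one instance of a given object exists in the whole application.
 * The "lazy" variant of the singleton pattern.
 */
public class Singleton {

    // 01 Make the constructor private so outside code cannot get an instance via new Singleton()
    private Singleton() {}

    // 02 Declare the class's single instance
    priva
```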
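The eager-vs-lazy distinction carries over to other languages. A Python sketch for comparison (class names hypothetical): Python has no private constructors, so both versions rely on callers using `get_instance()`. The lazy version adds a lock with a second check so that two threads racing on first use cannot create two instances.

```python
import threading

class EagerConfig:
    """Eager ("hungry") singleton: the instance exists as soon as the module is loaded."""
    _instance = None

    @classmethod
    def get_instance(cls):
        return cls._instance

EagerConfig._instance = EagerConfig()   # created up front, before anyone asks for it

class LazyConfig:
    """Lazy singleton: the instance is created on first use."""
    _instance = None
    _lock = threading.Lock()

    @classmethod
    def get_instance(cls):
        if cls._instance is None:               # fast path once the instance exists
            with cls._lock:
                if cls._instance is None:       # re-check: another thread may have won the race
                    cls._instance = cls()
        return cls._instance

print(LazyConfig.get_instance() is LazyConfig.get_instance())    # True
print(EagerConfig.get_instance() is EagerConfig.get_instance())  # True
```

The eager form pays the construction cost up front but needs no locking; the lazy form defers the cost until first use at the price of the synchronization above.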

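- JSON Support in Spring MVC

杨白白

json, springmvc

1. Spring MVC relies on the Jackson library to handle JSON, so add the Jackson dependency first.

2. To parse JSON-formatted input in Spring MVC, annotate the input parameter with @RequestBody.

```java
@RequestMapping("helloJson")
public @ResponseBody
JsonTest helloJson() {
```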

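- Android: Playing, Scanning, and Adding Local Audio Files

小桔子

Lately I've had almost nothing going on, so I kept tinkering with my little project. I wanted to add a simple music player to it, about the size of the music player widget on the Huawei P6 home screen. Anyone who has used TTPod or QQ Music knows that you can add songs by scanning local storage. I don't know how they implement it; my guess is that you scan all the files on the device, filter out the audio files, and create an entity for each file that records its file name, path, artist, type, size, and other information. As for the specific algorithm,…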
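A sketch of the walk-and-filter idea described above, in Python rather than Android code: walk every directory, keep files whose extension looks like audio, and record name, path, type, and size (reading the artist would require parsing the file's tags, which is omitted). The extension list and function name are assumptions; on Android, apps more commonly query the system's MediaStore index instead of walking the file system themselves.

```python
import os

AUDIO_EXTENSIONS = {".mp3", ".aac", ".flac", ".ogg", ".wav", ".m4a"}   # assumed list

def scan_audio_files(root):
    """Walk every directory under root and keep files with an audio-like extension.
    Each result records name, path, type (extension), and size in bytes."""
    tracks = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            ext = os.path.splitext(name)[1].lower()
            if ext in AUDIO_EXTENSIONS:
                path = os.path.join(dirpath, name)
                tracks.append({"name": name, "path": path,
                               "type": ext[1:], "size": os.path.getsize(path)})
    return sorted(tracks, key=lambda track: track["path"])
```

Usage: `scan_audio_files("/path/to/music")` returns one dictionary per audio file, sorted by path, ready to be turned into the per-song entities the author describes.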

- Common Oracle Commands

aichenglong

oracle, dba, common commands

1. Create a temporary tablespace

```sql
create temporary tablespace user_temp
tempfile 'D:\oracle\oradata\Oracle9i\user_temp.dbf'
size 50m
autoextend on
next 50m maxsize 20480m
extent management local;
```

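- 25 Eclipse Plugins

AILIKES

eclipse, plugins

Plugins that improve code quality: 1. FindBugs: FindBugs helps you find bugs in Java code; it is free software released under the GNU Lesser General Public License. 2. Checkstyle: the Checkstyle plugin integrates into the Eclipse IDE and helps ensure Java code follows a standard coding style. 3. EclEmma: EclEmma is a free tool under the Eclipse Public License that provides…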

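- Preventing Duplicate Form Submission in Spring MVC with an Interceptor and Annotations

baalwolf

spring mvc

Principle: when the "new" page is rendered, a random token is stored in the Session; on save, the token is validated and, once validation passes, removed. If the user clicks save again, the token no longer exists in the server-side Session, so validation fails.

1. Create the annotation: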
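The token principle can be sketched independently of Spring. A minimal Python sketch, where a plain dictionary stands in for the server-side session and `TokenGuard`, `render_form`, and `submit` are hypothetical names; a real server would also need the check-and-remove step to be atomic when the same session handles concurrent requests.

```python
import secrets

class TokenGuard:
    """One-time form tokens: issue one when the form is rendered, accept a submission
    only if it carries the stored token, then delete it so a repeat submission fails."""

    def __init__(self):
        self.session = {}                       # stands in for the server-side session

    def render_form(self):
        token = secrets.token_hex(16)
        self.session["form_token"] = token
        return token                            # would go into a hidden form field

    def submit(self, token):
        expected = self.session.get("form_token")
        if expected is None or not secrets.compare_digest(expected, token):
            return False                        # token already used, never issued, or wrong
        del self.session["form_token"]          # one-time use
        return True

guard = TokenGuard()
token = guard.render_form()
print(guard.submit(token))   # True: the first submission is accepted
print(guard.submit(token))   # False: the repeated click is rejected
```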

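- Understanding Closures in "Professional JavaScript for Web Developers, 3rd Edition"

bijian1013

JavaScript

"A closure is a function that has access to variables from another function's scope." (Professional JavaScript for Web Developers, 3rd Edition)

Consider the following code:

```html
<script type="text/javascript">
function outer() {
    var i = 10;
    return f
```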
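The JavaScript sample is truncated, so here is the same idea sketched in Python instead: `inner` keeps access to `outer`'s local variable `i` after `outer` has returned. The `make_counter` example adds `nonlocal`, which Python requires in order to rebind (not just read) a captured variable.

```python
def outer():
    i = 10                      # local variable in outer's scope

    def inner():
        return i                # inner reads i from the enclosing scope
    return inner

f = outer()                     # outer has returned...
print(f())                      # ...but the closure still sees i: prints 10

def make_counter():
    count = 0

    def increment():
        nonlocal count          # rebind the captured variable instead of creating a local
        count += 1
        return count
    return increment

counter = make_counter()
counter()
print(counter())                # 2
```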

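- Methods of the AngularJS Module Class

bijian1013

JavaScript, AngularJS, Module

In AngularJS, the Module class is responsible for defining how an application bootstraps, and it can also declaratively define the various pieces of the application. Let's look at how it does this.

I. Where is the main method?

If you are coming from Java or Python, you may well wonder where AngularJS's main method is, the one that ties all…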

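- [Maven Study Notes 7] Maven Plugins and Goals

bit1129

maven, plugins

Plugins and goals

At its core, Maven is a plugin execution framework: the execution logic of every Maven goal is provided by a plugin. A plugin can have one or more goals; for example, maven-compiler-plugin provides the compile and testCompile goals, which compile the main source code and the test source code respectively.

Using plugins and goals lets us intervene…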

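- [Hadoop 8] YARN Resource Scheduling Policies

bit1129

hadoop

1. Hadoop's three scheduling policies

Hadoop provides three job scheduling policies:

FIFO Scheduler

Fair Scheduler

Capacity Scheduler

All three scheduling algorithms were already introduced in Hadoop MR1 and were improved and refined in YARN. The Fair and Capacity Schedulers are used to schedule resources shared among multiple users.

2. Scheduling for multi-user resource sharing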
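The core idea behind fair-share scheduling is max-min fairness: split capacity evenly, cap each user at what it asks for, and redistribute the leftover among users who still want more. A simplified Python sketch contrasting it with FIFO; the real Fair Scheduler additionally deals with queues, weights, minimum shares, and preemption, none of which are modelled here, and the function names and numbers are hypothetical.

```python
def max_min_fair_share(capacity, demands):
    """Water-filling: split what is left equally among unsatisfied users, cap each
    at its remaining demand, and repeat with whatever the capped users left over."""
    allocation = {user: 0.0 for user in demands}
    remaining = float(capacity)
    active = {user for user, demand in demands.items() if demand > 0}
    while active and remaining > 1e-9:
        share = remaining / len(active)
        for user in sorted(active):
            give = min(share, demands[user] - allocation[user])
            allocation[user] += give
            remaining -= give
        active = {user for user in active if demands[user] - allocation[user] > 1e-9}
    return allocation

def fifo_allocation(capacity, demands_in_order):
    """FIFO for contrast: each job, in arrival order, takes all it asks for if it can."""
    allocation, remaining = {}, capacity
    for user, demand in demands_in_order:
        allocation[user] = min(demand, remaining)
        remaining -= allocation[user]
    return allocation

demands = {"A": 20, "B": 50, "C": 60}                  # hypothetical requests, capacity 100
print(max_min_fair_share(100, demands))               # A gets 20, B and C about 40 each
print(fifo_allocation(100, list(demands.items())))    # {'A': 20, 'B': 50, 'C': 30}
```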

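- Using Linux Memory to Speed Up Static File Serving in Nginx

ronin47

Nginx is an excellent web server for static resources. If it still isn't fast enough for you, you can map the files stored on disk into memory to reduce disk I/O under high concurrency.

First, a few assumptions: the site path configured in nginx.conf is /home/wwwroot/res, and the site's files are originally stored in /opt/web/res.

The shell script is very simple: copy the resource files into memory, then point the site's static-file link at the in-memory copy. Details below: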

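- About Shaders in Unity3D

brotherlamp

unity, unity resources, unity tutorials, unity videos, unity self-study

First, an explanation of Unity3D shaders: shaders in Unity are written in a language called ShaderLab, which is somewhat similar to Microsoft's FX files or NVIDIA's CgFX. Traditional vertex shaders and pixel shaders are still written in the standard Cg/HLSL languages. So "shaders" in the Unity documentation refers to code written in ShaderLab. Next, let's look at the 60-plus built-in shaders that ship with Unity3D…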

- CopyOnWriteArrayList vs ArrayList

bylijinnan

java

```java
package com.ljn.base;

import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/**
 * Summary:
 * 1. ArrayList is not thread-safe; CopyO
```
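The copy-on-write idea behind CopyOnWriteArrayList can be sketched in Python: each write builds a new copy under a lock and swaps the reference, while readers and iterators keep using the snapshot they started with. This illustrates the technique and is not a port of the Java class; every write copies the whole sequence (O(n)), which is why copy-on-write suits read-mostly data.

```python
import threading

class CopyOnWriteList:
    """Writers copy the whole sequence under a lock and swap in the new copy;
    readers and iterators never lock and keep using the snapshot they started with."""

    def __init__(self, items=()):
        self._items = tuple(items)              # immutable snapshot
        self._lock = threading.Lock()           # serialises writers only

    def append(self, item):
        with self._lock:
            self._items = self._items + (item,)   # O(n) copy, then publish

    def __iter__(self):
        return iter(self._items)                # iterates the current snapshot

    def __len__(self):
        return len(self._items)

cow = CopyOnWriteList([1, 2, 3])
seen = []
for x in cow:                # appending while iterating is safe here
    seen.append(x)
    cow.append(x * 10)
print(seen, len(cow))        # [1, 2, 3] 6
```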

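- The Difference Between the Stack and the Heap in Memory

chicony

memory

1. Memory allocation:

Heap: generally allocated and freed by the programmer; if the programmer does not free it, the OS may reclaim it when the program ends. Note that this is not the same thing as the heap data structure; its allocation works more like a linked list. Related keywords include new, malloc, delete, and free.

Stack: allocated and freed automatically by the compiler; it holds function parameter values, local variable values, and so on. It operates like the stack data structure…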

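- Answering a Reader's Question About Scala

chenchao051

scala, map

I was going to reply directly by private message, but that turned out to be inconvenient, so here is a brief answer. The question: "I really admire Scala's conciseness, but I also find it rather cryptic. For example, in Map("a" -> List(11,111)).flatMap(_._2), could you explain what the last function does? Is code really this concise in actual development? Thanks."

First, to answer one point: in practice, Scala really is this simple.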
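In the question's expression, `_._2` selects the value of each key-value pair (here `List(11, 111)`), and `flatMap` concatenates those lists into a single collection, so the result contains 11 and 111. The same operation in Python, for comparison:

```python
from itertools import chain

m = {"a": [11, 111]}

# Scala: Map("a" -> List(11, 111)).flatMap(_._2)
# _._2 takes the value of each (key, value) pair; flatMap then flattens the results.
flattened = [x for _key, values in m.items() for x in values]
print(flattened)                                   # [11, 111]

# Equivalent "map, then flatten" spelling
print(list(chain.from_iterable(m.values())))       # [11, 111]
```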

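- MySQL: Getting the Top Few Records from Each Group

daizj

mysql, grouping, maximum, minimum, three records per group

I. Taking the top N records from each group, e.g., the 3 largest records in each group. 1. Using a subquery:

```sql
SELECT * FROM tableName a WHERE 3 > (SELECT COUNT(*) FROM tableName b WHERE b.id = a.id AND b.cnt > a.cnt) ORDER BY a.id, a.account DE
```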
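The correlated subquery keeps a row when fewer than 3 rows in the same `id` group have a strictly larger `cnt`. A Python sketch of the same rule, handy for checking results on small data; the sample rows are hypothetical, and the sort order is an assumption because the original ORDER BY clause is cut off. Like the subquery, it compares every row with every other row, and with ties more than N rows per group can qualify.

```python
def top_n_per_group(rows, n=3):
    """Same rule as the subquery: keep row a if fewer than n rows in its group
    (same id) have a strictly larger cnt. Ties can let more than n rows through."""
    kept = [a for a in rows
            if sum(1 for b in rows if b["id"] == a["id"] and b["cnt"] > a["cnt"]) < n]
    return sorted(kept, key=lambda r: (r["id"], -r["cnt"]))

rows = [{"id": 1, "cnt": c} for c in (5, 9, 7, 1)] + [{"id": 2, "cnt": c} for c in (3, 3, 8, 2)]
print([(r["id"], r["cnt"]) for r in top_n_per_group(rows)])
# [(1, 9), (1, 7), (1, 5), (2, 8), (2, 3), (2, 3)]
```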

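- HTTP Explained Simply: HTTP Requests

dcj3sjt126com

http

HTTP (HyperText Transfer Protocol) is a set of rules by which computers communicate over a network. It was designed so that HTTP clients (such as web browsers) can request information and services from HTTP servers (web servers); at the time of writing, the protocol version in use was 1.1. HTTP is a stateless protocol: the server keeps no information about a client from one request to the next, so each request is handled on its own. This means that when a client sends a request to the server, and then the We…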

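- Comparing Ways to Check Whether a MySQL Record Exists

dcj3sjt126com

mysql

When writing data to a database, you often need to check first whether the record to be inserted already exists, and then decide whether to write it.

Here is a summary of common ways to check whether a record exists:

SQL statement: `select count(*) from tablename;`

Then read the value of count(*) to decide whether the record exists. This approach wastes performance: we only want to know whether a record exists, so there is no need to count every matching row.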
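To compare the counting approach with cheaper existence checks, here is a sketch using Python's built-in `sqlite3` module as a stand-in for MySQL (both accept the `LIMIT 1` and `SELECT EXISTS(...)` forms shown). The `users` table and its data are hypothetical, and these alternatives are common practice rather than necessarily the methods the original post goes on to list.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany("INSERT INTO users (email) VALUES (?)", [("a@example.com",), ("b@example.com",)])

def exists_by_count(email):
    # Counts every matching row, although one match is enough to answer the question
    (n,) = conn.execute("SELECT COUNT(*) FROM users WHERE email = ?", (email,)).fetchone()
    return n > 0

def exists_by_limit(email):
    # The query can stop at the first match; fetchone() returns None when there is none
    row = conn.execute("SELECT 1 FROM users WHERE email = ? LIMIT 1", (email,)).fetchone()
    return row is not None

def exists_by_exists(email):
    # EXISTS evaluates to 1 or 0
    (flag,) = conn.execute("SELECT EXISTS(SELECT 1 FROM users WHERE email = ?)", (email,)).fetchone()
    return flag == 1

print(exists_by_count("a@example.com"), exists_by_limit("z@example.com"), exists_by_exists("b@example.com"))
# True False True
```

Whichever form is used, an index on the column in the WHERE clause usually matters at least as much as the shape of the query.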

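- Some Notes on HTML and XML

e200702084

html, xml

Thanks to http://www.w3school.com.cn for the material.

Nodes

According to the DOM, every part of an HTML document is a node.

The DOM specifies that:

the entire document is a document node

every HTML tag is an element node

the text inside HTML elements is a text node

every HTML attribute is an attribute node

comments are comment nodes

Node hierarchy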
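Python's standard `xml.dom.minidom` implements the same DOM node model, so the rules above can be checked directly. A sketch that counts node types in a tiny, well-formed document; minidom is an XML parser, so real-world HTML that is not well-formed XML would need an HTML parser instead. Note that attribute nodes hang off their element (`node.attributes`) rather than appearing among its `childNodes`.

```python
from collections import Counter
from xml.dom import Node, minidom

doc = minidom.parseString('<html><body title="t"><!-- note --><p>Hello</p></body></html>')

def count_node_types(node, counts=None):
    """Walk the tree and count nodes by nodeType. Attribute nodes belong to their
    element rather than to childNodes, so they are visited separately."""
    counts = Counter() if counts is None else counts
    counts[node.nodeType] += 1
    if node.nodeType == Node.ELEMENT_NODE:
        for i in range(node.attributes.length):
            counts[node.attributes.item(i).nodeType] += 1
    for child in node.childNodes:
        count_node_types(child, counts)
    return counts

counts = count_node_types(doc)
print(counts[Node.DOCUMENT_NODE], counts[Node.ELEMENT_NODE], counts[Node.ATTRIBUTE_NODE],
      counts[Node.TEXT_NODE], counts[Node.COMMENT_NODE])      # 1 3 1 1 1
print(doc.getElementsByTagName("p")[0].parentNode.nodeName)   # body
```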

- jQuery Pagination Plugin

genaiwei

jquery, web front end, pagination plugin

```javascript
// jQuery page-number widget
// Create a closure
(function($) {
    // Plugin definition
    $.fn.pageTool = function(options) {
        var totalPa
```

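- MyBatis vs. iBatis: An Introductory Comparison

Josh_Persistence

mybatis, ibatis, differences, relationship

I. Why use iBatis/MyBatis

For Java EE developers, iBatis is a thoroughly familiar persistence framework. Before one-stop object-relational mapping (O/R mapping) solutions such as Hibernate and JPA became popular, iBatis was essentially the default choice for persistence. Even today, with new persistence frameworks appearing all the time, iBatis, being easy to learn and easy to use, …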

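- How Do You Decide Which Integer Type to Use in C?

秋风扫落叶

c, data types

If you need large values (above 32,767 or below -32,767), use long. Otherwise, if space matters (e.g., large arrays or many structures), use short. Otherwise, use int. If well-defined overflow behavior matters and negative values don't, or if you want to avoid sign-extension problems when manipulating bits and bytes, use the corresponding unsigned type. But be careful when mixing signed and unsigned values in expressions.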

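- Maven Problems

zhb8015

maven, problems

Problem 1:

src/main/java cannot be added when creating a new Maven project in Eclipse

When you create a Maven web project in Eclipse and choose the maven-archetype-webapp archetype, by default there is only one source folder, src/main/resources.

Following the Maven directory layout, add src/main/ja…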

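- (2) androidpn-server Tomcat Edition Source Code Analysis: Push Message Handling

spjich

java, androidpn, push

In "(1) androidpn-server Tomcat edition source code analysis: project startup", I described the startup process of the whole push server and identified the entry point for messages, the XmppIoHandler class. Today I'll continue with the core code that follows, which falls into three parts: connection creation, message sending, and connection closing.

First, here is part of the XmppIoHandler code:

```java
/**
 * Invoked from an I/O proc
```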

- Using JavaScript's FormData to Fix File Inputs Not Being Serialized by serialize() When Submitting a Form via Ajax

中华好儿孙

JavaScript, Ajax, web, file upload, FormData

```javascript
var formData = new FormData($("#inputFileForm")[0]);
$.ajax({
    type: 'post',
    url: webRoot + "/electronicContractUrl/webapp/uploadfile",
    data: formData,
    async: false,
    ca
```

- Common jdbcType Data Types in MyBatis

ysj5125094

mybatis, mapper, jdbcType

MyBatis supports the following JDBC types through its included JdbcType enumeration:

BIT FLOAT CHAR

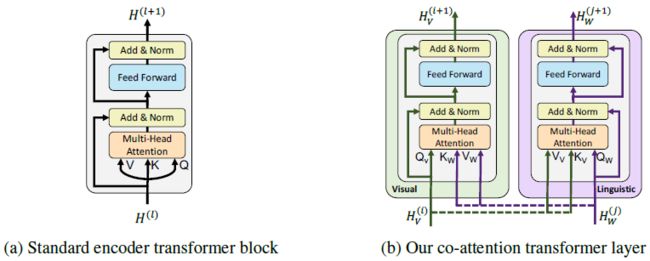

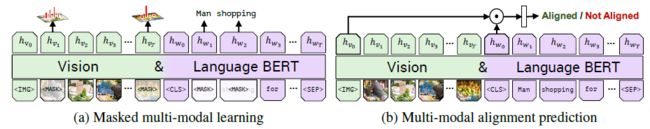

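Note that information exchange between the two streams is restricted to specific layers, and that because the input image region features are already high-level features produced by a CNN, the text stream goes through more processing before it interacts with the visual features (This structure allows for variable depths for each modality and enables sparse interaction through co-attention.)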

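In a co-attention layer, each stream uses its own queries but takes keys and values from the other stream, so text tokens attend over image regions and image regions attend over text tokens. A minimal pure-Python sketch of that exchange; it omits the learned query/key/value projections, multiple heads, residual connections, and feed-forward sublayers of the real model, assumes both streams share one vector dimension, and the function names are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention on lists of vectors: each query returns a
    softmax-weighted average of the value vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def co_attention(text, image):
    """One co-attention exchange: queries come from a stream's own features,
    keys and values from the other stream."""
    text_out = attention(text, image, image)    # text tokens attend over image regions
    image_out = attention(image, text, text)    # image regions attend over text tokens
    return text_out, image_out

text = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 toy token vectors
image = [[0.5, 2.0], [1.5, -1.0]]             # 2 toy region vectors
text_out, image_out = co_attention(text, image)
print(len(text_out), len(image_out))          # 3 2: each stream keeps its own length
```

Because each output is built from the other stream's values, the two streams can keep separate depths and only exchange information at the layers where a co-attention block is placed.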