opencv_4.5.0/OpenCvSharp4.0 nine-point calibration

Let's start with OpenCvSharp:

/***********************************************************/

First, installing OpenCvSharp4: Project > Manage NuGet Packages > search for OpenCvSharp > install OpenCvSharp4 and OpenCvSharp4.runtime.win

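If you do not install OpenCvSharp4.runtime.win, the program fails at run time, typically because the native OpenCvSharpExtern library cannot be loaded.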

/***********************************************************/

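Back to the main topic. OpenCvSharp examples are very scarce online, and the method parameters differ slightly between OpenCV and OpenCvSharp. After collecting a lot of material I finally solved the affine-matrix estimation problem in C#.

Code first: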

//mat1是用来存九个像素坐标的,mat2用来存机器人坐标

Mat VectorToHomMat2d(Point2d[] calib_img_pixel_coordinates, Point2d[] calib_img_rob_coordinates)

{

if (calib_img_pixel_coordinates.Length != 9 && calib_img_rob_coordinates.Length != 9)

{

return null;

}

Mat mat1 = new Mat(9, 2, MatType.CV_64F); //这里MatType的解释我之前博客里有介绍

mat1.Set(0, 0, calib_img_pixel_coordinates[0].X);

mat1.Set(0, 1, calib_img_pixel_coordinates[0].Y);

mat1.Set(1, 0, calib_img_pixel_coordinates[1].X);

mat1.Set(1, 1, calib_img_pixel_coordinates[1].Y);

mat1.Set(2, 0, calib_img_pixel_coordinates[2].X);

mat1.Set(2, 1, calib_img_pixel_coordinates[2].Y);

mat1.Set(3, 0, calib_img_pixel_coordinates[3].X);

mat1.Set(3, 1, calib_img_pixel_coordinates[3].Y);

mat1.Set(4, 0, calib_img_pixel_coordinates[4].X);

mat1.Set(4, 1, calib_img_pixel_coordinates[4].Y);

mat1.Set(5, 0, calib_img_pixel_coordinates[5].X);

mat1.Set(5, 1, calib_img_pixel_coordinates[5].Y);

mat1.Set(6, 0, calib_img_pixel_coordinates[6].X);

mat1.Set(6, 1, calib_img_pixel_coordinates[6].Y);

mat1.Set(7, 0, calib_img_pixel_coordinates[7].X);

mat1.Set(7, 1, calib_img_pixel_coordinates[7].Y);

mat1.Set(8, 0, calib_img_pixel_coordinates[8].X);

mat1.Set(8, 1, calib_img_pixel_coordinates[8].Y);

Mat mat2 = new Mat(9, 2, MatType.CV_64F);

mat2.Set(0, 0, calib_img_rob_coordinates[0].X);

mat2.Set(0, 1, calib_img_rob_coordinates[0].Y);

mat2.Set(1, 0, calib_img_rob_coordinates[1].X);

mat2.Set(1, 1, calib_img_rob_coordinates[1].Y);

mat2.Set(2, 0, calib_img_rob_coordinates[2].X);

mat2.Set(2, 1, calib_img_rob_coordinates[2].Y);

mat2.Set(3, 0, calib_img_rob_coordinates[3].X);

mat2.Set(3, 1, calib_img_rob_coordinates[3].Y);

mat2.Set(4, 0, calib_img_rob_coordinates[4].X);

mat2.Set(4, 1, calib_img_rob_coordinates[4].Y);

mat2.Set(5, 0, calib_img_rob_coordinates[5].X);

mat2.Set(5, 1, calib_img_rob_coordinates[5].Y);

mat2.Set(6, 0, calib_img_rob_coordinates[6].X);

mat2.Set(6, 1, calib_img_rob_coordinates[6].Y);

mat2.Set(7, 0, calib_img_rob_coordinates[7].X);

mat2.Set(7, 1, calib_img_rob_coordinates[7].Y);

mat2.Set(8, 0, calib_img_rob_coordinates[8].X);

mat2.Set(8, 1, calib_img_rob_coordinates[8].Y);

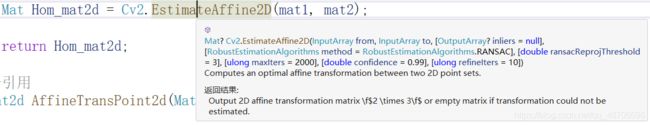

Mat Hom_mat2d = Cv2.EstimateAffine2D(mat1, mat2);

return Hom_mat2d;

}

    // The method below converts a pixel coordinate to a robot coordinate
    Point2d AffineTransPoint2d(Mat Hom_mat2d, Point2d image_coordinates)
    {
        // Mat.ptr() cannot be used here the way it is in OpenCV; the values read back are absurdly large.
        // I am not sure of the exact reason (possibly because Ptr() in OpenCvSharp returns a raw IntPtr
        // address rather than a typed pointer). If you know, feel free to comment.
        var A = Hom_mat2d.Get<double>(0, 0);
        var B = Hom_mat2d.Get<double>(0, 1);
        var C = Hom_mat2d.Get<double>(0, 2);
        var D = Hom_mat2d.Get<double>(1, 0);
        var E = Hom_mat2d.Get<double>(1, 1);
        var F = Hom_mat2d.Get<double>(1, 2);

        return new Point2d(
            (A * image_coordinates.X) + (B * image_coordinates.Y) + C,
            (D * image_coordinates.X) + (E * image_coordinates.Y) + F);
    }

Affine transformation matrix:
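Written out, the 2x3 matrix maps a pixel coordinate $(u, v)$ to a robot coordinate $(x, y)$ as shown here. The letters A to F are the same six entries that AffineTransPoint2d reads from the matrix, while $u, v, x, y$ are symbols introduced only for this illustration:

$$
\begin{bmatrix} x \\ y \end{bmatrix}
=
\begin{bmatrix} A & B & C \\ D & E & F \end{bmatrix}
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix},
\qquad
x = A u + B v + C, \quad y = D u + E v + F
$$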

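As you can see, the affine-matrix estimation method in OpenCvSharp takes two InputArray arguments, and OpenCvSharp has no vector type, which is why the points are packed into Mat objects in the code above.

Now for the opencv_4.5.0 side.

If you need installation instructions for opencv_4.5.0, click here!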

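    #include <opencv2/opencv.hpp>
    #include <vector>
    using namespace cv;
    using namespace std;

    // The point type is assumed to be Point2d, matching AffineTransPoint2d
    Mat VectorToHomMat2d(vector<Point2d> calib_img_pixel_coordinates, vector<Point2d> calib_img_rob_coordinates)
    {
        // Method 1:
        // If the last argument is true, the function finds an optimal affine transformation with no additional restrictions (6 degrees of freedom).
        // Otherwise the available transformations are limited to combinations of translation, rotation, and uniform scaling (4 degrees of freedom).
        // Note: as far as I know, estimateRigidTransform belongs to the OpenCV 3.x API and is no longer available in 4.x.
        //Mat Hom_mat2d = estimateRigidTransform(calib_img_pixel_coordinates, calib_img_rob_coordinates, true);

        // Method 2:
        Mat Hom_mat2d = estimateAffine2D(calib_img_pixel_coordinates, calib_img_rob_coordinates);
        return Hom_mat2d;
    }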
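To make it clearer what the six numbers in the matrix represent, here is a minimal sketch in plain C++ (no OpenCV) of an ordinary least-squares affine fit over matched point pairs, plus a residual check. It is not how estimateAffine2D works internally (that function is a robust estimator and uses RANSAC by default), so treat it only as an illustration. The names Pt, Affine, FitAffineLeastSquares, ApplyAffine, and RmsError are hypothetical and do not come from OpenCV.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for cv::Point2d so the sketch needs no OpenCV headers.
struct Pt { double x; double y; };

using Affine = std::array<double, 6>;  // row-major 2x3 matrix {A, B, C, D, E, F}
using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

// Determinant of a 3x3 matrix.
static double Det3(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Solve m * s = r with Cramer's rule; throws if m is (nearly) singular,
// which happens when all calibration points lie on one line.
static Vec3 Solve3(const Mat3& m, const Vec3& r) {
    const double d = Det3(m);
    const double scale = std::fabs(m[0][0] * m[1][1] * m[2][2]);
    if (std::fabs(d) <= 1e-12 * scale) {
        throw std::runtime_error("degenerate point set");
    }
    Vec3 s{};
    for (int col = 0; col < 3; ++col) {
        Mat3 t = m;
        for (int i = 0; i < 3; ++i) t[i][col] = r[i];  // replace one column by r
        s[col] = Det3(t) / d;
    }
    return s;
}

// Least-squares fit of robot = H * [pixel.x, pixel.y, 1]^T via the normal equations.
Affine FitAffineLeastSquares(const std::vector<Pt>& pixel, const std::vector<Pt>& robot) {
    if (pixel.size() != robot.size() || pixel.size() < 3) {
        throw std::invalid_argument("need at least 3 matched point pairs");
    }
    Mat3 m{};         // sum of v * v^T with v = (x, y, 1)
    Vec3 rx{}, ry{};  // sums of v * robot.x and v * robot.y
    for (std::size_t k = 0; k < pixel.size(); ++k) {
        const Vec3 v = {pixel[k].x, pixel[k].y, 1.0};
        for (int i = 0; i < 3; ++i) {
            for (int j = 0; j < 3; ++j) m[i][j] += v[i] * v[j];
            rx[i] += v[i] * robot[k].x;
            ry[i] += v[i] * robot[k].y;
        }
    }
    const Vec3 top = Solve3(m, rx);     // A, B, C
    const Vec3 bottom = Solve3(m, ry);  // D, E, F
    return {top[0], top[1], top[2], bottom[0], bottom[1], bottom[2]};
}

// Apply the 2x3 matrix to one pixel coordinate.
Pt ApplyAffine(const Affine& h, const Pt& p) {
    return {h[0] * p.x + h[1] * p.y + h[2], h[3] * p.x + h[4] * p.y + h[5]};
}

// Root-mean-square distance between the mapped pixel points and the robot points.
double RmsError(const Affine& h, const std::vector<Pt>& pixel, const std::vector<Pt>& robot) {
    if (pixel.empty() || pixel.size() != robot.size()) {
        throw std::invalid_argument("point lists must be non-empty and equal in size");
    }
    double sum = 0.0;
    for (std::size_t k = 0; k < pixel.size(); ++k) {
        const Pt q = ApplyAffine(h, pixel[k]);
        sum += (q.x - robot[k].x) * (q.x - robot[k].x) + (q.y - robot[k].y) * (q.y - robot[k].y);
    }
    return std::sqrt(sum / static_cast<double>(pixel.size()));
}
```

Running RmsError on the nine calibration pairs gives a quick sanity check: a value that is large compared with the robot's positioning accuracy suggests a mismatched point order or a badly measured point.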

    Point2d AffineTransPoint2d(Mat Hom_mat2d, Point2d image_coordinates)
    {
        Point2d robot_coordinate;

        // estimateAffine2D returns a 2x3 matrix of doubles, so each row is read through ptr<double>
        double A = Hom_mat2d.ptr<double>(0)[0];
        double B = Hom_mat2d.ptr<double>(0)[1];
        double C = Hom_mat2d.ptr<double>(0)[2];
        double D = Hom_mat2d.ptr<double>(1)[0];
        double E = Hom_mat2d.ptr<double>(1)[1];
        double F = Hom_mat2d.ptr<double>(1)[2];

        robot_coordinate.x = (A * image_coordinates.x) + (B * image_coordinates.y) + C;
        robot_coordinate.y = (D * image_coordinates.x) + (E * image_coordinates.y) + F;
        return robot_coordinate;
    }

At startup, the line Mat Hom_mat2d = estimateAffine2D(calib_img_pixel_coordinates, calib_img_rob_coordinates); may raise an error:

Fix: in Solution Explorer, right-click the project > Properties > C/C++ > General > SDL checks > No

If you are not sure what nine-point calibration is or what its steps are, click here!