Multi-Sensor Fusion Algorithms: Radar-Camera Fusion

0: Device selection

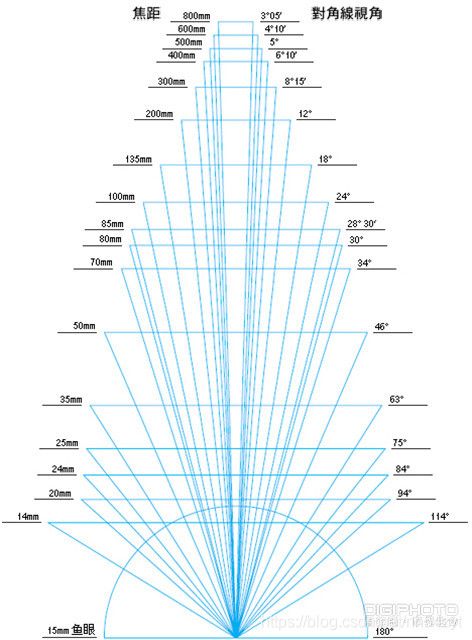

Camera selection: how to choose the field of view and focal length:
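Under an ideal pinhole model (lens distortion ignored), the field of view follows from the sensor size and the focal length as FOV = 2·atan(sensor_size / (2·f)). The sketch below, with hypothetical function names, computes the FOV, the inverse (the focal length needed for a target FOV), and the approximate pixel height of an object at a given distance. That last quantity is one way to check whether a lens still resolves targets at the required range.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Field of view in degrees along one sensor axis (width -> horizontal FOV,
// height -> vertical FOV). Sensor size and focal length share a unit, e.g. mm.
double FovDegrees(double sensor_size_mm, double focal_length_mm) {
  assert(sensor_size_mm > 0.0 && focal_length_mm > 0.0);
  return 2.0 * std::atan(sensor_size_mm / (2.0 * focal_length_mm)) * 180.0 / kPi;
}

// Inverse relation: focal length that yields the desired FOV on this sensor.
double FocalLengthForFov(double sensor_size_mm, double fov_deg) {
  assert(fov_deg > 0.0 && fov_deg < 180.0);
  return sensor_size_mm / (2.0 * std::tan(fov_deg * kPi / 360.0));
}

// Approximate image height (pixels) of an object of height object_h_m at
// distance dist_m, with the focal length expressed in pixels
// (focal_px = focal_length_mm / pixel_pitch_mm).
double ObjectHeightPixels(double focal_px, double object_h_m, double dist_m) {
  assert(dist_m > 0.0);
  return focal_px * object_h_m / dist_m;
}
```

For example, a 2 mm wide sensor behind a 1 mm lens gives a 90° horizontal FOV. Strongly distorting wide-angle or fisheye lenses deviate from this model.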

Radar selection

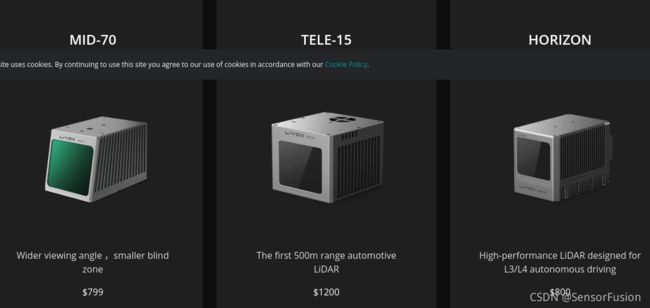

Lidar selection

1: Millimeter-wave radar and camera (vision) fusion

Algorithm requirements:

Image object detection

Radar object detection

Radar-camera calibration

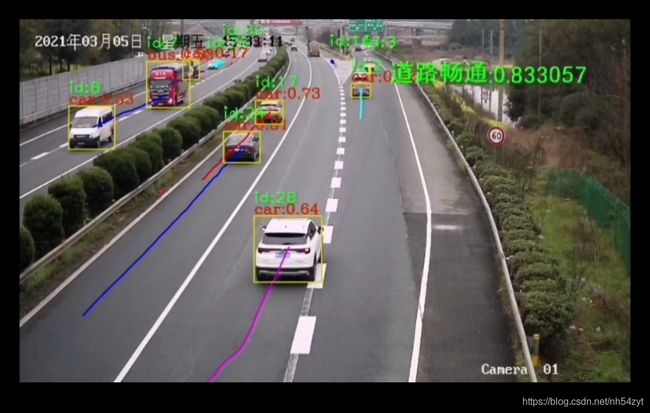

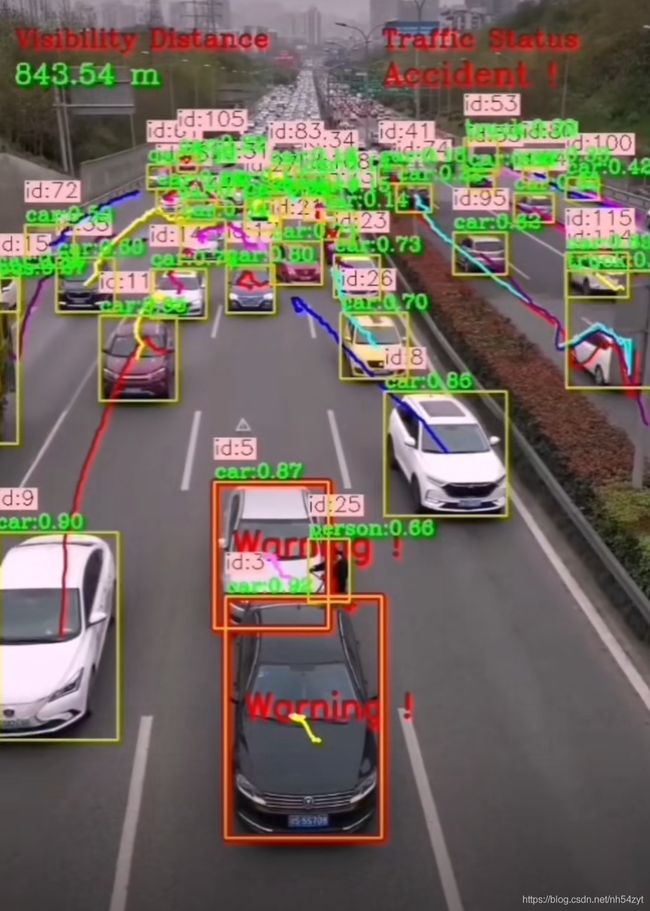
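Radar-camera calibration yields an extrinsic transform (R, t) from the radar frame to the camera frame, plus the camera intrinsics. A minimal sketch of projecting one radar detection into the image, assuming the radar reports range and azimuth in a frame with x forward and y left, and assuming a fixed height z for the 2-D radar return (some radars also report elevation). All names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <optional>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Intrinsics { double fx, fy, cx, cy; };  // pinhole, no distortion (assumption)
struct Pixel { double u, v; };

// Radar polar measurement -> Cartesian point in the radar frame
// (x forward, y left); z is an assumed mounting-relative height.
Vec3 RadarToCartesian(double range_m, double azimuth_rad, double z_m = 0.0) {
  assert(range_m >= 0.0);
  return {range_m * std::cos(azimuth_rad), range_m * std::sin(azimuth_rad), z_m};
}

// p_cam = R * p_radar + t, then pinhole projection with K.
// Returns std::nullopt for points behind the camera.
std::optional<Pixel> ProjectToImage(const Vec3& p_radar, const Mat3& R,
                                    const Vec3& t, const Intrinsics& K) {
  Vec3 pc = t;
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j) pc[i] += R[i][j] * p_radar[j];
  if (pc[2] <= 0.0) return std::nullopt;
  return Pixel{K.fx * pc[0] / pc[2] + K.cx, K.fy * pc[1] / pc[2] + K.cy};
}
```

The projected pixel can then be compared with image detection boxes; in practice the calibration quality (R, t, K) dominates how well radar points land on the right objects.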

Fusion results

2: Lidar and camera (vision) fusion

Algorithm requirements:

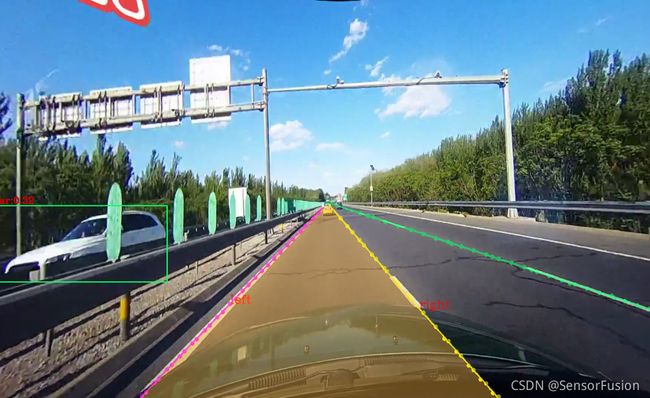

Image object detection

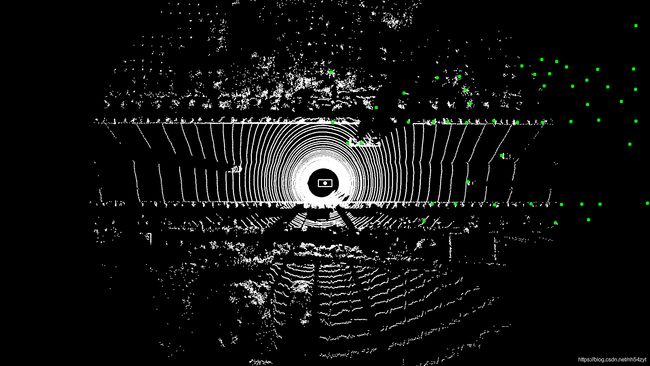

Lidar object detection

Lidar-camera calibration

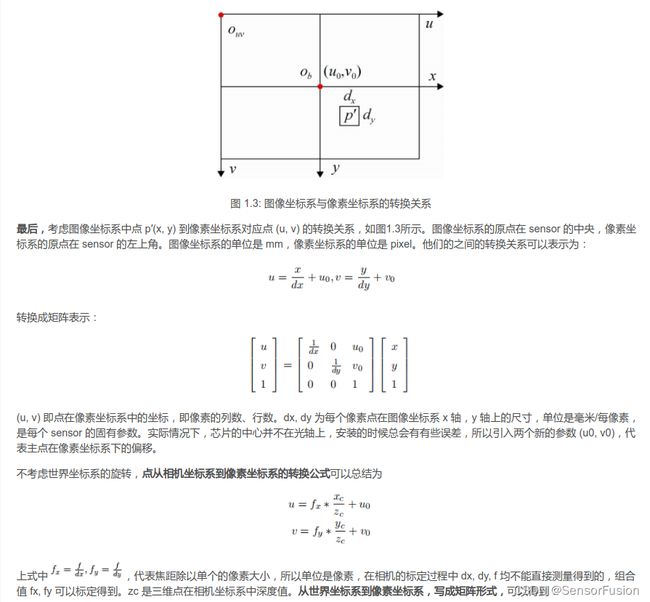

From lidar range measurements to camera pixel depth values:

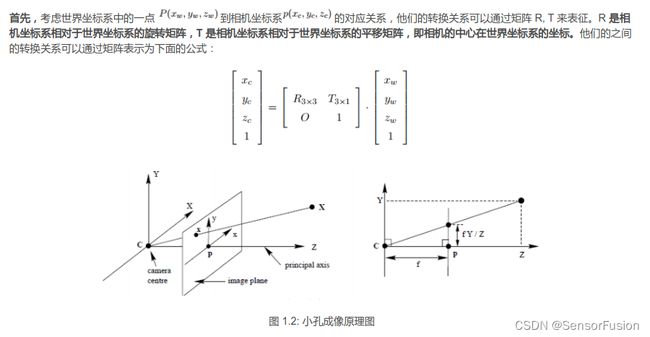
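A lidar point's depth in the camera is obtained after transforming it into the camera frame: the depth is the camera-frame z coordinate (distance along the optical axis), not the raw lidar range. The sketch below builds a sparse depth map under assumed inputs (lidar-to-camera extrinsics R, t and distortion-free pinhole intrinsics); when several points fall on one pixel it keeps the nearest one. Names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;
struct Intrinsics { double fx, fy, cx, cy; };  // pinhole, no distortion (assumption)

// Projects lidar points into a width x height image and returns a row-major
// sparse depth map (0 = no lidar return on that pixel).
std::vector<double> LidarDepthMap(const std::vector<Vec3>& points_lidar,
                                  const Mat3& R, const Vec3& t,
                                  const Intrinsics& K, int width, int height) {
  assert(width > 0 && height > 0);
  std::vector<double> depth(static_cast<std::size_t>(width) * height, 0.0);
  for (const Vec3& p : points_lidar) {
    // Lidar frame -> camera frame: p_cam = R * p + t.
    Vec3 pc = t;
    for (int i = 0; i < 3; ++i)
      for (int j = 0; j < 3; ++j) pc[i] += R[i][j] * p[j];
    if (pc[2] <= 0.0) continue;  // behind the camera
    const double uf = K.fx * pc[0] / pc[2] + K.cx;
    const double vf = K.fy * pc[1] / pc[2] + K.cy;
    if (!(uf >= 0.0 && uf < width && vf >= 0.0 && vf < height)) continue;
    const std::size_t idx = static_cast<std::size_t>(vf) * width +
                            static_cast<std::size_t>(uf);
    // Depth = camera-frame z; keep the nearest point when several hit one pixel.
    if (depth[idx] == 0.0 || pc[2] < depth[idx]) depth[idx] = pc[2];
  }
  return depth;
}
```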

How the calibration projection works:

https://blog.csdn.net/tMb8Z9Vdm66wH68VX1/article/details/120499743
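For reference, the standard pinhole projection used in lidar-camera calibration can be written as below, where (X, Y, Z) is a lidar point, R and t are the lidar-to-camera extrinsics, K holds the intrinsics, and the scale s equals the point's depth Z_c in the camera frame (the third component of R·(X, Y, Z)ᵀ + t). This notation is an assumption and may differ from the linked article; lens distortion is ignored.

$$
s\begin{bmatrix}u\\ v\\ 1\end{bmatrix}
= K\,\bigl[\,R \mid t\,\bigr]\begin{bmatrix}X\\ Y\\ Z\\ 1\end{bmatrix},
\qquad
K=\begin{bmatrix}f_x & 0 & c_x\\ 0 & f_y & c_y\\ 0 & 0 & 1\end{bmatrix},
\qquad
s = Z_c
$$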

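Results:

Left: image object detection and fusion result

Right: lidar object detection

Deep-learning-based image object detection

Deep-learning-based lidar object detection

Fused targets: boxes are redrawn for visualization, and each vehicle is assigned velocity information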
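One simple way to attach lidar velocity to image detections, shown only as an illustrative heuristic and not necessarily the method used here: project each lidar object's center into the image with the calibration, then for each image box pick the nearest lidar object whose projected center falls inside the box. It assumes each lidar object already carries a velocity estimate (for example from the lidar tracker). Struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct ImageBox { double x1, y1, x2, y2; };  // pixels
// Lidar object whose center has already been projected to pixel (u, v).
struct ProjectedLidarObject { double u, v, depth_m, vx_mps, vy_mps; };
// lidar_index == -1 means no lidar object supports this image box.
struct FusedTarget { ImageBox box; int lidar_index; double vx_mps, vy_mps; };

std::vector<FusedTarget> FuseBoxesWithLidar(
    const std::vector<ImageBox>& boxes,
    const std::vector<ProjectedLidarObject>& objs) {
  std::vector<FusedTarget> fused;
  fused.reserve(boxes.size());
  for (const ImageBox& b : boxes) {
    assert(b.x1 <= b.x2 && b.y1 <= b.y2);
    int best = -1;
    double best_depth = std::numeric_limits<double>::infinity();
    for (std::size_t k = 0; k < objs.size(); ++k) {
      const ProjectedLidarObject& o = objs[k];
      const bool inside = o.u >= b.x1 && o.u <= b.x2 && o.v >= b.y1 && o.v <= b.y2;
      // Prefer the closest object: farther ones are likely occluded background.
      if (inside && o.depth_m < best_depth) {
        best = static_cast<int>(k);
        best_depth = o.depth_m;
      }
    }
    FusedTarget f{b, best, 0.0, 0.0};
    if (best >= 0) {  // copy the lidar velocity onto the image target
      f.vx_mps = objs[best].vx_mps;
      f.vy_mps = objs[best].vy_mps;
    }
    fused.push_back(f);
  }
  return fused;
}
```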

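3: Lidar and millimeter-wave radar fusion

Algorithm requirements:

Image object detection

Radar object detection

Radar-camera calibration

Tracking algorithms: the prerequisite for fusion

4: Camera tracking algorithm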

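The Hungarian algorithm (also known as the KM, or Kuhn-Munkres, algorithm) is a method for solving the assignment problem. It relies on the following theorem:

If the same number is added to or subtracted from every element of a row or a column of the cost matrix, an optimal assignment for the new cost matrix is also an optimal assignment for the original one.

Algorithm steps (assuming an N×N square matrix):

1: Subtract the smallest element of each row from every element of that row.

2: Subtract the smallest element of each column from every element of that column.

3: Cover all zeros in the matrix with the minimum number of horizontal or vertical lines.

4: If the number of lines equals N, an optimal assignment can be chosen among the zeros and the algorithm ends; otherwise go to step 5.

5: Find the smallest element not covered by any line. Subtract it from every row that is not covered by a line and add it to every column that is covered by a line, then return to step 3.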
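The steps above describe the classic line-covering form. In code, the equivalent O(N³) potential-based formulation of the Hungarian (Kuhn-Munkres) algorithm is shorter, so it is swapped in here; its potential update plays the role of step 5. A sketch for a square matrix of finite costs that minimizes the total cost; the function name is hypothetical.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Returns assignment[i] = column assigned to row i (0-based), minimizing the
// total cost of an N x N cost matrix.
std::vector<int> HungarianAssign(const std::vector<std::vector<double>>& cost) {
  const int n = static_cast<int>(cost.size());
  const double kInf = std::numeric_limits<double>::infinity();
  // Row/column potentials (1-based; index 0 is a sentinel column).
  std::vector<double> u(n + 1, 0.0), v(n + 1, 0.0);
  // p[j]: row matched to column j (0 = none); way[j]: previous column on path.
  std::vector<int> p(n + 1, 0), way(n + 1, 0);
  for (int i = 1; i <= n; ++i) {
    assert(static_cast<int>(cost[i - 1].size()) == n);  // square matrix expected
    p[0] = i;
    int j0 = 0;
    std::vector<double> minv(n + 1, kInf);
    std::vector<bool> used(n + 1, false);
    do {  // grow an alternating tree until a free column is reached
      used[j0] = true;
      const int i0 = p[j0];
      double delta = kInf;
      int j1 = 0;
      for (int j = 1; j <= n; ++j) {
        if (used[j]) continue;
        const double reduced = cost[i0 - 1][j - 1] - u[i0] - v[j];
        if (reduced < minv[j]) { minv[j] = reduced; way[j] = j0; }
        if (minv[j] < delta) { delta = minv[j]; j1 = j; }
      }
      // Shift potentials by the smallest reduced cost outside the tree.
      for (int j = 0; j <= n; ++j) {
        if (used[j]) { u[p[j]] += delta; v[j] -= delta; }
        else { minv[j] -= delta; }
      }
      j0 = j1;
    } while (p[j0] != 0);
    do {  // flip the augmenting path
      const int j1 = way[j0];
      p[j0] = p[j1];
      j0 = j1;
    } while (j0 != 0);
  }
  std::vector<int> assignment(n, -1);
  for (int j = 1; j <= n; ++j)
    if (p[j] != 0) assignment[p[j] - 1] = j - 1;
  return assignment;
}
```

For the cost matrix {{4, 1, 3}, {2, 0, 5}, {3, 2, 2}} it assigns rows 0, 1, 2 to columns 1, 0, 2 (total cost 5). To maximize IoU instead of minimizing cost, pass 1 − IoU as the cost.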

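Understanding the image tracking algorithm

Before tracking starts, all targets have already been detected and their features modeled.

- When the first frame arrives, a new tracker is created and initialized for each detected target, and each tracker is assigned an ID.

- When each later frame arrives, the Kalman filter first produces the state prediction and covariance prediction from the boxes of the previous frames. The IoU between every tracker's predicted state and every box detected in the current frame is computed, and the Hungarian assignment algorithm finds the one-to-one matching with the maximum IoU (the data association step). Matched pairs whose IoU is below iou_threshold are then discarded.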

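- The detection boxes matched in the current frame are used to update the Kalman trackers: the Kalman gain, the state update, and the covariance update are computed, and the updated state is output as the tracking box for this frame. For detections in the current frame that were not matched, new trackers are initialized.

https://blog.csdn.net/u011837761/article/details/52058703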
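A sketch of the IoU part of the data association step: computing IoU between predicted track boxes and current detections, and discarding assigned pairs whose IoU is below iou_threshold. The assignment itself is taken as an input (for example from a Hungarian solver run on 1 − IoU); the (x1, y1, x2, y2) box layout and all names are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Axis-aligned box in pixels, assumed x1 < x2 and y1 < y2.
struct Box { double x1, y1, x2, y2; };

// Intersection over union; 0 when the boxes do not overlap.
double Iou(const Box& a, const Box& b) {
  const double iw = std::max(0.0, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
  const double ih = std::max(0.0, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
  const double inter = iw * ih;
  const double uni = (a.x2 - a.x1) * (a.y2 - a.y1) +
                     (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
  return uni > 0.0 ? inter / uni : 0.0;
}

struct Association {
  std::vector<std::pair<int, int>> matches;  // (track index, detection index)
  std::vector<int> unmatched_tracks;
  std::vector<int> unmatched_detections;     // will start new trackers
};

// assignment[t] = detection index the solver gave to track t, or -1.
Association FilterByIou(const std::vector<Box>& predicted,
                        const std::vector<Box>& detections,
                        const std::vector<int>& assignment,
                        double iou_threshold) {
  assert(assignment.size() == predicted.size());
  Association result;
  std::vector<bool> detection_used(detections.size(), false);
  for (std::size_t t = 0; t < predicted.size(); ++t) {
    const int d = assignment[t];
    if (d >= 0 && Iou(predicted[t], detections[d]) >= iou_threshold) {
      result.matches.emplace_back(static_cast<int>(t), d);
      detection_used[d] = true;
    } else {  // no partner, or overlap too small: treat as unmatched
      result.unmatched_tracks.push_back(static_cast<int>(t));
    }
  }
  for (std::size_t d = 0; d < detections.size(); ++d)
    if (!detection_used[d]) result.unmatched_detections.push_back(static_cast<int>(d));
  return result;
}
```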

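The Kalman tracker thus combines the track history with the current observation, weighting the residual between the historical box and the current-frame box, so that track IDs are matched more reliably.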
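A minimal constant-velocity Kalman filter for a single box coordinate (for example the box center x), showing the prediction and the gain, state, and covariance updates described above. It is a 1-D simplification with assumed noise parameters; a full tracker would filter the whole box state. Names are hypothetical.

```cpp
#include <cassert>

// State [position, velocity]; only the position is measured (H = [1, 0]).
class ConstantVelocityKalman1D {
 public:
  ConstantVelocityKalman1D(double pos, double vel, double pos_var, double vel_var,
                           double accel_noise, double meas_noise)
      : x_{pos, vel}, P_{{pos_var, 0.0}, {0.0, vel_var}},
        q_(accel_noise), r_(meas_noise) {
    assert(meas_noise > 0.0);
  }

  // x = F x, P = F P F^T + Q, with F = [[1, dt], [0, 1]] and Q from a
  // white (piecewise-constant) acceleration model.
  void Predict(double dt) {
    x_[0] += dt * x_[1];
    const double dt2 = dt * dt;
    const double p00 = P_[0][0] + dt * (P_[0][1] + P_[1][0]) + dt2 * P_[1][1];
    const double p01 = P_[0][1] + dt * P_[1][1];
    const double p10 = P_[1][0] + dt * P_[1][1];
    P_[0][0] = p00 + q_ * dt2 * dt2 / 4.0;
    P_[0][1] = p01 + q_ * dt2 * dt / 2.0;
    P_[1][0] = p10 + q_ * dt2 * dt / 2.0;
    P_[1][1] = P_[1][1] + q_ * dt2;
  }

  // Measurement update with observed position z.
  void Update(double z) {
    const double s = P_[0][0] + r_;   // innovation covariance
    const double k0 = P_[0][0] / s;   // Kalman gain K = P H^T / S
    const double k1 = P_[1][0] / s;
    const double y = z - x_[0];       // innovation (residual)
    x_[0] += k0 * y;
    x_[1] += k1 * y;
    const double r00 = P_[0][0], r01 = P_[0][1];  // row H P before update
    P_[0][0] -= k0 * r00;             // P = (I - K H) P
    P_[0][1] -= k0 * r01;
    P_[1][0] -= k1 * r00;
    P_[1][1] -= k1 * r01;
  }

  double position() const { return x_[0]; }
  double velocity() const { return x_[1]; }
  double position_variance() const { return P_[0][0]; }

 private:
  double x_[2];
  double P_[2][2];
  double q_, r_;
};
```

After an update the position lies between the prediction and the measurement, closer to whichever has the smaller variance, and the position variance shrinks.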

```protobuf
message WeightParam {
  optional float appearance = 1 [default = 0];
  optional float motion = 2 [default = 0];
  optional float shape = 3 [default = 0];
  optional float tracklet = 4 [default = 0];
  optional float overlap = 5 [default = 0];
}

message OmtParam {
  optional int32 img_capability = 1 [default = 7];
  optional int32 lost_age = 2 [default = 2];
  optional int32 reserve_age = 3 [default = 3];
  optional WeightParam weight_same_camera = 4;
  optional WeightParam weight_diff_camera = 5;
  optional float border = 9 [default = 30];
  optional float target_thresh = 10 [default = 0.65];
  optional bool correct_type = 11 [default = false];
  optional TargetParam target_param = 12;
  optional float min_init_height_ratio = 13 [default = 17];
  optional float target_combine_iou_threshold = 14 [default = 0.5];
  optional float fusion_target_thresh = 15 [default = 0.45];
  optional float image_displacement = 16 [default = 50];
  optional float abnormal_movement = 17 [default = 0.3];
  optional double same_ts_eps = 18 [default = 0.05];
  optional ReferenceParam reference = 19;
  optional string type_change_cost = 20;
}
```

If this algorithm is used, the association matrix can be computed by combining the following scores:

```cpp
// Similarity scores between track i and detection j.
float sa = ScoreAppearance(targets_[i], objects[j]);
float sm = ScoreMotion(targets_[i], objects[j]);
float ss = ScoreShape(targets_[i], objects[j]);
float so = ScoreOverlap(targets_[i], objects[j]);
if (sa == 0) {
  // No appearance score: use the "different camera" weights, without an
  // appearance term.
  hypo.score =
      omt_param_.weight_diff_camera().motion() * sm
      + omt_param_.weight_diff_camera().shape() * ss
      + omt_param_.weight_diff_camera().overlap() * so;
} else {
  // Appearance score available: use the "same camera" weights.
  hypo.score = (omt_param_.weight_same_camera().appearance() * sa +
                omt_param_.weight_same_camera().motion() * sm +
                omt_param_.weight_same_camera().shape() * ss +
                omt_param_.weight_same_camera().overlap() * so);
}
```
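Applying this scoring rule to every track/detection pair gives the full association matrix. A self-contained sketch, assuming the four scores per pair are already computed and using hypothetical structs that mirror the WeightParam fields:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mirror of WeightParam (tracklet weight omitted, as in the snippet).
struct Weights { float appearance, motion, shape, overlap; };
// Precomputed similarity scores for one (track, detection) pair.
struct PairScores { float appearance, motion, shape, overlap; };

// Rows = tracks, columns = detections; higher score = better match.
std::vector<std::vector<float>> BuildAssociationMatrix(
    const std::vector<std::vector<PairScores>>& scores,
    const Weights& same_camera, const Weights& diff_camera) {
  std::vector<std::vector<float>> mat(scores.size());
  for (std::size_t i = 0; i < scores.size(); ++i) {
    assert(scores[i].size() == scores[0].size());  // rectangular input
    mat[i].resize(scores[i].size());
    for (std::size_t j = 0; j < scores[i].size(); ++j) {
      const PairScores& s = scores[i][j];
      if (s.appearance == 0.f) {  // same rule as above: no appearance term
        mat[i][j] = diff_camera.motion * s.motion + diff_camera.shape * s.shape +
                    diff_camera.overlap * s.overlap;
      } else {
        mat[i][j] = same_camera.appearance * s.appearance +
                    same_camera.motion * s.motion + same_camera.shape * s.shape +
                    same_camera.overlap * s.overlap;
      }
    }
  }
  return mat;
}
```

Because a higher score means a better match, a cost-minimizing assignment solver would be run on the negated scores (or on 1 − score when the scores are normalized to [0, 1]).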

5: Lidar tracking algorithm

https://blog.csdn.net/lemonxiaoxiao/article/details/108645427

perception/lidar/lib/tracker/multi_lidar_fusion/mlf_track_object_matcher.cc

```cpp
#include <numeric>  // std::iota

// Associates existing lidar tracks with newly detected objects by
// bipartite-graph matching on a track x object cost matrix.
void MlfTrackObjectMatcher::Match(
    const MlfTrackObjectMatcherOptions &options,
    const std::vector<TrackedObjectPtr> &objects,
    const std::vector<MlfTrackDataPtr> &tracks,
    std::vector<std::pair<size_t, size_t>> *assignments,
    std::vector<size_t> *unassigned_tracks,
    std::vector<size_t> *unassigned_objects) {
  assignments->clear();
  unassigned_objects->clear();
  unassigned_tracks->clear();
  // Nothing to match: every object and every track stays unassigned.
  if (objects.empty() || tracks.empty()) {
    unassigned_objects->resize(objects.size());
    unassigned_tracks->resize(tracks.size());
    std::iota(unassigned_objects->begin(), unassigned_objects->end(), 0);
    std::iota(unassigned_tracks->begin(), unassigned_tracks->end(), 0);
    return;
  }
  // cost_thresh acts as the gating threshold on the matching cost.
  BipartiteGraphMatcherOptions matcher_options;
  matcher_options.cost_thresh = max_match_distance_;
  matcher_options.bound_value = bound_value_;
  // Background and foreground objects use separate matchers.
  BaseBipartiteGraphMatcher *matcher =
      objects[0]->is_background ? background_matcher_ : foreground_matcher_;
  // Cost matrix: rows = tracks, columns = objects.
  common::SecureMat<float> *association_mat = matcher->cost_matrix();
  association_mat->Resize(tracks.size(), objects.size());
  ComputeAssociateMatrix(tracks, objects, association_mat);
  matcher->Match(matcher_options, assignments, unassigned_tracks,
                 unassigned_objects);
  // Store the matching cost, normalized by the gate, on each assigned object.
  for (size_t i = 0; i < assignments->size(); ++i) {
    objects[assignments->at(i).second]->association_score =
        (*association_mat)(assignments->at(i).first,
                           assignments->at(i).second) /
        max_match_distance_;
  }
}
```

```cpp
// Weighted distance between a new object and an existing track.
float MlfTrackObjectDistance::ComputeDistance(
    const TrackedObjectConstPtr& object,
    const MlfTrackDataConstPtr& track) const {
  bool is_background = object->is_background;
  const TrackedObjectConstPtr latest_object = track->GetLatestObject().second;
  // Weights are looked up by (track's latest sensor name + object's sensor
  // name), falling back to the default weights if the pair is not configured.
  std::string key = latest_object->sensor_info.name + object->sensor_info.name;
  const std::vector<float>* weights = nullptr;
  if (is_background) {
    auto iter = background_weight_table_.find(key);
    if (iter == background_weight_table_.end()) {
      weights = &kBackgroundDefaultWeight;
    } else {
      weights = &iter->second;
    }
  } else {
    auto iter = foreground_weight_table_.find(key);
    if (iter == foreground_weight_table_.end()) {
      weights = &kForegroundDefaultWeight;
    } else {
      weights = &iter->second;
    }
  }
  if (weights == nullptr || weights->size() < 7) {
    AERROR << "Invalid weights";
    return 1e+10f;
  }
  float distance = 0.f;
  float delta = 1e-10f;  // terms with a negligible weight are skipped
  // Predict the track state to the object's timestamp.
  double current_time = object->object_ptr->latest_tracked_time;
  track->PredictState(current_time);
  // Time since the track was last visible (0 for a track with age 0).
  double time_diff =
      track->age_ ? current_time - track->latest_visible_time_ : 0;
  // weights[0]: location
  if (weights->at(0) > delta) {
    distance +=
        weights->at(0) * LocationDistance(latest_object, track->predict_.state,
                                          object, time_diff);
  }
  // weights[1]: direction
  if (weights->at(1) > delta) {
    distance +=
        weights->at(1) * DirectionDistance(latest_object, track->predict_.state,
                                           object, time_diff);
  }
  // weights[2]: bounding-box size
  if (weights->at(2) > delta) {
    distance +=
        weights->at(2) * BboxSizeDistance(latest_object, track->predict_.state,
                                          object, time_diff);
  }
  // weights[3]: number of points
  if (weights->at(3) > delta) {
    distance +=
        weights->at(3) * PointNumDistance(latest_object, track->predict_.state,
                                          object, time_diff);
  }
  // weights[4]: histogram
  if (weights->at(4) > delta) {
    distance +=
        weights->at(4) * HistogramDistance(latest_object, track->predict_.state,
                                           object, time_diff);
  }
  // weights[5]: centroid shift
  if (weights->at(5) > delta) {
    distance += weights->at(5) * CentroidShiftDistance(latest_object,
                                                       track->predict_.state,
                                                       object, time_diff);
  }
  // weights[6]: bounding-box IoU
  if (weights->at(6) > delta) {
    distance += weights->at(6) *
                BboxIouDistance(latest_object, track->predict_.state, object,
                                time_diff, background_object_match_threshold_);
  }
  // for foreground, calculate semantic map based distance
  // if (!is_background) {
  //   distance += weights->at(7) * SemanticMapDistance(*track, object);
  // }
  return distance;
}
```
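The structure of ComputeDistance, a per-sensor-pair weight lookup with a default fallback followed by a weighted sum that skips near-zero weights, can be isolated as below, together with the normalized association score from Match. This is a simplified sketch: the distance terms are passed in precomputed, whereas ComputeDistance only evaluates a term when its weight is non-negligible. Function names, and the sensor names in the example, are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Weights keyed by (track sensor name + object sensor name), with a fallback.
const std::vector<float>& LookupWeights(
    const std::map<std::string, std::vector<float>>& table,
    const std::string& track_sensor, const std::string& object_sensor,
    const std::vector<float>& default_weights) {
  auto it = table.find(track_sensor + object_sensor);
  return it == table.end() ? default_weights : it->second;
}

// Weighted sum of distance terms; terms with a (near) zero weight are skipped.
float WeightedDistance(const std::vector<float>& weights,
                       const std::vector<float>& terms) {
  if (weights.size() < terms.size()) return 1e10f;  // invalid weights sentinel
  const float delta = 1e-10f;
  float distance = 0.f;
  for (std::size_t k = 0; k < terms.size(); ++k)
    if (weights[k] > delta) distance += weights[k] * terms[k];
  return distance;
}

// Matching cost normalized by the gating distance, as stored in association_score.
float NormalizedScore(float cost, float max_match_distance) {
  assert(max_match_distance > 0.f);
  return cost / max_match_distance;
}
```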

```cpp
/******************************************************************************
 * Copyright 2018 The Apollo Authors. All Rights Reserved.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 *****************************************************************************/
```


6: Radar tracking algorithm

```cpp
// Two-stage association: first by ID (IDMatch), then the remaining tracks and
// objects by motion-compensated distance (TrackObjectPropertyMatch).
bool HMMatcher::Match(const std::vector<RadarTrackPtr> &radar_tracks,
                      const base::Frame &radar_frame,
                      const TrackObjectMatcherOptions &options,
                      std::vector<TrackObjectPair> *assignments,
                      std::vector<size_t> *unassigned_tracks,
                      std::vector<size_t> *unassigned_objects) {
  IDMatch(radar_tracks, radar_frame, assignments, unassigned_tracks,
          unassigned_objects);
  TrackObjectPropertyMatch(radar_tracks, radar_frame, assignments,
                           unassigned_tracks, unassigned_objects);
  return true;
}

// Accepts a track/object pair if their symmetric distance is within the gate.
bool HMMatcher::RefinedTrack(const base::ObjectPtr &track_object,
                             double track_timestamp,
                             const base::ObjectPtr &radar_object,
                             double radar_timestamp) {
  double dist = 0.5 * DistanceBetweenObs(track_object, track_timestamp,
                                         radar_object, radar_timestamp) +
                0.5 * DistanceBetweenObs(radar_object, radar_timestamp,
                                         track_object, track_timestamp);
  return dist < BaseMatcher::GetMaxMatchDistance();
}

void HMMatcher::TrackObjectPropertyMatch(
    const std::vector<RadarTrackPtr> &radar_tracks,
    const base::Frame &radar_frame, std::vector<TrackObjectPair> *assignments,
    std::vector<size_t> *unassigned_tracks,
    std::vector<size_t> *unassigned_objects) {
  if (unassigned_tracks->empty() || unassigned_objects->empty()) {
    return;
  }
  // Cost matrix over the still-unassigned tracks (rows) and objects (columns).
  std::vector<std::vector<double>> association_mat(unassigned_tracks->size());
  for (size_t i = 0; i < association_mat.size(); ++i) {
    association_mat[i].resize(unassigned_objects->size(), 0);
  }
  ComputeAssociationMat(radar_tracks, radar_frame, *unassigned_tracks,
                        *unassigned_objects, &association_mat);

  // from perception-common
  common::SecureMat<double> *global_costs =
      hungarian_matcher_.mutable_global_costs();
  global_costs->Resize(unassigned_tracks->size(), unassigned_objects->size());
  for (size_t i = 0; i < unassigned_tracks->size(); ++i) {
    for (size_t j = 0; j < unassigned_objects->size(); ++j) {
      (*global_costs)(i, j) = association_mat[i][j];
    }
  }
  // Gated Hungarian matching that minimizes the total cost (OPTMIN).
  std::vector<TrackObjectPair> property_assignments;
  std::vector<size_t> property_unassigned_tracks;
  std::vector<size_t> property_unassigned_objects;
  hungarian_matcher_.Match(
      BaseMatcher::GetMaxMatchDistance(), BaseMatcher::GetBoundMatchDistance(),
      common::GatedHungarianMatcher<double>::OptimizeFlag::OPTMIN,
      &property_assignments, &property_unassigned_tracks,
      &property_unassigned_objects);

  // Map indices of the sub-problem back to global track/object indices.
  for (size_t i = 0; i < property_assignments.size(); ++i) {
    size_t gt_idx = unassigned_tracks->at(property_assignments[i].first);
    size_t go_idx = unassigned_objects->at(property_assignments[i].second);
    assignments->push_back(std::pair<size_t, size_t>(gt_idx, go_idx));
  }
  std::vector<size_t> temp_unassigned_tracks;
  std::vector<size_t> temp_unassigned_objects;
  for (size_t i = 0; i < property_unassigned_tracks.size(); ++i) {
    size_t gt_idx = unassigned_tracks->at(property_unassigned_tracks[i]);
    temp_unassigned_tracks.push_back(gt_idx);
  }
  for (size_t i = 0; i < property_unassigned_objects.size(); ++i) {
    size_t go_idx = unassigned_objects->at(property_unassigned_objects[i]);
    temp_unassigned_objects.push_back(go_idx);
  }
  *unassigned_tracks = temp_unassigned_tracks;
  *unassigned_objects = temp_unassigned_objects;
}

// Cost = average of the forward (track -> frame) and backward
// (frame -> track) motion-compensated distances.
void HMMatcher::ComputeAssociationMat(
    const std::vector<RadarTrackPtr> &radar_tracks,
    const base::Frame &radar_frame,
    const std::vector<size_t> &unassigned_tracks,
    const std::vector<size_t> &unassigned_objects,
    std::vector<std::vector<double>> *association_mat) {
  double frame_timestamp = radar_frame.timestamp;
  for (size_t i = 0; i < unassigned_tracks.size(); ++i) {
    for (size_t j = 0; j < unassigned_objects.size(); ++j) {
      const base::ObjectPtr &track_object =
          radar_tracks[unassigned_tracks[i]]->GetObs();
      const base::ObjectPtr &frame_object =
          radar_frame.objects[unassigned_objects[j]];
      double track_timestamp =
          radar_tracks[unassigned_tracks[i]]->GetTimestamp();
      double distance_forward = DistanceBetweenObs(
          track_object, track_timestamp, frame_object, frame_timestamp);
      double distance_backward = DistanceBetweenObs(
          frame_object, frame_timestamp, track_object, track_timestamp);
      association_mat->at(i).at(j) =
          0.5 * distance_forward + 0.5 * distance_backward;
    }
  }
}

// Propagates obs1 to timestamp2 with its own velocity and returns the planar
// (x, y) distance to obs2.
double HMMatcher::DistanceBetweenObs(const base::ObjectPtr &obs1,
                                     double timestamp1,
                                     const base::ObjectPtr &obs2,
                                     double timestamp2) {
  double time_diff = timestamp2 - timestamp1;
  return (obs2->center - obs1->center -
          obs1->velocity.cast<double>() * time_diff)
      .head(2)
      .norm();
}
```
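The distance used by ComputeAssociationMat and RefinedTrack can be reproduced standalone: each observation is propagated to the other's timestamp with its own velocity, and the two planar residuals are averaged, which makes the cost symmetric. A sketch with a hypothetical plain struct in place of base::ObjectPtr and the Eigen vectors:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a radar observation (planar position/velocity).
struct Obs { double x, y, vx, vy, timestamp; };

// Distance between obs2 and obs1 propagated to obs2's time with obs1's velocity.
double DistanceBetweenObs(const Obs& obs1, const Obs& obs2) {
  const double dt = obs2.timestamp - obs1.timestamp;
  assert(std::isfinite(dt));
  const double dx = obs2.x - obs1.x - obs1.vx * dt;
  const double dy = obs2.y - obs1.y - obs1.vy * dt;
  return std::hypot(dx, dy);
}

// Symmetric association cost: average of forward and backward distances.
double SymmetricDistance(const Obs& track, const Obs& detection) {
  return 0.5 * DistanceBetweenObs(track, detection) +
         0.5 * DistanceBetweenObs(detection, track);
}
```

For a track at x = 0 moving at 1 m/s and a detection 1 s later at x = 1 with the same velocity, the cost is 0; if the detection is instead at x = 2, both directions leave a 1 m residual and the cost is 1.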


7: Camera-radar fusion algorithm

8: Lidar-camera fusion algorithm

#include