Multi-sensor fusion algorithms based on Lidar, Radar, and Camera

1: Radar

① Continental ARS408 parameters:

② Livox Mid-40 parameters (note that the Mid-40 is a lidar, not a radar):

Connecting and testing the Mid-40:

https://blog.csdn.net/Summer_CX/article/details/116657887

2: Traditional (non-learning) fusion algorithms

e.g., Apollo

https://zhuanlan.zhihu.com/p/33852112

3: Deep-learning fusion algorithms

e.g.:

CenterFusion

https://arxiv.org/pdf/2011.04841.pdf

Difficulties:

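To associate radar detections with the objects in the image, a simple approach is to map each radar detection point onto the image plane and associate it with an object if the point falls inside that object's 2D bounding box. This is not a very robust solution, because there is no one-to-one mapping between radar detections and objects.

Many objects in the scene generate multiple radar detections, and some radar detections do not correspond to any object. In addition, because the z dimension of a radar detection is inaccurate (or missing entirely), a mapped radar detection may end up outside the 2D bounding box of its corresponding object. Finally, radar detections from occluded objects map to the same region of the image, which makes them hard to tell apart on the 2D image plane.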
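A minimal sketch of the naive association described above, to make its failure modes concrete. It is not CenterFusion's own method (CenterFusion uses a frustum-based association instead). It assumes the radar point has already been transformed into the camera frame (x right, y down, z forward) and uses hypothetical pinhole intrinsics; the function returns every box that contains the projected point, so an occlusion case shows up as more than one hit and a clutter detection as none.

```cpp
#include <array>
#include <cassert>
#include <vector>

struct Box { double x1, y1, x2, y2; };  // 2D bounding box in pixels
struct Pixel { double u, v; bool valid; };

// Pinhole projection of a camera-frame point; points behind the camera are invalid.
Pixel project(const std::array<double, 3>& p, double fx, double fy, double cx, double cy) {
    if (p[2] <= 0.0) return {0.0, 0.0, false};
    return {fx * p[0] / p[2] + cx, fy * p[1] / p[2] + cy, true};
}

// Naive association: indices of all boxes containing the projected radar point.
// Several indices = ambiguous (e.g. occlusion); none = detection matches no object.
std::vector<int> associate(const std::array<double, 3>& radar_pt, const std::vector<Box>& boxes,
                           double fx, double fy, double cx, double cy) {
    assert(fx > 0.0 && fy > 0.0);
    std::vector<int> hits;
    const Pixel px = project(radar_pt, fx, fy, cx, cy);
    if (!px.valid) return hits;
    for (int i = 0; i < static_cast<int>(boxes.size()); ++i) {
        const Box& b = boxes[i];
        if (px.u >= b.x1 && px.u <= b.x2 && px.v >= b.y1 && px.v <= b.y2) hits.push_back(i);
    }
    return hits;
}
```

With hypothetical intrinsics fx = fy = 500, cx = 320, cy = 240, a radar point at (0, 0, 10) m projects to pixel (320, 240); if two overlapping boxes cover that pixel, both are returned, which is exactly the occlusion ambiguity described above.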

4: Multi-camera fusion: long-focal/short-focal and stereo camera fusion

Camera-to-camera calibration

Basic method:

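Judge the calibration from a fused image in which the long-focal camera image is projected onto the short-focal camera image: the green channel holds the short-focal image, and the red and blue channels hold the projected long-focal image. Check the alignment visually. In the overlap region of the fused image, pick distant scenery (beyond 50 m) to judge alignment. If it coincides, the accuracy is high; pink or green ghosting (misalignment) indicates an error. When the error exceeds a tolerance (set according to the actual application), calibration has failed and must be redone. (Nearby objects normally show horizontal misalignment caused by parallax, and the closer the object, the larger the offset; this is expected. With horizontally mounted cameras, the vertical direction is not affected by parallax.)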
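The check image described above can be sketched in plain C++ (no OpenCV, so it compiles standalone). Assumptions: both images are 8-bit grayscale in row-major order; R is the rotation that takes long-focal camera coordinates into short-focal coordinates; and the scene is distant enough that the translation between the cameras can be ignored, which is why the text judges objects beyond 50 m. Under that assumption a long-focal pixel maps to the short-focal image through the infinite homography K_s R K_l^-1, and the sketch resamples the long-focal image through its inverse K_l R^T K_s^-1.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 mul(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

// Inverse of a skew-free intrinsic matrix [fx 0 cx; 0 fy cy; 0 0 1].
Mat3 inv_K(const Mat3& K) {
    const double fx = K[0][0], fy = K[1][1], cx = K[0][2], cy = K[1][2];
    assert(fx != 0.0 && fy != 0.0);
    return {{{1.0 / fx, 0.0, -cx / fx}, {0.0, 1.0 / fy, -cy / fy}, {0.0, 0.0, 1.0}}};
}

// Distant-scene mapping from short-focal pixels to long-focal pixels: K_l * R^T * K_s^-1.
Mat3 short_to_long(const Mat3& K_s, const Mat3& K_l, const Mat3& R) {
    Mat3 Rt{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) Rt[i][j] = R[j][i];
    return mul(mul(K_l, Rt), inv_K(K_s));
}

struct Gray { int w, h; std::vector<std::uint8_t> px; };  // row-major 8-bit image

// Check image in the short-focal view: G = short-focal pixel, R = B = long-focal pixel
// (nearest neighbour). Pixels outside the long-focal view keep R = B = 0 and look green.
std::vector<std::array<std::uint8_t, 3>> fuse_rgb(const Gray& s, const Gray& l, const Mat3& H_s2l) {
    assert(s.px.size() == static_cast<std::size_t>(s.w) * s.h);
    assert(l.px.size() == static_cast<std::size_t>(l.w) * l.h);
    std::vector<std::array<std::uint8_t, 3>> out(s.px.size());
    for (int v = 0; v < s.h; ++v) {
        for (int u = 0; u < s.w; ++u) {
            const double x = H_s2l[0][0] * u + H_s2l[0][1] * v + H_s2l[0][2];
            const double y = H_s2l[1][0] * u + H_s2l[1][1] * v + H_s2l[1][2];
            const double z = H_s2l[2][0] * u + H_s2l[2][1] * v + H_s2l[2][2];
            std::uint8_t rb = 0;
            if (z > 0.0) {
                const long lu = std::lround(x / z), lv = std::lround(y / z);
                if (lu >= 0 && lu < l.w && lv >= 0 && lv < l.h) rb = l.px[lv * l.w + lu];
            }
            const std::uint8_t g = s.px[v * s.w + u];
            out[v * s.w + u] = {rb, g, rb};  // (R, G, B)
        }
    }
    return out;
}
```

Where both images agree the output pixel is gray (R = G = B); misalignment shows up as magenta or green fringes. With OpenCV the same result would typically come from cv::warpPerspective followed by cv::merge of the three channels.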

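Example results: in the figures below, Figure 2 shows extrinsics that meet the accuracy requirement, and Figure 3 shows extrinsics that do not; in that case, repeat the calibration.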

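Solving R and T in stereo calibration (long/short-focal calibration):

I have recently been working on multi-camera image stitching. A multi-camera rig can be split into several stereo pairs and stitched pair by pair, so here I record some principles of stereo camera calibration.

Calibrating two cameras with a calibration board relies on the board's feature points all lying in one plane.

The relative pose between the two cameras (between the long-focal and short-focal cameras):

Pose estimation as in visual SLAM: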

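The fundamental matrix and the essential matrix are both 3×3 matrices and differ only by the camera intrinsics: F = K2^-T E K1^-1, where K1 and K2 are the intrinsic matrices of the two cameras (identical when a single camera is used). Once the intrinsics are known, either one can therefore be used to recover the pose, although E has fewer degrees of freedom to estimate (5 versus 7).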
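Because a long-focal/short-focal pair has two different intrinsic matrices, the conversion uses K1 and K2 separately. The plain-C++ sketch below builds E = t^ R for a hypothetical pose (R = I, t = (-0.2, 0.05, 0)), converts it to F with hypothetical intrinsics (fx = 2000 px for the long-focal camera, 1000 px for the short-focal one), and evaluates the epipolar residual p2^T F p1, which is zero for a true correspondence.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

Mat3 mul(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

Mat3 transpose(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) T[i][j] = A[j][i];
    return T;
}

// Inverse of a skew-free intrinsic matrix [fx 0 cx; 0 fy cy; 0 0 1].
Mat3 inv_K(const Mat3& K) {
    const double fx = K[0][0], fy = K[1][1], cx = K[0][2], cy = K[1][2];
    assert(fx != 0.0 && fy != 0.0);
    return {{{1.0 / fx, 0.0, -cx / fx}, {0.0, 1.0 / fy, -cy / fy}, {0.0, 0.0, 1.0}}};
}

// t^ : skew-symmetric matrix with t^ * x = t cross x.
Mat3 skew(const Vec3& t) {
    return {{{0.0, -t[2], t[1]}, {t[2], 0.0, -t[0]}, {-t[1], t[0], 0.0}}};
}

// Essential matrix for X2 = R * X1 + t: x2^T E x1 = 0 on normalized coordinates.
Mat3 essential(const Mat3& R, const Vec3& t) { return mul(skew(t), R); }

// F = K2^-T * E * K1^-1: p2^T F p1 = 0 on pixel coordinates.
Mat3 fundamental_from_essential(const Mat3& E, const Mat3& K1, const Mat3& K2) {
    return mul(mul(transpose(inv_K(K2)), E), inv_K(K1));
}

// Epipolar residual p2^T F p1 for homogeneous pixels (u, v, 1).
double epipolar_residual(const Mat3& F, const Vec3& p1, const Vec3& p2) {
    double r = 0.0;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r += p2[i] * F[i][j] * p1[j];
    return r;
}
```

For the hypothetical 3D point X1 = (1, 2, 20) m, the long-focal pixel is (1060, 740) and the short-focal pixel is (1000, 642.5), so the residual is zero up to rounding; moving the second pixel to (1000, 700) gives a clearly non-zero residual. The pixel residual equals x2^T E x1 because K2^-1 p2 = x2 and K1^-1 p1 = x1.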

#include <iostream>
#include <vector>
#include <opencv2/core.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>
using namespace std;
using namespace cv;

void pose_estimation_2d2d ( const std::vector<KeyPoint>& keypoints_1,
                            const std::vector<KeyPoint>& keypoints_2,
                            const std::vector<DMatch>& matches,
                            Mat& R, Mat& t )
{
    // Camera intrinsics, TUM Freiburg2
    Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 );

    //-- Convert the matched keypoints to vector<Point2f>
    vector<Point2f> points1;
    vector<Point2f> points2;
    for ( int i = 0; i < ( int ) matches.size(); i++ )
    {
        points1.push_back ( keypoints_1[matches[i].queryIdx].pt );
        points2.push_back ( keypoints_2[matches[i].trainIdx].pt );
    }

    //-- Fundamental matrix (8-point method; FM_8POINT replaces the old C constant CV_FM_8POINT)
    Mat fundamental_matrix = findFundamentalMat ( points1, points2, FM_8POINT );
    cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;

    //-- Essential matrix. This overload assumes both images share one focal length and
    //-- principal point; for a long/short-focal pair, normalize each camera's points with its own K first.
    Point2d principal_point ( 325.1, 249.7 ); // principal point, TUM dataset calibration
    double focal_length = 521;                // focal length, TUM dataset calibration
    Mat essential_matrix = findEssentialMat ( points1, points2, focal_length, principal_point );
    cout << "essential_matrix is " << endl << essential_matrix << endl;

    //-- Homography matrix (RANSAC, 3-pixel reprojection threshold)
    Mat homography_matrix = findHomography ( points1, points2, RANSAC, 3 );
    cout << "homography_matrix is " << endl << homography_matrix << endl;

    //-- Recover rotation and translation from the essential matrix
    recoverPose ( essential_matrix, points1, points2, R, t, focal_length, principal_point );
    cout << "R is " << endl << R << endl;
    cout << "t is " << endl << t << endl;
}

————————————————————————————————————————————————————————————————————————————————————————————————————————————————————————

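Applications of the homography matrix:

Combined perception with long-focal and short-focal cameras:

the long-focal camera has a narrow field of view and covers long distances; the short-focal camera has a wide field of view and covers short distances.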
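The trade-off follows from the pinhole model: the horizontal field of view is FOV = 2·atan(W / (2·fx)) for an image W pixels wide, while an object of width w at distance Z spans fx·w/Z pixels. A small sketch with hypothetical numbers:

```cpp
#include <cassert>
#include <cmath>

// Horizontal field of view in degrees of a pinhole camera: 2 * atan(W / (2 * fx)).
double horizontal_fov_deg(double image_width_px, double fx_px) {
    assert(image_width_px > 0.0 && fx_px > 0.0);
    const double pi = std::acos(-1.0);
    return 2.0 * std::atan(image_width_px / (2.0 * fx_px)) * 180.0 / pi;
}

// Pixels spanned by an object of width width_m (metres) at distance dist_m: fx * w / Z.
double pixels_on_target(double fx_px, double width_m, double dist_m) {
    assert(dist_m > 0.0);
    return fx_px * width_m / dist_m;
}
```

For a hypothetical 1920-px-wide sensor, fx = 960 px gives a 90° field of view. A 1.8 m wide car at 100 m covers 18 px at fx = 1000 px but 72 px at fx = 4000 px, which is why the long-focal camera is used for distant targets.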

For example, a stereo calibration method:

https://blog.csdn.net/plateros/article/details/102665505

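For points on a common plane, the rotation and translation between the cameras can be recovered from the homography matrix;

that is, the homography expresses the relationship between cameras at different viewpoints.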
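For points on a plane n^T X = d (expressed in the first camera's frame), the homography induced by a relative pose (R, t) is H = K2 (R + t n^T / d) K1^-1; this is why R and t can be recovered from H (OpenCV provides cv::decomposeHomographyMat, which returns up to four candidate solutions). The sketch below only goes in the forward direction, with hypothetical intrinsics and pose, so the relation can be checked numerically.

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

Mat3 mul(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

// Inverse of a skew-free intrinsic matrix [fx 0 cx; 0 fy cy; 0 0 1].
Mat3 inv_K(const Mat3& K) {
    const double fx = K[0][0], fy = K[1][1], cx = K[0][2], cy = K[1][2];
    assert(fx != 0.0 && fy != 0.0);
    return {{{1.0 / fx, 0.0, -cx / fx}, {0.0, 1.0 / fy, -cy / fy}, {0.0, 0.0, 1.0}}};
}

// Plane n^T X = d in camera-1 coordinates (unit normal n, d > 0). Camera 2 sees
// X2 = R X + t = (R + t n^T / d) X, so pixels map as p2 ~ K2 (R + t n^T / d) K1^-1 p1.
Mat3 plane_homography(const Mat3& K1, const Mat3& K2, const Mat3& R,
                      const Vec3& t, const Vec3& n, double d) {
    assert(d > 0.0);
    Mat3 A{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) A[i][j] = R[i][j] + t[i] * n[j] / d;
    return mul(mul(K2, A), inv_K(K1));
}

// Map pixel (u, v) through H and dehomogenize.
std::array<double, 2> apply_h(const Mat3& H, double u, double v) {
    const double x = H[0][0] * u + H[0][1] * v + H[0][2];
    const double y = H[1][0] * u + H[1][1] * v + H[1][2];
    const double w = H[2][0] * u + H[2][1] * v + H[2][2];
    return {x / w, y / w};
}
```

With hypothetical K (fx = fy = 500, cx = 320, cy = 240) for both cameras, R = I, t = (0.5, 0, 0) and the plane Z = 10 m, the plane point (1, -1, 10) is seen at (370, 190) by camera 1 and at (395, 190) by camera 2, and H maps the first pixel onto the second. As d grows, the t n^T / d term vanishes and H tends to K2 R K1^-1, the same mapping used for the distant-scene check in camera-to-camera calibration.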

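#include <algorithm>
#include <cstdio>
#include <vector>
#include <opencv2/core.hpp>
#include <opencv2/features2d.hpp>
using namespace std;
using namespace cv;

// Function header reconstructed from the variables used below.
void find_feature_matches ( const Mat& img_1, const Mat& img_2,
                            std::vector<KeyPoint>& keypoints_1,
                            std::vector<KeyPoint>& keypoints_2,
                            std::vector<DMatch>& matches )
{
    Mat descriptors_1, descriptors_2;
    // OpenCV 3/4 API (OpenCV 2 used FeatureDetector::create ( "ORB" ) and DescriptorExtractor::create ( "ORB" ))
    Ptr<FeatureDetector> detector = ORB::create();
    Ptr<DescriptorExtractor> descriptor = ORB::create();
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" ); // binary descriptors: match by Hamming distance

    //------------------ Step 1: detect Oriented FAST corner positions ------------------
    detector->detect ( img_1, keypoints_1 );
    detector->detect ( img_2, keypoints_2 );

    //------------------ Step 2: compute BRIEF descriptors at the corners ------------------
    descriptor->compute ( img_1, keypoints_1, descriptors_1 );
    descriptor->compute ( img_2, keypoints_2, descriptors_2 );

    //------------------ Step 3: match the BRIEF descriptors of the two images by Hamming distance
    vector<DMatch> match; // raw descriptor matches
    // BFMatcher matcher ( NORM_HAMMING );
    matcher->match ( descriptors_1, descriptors_2, match );

    //------------------ Step 4: filter the matched pairs ------------------
    double min_dist = 10000, max_dist = 0;
    // Minimum and maximum distance over all matches (most and least similar pairs)
    for ( int i = 0; i < ( int ) match.size(); i++ )
    {
        double dist = match[i].distance;
        if ( dist < min_dist ) min_dist = dist; // smallest distance, most similar
        if ( dist > max_dist ) max_dist = dist; // largest distance, least similar
    }
    printf ( "-- Max dist : %f \n", max_dist );
    printf ( "-- Min dist : %f \n", min_dist );

    // Treat a match as wrong when its distance exceeds twice the minimum distance.
    // The minimum can be very small, so use an empirical lower bound of 30.
    for ( int i = 0; i < ( int ) match.size(); i++ )
    {
        if ( match[i].distance <= std::max ( 2 * min_dist, 30.0 ) )
        {
            matches.push_back ( match[i] );
        }
    }
}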

// Pixel coordinates to normalized camera coordinates:
// x1 = K^-1 * p1, x2 = K^-1 * p2 (points on the normalized plane z = 1 in the camera frame)
Point2d pixel2cam ( const Point2d& p, const Mat& K )
{
    return Point2d
    (
        ( p.x - K.at<double> ( 0,2 ) ) / K.at<double> ( 0,0 ), // x = (px - cx) / fx
        ( p.y - K.at<double> ( 1,2 ) ) / K.at<double> ( 1,1 )  // y = (py - cy) / fy
    );
}

// Epipolar geometry: from the matched point pairs, estimate the rotation R and
// translation t that take coordinates from the first image's camera to the second's
void pose_estimation_2d2d ( const std::vector<KeyPoint>& keypoints_1,
                            const std::vector<KeyPoint>& keypoints_2,
                            const std::vector<DMatch>& matches,
                            Mat& R, Mat& t )
{
    // Camera intrinsics, TUM Freiburg2
    // [fx  0 cx
    //   0 fy cy
    //   0  0  1]
    Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 );

    //------------ Convert the matched points to vector<Point2f> ------------
    vector<Point2f> points1;
    vector<Point2f> points2;
    for ( int i = 0; i < ( int ) matches.size(); i++ )
    {
        points1.push_back ( keypoints_1[matches[i].queryIdx].pt );
        points2.push_back ( keypoints_2[matches[i].trainIdx].pt );
    }

    //----------- Fundamental matrix F: p2^T * F * p1 = 0 -----------
    Mat fundamental_matrix = findFundamentalMat ( points1, points2, FM_8POINT ); // 8-point method
    cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;

    //----------- Essential matrix E: x2^T * E * x1 = 0 -----------
    Point2d principal_point ( 325.1, 249.7 ); // principal point (cx, cy), TUM dataset calibration
    double focal_length = 521;                // focal length (fx ≈ fy), TUM dataset calibration
    Mat essential_matrix = findEssentialMat ( points1, points2, focal_length, principal_point );
    cout << "essential_matrix is " << endl << essential_matrix << endl;

    //----------- Homography H: p2 = H * p1 (up to scale) -----------
    Mat homography_matrix = findHomography ( points1, points2, RANSAC, 3 );
    cout << "homography_matrix is " << endl << homography_matrix << endl;

    //-- Recover R and t from E. E = t^ R, and its SVD is E = U * diag(1, 1, 0) * V^T (up to scale)
    //-- with U, V orthogonal. This yields four (R, t) candidates; recoverPose keeps the one that
    //-- places the triangulated points in front of both cameras.
    recoverPose ( essential_matrix, points1, points2, R, t, focal_length, principal_point );
    cout << "R is " << endl << R << endl;
    cout << "t is " << endl << t << endl;
}

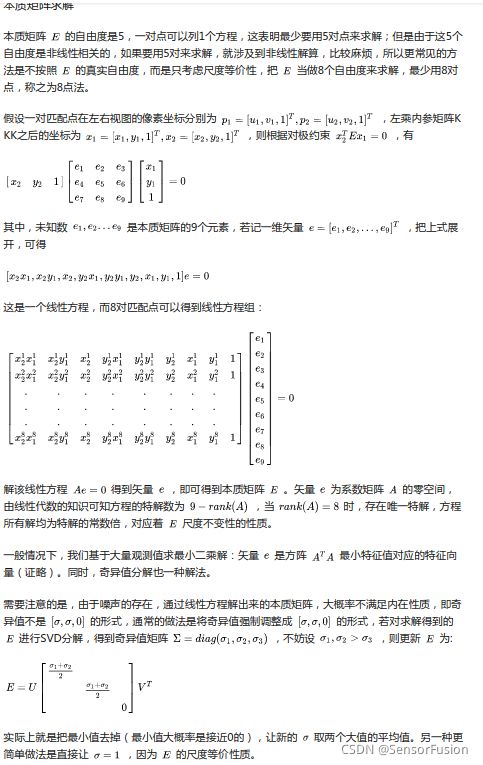

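Fundamental matrix

What is the fundamental matrix?

The fundamental matrix describes the correspondence between images of a general 3D scene taken from different viewpoints: a point in one image maps to a line (its epipolar line) in the other. It is not a special case of the homography; rather, the homography covers the special case in which the points lie on one plane (or the camera only rotates).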

Homography matrix

http://blog.itpub.net/31562045/viewspace-2220170/

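What is the homography matrix?

It describes how points lying on the same plane correspond between different images.

The relationship is not limited to the same object seen from different viewpoints; take the bank card in our example.

In most introductions, however, it is presented as the correspondence between images taken from different viewpoints, and in that setting it plays a role parallel to the fundamental matrix.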

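Example

A bank card may be tilted when photographed, and digit recognition on the tilted image may then be less accurate.

After a homography transform straightens it, recognition accuracy is much higher.

The homography relation holds not only for one point on the tilted card and its counterpart on the straightened card;

it holds for every point on the tilted card and its counterpart on the straightened card.

This is what "the same plane seen in different images" means.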
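Straightening the card needs only its four corner correspondences: each pair gives two linear equations in the eight unknown entries of H (with h33 fixed to 1). The plain-C++ sketch below solves that 8×8 direct linear transform (DLT) system by Gauss-Jordan elimination. It is a minimal illustration: fixing h33 = 1 fails in the rare case where the true h33 is 0, and for noisy real data one would normally use more points with coordinate normalization and RANSAC (e.g. cv::findHomography), or cv::getPerspectiveTransform for exactly four points.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Mat3 = std::array<std::array<double, 3>, 3>;
struct Pt { double x, y; };

// Map a point through H and dehomogenize.
Pt apply_h(const Mat3& H, Pt p) {
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
            (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}

// Estimate H (h33 = 1) from four correspondences src[i] -> dst[i] with the DLT.
// Returns false for degenerate input (e.g. three collinear points).
bool homography_4pt(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst, Mat3& H) {
    double A[8][9] = {};  // augmented system [M | b]
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        // u * (h6 x + h7 y + 1) = h0 x + h1 y + h2, and likewise for v with h3..h5.
        const double r1[9] = {x, y, 1, 0, 0, 0, -u * x, -u * y, u};
        const double r2[9] = {0, 0, 0, x, y, 1, -v * x, -v * y, v};
        for (int j = 0; j < 9; ++j) { A[2 * i][j] = r1[j]; A[2 * i + 1][j] = r2[j]; }
    }
    for (int c = 0; c < 8; ++c) {  // Gauss-Jordan with partial pivoting
        int piv = c;
        for (int r = c + 1; r < 8; ++r)
            if (std::fabs(A[r][c]) > std::fabs(A[piv][c])) piv = r;
        if (std::fabs(A[piv][c]) < 1e-12) return false;
        for (int j = 0; j < 9; ++j) std::swap(A[c][j], A[piv][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == c) continue;
            const double f = A[r][c] / A[c][c];
            for (int j = c; j < 9; ++j) A[r][j] -= f * A[c][j];
        }
    }
    double h[9];
    for (int k = 0; k < 8; ++k) h[k] = A[k][8] / A[k][k];
    h[8] = 1.0;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) H[i][j] = h[3 * i + j];
    return true;
}
```

For the card, src would hold the four corners detected in the tilted photo and dst the corners of an upright rectangle with the card's aspect ratio; every other card pixel then follows from apply_h.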

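As for the fundamental matrix, it is essentially the same thing as the essential matrix. The difference is that the essential matrix is introduced so that the pose can be solved from the position of point P in the camera coordinate frame (normalized coordinates), whereas the fundamental matrix is introduced so that the pose can be solved directly from pixel positions. The camera-frame coordinates of P and its pixel position are related through the camera intrinsics. In summary, the procedure is: find matching points in the images -> compute … and … -> compute the essential matrix -> compute the pose matrix.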


Summary of the fundamental, essential, and homography matrices:

https://blog.csdn.net/kokerf/article/details/72191054

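Two views are related in two ways:

First, through epipolar geometry, a point in one image determines a line (the epipolar line) in the other image;

Second, building on that mapping, a point in one image determines a single point in the other image: the image, in the second view, of the intersection between a plane and the ray through the first camera's optical center and the image point.

The first relation is described by the fundamental matrix,

and the second by the homography matrix. The essential matrix is a special case of the fundamental matrix: the fundamental matrix expressed in normalized image coordinates.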
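The first relation can be made concrete: the epipolar line in image 2 is l2 = F p1, and the match of p1 must lie on that line whatever the depth of the 3D point. A minimal sketch in normalized coordinates (K = I, so F equals E = t^ R), assuming a hypothetical purely sideways translation t = (1, 0, 0) and R = I:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Epipolar line l2 = F * p1 in image 2, as (a, b, c) with a*u + b*v + c = 0.
Vec3 epipolar_line(const Mat3& F, const Vec3& p1) {
    Vec3 l{};
    for (int i = 0; i < 3; ++i) l[i] = F[i][0] * p1[0] + F[i][1] * p1[1] + F[i][2] * p1[2];
    return l;
}

// Perpendicular distance from point (u, v) to line l.
double point_line_distance(const Vec3& l, double u, double v) {
    const double norm = std::hypot(l[0], l[1]);
    assert(norm > 0.0);
    return std::fabs(l[0] * u + l[1] * v + l[2]) / norm;
}
```

With t = (1, 0, 0), F = t^ = [0 0 0; 0 0 -1; 0 1 0], and for x1 = (0.3, 0.2) the line is v = 0.2. A point at depth z reappears at (0.3 + 1/z, 0.2), so matches at 5 m and at 50 m both lie on the line. For this sideways baseline every epipolar line is horizontal, so parallax only shifts points horizontally, consistent with the remark in the camera-to-camera calibration section that vertical alignment is unaffected by parallax.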

Application of the homography to image stitching:

#include