对抗生成网络代码Generative Adversarial Networks (GANs),Vanilla GAN,Deeply Convolutional GANs

理论部分: CS231n 2022PPT笔记- 生成模型Generative Modeling_iwill323的博客-CSDN博客

目录

导包

加载数据

Vanilla GAN

Discriminator

Generator

GAN Loss

bce loss

Optimizing

主函数

运行GAN

Least Squares GAN

训练

Deeply Convolutional GANs

Discriminator

Generator

训练

需要注意的函数

sampler

np.prod

clamp

We can think of the generator () trying to fool the discriminator () and the discriminator trying to correctly classify real vs. fake as a minimax game:

where ∼()are the random noise samples, () are the generated images using the neural network generator , and is the output of the discriminator, specifying the probability of an input being real.

In this assignment, we will alternate the following updates:

- Update the generator () to maximize the probability of the discriminator making the incorrect choice on generated data:

maximize ∼()[log(())]

- Update the discriminator (), to maximize the probability of the discriminator making the correct choice on real and generated data:

maximize ∼data[log()]+∼()[log(1−(()))]

导包

# Setup cell.

import numpy as np

import torch

import torch.nn as nn

from torch.nn import init

import torchvision

import torchvision.transforms as transforms

import torch.optim as optim

from torch.utils.data import DataLoader

from torch.utils.data import sampler

import torchvision.datasets as datasets

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # Set default size of plots.

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

%load_ext autoreload

%autoreload 2

def show_images(images):

# images: (N, C, H, W)

images = np.reshape(images, [images.shape[0], -1]) # Images reshape to (batch_size, D).

sqrtn = int(np.ceil(np.sqrt(images.shape[0])))

sqrtimg = int(np.ceil(np.sqrt(images.shape[1])))

fig = plt.figure(figsize=(sqrtn, sqrtn))

gs = gridspec.GridSpec(sqrtn, sqrtn)

gs.update(wspace=0.05, hspace=0.05)

for i, img in enumerate(images):

ax = plt.subplot(gs[i])

plt.axis('off')

ax.set_xticklabels([])

ax.set_yticklabels([])

ax.set_aspect('equal')

plt.imshow(img.reshape([sqrtimg,sqrtimg]))

return

dtype = torch.cuda.FloatTensor if torch.cuda.is_available() else torch.FloatTensor

NOISE_DIM = 96加载数据

NUM_TRAIN = 50000 # 总的训练数据其实是60,000个

NUM_VAL = 5000

NOISE_DIM = 96

batch_size = 128

mnist_train = datasets.MNIST(

'./cs231n/datasets/MNIST_data',

train=True,

download=True,

transform=transforms.ToTensor()

)

loader_train = DataLoader(

mnist_train,

batch_size=batch_size,

sampler=ChunkSampler(NUM_TRAIN, 0)

)

mnist_val = datasets.MNIST(

'./cs231n/datasets/MNIST_data',

train=True,

download=True,

transform=transforms.ToTensor()

)

loader_val = DataLoader(

mnist_val,

batch_size=batch_size,

sampler=ChunkSampler(NUM_VAL, NUM_TRAIN)

)

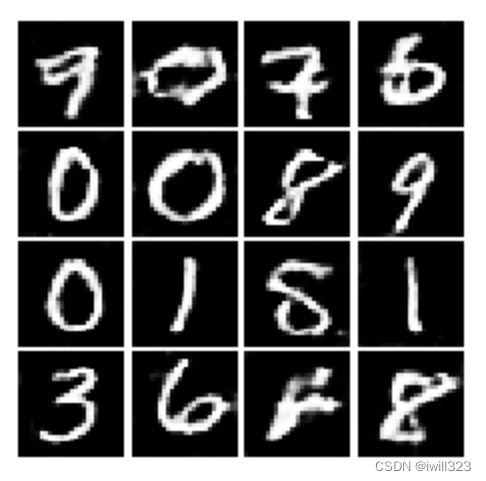

imgs = loader_train.__iter__().next()[0].view(batch_size, 784).numpy().squeeze()

print(imgs.shape) # (128, 784)

show_images(imgs) # 查看其中一个batch的图片

class ChunkSampler(sampler.Sampler):

"""Samples elements sequentially from some offset.

Arguments:

num_samples: # of desired datapoints

start: offset where we should start selecting from

"""

def __init__(self, num_samples, start=0):

self.num_samples = num_samples

self.start = start

def __iter__(self):

return iter(range(self.start, self.start + self.num_samples))

def __len__(self):

return self.num_samplesVanilla GAN

Discriminator

The output of the discriminator should have shape [batch_size, 1], and contain real numbers corresponding to the scores that each of the batch_size inputs is a real image.

def discriminator(seed=None):

'''

Fully connected layer with input size 784 and output size 256

LeakyReLU with alpha 0.01

Fully connected layer with input_size 256 and output size 256

LeakyReLU with alpha 0.01

Fully connected layer with input size 256 and output size 1

'''

if seed is not None:

torch.manual_seed(seed)

model = None

model = nn.Sequential(

Flatten(),

nn.Linear(784, 256),

nn.LeakyReLU(),

nn.Linear(256, 256),

nn.LeakyReLU(),

nn.Linear(256, 1)

)

return model 用到的辅助函数

class Flatten(nn.Module):

def forward(self, x):

N, C, H, W = x.size() # read in N, C, H, W

return x.view(N, -1) # "flatten" the C * H * W values into a single vector per image

class Unflatten(nn.Module):

"""

An Unflatten module receives an input of shape (N, C*H*W) and reshapes it

to produce an output of shape (N, C, H, W).

"""

def __init__(self, N=-1, C=128, H=7, W=7):

super(Unflatten, self).__init__()

self.N = N

self.C = C

self.H = H

self.W = W

def forward(self, x):

return x.view(self.N, self.C, self.H, self.W)Generator

def generator(noise_dim=NOISE_DIM, seed=None):

if seed is not None:

torch.manual_seed(seed)

model = nn.Sequential(

nn.Linear(noise_dim, 1024),

nn.ReLU(),

nn.Linear(1024, 1024),

nn.ReLU(),

nn.Linear(1024, 784),

nn.Tanh()

)

return modelGAN Loss

bce loss

You should use the bce_loss function to compute the binary cross entropy loss which is needed to compute the log probability of the true label given the logits output from the discriminator. Given a score ∈ℝ and a label ∈{0,1}, the binary cross entropy loss is

(,)=−∗log()−(1−)∗log(1−)

A naive implementation of this formula can be numerically unstable, so we have provided a numerically stable implementation that relies on PyTorch's nn.BCEWithLogitsLoss.

Instead of computing the expectation of log(()), log() and log(1−(())), we will be averaging over elements of the minibatch. This is taken care of in bce_loss which combines the loss by averaging.

def bce_loss(input, target):

"""

Numerically stable version of the binary cross-entropy loss function.

Inputs:

- input: PyTorch Tensor of shape (N, ) giving scores.

- target: PyTorch Tensor of shape (N,) containing 0 and 1 giving targets.

Returns:

- A PyTorch Tensor containing the mean BCE loss over the minibatch of input data.

"""

neg_abs = - input.abs()

loss = input.clamp(min=0) - input * target + (1 + neg_abs.exp()).log()

return loss.mean()

def rel_error(x,y):

return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))两项损失

def discriminator_loss(logits_real, logits_fake):

"""

Inputs:

- logits_real: PyTorch Tensor of shape (N,) giving scores for the real data.

- logits_fake: PyTorch Tensor of shape (N,) giving scores for the fake data.

Returns:

- loss: PyTorch Tensor containing (scalar) the loss for the discriminator.

"""

loss = None

# N = logits_real.size()

# real_labels = torch.ones(N).type(dtype)

real_labels = 1 # 这地方直接写1也可以

fake_labels = 1 - real_labels

loss = bce_loss(logits_real, real_labels) + bce_loss(logits_fake, fake_labels)

# 这地方不能用nn.BCELoss(),因为D最后一层是FC层,给出的数据logits_real不符合nn.BCELoss()要求

# nn.BCELoss()要求输入数据位于(0,1)

return loss

def generator_loss(logits_fake):

"""

Inputs:

- logits_fake: PyTorch Tensor of shape (N,) giving scores for the fake data.

Returns:

- loss: PyTorch Tensor containing the (scalar) loss for the generator.

"""

loss = None

N = logits_fake.size()

fake_labels = torch.ones(N).type(dtype)

loss = bce_loss(logits_fake, fake_labels)

return lossOptimizing

Make a function that returns an optim.Adam optimizer for the given model with a 1e-3 learning rate, beta1=0.5, beta2=0.999.

def get_optimizer(model):

"""

Construct and return an Adam optimizer for the model with learning rate 1e-3,

beta1=0.5, and beta2=0.999.

Input:

- model: A PyTorch model that we want to optimize.

Returns:

- An Adam optimizer for the model with the desired hyperparameters.

"""

optimizer = optim.Adam(model.parameters(), lr=1e-3, betas=(0.5,0.999))

return optimizer主函数

随机生成一张图片

def sample_noise(batch_size, dim, seed=None):

"""

Generate a PyTorch Tensor of uniform random noise.

Input:

- batch_size: Integer giving the batch size of noise to generate.

- dim: Integer giving the dimension of noise to generate.

Output:

- A PyTorch Tensor of shape (batch_size, dim) containing uniform

random noise in the range (-1, 1).

"""

if seed is not None:

torch.manual_seed(seed)

return 2 * torch.rand(batch_size, dim) - 1 # -1 ~ 1

主函数

注意在训练判别器的时候,假图片的输入是fake_images.detach(),这是因为对于判别loss, fake_images也是出了力的,同时fake_images是生成器函数的输出,为了阻断对于生成器的梯度求解,所以要使用fake_images.detach()。同理,训练生成器的时候,不能使用fake_images.detach(),否则训练不起来

fixed_seed = sample_noise(128, 96).type(dtype)

def run_a_gan(D, G, D_solver, G_solver, discriminator_loss, generator_loss,

loader_train, show_every=250, batch_size=128, noise_size=96, num_epochs=10):

"""

Inputs:

- D, G: PyTorch models for the discriminator and generator

- D_solver, G_solver: torch.optim Optimizers to use for training the

discriminator and generator.

- discriminator_loss, generator_loss: Functions to use for computing the generator and

discriminator loss, respectively.

- show_every: Show samples after every show_every iterations.

- batch_size: Batch size to use for training.

- noise_size: Dimension of the noise to use as input to the generator.

- num_epochs: Number of epochs over the training dataset to use for training.

"""

images = []

iter_count = 0

for epoch in range(num_epochs):

for x, _ in loader_train:

if len(x) != batch_size:

continue

# 训练discriminator

D_solver.zero_grad()

real_data = x.type(dtype) # dtype: torch.cuda.FloatTensor or torch.FloatTensor

logits_real = D(2* (real_data - 0.5)).type(dtype)

g_fake_seed = sample_noise(batch_size, noise_size).type(dtype) #(128, 96)

fake_images = G(g_fake_seed) # (batch_size, 784)

# 这里等号右边也可以写G(g_fake_seed).detach(),不过下面就要再生成一次fake_images

# 所以这里不写G(g_fake_seed).detach(),而是在D的实参fake_images中加.detach()

logits_fake = D(fake_images.detach().view(batch_size, 1, 28, 28))# 为什么这里view,然后D里flatten?

d_total_error = discriminator_loss(logits_real, logits_fake)

d_total_error.backward()

D_solver.step()

# 训练 generator

G_solver.zero_grad()

# fake_images = G(g_fake_seed)

gen_logits_fake = D(fake_images.view(batch_size, 1, 28, 28))

g_error = generator_loss(gen_logits_fake)

g_error.backward()

G_solver.step()

# 打印信息

if (iter_count % show_every == 0):

print('Iter: {}, D: {:.4}, G:{:.4}'.format(iter_count,d_total_error.item(),g_error.item()))

imgs_numpy = fake_images.detach().cpu().numpy()

images.append(imgs_numpy[0:16])

iter_count += 1

return images运行GAN

# Make the discriminator

D = discriminator().type(dtype)

# Make the generator

G = generator().type(dtype)

# Use the function you wrote earlier to get optimizers for the Discriminator and the Generator

D_solver = get_optimizer(D)

G_solver = get_optimizer(G)

# Run it!

images = run_a_gan(

D,

G,

D_solver,

G_solver,

discriminator_loss,

generator_loss,

loader_train

)打印结果

numIter = 0

for img in images:

print("Iter: {}".format(numIter))

show_images(img)

plt.show()

numIter += 250

print()

# This output is your answer.

print("Vanilla GAN final image:")

show_images(images[-1])

plt.show()Least Squares GAN

We'll now look at Least Squares GAN, a newer, more stable alernative to the original GAN loss function. For this part, all we have to do is change the loss function and retrain the model. We'll implement equation (9) in the paper, with the generator loss:

def ls_discriminator_loss(scores_real, scores_fake):

"""

Compute the Least-Squares GAN loss for the discriminator.

Inputs:

- scores_real: PyTorch Tensor of shape (N,) giving scores for the real data.

- scores_fake: PyTorch Tensor of shape (N,) giving scores for the fake data.

Outputs:

- loss: A PyTorch Tensor containing the loss.

"""

loss_real = (0.5 * (scores_real - 1) ** 2).mean()

loss_fake = (0.5 * scores_fake ** 2).mean()

loss = loss_real + loss_fake

return loss

def ls_generator_loss(scores_fake):

"""

Computes the Least-Squares GAN loss for the generator.

Inputs:

- scores_fake: PyTorch Tensor of shape (N,) giving scores for the fake data.

Outputs:

- loss: A PyTorch Tensor containing the loss.

"""

loss = (0.5 * (scores_fake - 1) ** 2).mean()

return loss训练

D_LS = discriminator().type(dtype)

G_LS = generator().type(dtype)

D_LS_solver = get_optimizer(D_LS)

G_LS_solver = get_optimizer(G_LS)

images = run_a_gan(

D_LS,

G_LS,

D_LS_solver,

G_LS_solver,

ls_discriminator_loss,

ls_generator_loss,

loader_train

)结果

Deeply Convolutional GANs

In this section, we will implement some of the ideas from DCGAN, where we use convolutional networks

Discriminator

def build_dc_classifier(batch_size):

model = nn.Sequential(

Unflatten(batch_size, 1, 28, 28),

nn.Conv2d(1, 32, 5),

nn.LeakyReLU(),

nn.MaxPool2d(2),

nn.Conv2d(32, 64, 5),

nn.LeakyReLU(),

nn.MaxPool2d(2),

Flatten(),

nn.Linear(4*4*64, 4*4*64),

nn.LeakyReLU(),

nn.Linear(4*4*64, 1)

)

return modelGenerator

For the generator, we will copy the architecture exactly from the InfoGAN paper. See Appendix C.1 MNIST. See the documentation for nn.ConvTranspose2d. We are always "training" in GAN mode.

def build_dc_generator(noise_dim=NOISE_DIM):

"""

Fully connected with output size 1024

ReLU

BatchNorm

Fully connected with output size 7 x 7 x 128

ReLU

BatchNorm

Use Unflatten() to reshape into Image Tensor of shape 7, 7, 128

ConvTranspose2d: 64 filters of 4x4, stride 2, 'same' padding (use padding=1)

ReLU

BatchNorm

ConvTranspose2d: 1 filter of 4x4, stride 2, 'same' padding (use padding=1)

TanH

Should have a 28x28x1 image, reshape back into 784 vector (using Flatten())

"""

model = nn.Sequential(

nn.Linear(noise_dim, 1024),

nn.ReLU(),

nn.BatchNorm1d(1024),

nn.Linear(1024, 7*7*128),

nn.ReLU(),

nn.BatchNorm1d(7*7*128),

Unflatten(-1, 128, 7, 7),

nn.ConvTranspose2d(128, 64, 4, stride=2, padding=1),

nn.ReLU(),

nn.BatchNorm2d(64),

nn.ConvTranspose2d(64, 1, 4, stride=2, padding=1),

nn.Tanh(),

Flatten()

)

return model训练

def initialize_weights(m):

if isinstance(m, nn.Linear) or isinstance(m, nn.ConvTranspose2d):

nn.init.xavier_uniform_(m.weight.data)对D和G自定义初始化

D_DC = build_dc_classifier(batch_size).type(dtype)

# pytorch中的model.apply(fn)会递归地将函数fn应用到父模块的每个子模块submodule,也包括model这个父模块自身

D_DC.apply(initialize_weights)

G_DC = build_dc_generator().type(dtype)

G_DC.apply(initialize_weights)

D_DC_solver = get_optimizer(D_DC)

G_DC_solver = get_optimizer(G_DC)

images = run_a_gan(

D_DC,

G_DC,

D_DC_solver,

G_DC_solver,

discriminator_loss,

generator_loss,

loader_train,

num_epochs=5

)结果:

需要注意的函数

sampler

>>a = list(SequentialSampler(range(10)))

>>print(a)

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

a = list(BatchSampler(SequentialSampler(range(10)), batch_size=3, drop_last=True))

print(a)

[[0, 1, 2], [3, 4, 5], [6, 7, 8]]

>>a = list(BatchSampler(SubsetRandomSampler(range(10)), batch_size=3, drop_last=True))

>>print(a)

[[4, 9, 3], [7, 1, 6], [8, 2, 5]]

>>a = list(SubsetRandomSampler(range(10)))

>>print(a)

[9, 6, 4, 0, 7, 1, 3, 5, 8, 2]

np.prod

>>a = np.array([1,2,3])

>>print(np.prod(a))

6

clamp

>>a = torch.tensor([-1,-2,3,4])

>>b = a.clamp(min=0)

>>print(b)

tensor([0, 0, 3, 4])