Simple Webcam Hand Gesture Recognition with OpenCV + Python: Controlling Brightness with a Trackbar

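Table of Contents

- Preface
- I. Overall Framework
- II. Implementation Steps
  - 1. Import the libraries
  - 2. Step 1: Open the camera
  - 3. Step 2: Set up the callback function
  - 4. Step 3: Skin detection
  - 5. Step 4: Gaussian filtering
  - 6. Step 5: Edge contour detection
  - 7. Step 6: Find the convexity defects of the hand
  - 8. Step 7: Determine the gesture from the number of defects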

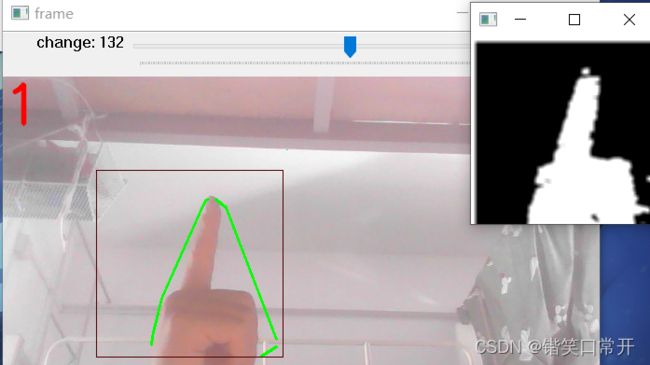

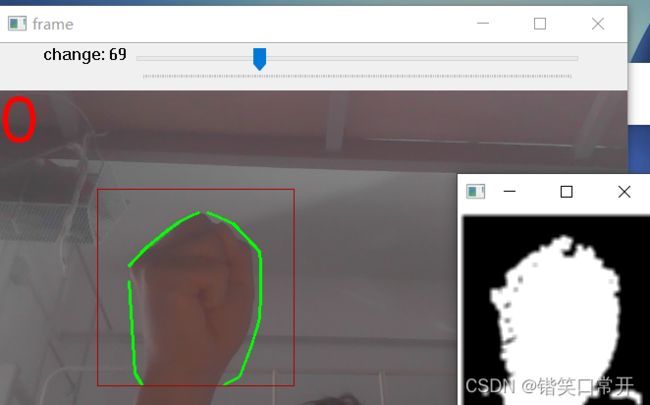

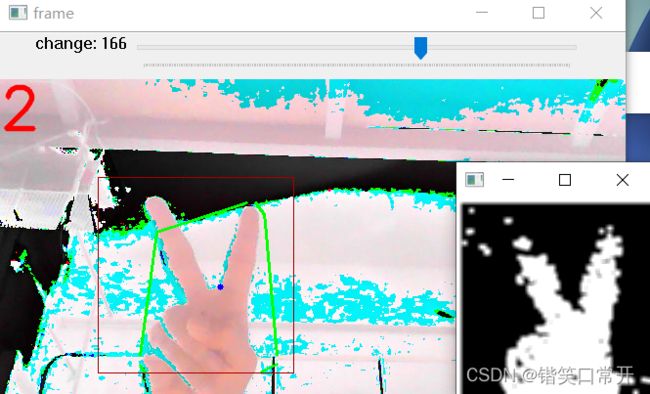

- Results
- Full Code
- Summary

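Preface

As artificial intelligence keeps developing, computer vision has become increasingly important, and many people have started learning it. This article builds on OpenCV to implement a basic webcam hand-gesture recognizer whose image brightness can be adjusted with a trackbar. It does not use deep learning, so its accuracy is limited.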

I. Overall Framework

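- Step 1: open the camera and process each frame.
- Step 2: set up the callback function and use the trackbar to adjust the brightness. Tuning the brightness this way can improve the recognition rate.
- Step 3: detect skin by filtering on H, S and V ranges in the HSV color space. The code keeps pixels with H in [0, 20], S in [28, 255] and V in [70, 255] (OpenCV's 8-bit HSV scale).
- Step 4: apply Gaussian filtering.
- Step 5: detect the edge contours.
- Step 6: find the convexity defects of the hand, which are the concave points between the fingers.
- Step 7: determine the current gesture from the number of defects. For example, 0 defects means a fist ("rock"), and 4 defects means an open palm ("paper").

This webcam-based gesture recognition experiment deepened my understanding of what I had already learned. Gesture recognition is now mostly done with deep learning models such as CNNs. Even so, implementing it with only traditional OpenCV methods was new to me, and it made me even more interested in OpenCV.

II. Implementation Steps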

1. Import the libraries

```python
import math

import cv2
import numpy as np
```

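2. Step 1: Open the camera

```python
cap = cv2.VideoCapture(0)  # open the default camera (index 0)

while cap.isOpened():
    ret, frame = cap.read()  # read one frame from the camera
    if not ret:  # stop when no frame can be read
        break
    frame = cv2.flip(frame, 1)  # mirror left-right so the image displays naturally
    # ... the following steps process the frame here, once per loop iteration ...
```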
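Flip code 1 in `cv2.flip` mirrors the image around the vertical axis. The NumPy-only sketch below shows that this amounts to reversing the column axis, so it can be checked without a camera. The function name `mirror_horizontally` is hypothetical and not part of the article's code.

```python
import numpy as np


def mirror_horizontally(frame: np.ndarray) -> np.ndarray:
    """Reverse the column (x) axis: the left-right mirror that
    cv2.flip(frame, 1) produces. Rows and channels are left untouched."""
    return frame[:, ::-1].copy()


# A tiny 2x3 "image" with 3 channels, so the column order is easy to follow
frame = np.arange(2 * 3 * 3, dtype=np.uint8).reshape(2, 3, 3)
mirrored = mirror_horizontally(frame)
print(frame[0, :, 0], "->", mirrored[0, :, 0])
```

Mirroring twice returns the original frame. That is why the flip only changes how the image is displayed and does not alter the pixel data used for detection.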

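3. Step 2: Set up the callback function

The trackbar "change" is attached to the window "frame". It ranges from 0 to 255 and starts at 100.

```python
def callback(value):  # createTrackbar passes the current trackbar position, so one parameter is required
    pass


cv2.namedWindow("frame")  # the trackbar is attached to this window, so create it first
cv2.createTrackbar("change", "frame", 100, 255, callback)
```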
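In the full code, the trackbar value is read each frame with `cv2.getTrackbarPos` and used as a brightness percentage (100 means unchanged). The sketch below assumes that interpretation. It scales the pixels in floating point and clips to [0, 255], so bright pixels saturate instead of wrapping around when converted back to `uint8`. The function name `adjust_brightness` is hypothetical. For uint8 images, `cv2.convertScaleAbs(image, alpha=value / 100)` should give the same saturating result, up to rounding details.

```python
import numpy as np


def adjust_brightness(image: np.ndarray, value: int) -> np.ndarray:
    """Scale pixel intensities by value / 100 (100 = unchanged).

    Computing in float and clipping to [0, 255] before casting back to uint8
    makes bright pixels saturate at 255 instead of wrapping around.
    """
    scaled = image.astype(np.float32) * (value / 100.0)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


pixel = np.array([[[50, 100, 200]]], dtype=np.uint8)  # one BGR pixel
print(adjust_brightness(pixel, 100))  # unchanged
print(adjust_brightness(pixel, 200))  # doubled, with 200 saturating at 255
print(adjust_brightness(pixel, 50))   # halved
```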

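4. Step 3: Skin detection

```python
# HSV-based skin detection: keep the pixels whose H, S and V values fall in the skin range.
# roi is the fixed region used as gesture input (image_dst[100:300, 100:300] in the full code).
hsv = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV)
lower_skin = np.array([0, 28, 70], dtype=np.uint8)     # lower bounds for H, S, V
upper_skin = np.array([20, 255, 255], dtype=np.uint8)  # upper bounds for H, S, V
```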
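For 8-bit images, OpenCV stores hue as degrees divided by 2, in the range 0-179. The bound H ≤ 20 therefore covers roughly 0-40 degrees, the red-to-orange hues typical of skin. The sketch below is a NumPy stand-in for what `cv2.inRange` does with these bounds. A pixel maps to 255 only when every channel lies within its inclusive range. The helper name `in_range` and the three sample pixels are hypothetical.

```python
import numpy as np


def in_range(hsv: np.ndarray, lower: np.ndarray, upper: np.ndarray) -> np.ndarray:
    """Return a uint8 mask: 255 where every channel lies within [lower, upper]
    (inclusive on both ends), 0 elsewhere, like cv2.inRange."""
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


lower_skin = np.array([0, 28, 70], dtype=np.uint8)
upper_skin = np.array([20, 255, 255], dtype=np.uint8)

# Three HSV pixels on OpenCV's 8-bit scale
hsv = np.array([[[10, 120, 180],    # orange-ish and well saturated: skin-like
                 [60, 120, 180],    # H = 60 (about 120 degrees, green): rejected
                 [10, 10, 180]]],   # saturation below 28: rejected
               dtype=np.uint8)
print(in_range(hsv, lower_skin, upper_skin))  # first pixel kept, the other two rejected
```

Because the test is purely on hue, any yellowish or reddish background that meets the S and V bounds passes as well. This is the weakness described in the Results section.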

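5. Step 4: Gaussian filtering

```python
# Build the binary skin mask, dilate it to close small holes,
# then apply Gaussian filtering to reduce the effect of noise
kernel = np.ones((2, 2), np.uint8)  # 2x2 structuring element, as in the full code
mask = cv2.inRange(hsv, lower_skin, upper_skin)
mask = cv2.dilate(mask, kernel, iterations=4)
mask = cv2.GaussianBlur(mask, (5, 5), 100)
```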
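The blur uses a 5x5 kernel with sigma = 100. The sketch below computes 1-D Gaussian weights with the formula documented for `cv2.getGaussianKernel`, G_i ∝ exp(-(i - (ksize - 1)/2)² / (2σ²)), normalized to sum to 1. It suggests that at this sigma the weights are nearly equal, so the filter behaves almost like a 5x5 mean (box) filter. The function name `gaussian_weights` is hypothetical. `cv2.GaussianBlur` applies such a 1-D kernel along both axes, because the 2-D Gaussian is separable.

```python
import math


def gaussian_weights(ksize, sigma):
    """1-D Gaussian kernel weights normalized to sum to 1
    (same formula as documented for cv2.getGaussianKernel)."""
    center = (ksize - 1) / 2
    raw = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(ksize)]
    total = sum(raw)
    return [w / total for w in raw]


for sigma in (1.0, 100.0):
    weights = gaussian_weights(5, sigma)
    print(sigma, [round(w, 4) for w in weights])
```

With sigma = 1 the center weight is about 0.40. With sigma = 100 every weight is about 0.2, so a smaller sigma would be needed for a true center-weighted smoothing.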

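6. Step 5: Edge contour detection

```python
# Find the contours to locate the hand; OpenCV provides findContours() for this.
# OpenCV 4.x returns (contours, hierarchy) while 3.x returns three values, hence [-2].
contours = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]
cnt = max(contours, key=cv2.contourArea)  # largest contour = the hand (needs at least one contour)
epsilon = 0.0005 * cv2.arcLength(cnt, True)  # approximation tolerance: 0.05% of the perimeter
approx = cv2.approxPolyDP(cnt, epsilon, True)  # simplified polygon of the hand contour
hull = cv2.convexHull(cnt)
areahull = cv2.contourArea(hull)
areacnt = cv2.contourArea(cnt)
# percentage of the hull area not covered by the hand
arearatio = ((areahull - areacnt) / areacnt) * 100
```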
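`arearatio` measures how much of the convex hull the hand leaves empty. A fist nearly fills its hull, while extended fingers leave gaps. The pure-Python sketch below illustrates the computation on a toy polygon. The shoelace formula stands in for `cv2.contourArea`, and Andrew's monotone chain stands in for `cv2.convexHull`. These are substitutes for illustration, not OpenCV's internals, and the function names are hypothetical.

```python
def polygon_area(points):
    """Absolute polygon area via the shoelace formula."""
    n = len(points)
    s = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def area_ratio(contour):
    """Percentage of the hull area not covered by the contour."""
    area_cnt = polygon_area(contour)
    area_hull = polygon_area(convex_hull(contour))
    return (area_hull - area_cnt) / area_cnt * 100


square = [(0, 0), (10, 0), (10, 10), (0, 10)]
notched = [(0, 0), (10, 0), (10, 10), (6, 10), (6, 4), (4, 4), (4, 10), (0, 10)]
print(area_ratio(square))   # 0.0: a convex shape fills its hull
print(area_ratio(notched))  # about 13.64: the 2x6 notch is empty hull area
```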

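7. Step 6: Find the convexity defects of the hand

```python
# Compute the convexity defects (the concave points between fingers)
hull = cv2.convexHull(approx, returnPoints=False)  # hull as indices into approx, as convexityDefects requires
defects = cv2.convexityDefects(approx, hull)  # N x 1 x 4 array, or None when there are no defects
```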
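Each row returned by `cv2.convexityDefects` has the form (start_index, end_index, farthest_pt_index, fixpt_depth). The depth is a fixed-point value with 8 fractional bits, so `fixpt_depth / 256.0` gives the distance in pixels. To make the meaning of each field concrete, here is a simplified pure-Python sketch that produces tuples of the same shape. For each pair of consecutive hull vertices, it finds the contour point farthest from the hull edge between them. This is a simplified illustration, not OpenCV's implementation. It assumes the hull indices are listed in ascending contour order, and the function names are hypothetical.

```python
import math


def distance_to_line(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs((bx - ax) * (py - ay) - (by - ay) * (px - ax)) / length


def simple_convexity_defects(contour, hull_idx):
    """Return (start_index, end_index, farthest_index, depth * 256) for every
    hull edge that has contour points strictly inside it."""
    defects = []
    n, k = len(contour), len(hull_idx)
    for t in range(k):
        i, j = hull_idx[t], hull_idx[(t + 1) % k]
        best, best_depth = None, 0.0
        m = (i + 1) % n
        while m != j:  # walk the contour from hull vertex i to hull vertex j
            depth = distance_to_line(contour[m], contour[i], contour[j])
            if depth > best_depth:
                best, best_depth = m, depth
            m = (m + 1) % n
        if best is not None:
            defects.append((i, j, best, int(round(best_depth * 256))))
    return defects


notched = [(0, 0), (10, 0), (10, 10), (6, 10), (6, 4), (4, 4), (4, 10), (0, 10)]
hull_idx = [0, 1, 2, 7]  # indices of the four corners, in contour order
for s, e, f, d in simple_convexity_defects(notched, hull_idx):
    print(s, e, f, d, "-> depth in pixels:", d / 256.0)  # 2 7 4 1536 -> depth in pixels: 6.0
```

Read against this encoding, the article's test `d > 20` corresponds to a depth above about 0.08 pixels, which is a very weak filter.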

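8. Step 7: Determine the gesture from the number of defects

```python
# Count the defects that look like gaps between fingers; the count starts at 0
l = 0
if defects is not None:
    for i in range(defects.shape[0]):
        s, e, f, d = defects[i, 0]
        # convert to plain Python ints, which cv2 drawing functions accept
        start = tuple(int(v) for v in approx[s][0])
        end = tuple(int(v) for v in approx[e][0])
        far = tuple(int(v) for v in approx[f][0])

        # side lengths of the triangle formed by start, end and the far point
        a = math.sqrt((end[0] - start[0])**2 + (end[1] - start[1])**2)
        b = math.sqrt((far[0] - start[0])**2 + (far[1] - start[1])**2)
        c = math.sqrt((end[0] - far[0])**2 + (end[1] - far[1])**2)
        if b == 0 or c == 0:  # degenerate triangle, no angle to measure
            continue

        # angle between the fingers at the far point (law of cosines), in degrees;
        # the cosine is clamped to [-1, 1] to guard against rounding errors
        cos_angle = (b**2 + c**2 - a**2) / (2 * b * c)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

        # d is OpenCV's fixed-point depth (pixels * 256)
        if angle <= 90 and d > 20:
            l += 1
            cv2.circle(roi, far, 3, [255, 0, 0], -1)  # mark the gap between fingers

        cv2.line(roi, start, end, [0, 255, 0], 2)  # draw the hull edge

l += 1  # n gaps between fingers correspond to n + 1 extended fingers
font = cv2.FONT_HERSHEY_SIMPLEX  # font used later by cv2.putText
```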
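The counting rule above can be checked without a camera by pulling it into pure functions over point tuples. This is a minimal sketch that reuses the thresholds from the article's code: angle ≤ 90 degrees and raw depth > 20. The function names and the two sample defects are hypothetical.

```python
import math


def gap_angle(start, end, far):
    """Angle in degrees at `far` in the triangle start-far-end (law of cosines)."""
    a = math.dist(start, end)
    b = math.dist(start, far)
    c = math.dist(end, far)
    if b == 0 or c == 0:
        return 180.0  # degenerate triangle: treat as "not a finger gap"
    cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def count_fingers(defects, max_angle=90.0, min_depth=20):
    """defects: iterable of (start, end, far, fixpt_depth) with (x, y) points.
    Each sharp, deep-enough defect is one gap; n gaps mean n + 1 fingers."""
    gaps = sum(
        1
        for start, end, far, depth in defects
        if gap_angle(start, end, far) <= max_angle and depth > min_depth
    )
    return gaps + 1


# Two hypothetical defects between fingertips at y = 10
sharp = ((0, 10), (10, 10), (5, 0), 2560)   # deep V-shaped valley, 10 px deep
shallow = ((0, 10), (10, 10), (5, 9), 256)  # nearly flat valley, 1 px deep
print(round(gap_angle(*sharp[:3]), 2))  # 53.13
print(count_fingers([sharp, shallow]))  # 2
```

The shallow defect forms an angle of roughly 157 degrees, so it is rejected by the angle test even though its raw depth of 256 passes `d > 20`.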

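Results

Skin detection relies on hue to extract features, so yellowish tones in the scene interfere with it. As a result, accuracy is not high and only basic functionality is achieved; higher accuracy would require deep learning algorithms.

Full Code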

```python
# -*- coding: utf-8 -*-
"""
Created on Thu Apr 7 18:42:02 2022

@author: He Zekai
"""

import math

import cv2
import numpy as np


def callback(value):
    # createTrackbar passes the current trackbar position to the callback,
    # so the function must accept one argument even though it is unused here
    pass


cap = cv2.VideoCapture(0)
cv2.namedWindow('frame', cv2.WINDOW_NORMAL)  # WINDOW_NORMAL lets resizeWindow take effect
cv2.resizeWindow('frame', 600, 800)
cv2.createTrackbar("change", "frame", 100, 255, callback)

kernel = np.ones((2, 2), np.uint8)
lower_skin = np.array([0, 28, 70], dtype=np.uint8)
upper_skin = np.array([20, 255, 255], dtype=np.uint8)
font = cv2.FONT_HERSHEY_SIMPLEX

while cap.isOpened():
    ret, image = cap.read()  # read one frame from the camera
    if not ret:
        break
    image = cv2.flip(image, 1)  # mirror the frame so it displays naturally

    # The trackbar controls brightness: scale by value / 100, saturating at 255
    # (np.uint8(image / 100 * value) would wrap around instead of clipping)
    value = cv2.getTrackbarPos('change', 'frame')
    image_dst = cv2.convertScaleAbs(image, alpha=value / 100.0)

    roi = image_dst[100:300, 100:300]  # fixed region used as gesture input (a view into image_dst)

    # HSV-based skin detection, dilation, then Gaussian filtering
    hsv = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV)
    mask = cv2.inRange(hsv, lower_skin, upper_skin)
    mask = cv2.dilate(mask, kernel, iterations=4)
    mask = cv2.GaussianBlur(mask, (5, 5), 100)

    # Draw the red recognition box only after the mask is built: pure red falls
    # inside the skin hue range and would otherwise leak into the mask
    cv2.rectangle(image_dst, (100, 100), (300, 300), (0, 0, 255), 2)

    # Find contours ([-2] works with both the OpenCV 3.x and 4.x return values)
    contours = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]

    l = 0  # number of defects that look like gaps between fingers
    areacnt, arearatio = 0.0, 0.0
    if contours:
        cnt = max(contours, key=cv2.contourArea)  # largest contour is taken as the hand
        epsilon = 0.0005 * cv2.arcLength(cnt, True)
        approx = cv2.approxPolyDP(cnt, epsilon, True)

        hull = cv2.convexHull(cnt)
        areahull = cv2.contourArea(hull)
        areacnt = cv2.contourArea(cnt)
        if areacnt > 0:
            # percentage of the hull area not covered by the hand
            arearatio = ((areahull - areacnt) / areacnt) * 100

        # Convexity defects of the simplified contour
        hull = cv2.convexHull(approx, returnPoints=False)
        try:
            defects = cv2.convexityDefects(approx, hull)
        except cv2.error:
            defects = None  # e.g. too few points or non-monotonous hull indices

        if defects is not None:
            for i in range(defects.shape[0]):
                s, e, f, d = defects[i, 0]
                start = tuple(int(v) for v in approx[s][0])
                end = tuple(int(v) for v in approx[e][0])
                far = tuple(int(v) for v in approx[f][0])

                # side lengths of the triangle start-far-end
                a = math.hypot(end[0] - start[0], end[1] - start[1])
                b = math.hypot(far[0] - start[0], far[1] - start[1])
                c = math.hypot(end[0] - far[0], end[1] - far[1])
                if b == 0 or c == 0:
                    continue

                # angle between the fingers at the far point (law of cosines)
                cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
                angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

                # d is OpenCV's fixed-point depth (pixels * 256)
                if angle <= 90 and d > 20:
                    l += 1
                    cv2.circle(roi, far, 3, [255, 0, 0], -1)
                cv2.line(roi, start, end, [0, 255, 0], 2)  # draw the hull edge

    l += 1  # n gaps between fingers -> n + 1 fingers

    # Decide which gesture is shown and display it
    if l == 1:
        if areacnt < 2000:
            text = "Please put hand in the window"
        elif arearatio < 12:
            text = '0'
        else:
            text = '1'
    elif 2 <= l <= 5:
        text = str(l)
    else:
        text = ''
    if text:
        cv2.putText(image_dst, text, (0, 50), font, 2, (0, 0, 255), 3, cv2.LINE_AA)

    cv2.imshow('mask', mask)
    cv2.imshow('frame', image_dst)
    if cv2.waitKey(25) & 0xFF == ord('q'):  # press q to quit
        break

cap.release()
cv2.destroyAllWindows()
```
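To try the final decision rules without a camera, they can be pulled out of the loop into a pure function. This is a minimal sketch that reuses the full code's thresholds: 2000 px² for the minimum hand area and 12% for the area ratio. The function name `gesture_label` is hypothetical. In the original code, every area ratio of 12% or more with a single "finger" is shown as "1".

```python
def gesture_label(l, areacnt, arearatio):
    """Map the finger count l (defects + 1), the contour area and the
    hull area ratio (percent) to the text the program displays."""
    if l == 1:
        if areacnt < 2000:
            return "Please put hand in the window"  # contour too small to be a hand
        return "0" if arearatio < 12 else "1"  # a fist leaves little empty hull area
    if 2 <= l <= 5:
        return str(l)
    return ""  # counts above 5 are not displayed


print(gesture_label(1, 500, 0))     # Please put hand in the window
print(gesture_label(1, 15000, 8))   # 0
print(gesture_label(1, 15000, 20))  # 1
print(gesture_label(5, 15000, 40))  # 5
```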

Summary