[Neural Networks] (13) ShuffleNetV2 Code Reproduction and Network Analysis, with Complete TensorFlow Code

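Hello everyone. Today I'll share how to reproduce the lightweight neural network ShuffleNetV2 with TensorFlow.

To run neural network models in real time on mobile devices (phones) and edge devices (security cameras, autonomous driving), whose computing power is usually limited, we need models with fewer parameters, less computation, less memory access, and lower energy consumption. Lightweight networks such as MobileNet and ShuffleNet suit these resource-constrained devices well. They trade a small loss in accuracy for much faster inference.

In earlier posts I covered MobileNetV1 and MobileNetV2 in detail. ShuffleNet, the topic of this post, also uses MobileNet's depthwise separable convolution, so I recommend reading those two articles first: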

MobileNetV1:https://blog.csdn.net/dgvv4/article/details/123415708

MobileNetV2:https://blog.csdn.net/dgvv4/article/details/123417739

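The left figure plots accuracy against parameter count for various neural network models. The closer a model sits to the upper-left corner, the fewer parameters it has and the higher its accuracy. The right figure shows the accuracy contributed per unit of parameters. ShuffleNet has very few parameters and needs little computation, yet it uses them very efficiently and runs fast.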

1. Innovations in ShuffleNetV1

Before discussing ShuffleNetV2, we need a basic understanding of ShuffleNetV1. Here I introduce its two core ideas: (1) grouped 1x1 convolution; (2) channel shuffle.

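1.1 Group Convolution

(1) In a standard convolution, each kernel has as many channels as the input.

For example, suppose the input has shape 5x5x6 and each kernel has shape 3x3x6. Three kernels (stride 1, no padding) then produce an output feature map of shape 3x3x3. Number of parameters (weights only, biases ignored) = 3x3x6x3 = 162.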

![]()

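(2) In a group convolution, each kernel processes only part of the channels. In the figure below, the red kernel processes only the two red input channels, and the green kernel processes only the two green channels in the middle. The third kernel processes only the two yellow channels. Each kernel therefore has two channels and produces one feature map.

For example, take an input of shape 5x5x6 and three group kernels of shape 3x3x2. The output has shape 3x3x3. Number of parameters = 3x3x(6/3)x(3/3)x3 = 3x3x2x1x3 = 54. With three groups, the parameter count drops to one third of the standard convolution's.

Group convolution therefore reduces both the number of parameters and the amount of computation.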

![]()

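(3) The depthwise convolution inside a depthwise separable convolution is a special case of group convolution. Keep increasing the number of groups until it equals the number of input channels. Each kernel then processes the features of a single channel and produces one feature map. The input therefore yields as many feature maps as it has channels, and these maps are stacked together.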

![]()

For more on depthwise separable convolution, see my previous article on MobileNetV1.
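The parameter arithmetic in 1.1 can be checked with a small sketch. It counts only the kernel weights of a k x k convolution (biases are ignored). When the convolution is split into groups, each kernel sees c_in / groups input channels. The helper conv_weight_count is a hypothetical name used for illustration, not a TensorFlow API. Setting groups equal to the number of input channels gives the depthwise case from (3).

```python
def conv_weight_count(k, c_in, c_out, groups=1):
    """Kernel weights (no bias) of a k x k convolution split into `groups` groups."""
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must both be divisible by groups")
    # Each of the c_out kernels has shape k x k x (c_in / groups)
    return k * k * (c_in // groups) * c_out


standard = conv_weight_count(3, 6, 3)              # three 3x3x6 kernels
grouped = conv_weight_count(3, 6, 3, groups=3)     # 3 groups, each kernel sees 2 channels
depthwise = conv_weight_count(3, 6, 6, groups=6)   # one 3x3x1 kernel per input channel

print(standard, grouped, depthwise)  # 162 54 54
```

Splitting into g groups divides the weight count by g. That is the one-third reduction described for three groups.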

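1.2 Channel Shuffle

Group convolution has a problem: the groups are independent of one another, so no features are fused across groups. Channel shuffle lets information flow between groups.

In figure (a) below, the kernels are divided into three groups, so the generated feature maps also form three groups. Information is exchanged only within each group, never between groups.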
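At a single pixel, a grouped 1x1 convolution (the kind ShuffleNetV1 uses) reduces to multiplying the channel vector by a block-diagonal weight matrix, which makes this isolation concrete. The plain-Python sketch below uses a hypothetical helper, grouped_pointwise, with arbitrary weights. It changes one input channel of group 0 and checks which output channels are affected.

```python
def grouped_pointwise(pixel, weights, groups):
    """Grouped 1x1 convolution applied to a single pixel.

    pixel:   list of input channel values
    weights: weights[g][o][i] maps input i of group g to output o of group g
    """
    size = len(pixel) // groups  # input channels per group
    out = []
    for g in range(groups):
        chunk = pixel[g * size:(g + 1) * size]  # this group sees only its own inputs
        for row in weights[g]:
            out.append(sum(w * v for w, v in zip(row, chunk)))
    return out


groups, c_in, c_out = 3, 6, 6
per_in, per_out = c_in // groups, c_out // groups
# Arbitrary nonzero weights, indexed weights[g][o][i]
weights = [[[1.0 + g + o + i for i in range(per_in)] for o in range(per_out)]
           for g in range(groups)]

pixel = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
before = grouped_pointwise(pixel, weights, groups)
pixel[0] += 10.0  # perturb an input channel that belongs to group 0
after = grouped_pointwise(pixel, weights, groups)

changed = [i for i in range(c_out) if before[i] != after[i]]
print(changed)  # [0, 1]: only group 0's outputs react
```

Stacking more grouped layers does not change this, because each group's outputs feed only the same group in the next layer. Channel shuffle exists to break this isolation.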

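In figures (b) and (c), the first portion of every group is gathered to form the first new group. The second portion of every group is gathered to form the second new group, and so on. Information now flows across groups.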

![]()

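For example, in the figure below, group convolution produces three groups of feature maps: group 1 holds channels 1 to 4, group 2 holds channels 5 to 8, and group 3 holds channels 9 to 12. First, reshape the channels into a matrix with three rows and N columns (here N = 4). Next, transpose it into N rows and three columns. Finally, flatten it from a 2-D tensor back into a 1-D tensor. The channels of the groups are now interleaved (1, 5, 9, 2, 6, 10, ...), which mixes information across the groups.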
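The reshape, transpose and flatten steps can be replayed on a plain list of channel indices. The sketch below uses a hypothetical helper, channel_shuffle_1d. It works on Python lists rather than tensors, but it follows the same recipe that the TensorFlow channel_shuffle function in section 3 applies to the last axis.

```python
def channel_shuffle_1d(channels, groups):
    """Reshape to (groups, n), transpose to (n, groups), then flatten."""
    if len(channels) % groups:
        raise ValueError("number of channels must be divisible by groups")
    n = len(channels) // groups
    matrix = [channels[g * n:(g + 1) * n] for g in range(groups)]  # groups rows, n columns
    transposed = [list(col) for col in zip(*matrix)]                 # n rows, groups columns
    return [c for row in transposed for c in row]                    # flatten row by row


shuffled = channel_shuffle_1d(list(range(1, 13)), groups=3)
print(shuffled)  # [1, 5, 9, 2, 6, 10, 3, 7, 11, 4, 8, 12]
```

Shuffling again with groups = n (here 4) transposes the matrix back and restores the original order. The operation only permutes channels and adds no parameters.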

![]()

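2. The ShuffleNetV2 Model

2.1 Four design guidelines for lightweight networks

(1) For a fixed amount of computation, memory access cost (MAC) is lowest when the numbers of input and output channels are equal

The number of output channels equals the number of kernels. For example, if the input has 256 channels, it is best to use 256 kernels as well.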
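The ShuffleNetV2 paper supports guideline (1) with a simple cost model for a 1x1 convolution on an h x w feature map with c1 input channels and c2 output channels. FLOPs B = h*w*c1*c2. MAC = h*w*(c1 + c2) + c1*c2, which counts reading the input, writing the output and reading the weights. The sketch below holds B fixed and compares several (c1, c2) splits. The feature-map size and channel budget are arbitrary choices for illustration.

```python
def mac_1x1(h, w, c1, c2):
    """Memory access cost of a 1x1 conv: input map + output map + weights."""
    return h * w * (c1 + c2) + c1 * c2


h = w = 56
budget = 256 * 256  # keep c1 * c2, and therefore FLOPs = h*w*c1*c2, constant
splits = [(64, 1024), (128, 512), (256, 256), (512, 128), (1024, 64)]

for c1, c2 in splits:
    assert c1 * c2 == budget
    print(c1, c2, mac_1x1(h, w, c1, c2))

best = min(splits, key=lambda s: mac_1x1(h, w, *s))
print("lowest MAC:", best)  # lowest MAC: (256, 256)
```

With the product c1*c2 fixed, the sum c1 + c2 is smallest when the two are equal. That is why balanced channel counts minimize MAC.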

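(2) Group convolution with too many groups increases MAC

The grouped 1x1 convolution used in ShuffleNetV1 reduces parameters and computation. This lets the network afford more channels and thus greater representational capacity, but it also increases memory access cost.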
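The paper extends the same cost model to a grouped 1x1 convolution with g groups: B = h*w*c1*c2/g and MAC = h*w*(c1 + c2) + c1*c2/g. The sketch below holds the FLOPs and the input channels c1 fixed. It widens the output to c2 = 128*g so the computation stays constant, then reports MAC for several group counts. The sizes are arbitrary illustration values.

```python
def flops_group_1x1(h, w, c1, c2, g):
    """Multiply-adds of a grouped 1x1 conv."""
    return h * w * c1 * c2 // g


def mac_group_1x1(h, w, c1, c2, g):
    """Memory access cost of a grouped 1x1 conv: input + output + weights (c1*c2/g)."""
    return h * w * (c1 + c2) + c1 * c2 // g


h = w = 56
c1 = 128
base_flops = flops_group_1x1(h, w, c1, 128, 1)

macs = []
for g in (1, 2, 4, 8):
    c2 = 128 * g  # more groups allow more output channels at the same FLOPs
    assert flops_group_1x1(h, w, c1, c2, g) == base_flops
    macs.append(mac_group_1x1(h, w, c1, c2, g))
    print(g, c2, macs[-1])

print(macs == sorted(macs))  # True: MAC grows with the number of groups
```

The extra output channels have to be written to memory, so MAC rises with g even though the FLOPs stay the same.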

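(3) Network fragmentation is unfriendly to parallel acceleration (on GPUs)

Fragmentation refers to multi-branch, multi-path structures, such as the many branches in the Inception family of networks.

(4) The memory and time cost of element-wise operations (residual addition, ReLU activation, etc.) cannot be ignored

For example, avoid residual addition: replace the original layers.Add() with layers.Concatenate().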
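Guideline (4) comes down to the ratio of memory traffic to arithmetic. The sketch below uses the same simplified counting as the MAC model for guideline (1), with one access per element read or written. It compares an element-wise addition with a 1x1 convolution on feature maps the size of those in ShuffleNetV2's first stage. The shapes are illustrative choices, and real kernels may fuse or cache operations differently.

```python
def elementwise_add_cost(h, w, c):
    """FLOPs and memory accesses of adding two h x w x c tensors."""
    n = h * w * c
    flops = n      # one addition per element
    mac = 3 * n    # read two inputs, write one output
    return flops, mac


def conv1x1_cost(h, w, c1, c2):
    """FLOPs and memory accesses of a 1x1 convolution from c1 to c2 channels."""
    flops = h * w * c1 * c2              # multiply-adds
    mac = h * w * (c1 + c2) + c1 * c2    # input + output + weights
    return flops, mac


add_flops, add_mac = elementwise_add_cost(28, 28, 116)
conv_flops, conv_mac = conv1x1_cost(28, 28, 58, 58)
print(f"add:  {add_flops} FLOPs, {add_mac} accesses, ratio {add_mac / add_flops:.3f}")
print(f"conv: {conv_flops} FLOPs, {conv_mac} accesses, ratio {conv_mac / conv_flops:.3f}")
```

The addition performs far fewer FLOPs but needs several memory accesses per FLOP, so FLOPs alone underestimate its runtime.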

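2.2 Backbone structure

Following these four guidelines, the authors designed the lightweight ShuffleNetV2 network, shown in the figure below.

The Channel Split module divides the input channels evenly into two halves. One half serves as the shortcut (identity) connection, and the other half is used for feature extraction.

The Channel Shuffle module reorders the channels of the concatenated feature maps so that features are fused across the groups.

In the basic unit, the feature-map size and the number of channels stay the same. In the downsampling unit, the height and width are halved and the number of channels is doubled.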

![]()

3. ShuffleNetV2 代码复现

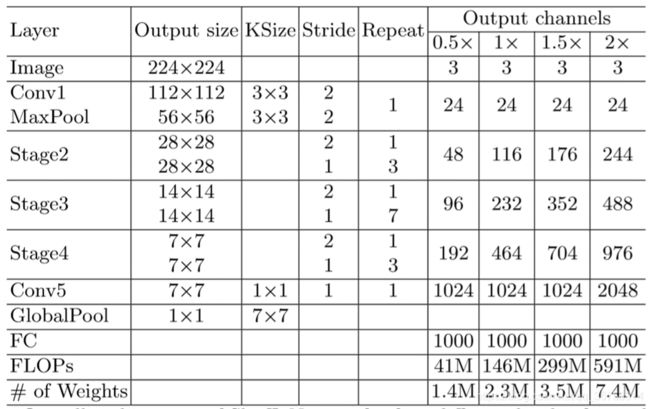

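The model is built according to the architecture table in the paper. Each Stage consists of one ShuffleNetV2 downsampling unit followed by several ShuffleNetV2 basic units. The input image has shape 224x224x3. The Output channels column lists several width multipliers, and usually only the 1x column is used. FLOPs denotes the number of floating-point multiply-add operations.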
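The shape annotations in the model code can be checked by hand. With padding='same', a Keras convolution or pooling layer with stride s produces ceil(n / s) outputs along each spatial dimension, so each stride-2 step halves 224 down to 7. The sketch traces the 1x configuration used in this article: a 24-channel Conv1, a stride-2 max pool, and stages with 116, 232 and 464 output channels. Each stage has one downsampling unit plus 3, 7 and 3 basic units, and a 1024-channel 1x1 conv follows the last stage. The helper names same_out and trace_shapes are hypothetical.

```python
import math


def same_out(size, stride):
    """Spatial output size of a Keras conv/pool layer with padding='same'."""
    return math.ceil(size / stride)


# (output channels, basic units after the downsampling unit) for the 1x model
STAGES = [(116, 3), (232, 7), (464, 3)]


def trace_shapes(size=224):
    """Return (layer, spatial size, channels) after each part of the network."""
    shapes = []
    size, channels = same_out(size, 2), 24         # Conv1: 3x3, stride 2, 24 channels
    shapes.append(("conv1", size, channels))
    size = same_out(size, 2)                       # MaxPool: 3x3, stride 2
    shapes.append(("maxpool", size, channels))
    for idx, (c_out, n) in enumerate(STAGES, start=2):
        size, channels = same_out(size, 2), c_out  # downsampling unit halves the size
        # the n basic units that follow keep size and channels unchanged
        shapes.append((f"stage{idx} ({1 + n} units)", size, channels))
    shapes.append(("conv5", size, 1024))           # 1x1 conv, stride 1
    return shapes


for name, s, c in trace_shapes():
    print(f"{name:18s} {s}x{s}x{c}")
```

The unit counts 4, 8 and 4 match the repeat counts of the three stages in the paper's table.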

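3.1 Building the modules

(1) Standard convolution module

The standard convolution module consists of a regular convolution, a batch normalization (BN) layer and a ReLU activation.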

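```python
# Imports used by all the snippets in this section
import tensorflow as tf
from tensorflow.keras import layers

#(1) Standard convolution block
def conv_block(input_tensor, filters, kernel_size, stride=1):
    # Convolution + batch normalization + ReLU activation
    x = layers.Conv2D(filters, kernel_size,
                      strides=stride,   # stride
                      padding='same',   # stride 1 keeps the feature-map size, stride 2 halves it
                      use_bias=False)(input_tensor)  # no bias needed because BN follows
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU()(x)  # ReLU activation
    return x  # tensor after one standard convolution
```

(2) Depthwise convolution module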

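The structure diagram in 2.2 shows that this block needs only a 3x3 depthwise convolution followed by a BN layer, with no ReLU. The 1x1 pointwise convolutions around it are built with conv_block.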

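```python
#(2) Depthwise convolution block
def depthwise_conv_block(input_tensor, stride=1):
    # Depthwise convolution + batch normalization (no activation)
    # No kernel count is passed: there is one kernel per input channel, each handling one channel
    x = layers.DepthwiseConv2D(kernel_size=(3, 3),  # 3x3 depthwise kernel
                               strides=stride,      # stride
                               padding='same',      # stride 1 keeps the size, stride 2 halves it
                               use_bias=False)(input_tensor)  # no bias needed because BN follows
    x = layers.BatchNormalization()(x)  # batch normalization
    return x  # tensor after the depthwise convolution
```

(3) Channel shuffle module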

The Channel Shuffle module rearranges the two groups of concatenated feature maps so that information is fused between the groups.

```python
#(3) Channel shuffle: exchange information across groups
def channel_shuffle(input_tensor, num=2):  # default: 2 groups, the shortcut and the convolved x
    # Static input shape: b = batch size, h, w = spatial size, c = number of channels
    b, h, w, c = input_tensor.shape
    # Reshape to [b, h, w, num, c//num]: the length-c channel vector becomes a num x (c//num) matrix
    x_reshaped = tf.reshape(input_tensor, [-1, h, w, num, c // num])
    # Transpose the last two axes to [b, h, w, c//num, num]: num rows x n columns become n rows x num columns
    x_transposed = tf.transpose(x_reshaped, [0, 1, 2, 4, 3])
    # Flatten back to one channel axis: the channels of shortcut and x are now interleaved
    output = tf.reshape(x_transposed, [-1, h, w, c])
    return output  # tensor with channels interleaved across groups
```

(4) ShuffleNetV2 basic unit

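When the stride is 1, the input feature map is first split evenly into two halves along the channel dimension. As the structure diagram in 2.2 shows, the left branch is an identity (its output equals its input) and the right branch extracts features. The outputs of the two branches are then concatenated, which restores the original number of channels.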
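To see why the shuffle matters in this unit, the sketch below replays split, concat and channel_shuffle(num=2) on channel labels instead of tensors. The helper split_concat_shuffle is hypothetical, and the right branch is modeled only by marking its channels with a prime. After the shuffle, the first half (the part the next unit's split passes straight through) already mixes untouched and processed channels.

```python
def split_concat_shuffle(labels):
    """Mimic channel split -> right branch -> concat -> channel shuffle (2 groups) on labels."""
    half = len(labels) // 2
    shortcut, branch = labels[:half], labels[half:]   # tf.split(..., 2, axis=-1)
    branch = [name + "'" for name in branch]          # mark channels processed by the right branch
    concat = shortcut + branch                        # tf.concat([shortcut, x], axis=-1)
    # channel_shuffle(num=2): reshape to (2, half), transpose to (half, 2), flatten
    return [c for pair in zip(concat[:half], concat[half:]) for c in pair]


out = split_concat_shuffle(["c0", "c1", "c2", "c3", "c4", "c5"])
print(out)  # ['c0', "c3'", 'c1', "c4'", 'c2', "c5'"]
```

Without the shuffle, the next unit's split would hand the identity branch the same untouched channels again, and half of the features would never be processed.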

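```python
#(4) Unit with stride 1 (basic unit)
def shufflent_unit_1(input_tensor, filters):
    # Split the input evenly along the channel axis: one half for the shortcut, one half for feature extraction
    shortcut, x = tf.split(input_tensor, 2, axis=-1)  # axis=-1 is the channel axis
    # shortcut and x now each have half of the original channels
    # 1x1 conv + 3x3 depthwise conv + 1x1 conv
    x = conv_block(x, filters // 2, kernel_size=(1, 1), stride=1)  # 1x1 conv, channel count unchanged
    x = depthwise_conv_block(x, stride=1)  # 3x3 depthwise conv
    x = conv_block(x, filters // 2, kernel_size=(1, 1), stride=1)  # 1x1 conv fuses information across channels
    # Concatenate shortcut and x; their spatial sizes must match
    x = tf.concat([shortcut, x], axis=-1)  # concatenate along the channel axis
    # Shuffle the two concatenated groups along the channel axis
    x = channel_shuffle(x)
    return x  # output of the stride-1 unit
```

(5) ShuffleNetV2 downsampling unit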

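When the stride is 2, the input channels are not split. The left branch applies a 3x3 depthwise convolution (stride 2) followed by a 1x1 convolution, so its output has half the height and width and the same number of channels. The right branch applies a 1x1 convolution to reduce the channels, then a 3x3 depthwise convolution (stride 2), then a 1x1 convolution to increase the channels.

Note that the left branch's output channels plus the right branch's output channels equal the output channels of the downsampling unit.

The left branch keeps the channel count unchanged (in_channel), and the unit's output channel count (out_channel) is given. The right branch must therefore produce out_channel - in_channel channels, and this value is used as the number of kernels in its last 1x1 convolution.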
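The channel bookkeeping for the three downsampling units of the 1x model is simple subtraction. The hypothetical helper below computes how many channels the right branch must output so that both branches together reach out_channel.

```python
def right_branch_channels(in_channel, out_channel):
    """Channels the right branch must output so that in_channel + right == out_channel."""
    right = out_channel - in_channel
    if right <= 0:
        raise ValueError("out_channel must be larger than in_channel")
    return right


# (in_channel, out_channel) of the three downsampling units in the 1x model
for c_in, c_out in [(24, 116), (116, 232), (232, 464)]:
    print(f"{c_in} -> {c_out}: right branch outputs {right_branch_channels(c_in, c_out)}")
```

The 92 channels for the first stage match conv2d_3 in the model summary in 3.3. This split follows this article's code. torchvision's ShuffleNetV2 instead gives each branch out_channel // 2 channels, so the per-layer counts of the two implementations differ.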

```python
#(5) Unit with stride 2 (downsampling unit)
def shufflenet_unit_2(input_tensor, out_channel):
    # Number of input channels
    in_channel = input_tensor.shape[-1]
    # No channel split: both branches take the full input
    shortcut = input_tensor
    # ① Left branch: depthwise conv + pointwise conv; output channels equal input channels
    shortcut = depthwise_conv_block(shortcut, stride=2)  # halves the feature-map size
    shortcut = conv_block(shortcut, filters=in_channel, kernel_size=(1, 1), stride=1)  # channel count unchanged
    # ② Right branch: 1x1 conv reduces channels + 3x3 depthwise conv + 1x1 conv increases channels
    x = conv_block(input_tensor, in_channel // 2, kernel_size=(1, 1), stride=1)
    x = depthwise_conv_block(x, stride=2)
    # Right-branch channels + left-branch channels == out_channel
    x = conv_block(x, out_channel - in_channel, kernel_size=(1, 1), stride=1)
    # ③ Concatenate both branches along the channel axis, so output.shape[-1] == out_channel
    output = tf.concat([shortcut, x], axis=-1)
    # ④ Shuffle the two concatenated groups along the channel axis
    output = channel_shuffle(output)
    return output  # output of the stride-2 unit
```

(6) Stage module

A Stage module consists of one downsampling unit followed by several basic units.

```python
#(6) Build a ShuffleNet stage
# A stage is one shufflenet_unit_2 downsampling unit followed by several shufflent_unit_1 basic units
def stage(input_tensor, filters, n):  # filters is the number of output channels
    # Downsampling unit
    x = shufflenet_unit_2(input_tensor, out_channel=filters)
    # Repeat the basic unit n times
    for i in range(n):
        x = shufflent_unit_1(x, filters=filters)
    return x  # output of the stage
```

3.2 Complete code

Putting the pieces above together gives the complete code below. Every line is commented. If you have questions, leave a comment.

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Model


#(1) Standard convolution block
def conv_block(input_tensor, filters, kernel_size, stride=1):
    # Convolution + batch normalization + ReLU activation
    x = layers.Conv2D(filters, kernel_size,
                      strides=stride,   # stride
                      padding='same',   # stride 1 keeps the feature-map size, stride 2 halves it
                      use_bias=False)(input_tensor)  # no bias needed because BN follows
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU()(x)  # ReLU activation
    return x  # tensor after one standard convolution


#(2) Depthwise convolution block
def depthwise_conv_block(input_tensor, stride=1):
    # Depthwise convolution + batch normalization (no activation)
    # No kernel count is passed: there is one kernel per input channel, each handling one channel
    x = layers.DepthwiseConv2D(kernel_size=(3, 3),  # 3x3 depthwise kernel
                               strides=stride,      # stride
                               padding='same',      # stride 1 keeps the size, stride 2 halves it
                               use_bias=False)(input_tensor)  # no bias needed because BN follows
    x = layers.BatchNormalization()(x)  # batch normalization
    return x  # tensor after the depthwise convolution


#(3) Channel shuffle: exchange information across groups
def channel_shuffle(input_tensor, num=2):  # default: 2 groups, the shortcut and the convolved x
    # Static input shape: b = batch size, h, w = spatial size, c = number of channels
    b, h, w, c = input_tensor.shape
    # Reshape to [b, h, w, num, c//num]: the channel vector becomes a num x (c//num) matrix
    x_reshaped = tf.reshape(input_tensor, [-1, h, w, num, c // num])
    # Transpose the last two axes to [b, h, w, c//num, num]
    x_transposed = tf.transpose(x_reshaped, [0, 1, 2, 4, 3])
    # Flatten back to one channel axis: the channels of shortcut and x are now interleaved
    output = tf.reshape(x_transposed, [-1, h, w, c])
    return output  # tensor with channels interleaved across groups


#(4) Unit with stride 1 (basic unit)
def shufflent_unit_1(input_tensor, filters):
    # Split the input evenly along the channel axis: half for the shortcut, half for feature extraction
    shortcut, x = tf.split(input_tensor, 2, axis=-1)  # axis=-1 is the channel axis
    # 1x1 conv + 3x3 depthwise conv + 1x1 conv on half of the channels
    x = conv_block(x, filters // 2, kernel_size=(1, 1), stride=1)  # 1x1 conv, channel count unchanged
    x = depthwise_conv_block(x, stride=1)  # 3x3 depthwise conv
    x = conv_block(x, filters // 2, kernel_size=(1, 1), stride=1)  # 1x1 conv fuses information across channels
    # Concatenate shortcut and x along the channel axis; their spatial sizes must match
    x = tf.concat([shortcut, x], axis=-1)
    # Shuffle the two concatenated groups along the channel axis
    x = channel_shuffle(x)
    return x  # output of the stride-1 unit


#(5) Unit with stride 2 (downsampling unit)
def shufflenet_unit_2(input_tensor, out_channel):
    # Number of input channels
    in_channel = input_tensor.shape[-1]
    # No channel split: both branches take the full input
    shortcut = input_tensor
    # ① Left branch: depthwise conv + pointwise conv; output channels equal input channels
    shortcut = depthwise_conv_block(shortcut, stride=2)  # halves the feature-map size
    shortcut = conv_block(shortcut, filters=in_channel, kernel_size=(1, 1), stride=1)  # channel count unchanged
    # ② Right branch: 1x1 conv reduces channels + 3x3 depthwise conv + 1x1 conv increases channels
    x = conv_block(input_tensor, in_channel // 2, kernel_size=(1, 1), stride=1)
    x = depthwise_conv_block(x, stride=2)
    # Right-branch channels + left-branch channels == out_channel
    x = conv_block(x, out_channel - in_channel, kernel_size=(1, 1), stride=1)
    # ③ Concatenate both branches along the channel axis, so output.shape[-1] == out_channel
    output = tf.concat([shortcut, x], axis=-1)
    # ④ Shuffle the two concatenated groups along the channel axis
    output = channel_shuffle(output)
    return output  # output of the stride-2 unit


#(6) Build a ShuffleNet stage
# A stage is one shufflenet_unit_2 downsampling unit followed by several shufflent_unit_1 basic units
def stage(input_tensor, filters, n):  # filters is the number of output channels
    x = shufflenet_unit_2(input_tensor, out_channel=filters)  # downsampling unit
    for i in range(n):  # repeat the basic unit n times
        x = shufflent_unit_1(x, filters=filters)
    return x  # output of the stage


#(7) Build the network
def ShuffleNet(input_shape, classes):
    # Input tensor of the network
    inputs = keras.Input(shape=input_shape)
    # [224,224,3]==>[112,112,24]
    x = layers.Conv2D(filters=24, kernel_size=(3, 3), strides=2, padding='same')(inputs)  # regular convolution
    # [112,112,24]==>[56,56,24]
    x = layers.MaxPooling2D(pool_size=(3, 3), strides=2, padding='same')(x)  # max pooling
    # [56,56,24]==>[28,28,116]
    x = stage(x, filters=116, n=3)
    # [28,28,116]==>[14,14,232]
    x = stage(x, filters=232, n=7)
    # [14,14,232]==>[7,7,464]
    x = stage(x, filters=464, n=3)
    # [7,7,464]==>[7,7,1024]
    x = layers.Conv2D(filters=1024, kernel_size=(1, 1), strides=1, padding='same')(x)  # 1x1 regular convolution
    # [7,7,1024]==>[None,1024]
    x = layers.GlobalAveragePooling2D()(x)  # global average pooling over the spatial dimensions
    # Fully connected output layer as in the paper (a convolution followed by Reshape would also work)
    logits = layers.Dense(classes)(x)  # raw logits; apply softmax in the loss during training for numerical stability
    # Assemble the model
    model = Model(inputs, logits)
    return model  # the network model


#(8) Instantiate the model
if __name__ == '__main__':
    model = ShuffleNet(input_shape=[224, 224, 3],  # input image shape
                       classes=1000)  # number of classes
    model.summary()  # print the network structure
```

3.3 Viewing the network architecture

model.summary() prints the network structure. ShuffleNetV2 has only a little over two million parameters, compared with over three million for MobileNetV2, so it is very lightweight.
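The per-layer parameter counts in the summary below can be reproduced by hand. A Keras Conv2D has k*k*c_in*c_out weights, plus c_out biases when use_bias=True. DepthwiseConv2D with the default depth multiplier of 1 has k*k*c weights. BatchNormalization reports 4*c parameters: gamma and beta are trainable, while the moving mean and variance are not. The helper names are hypothetical, and the layer names in the comments refer to the summary.

```python
def conv_params(k, c_in, c_out, use_bias):
    """Conv2D parameters: k*k*c_in*c_out weights, plus c_out biases if use_bias."""
    return k * k * c_in * c_out + (c_out if use_bias else 0)


def depthwise_params(k, c):
    """DepthwiseConv2D (depth_multiplier=1, use_bias=False): one k x k kernel per channel."""
    return k * k * c


def bn_params(c):
    """BatchNormalization: gamma, beta, moving mean, moving variance per channel."""
    return 4 * c


print(conv_params(3, 3, 24, use_bias=True))     # conv2d (stem keeps its bias): 672
print(conv_params(1, 24, 12, use_bias=False))   # conv2d_2: 288
print(conv_params(1, 12, 92, use_bias=False))   # conv2d_3: 1104
print(conv_params(1, 58, 58, use_bias=False))   # conv2d_4: 3364
print(depthwise_params(3, 58))                  # depthwise_conv2d_2: 522
print(bn_params(58))                            # batch_normalization_5: 232
```

Every depthwise or 1x1 layer adds only a few thousand parameters, so most of the total comes from the 1x1 convolutions in later stages and from the final dense layer.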

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 112, 112, 24) 672 input_1[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 56, 56, 24) 0 conv2d[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 56, 56, 12) 288 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 56, 56, 12) 48 conv2d_2[0][0]

__________________________________________________________________________________________________

re_lu_1 (ReLU) (None, 56, 56, 12) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

depthwise_conv2d (DepthwiseConv (None, 28, 28, 24) 216 max_pooling2d[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_1 (DepthwiseCo (None, 28, 28, 12) 108 re_lu_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 28, 28, 24) 96 depthwise_conv2d[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 28, 28, 12) 48 depthwise_conv2d_1[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 28, 28, 24) 576 batch_normalization[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 28, 28, 92) 1104 batch_normalization_3[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 28, 28, 24) 96 conv2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 28, 28, 92) 368 conv2d_3[0][0]

__________________________________________________________________________________________________

re_lu (ReLU) (None, 28, 28, 24) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

re_lu_2 (ReLU) (None, 28, 28, 92) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

tf.concat (TFOpLambda) (None, 28, 28, 116) 0 re_lu[0][0]

re_lu_2[0][0]

__________________________________________________________________________________________________

tf.reshape (TFOpLambda) (None, 28, 28, 2, 58 0 tf.concat[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose (TFOpLam (None, 28, 28, 58, 2 0 tf.reshape[0][0]

__________________________________________________________________________________________________

tf.reshape_1 (TFOpLambda) (None, 28, 28, 116) 0 tf.compat.v1.transpose[0][0]

__________________________________________________________________________________________________

tf.split (TFOpLambda) [(None, 28, 28, 58), 0 tf.reshape_1[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 28, 28, 58) 3364 tf.split[0][1]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 28, 28, 58) 232 conv2d_4[0][0]

__________________________________________________________________________________________________

re_lu_3 (ReLU) (None, 28, 28, 58) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_2 (DepthwiseCo (None, 28, 28, 58) 522 re_lu_3[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 28, 28, 58) 232 depthwise_conv2d_2[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 28, 28, 58) 3364 batch_normalization_6[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 28, 28, 58) 232 conv2d_5[0][0]

__________________________________________________________________________________________________

re_lu_4 (ReLU) (None, 28, 28, 58) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

tf.concat_1 (TFOpLambda) (None, 28, 28, 116) 0 tf.split[0][0]

re_lu_4[0][0]

__________________________________________________________________________________________________

tf.reshape_2 (TFOpLambda) (None, 28, 28, 2, 58 0 tf.concat_1[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_1 (TFOpL (None, 28, 28, 58, 2 0 tf.reshape_2[0][0]

__________________________________________________________________________________________________

tf.reshape_3 (TFOpLambda) (None, 28, 28, 116) 0 tf.compat.v1.transpose_1[0][0]

__________________________________________________________________________________________________

tf.split_1 (TFOpLambda) [(None, 28, 28, 58), 0 tf.reshape_3[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 28, 28, 58) 3364 tf.split_1[0][1]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 28, 28, 58) 232 conv2d_6[0][0]

__________________________________________________________________________________________________

re_lu_5 (ReLU) (None, 28, 28, 58) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_3 (DepthwiseCo (None, 28, 28, 58) 522 re_lu_5[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 28, 28, 58) 232 depthwise_conv2d_3[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 28, 28, 58) 3364 batch_normalization_9[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 28, 28, 58) 232 conv2d_7[0][0]

__________________________________________________________________________________________________

re_lu_6 (ReLU) (None, 28, 28, 58) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

tf.concat_2 (TFOpLambda) (None, 28, 28, 116) 0 tf.split_1[0][0]

re_lu_6[0][0]

__________________________________________________________________________________________________

tf.reshape_4 (TFOpLambda) (None, 28, 28, 2, 58 0 tf.concat_2[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_2 (TFOpL (None, 28, 28, 58, 2 0 tf.reshape_4[0][0]

__________________________________________________________________________________________________

tf.reshape_5 (TFOpLambda) (None, 28, 28, 116) 0 tf.compat.v1.transpose_2[0][0]

__________________________________________________________________________________________________

tf.split_2 (TFOpLambda) [(None, 28, 28, 58), 0 tf.reshape_5[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 28, 28, 58) 3364 tf.split_2[0][1]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 28, 28, 58) 232 conv2d_8[0][0]

__________________________________________________________________________________________________

re_lu_7 (ReLU) (None, 28, 28, 58) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_4 (DepthwiseCo (None, 28, 28, 58) 522 re_lu_7[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 28, 28, 58) 232 depthwise_conv2d_4[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 28, 28, 58) 3364 batch_normalization_12[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 28, 28, 58) 232 conv2d_9[0][0]

__________________________________________________________________________________________________

re_lu_8 (ReLU) (None, 28, 28, 58) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

tf.concat_3 (TFOpLambda) (None, 28, 28, 116) 0 tf.split_2[0][0]

re_lu_8[0][0]

__________________________________________________________________________________________________

tf.reshape_6 (TFOpLambda) (None, 28, 28, 2, 58 0 tf.concat_3[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_3 (TFOpL (None, 28, 28, 58, 2 0 tf.reshape_6[0][0]

__________________________________________________________________________________________________

tf.reshape_7 (TFOpLambda) (None, 28, 28, 116) 0 tf.compat.v1.transpose_3[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 28, 28, 58) 6728 tf.reshape_7[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 28, 28, 58) 232 conv2d_11[0][0]

__________________________________________________________________________________________________

re_lu_10 (ReLU) (None, 28, 28, 58) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_5 (DepthwiseCo (None, 14, 14, 116) 1044 tf.reshape_7[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_6 (DepthwiseCo (None, 14, 14, 58) 522 re_lu_10[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 14, 14, 58) 232 depthwise_conv2d_6[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_14[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 14, 14, 116) 6728 batch_normalization_17[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 14, 14, 116) 464 conv2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 14, 14, 116) 464 conv2d_12[0][0]

__________________________________________________________________________________________________

re_lu_9 (ReLU) (None, 14, 14, 116) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

re_lu_11 (ReLU) (None, 14, 14, 116) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

tf.concat_4 (TFOpLambda) (None, 14, 14, 232) 0 re_lu_9[0][0]

re_lu_11[0][0]

__________________________________________________________________________________________________

tf.reshape_8 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_4[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_4 (TFOpL (None, 14, 14, 116, 0 tf.reshape_8[0][0]

__________________________________________________________________________________________________

tf.reshape_9 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_4[0][0]

__________________________________________________________________________________________________

tf.split_3 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_9[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 14, 14, 116) 13456 tf.split_3[0][1]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 14, 14, 116) 464 conv2d_13[0][0]

__________________________________________________________________________________________________

re_lu_12 (ReLU) (None, 14, 14, 116) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_7 (DepthwiseCo (None, 14, 14, 116) 1044 re_lu_12[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_7[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_20[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 14, 14, 116) 464 conv2d_14[0][0]

__________________________________________________________________________________________________

re_lu_13 (ReLU) (None, 14, 14, 116) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

tf.concat_5 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_3[0][0]

re_lu_13[0][0]

__________________________________________________________________________________________________

tf.reshape_10 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_5[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_5 (TFOpL (None, 14, 14, 116, 0 tf.reshape_10[0][0]

__________________________________________________________________________________________________

tf.reshape_11 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_5[0][0]

__________________________________________________________________________________________________

tf.split_4 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_11[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 14, 14, 116) 13456 tf.split_4[0][1]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 14, 14, 116) 464 conv2d_15[0][0]

__________________________________________________________________________________________________

re_lu_14 (ReLU) (None, 14, 14, 116) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_8 (DepthwiseCo (None, 14, 14, 116) 1044 re_lu_14[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_8[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_23[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 14, 14, 116) 464 conv2d_16[0][0]

__________________________________________________________________________________________________

re_lu_15 (ReLU) (None, 14, 14, 116) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

tf.concat_6 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_4[0][0]

re_lu_15[0][0]

__________________________________________________________________________________________________

tf.reshape_12 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_6[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_6 (TFOpL (None, 14, 14, 116, 0 tf.reshape_12[0][0]

__________________________________________________________________________________________________

tf.reshape_13 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_6[0][0]

__________________________________________________________________________________________________

tf.split_5 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_13[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 14, 14, 116) 13456 tf.split_5[0][1]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 14, 14, 116) 464 conv2d_17[0][0]

__________________________________________________________________________________________________

re_lu_16 (ReLU) (None, 14, 14, 116) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_9 (DepthwiseCo (None, 14, 14, 116) 1044 re_lu_16[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_9[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_26[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 14, 14, 116) 464 conv2d_18[0][0]

__________________________________________________________________________________________________

re_lu_17 (ReLU) (None, 14, 14, 116) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

tf.concat_7 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_5[0][0]

re_lu_17[0][0]

__________________________________________________________________________________________________

tf.reshape_14 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_7[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_7 (TFOpL (None, 14, 14, 116, 0 tf.reshape_14[0][0]

__________________________________________________________________________________________________

tf.reshape_15 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_7[0][0]

__________________________________________________________________________________________________

tf.split_6 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_15[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 14, 14, 116) 13456 tf.split_6[0][1]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 14, 14, 116) 464 conv2d_19[0][0]

__________________________________________________________________________________________________

re_lu_18 (ReLU) (None, 14, 14, 116) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_10 (DepthwiseC (None, 14, 14, 116) 1044 re_lu_18[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_10[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_29[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 14, 14, 116) 464 conv2d_20[0][0]

__________________________________________________________________________________________________

re_lu_19 (ReLU) (None, 14, 14, 116) 0 batch_normalization_30[0][0]

__________________________________________________________________________________________________

tf.concat_8 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_6[0][0]

re_lu_19[0][0]

__________________________________________________________________________________________________

tf.reshape_16 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_8[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_8 (TFOpL (None, 14, 14, 116, 0 tf.reshape_16[0][0]

__________________________________________________________________________________________________

tf.reshape_17 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_8[0][0]

__________________________________________________________________________________________________

tf.split_7 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_17[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 14, 14, 116) 13456 tf.split_7[0][1]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 14, 14, 116) 464 conv2d_21[0][0]

__________________________________________________________________________________________________

re_lu_20 (ReLU) (None, 14, 14, 116) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_11 (DepthwiseC (None, 14, 14, 116) 1044 re_lu_20[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_11[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_32[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 14, 14, 116) 464 conv2d_22[0][0]

__________________________________________________________________________________________________

re_lu_21 (ReLU) (None, 14, 14, 116) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

tf.concat_9 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_7[0][0]

re_lu_21[0][0]

__________________________________________________________________________________________________

tf.reshape_18 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_9[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_9 (TFOpL (None, 14, 14, 116, 0 tf.reshape_18[0][0]

__________________________________________________________________________________________________

tf.reshape_19 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_9[0][0]

__________________________________________________________________________________________________

tf.split_8 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_19[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 14, 14, 116) 13456 tf.split_8[0][1]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 14, 14, 116) 464 conv2d_23[0][0]

__________________________________________________________________________________________________

re_lu_22 (ReLU) (None, 14, 14, 116) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_12 (DepthwiseC (None, 14, 14, 116) 1044 re_lu_22[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_12[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_35[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 14, 14, 116) 464 conv2d_24[0][0]

__________________________________________________________________________________________________

re_lu_23 (ReLU) (None, 14, 14, 116) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

tf.concat_10 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_8[0][0]

re_lu_23[0][0]

__________________________________________________________________________________________________

tf.reshape_20 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_10[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_10 (TFOp (None, 14, 14, 116, 0 tf.reshape_20[0][0]

__________________________________________________________________________________________________

tf.reshape_21 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_10[0][0]

__________________________________________________________________________________________________

tf.split_9 (TFOpLambda) [(None, 14, 14, 116) 0 tf.reshape_21[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 14, 14, 116) 13456 tf.split_9[0][1]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 14, 14, 116) 464 conv2d_25[0][0]

__________________________________________________________________________________________________

re_lu_24 (ReLU) (None, 14, 14, 116) 0 batch_normalization_37[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_13 (DepthwiseC (None, 14, 14, 116) 1044 re_lu_24[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 14, 14, 116) 464 depthwise_conv2d_13[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 14, 14, 116) 13456 batch_normalization_38[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 14, 14, 116) 464 conv2d_26[0][0]

__________________________________________________________________________________________________

re_lu_25 (ReLU) (None, 14, 14, 116) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

tf.concat_11 (TFOpLambda) (None, 14, 14, 232) 0 tf.split_9[0][0]

re_lu_25[0][0]

__________________________________________________________________________________________________

tf.reshape_22 (TFOpLambda) (None, 14, 14, 2, 11 0 tf.concat_11[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_11 (TFOp (None, 14, 14, 116, 0 tf.reshape_22[0][0]

__________________________________________________________________________________________________

tf.reshape_23 (TFOpLambda) (None, 14, 14, 232) 0 tf.compat.v1.transpose_11[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 14, 14, 116) 26912 tf.reshape_23[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 14, 14, 116) 464 conv2d_28[0][0]

__________________________________________________________________________________________________

re_lu_27 (ReLU) (None, 14, 14, 116) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_14 (DepthwiseC (None, 7, 7, 232) 2088 tf.reshape_23[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_15 (DepthwiseC (None, 7, 7, 116) 1044 re_lu_27[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 7, 7, 232) 928 depthwise_conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 7, 7, 116) 464 depthwise_conv2d_15[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 7, 7, 232) 53824 batch_normalization_40[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 7, 7, 232) 26912 batch_normalization_43[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 7, 7, 232) 928 conv2d_27[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 7, 7, 232) 928 conv2d_29[0][0]

__________________________________________________________________________________________________

re_lu_26 (ReLU) (None, 7, 7, 232) 0 batch_normalization_41[0][0]

__________________________________________________________________________________________________

re_lu_28 (ReLU) (None, 7, 7, 232) 0 batch_normalization_44[0][0]

__________________________________________________________________________________________________

tf.concat_12 (TFOpLambda) (None, 7, 7, 464) 0 re_lu_26[0][0]

re_lu_28[0][0]

__________________________________________________________________________________________________

tf.reshape_24 (TFOpLambda) (None, 7, 7, 2, 232) 0 tf.concat_12[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_12 (TFOp (None, 7, 7, 232, 2) 0 tf.reshape_24[0][0]

__________________________________________________________________________________________________

tf.reshape_25 (TFOpLambda) (None, 7, 7, 464) 0 tf.compat.v1.transpose_12[0][0]

__________________________________________________________________________________________________

tf.split_10 (TFOpLambda) [(None, 7, 7, 232), 0 tf.reshape_25[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 7, 7, 232) 53824 tf.split_10[0][1]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 7, 7, 232) 928 conv2d_30[0][0]

__________________________________________________________________________________________________

re_lu_29 (ReLU) (None, 7, 7, 232) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_16 (DepthwiseC (None, 7, 7, 232) 2088 re_lu_29[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 7, 7, 232) 928 depthwise_conv2d_16[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 7, 7, 232) 53824 batch_normalization_46[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 7, 7, 232) 928 conv2d_31[0][0]

__________________________________________________________________________________________________

re_lu_30 (ReLU) (None, 7, 7, 232) 0 batch_normalization_47[0][0]

__________________________________________________________________________________________________

tf.concat_13 (TFOpLambda) (None, 7, 7, 464) 0 tf.split_10[0][0]

re_lu_30[0][0]

__________________________________________________________________________________________________

tf.reshape_26 (TFOpLambda) (None, 7, 7, 2, 232) 0 tf.concat_13[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_13 (TFOp (None, 7, 7, 232, 2) 0 tf.reshape_26[0][0]

__________________________________________________________________________________________________

tf.reshape_27 (TFOpLambda) (None, 7, 7, 464) 0 tf.compat.v1.transpose_13[0][0]

__________________________________________________________________________________________________

tf.split_11 (TFOpLambda) [(None, 7, 7, 232), 0 tf.reshape_27[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 7, 7, 232) 53824 tf.split_11[0][1]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 7, 7, 232) 928 conv2d_32[0][0]

__________________________________________________________________________________________________

re_lu_31 (ReLU) (None, 7, 7, 232) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_17 (DepthwiseC (None, 7, 7, 232) 2088 re_lu_31[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 7, 7, 232) 928 depthwise_conv2d_17[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 7, 7, 232) 53824 batch_normalization_49[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 7, 7, 232) 928 conv2d_33[0][0]

__________________________________________________________________________________________________

re_lu_32 (ReLU) (None, 7, 7, 232) 0 batch_normalization_50[0][0]

__________________________________________________________________________________________________

tf.concat_14 (TFOpLambda) (None, 7, 7, 464) 0 tf.split_11[0][0]

re_lu_32[0][0]

__________________________________________________________________________________________________

tf.reshape_28 (TFOpLambda) (None, 7, 7, 2, 232) 0 tf.concat_14[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_14 (TFOp (None, 7, 7, 232, 2) 0 tf.reshape_28[0][0]

__________________________________________________________________________________________________

tf.reshape_29 (TFOpLambda) (None, 7, 7, 464) 0 tf.compat.v1.transpose_14[0][0]

__________________________________________________________________________________________________

tf.split_12 (TFOpLambda) [(None, 7, 7, 232), 0 tf.reshape_29[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 7, 7, 232) 53824 tf.split_12[0][1]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 7, 7, 232) 928 conv2d_34[0][0]

__________________________________________________________________________________________________

re_lu_33 (ReLU) (None, 7, 7, 232) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_18 (DepthwiseC (None, 7, 7, 232) 2088 re_lu_33[0][0]

__________________________________________________________________________________________________

batch_normalization_52 (BatchNo (None, 7, 7, 232) 928 depthwise_conv2d_18[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 7, 7, 232) 53824 batch_normalization_52[0][0]

__________________________________________________________________________________________________

batch_normalization_53 (BatchNo (None, 7, 7, 232) 928 conv2d_35[0][0]

__________________________________________________________________________________________________

re_lu_34 (ReLU) (None, 7, 7, 232) 0 batch_normalization_53[0][0]

__________________________________________________________________________________________________

tf.concat_15 (TFOpLambda) (None, 7, 7, 464) 0 tf.split_12[0][0]

re_lu_34[0][0]

__________________________________________________________________________________________________

tf.reshape_30 (TFOpLambda) (None, 7, 7, 2, 232) 0 tf.concat_15[0][0]

__________________________________________________________________________________________________

tf.compat.v1.transpose_15 (TFOp (None, 7, 7, 232, 2) 0 tf.reshape_30[0][0]

__________________________________________________________________________________________________

tf.reshape_31 (TFOpLambda) (None, 7, 7, 464) 0 tf.compat.v1.transpose_15[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 7, 7, 1024) 476160 tf.reshape_31[0][0]

__________________________________________________________________________________________________

global_average_pooling2d (Globa (None, 1024) 0 conv2d_36[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 1000) 1025000 global_average_pooling2d[0][0]

==================================================================================================

Total params: 2,216,440

Trainable params: 2,203,236

Non-trainable params: 13,204

__________________________________________________________________________________________________
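Every value in the Param # column can be re-derived from a few closed-form counts. The sketch below does this for the layers in this part of the summary. The helper names are hypothetical. The `use_bias=False` setting for the convolutions inside the units is an assumption inferred from the numbers, not something the log states. For example, conv2d_19 has exactly 116 x 116 = 13,456 weights, which leaves no room for a bias.

```python
# Hypothetical helpers that re-derive the "Param #" column of the summary above.
# Assumption (inferred from the numbers, not stated in the log): the 1x1 and
# depthwise convolutions inside the units use use_bias=False, while the final
# 1x1 conv (conv2d_36) and the Dense classifier keep their biases.


def conv1x1_params(c_in, c_out, bias=False):
    """1x1 Conv2D: one c_in-long weight vector per output channel, plus optional bias."""
    return c_in * c_out + (c_out if bias else 0)


def depthwise3x3_params(channels, bias=False):
    """3x3 DepthwiseConv2D: a single 3x3 kernel per input channel."""
    return 3 * 3 * channels + (channels if bias else 0)


def batchnorm_params(channels):
    """gamma + beta (trainable) and moving_mean + moving_variance (non-trainable)."""
    return 4 * channels


def dense_params(n_in, n_out):
    """Fully connected layer: weight matrix plus bias."""
    return n_in * n_out + n_out


def basic_unit_params(c):
    """Stride-1 unit: only the half taken from tf.split(...)[1] is convolved.
    1x1 conv-BN-ReLU -> 3x3 DW-BN -> 1x1 conv-BN-ReLU, all with c channels."""
    return (conv1x1_params(c, c) + batchnorm_params(c)
            + depthwise3x3_params(c) + batchnorm_params(c)
            + conv1x1_params(c, c) + batchnorm_params(c))


def downsample_unit_params(c_in, c_mid, c_half):
    """Stride-2 unit with two branches; channel widths are read off the summary.
    Left:  3x3 DW(s=2)-BN -> 1x1 conv-BN-ReLU                    (c_in -> c_half)
    Right: 1x1 conv-BN-ReLU -> 3x3 DW(s=2)-BN -> 1x1 conv-BN-ReLU (c_in -> c_mid -> c_half)"""
    left = (depthwise3x3_params(c_in) + batchnorm_params(c_in)
            + conv1x1_params(c_in, c_half) + batchnorm_params(c_half))
    right = (conv1x1_params(c_in, c_mid) + batchnorm_params(c_mid)
             + depthwise3x3_params(c_mid) + batchnorm_params(c_mid)
             + conv1x1_params(c_mid, c_half) + batchnorm_params(c_half))
    return left + right


if __name__ == "__main__":
    print(conv1x1_params(116, 116))               # 13456   (conv2d_19)
    print(depthwise3x3_params(116))               # 1044    (depthwise_conv2d_10)
    print(batchnorm_params(116))                  # 464     (batch_normalization_28)
    print(conv1x1_params(232, 232))               # 53824   (conv2d_30)
    print(conv1x1_params(464, 1024, bias=True))   # 476160  (conv2d_36)
    print(dense_params(1024, 1000))               # 1025000 (dense)
    print(basic_unit_params(116))                 # 29348   (one stride-1 unit at 14x14)
    print(downsample_unit_params(232, 116, 232))  # 114492  (stride-2 unit, 14x14 -> 7x7)
```

BatchNormalization's moving mean and moving variance are not updated by gradient descent. Keras therefore reports them as non-trainable, so the Non-trainable params figure (13,204) is exactly half of all BN parameters in the model, and 2,203,236 + 13,204 = 2,216,440 matches Total params.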
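In the summary, each tf.concat is followed by a reshape, transpose, reshape triplet. One example is tf.reshape_24 (None, 7, 7, 2, 232), then tf.compat.v1.transpose_12 (None, 7, 7, 232, 2), then tf.reshape_25 (None, 7, 7, 464). This is the channel shuffle from 1.2 Channel Shuffle, applied with 2 groups. The minimal sketch below reproduces it in NumPy as a stand-in for tf.reshape / tf.transpose, which have the same reshape and axis-permutation semantics. `channel_shuffle` is a hypothetical helper name, not the author's function.

```python
import numpy as np


def channel_shuffle(x, groups=2):
    """Channel shuffle on an NHWC array: reshape -> transpose -> reshape."""
    n, h, w, c = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    # (N, H, W, C) -> (N, H, W, groups, C // groups), cf. tf.reshape_24: (None, 7, 7, 2, 232)
    x = x.reshape(n, h, w, groups, c // groups)
    # swap the last two axes, cf. tf.compat.v1.transpose_12: (None, 7, 7, 232, 2)
    x = x.transpose(0, 1, 2, 4, 3)
    # flatten back to C channels, cf. tf.reshape_25: (None, 7, 7, 464)
    return x.reshape(n, h, w, c)


# Stage-4 sized input: channel k holds the value k at every spatial position.
feat = np.tile(np.arange(464), (1, 7, 7, 1))
out = channel_shuffle(feat, groups=2)
print(out.shape)                   # (1, 7, 7, 464)
print(out[0, 0, 0, :6].tolist())   # [0, 232, 1, 233, 2, 234]

# The 12-channel, 3-group example from section 1.2 (groups 1~4, 5~8, 9~12).
demo = channel_shuffle(np.arange(1, 13).reshape(1, 1, 1, 12), groups=3)
print(demo.ravel().tolist())       # [1, 5, 9, 2, 6, 10, 3, 7, 11, 4, 8, 12]
```

Because channels from the two halves of the previous concat are now interleaved (0, 232, 1, 233, ...), each half produced by the following tf.split (for example tf.split_10) carries features from both branches. This is how the stride-1 units exchange information without a grouped convolution.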