[Learning Frameworks from Official Examples: TensorFlow/Keras] Text Classification with a Bidirectional LSTM


Keras official example link

TensorFlow official example link

Paddle official example link

PyTorch official example link

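Note: this series only aims to help you quickly understand, learn, and independently use these frameworks for deep learning research; please study the theory on your own. The classic official examples of each framework are very well written and well worth studying. Once you fully understand them, a few modifications are enough to solve most common related tasks.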

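Summary: [Learning Frameworks from Official Examples: Keras] Text classification with a bidirectional LSTM. We train a two-layer bidirectional LSTM and use it for binary sentiment classification (pos/neg, i.e. positive/negative) on the IMDB movie review dataset.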

Contents

- [Learning Frameworks from Official Examples: TensorFlow/Keras] Text Classification with a Bidirectional LSTM

- 1 Setup

- 2 Load the IMDB movie review sentiment data

- 3 Build the model

- 4 Train and evaluate the model

- 5 Summary

1 Setup

Import the required packages and set the hyperparameters.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

max_features = 20000  # Only consider the top 20k words
maxlen = 200  # Pad or truncate each movie review to 200 tokens
```

2 Load the IMDB movie review sentiment data

Load the training data.

- Official example

```python
(x_train, y_train), (x_val, y_val) = keras.datasets.imdb.load_data(
    num_words=max_features
)
print(len(x_train), "Training sequences")
print(len(x_val), "Validation sequences")
x_train = keras.preprocessing.sequence.pad_sequences(x_train, maxlen=maxlen)
x_val = keras.preprocessing.sequence.pad_sequences(x_val, maxlen=maxlen)
```
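By default `pad_sequences` pads and truncates at the front (`padding="pre"`, `truncating="pre"`), so for reviews longer than `maxlen` the official pipeline keeps the last 200 tokens, whereas `TextVectorization(output_sequence_length=maxlen)` in the local example keeps the first 200 and pads at the end. The NumPy sketch below re-implements only those default settings for illustration; `pad_pre` is a hypothetical helper, not the Keras source.

```python
import numpy as np


def pad_pre(sequences, maxlen, value=0):
    """Illustrative re-implementation of pad_sequences' defaults
    (padding='pre', truncating='pre'); hypothetical helper, not Keras code."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for row, seq in enumerate(sequences):
        kept = seq[-maxlen:]  # truncating='pre': drop the earliest tokens
        if len(kept):
            out[row, -len(kept):] = kept  # padding='pre': fill values go in front
    return out


print(pad_pre([[1, 2, 3], [1, 2, 3, 4, 5, 6]], maxlen=4))
# [[0 1 2 3]
#  [3 4 5 6]]
```

The second row shows the truncation side: tokens `1` and `2` at the start of the long sequence are the ones dropped.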

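- Local example

I recommend reading the data from local files instead. The official example only works with the data bundled in the official dataset package and skips the general techniques you need for your own data, such as reading and preprocessing.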

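Local data layout:

- `/input/`
  - `train/`
    - `pos/1.txt`
    - `neg/1.txt`
  - `test/`
    - `pos/1.txt`
    - `neg/1.txt`

The code below reads these folders from `./input/aclImdb/train/` and `./input/aclImdb/test/`, i.e. with the extracted `aclImdb` archive placed inside `input/`.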

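This example shows one way to read the text into a pandas DataFrame, so the reviews are loaded as a DataFrame.

For the directory layout above, a simpler alternative is `tf.keras.preprocessing.text_dataset_from_directory`.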
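For reference, that call would look like `tf.keras.preprocessing.text_dataset_from_directory("./input/aclImdb/train", batch_size=32)` (newer versions also expose it as `tf.keras.utils.text_dataset_from_directory`), and it returns a `tf.data.Dataset` of `(texts, labels)` batches. With the default `labels="inferred"`, each subfolder is one class, numbered in alphanumeric order of the folder names, and only `.txt` files are read. The standard-library sketch below imitates just that labeling rule on a throwaway folder; `infer_labels` is a hypothetical helper that ignores batching and shuffling. It shows that `neg` would get label 0 and `pos` label 1, the reverse of the DataFrame code below. Note also that `train/` in the extracted IMDB archive contains an extra `unsup/` folder, which would become a third class unless it is moved out first.

```python
import os
import tempfile


def infer_labels(directory):
    """Hypothetical sketch of labels='inferred': every subfolder is a class,
    and class indices follow the alphanumeric order of the folder names."""
    class_names = sorted(
        d for d in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, d))
    )
    samples = []
    for label, name in enumerate(class_names):
        folder = os.path.join(directory, name)
        for fname in sorted(os.listdir(folder)):
            if fname.endswith(".txt"):  # only .txt files are considered
                with open(os.path.join(folder, fname), encoding="utf-8") as f:
                    samples.append((f.read(), label))
    return class_names, samples


# Build a tiny temporary copy of the train/ layout and label it
with tempfile.TemporaryDirectory() as root:
    for cls, text in [("pos", "A wonderful film."), ("neg", "A dull film.")]:
        os.makedirs(os.path.join(root, cls))
        with open(os.path.join(root, cls, "1.txt"), "w", encoding="utf-8") as f:
            f.write(text)
    class_names, samples = infer_labels(root)

print(class_names)  # ['neg', 'pos']
print(samples)      # [('A dull film.', 0), ('A wonderful film.', 1)]
```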

```python
import os
import re
import string

import numpy as np
import pandas as pd
import tensorflow as tf
from tqdm import tqdm
# In newer TF versions (2.6+) this layer is also available as tf.keras.layers.TextVectorization
from tensorflow.keras.layers.experimental.preprocessing import TextVectorization


def get_text_list_from_files(files):
    # Each IMDB review file holds one review, so read each file as one text
    text_list = []
    files_name = os.listdir(files)
    for name in tqdm(files_name):
        with open(os.path.join(files, name), encoding="utf-8") as f:
            text_list.append(f.read())
    return text_list


def get_data_from_text_files(folder_name):
    pos_files = "./input/aclImdb/{}/pos/".format(folder_name)
    pos_texts = get_text_list_from_files(pos_files)
    neg_files = "./input/aclImdb/{}/neg/".format(folder_name)
    neg_texts = get_text_list_from_files(neg_files)
    df = pd.DataFrame(
        {
            "review": pos_texts + neg_texts,
            # pos -> 0, neg -> 1 (the reverse of keras.datasets.imdb, where 1 = positive)
            "sentiment": [0] * len(pos_texts) + [1] * len(neg_texts),
        }
    )
    df = df.sample(len(df)).reset_index(drop=True)  # shuffle the rows
    return df


train_df = get_data_from_text_files("train")
test_df = get_data_from_text_files("test")
# DataFrame.append was removed in pandas 2.0; pd.concat works in all versions
all_data = pd.concat([train_df, test_df])
```

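`custom_standardization`: custom text preprocessing (lowercasing, removing `<br />` tags and punctuation)

`vectorize_layer`: builds the vocabulary, maps each text to indices in that vocabulary, and truncates/pads all sequences to the same length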

```python
def custom_standardization(input_data):
    lowercase = tf.strings.lower(input_data)
    # Replace the HTML line breaks found in IMDB reviews with spaces
    stripped_html = tf.strings.regex_replace(lowercase, "<br />", " ")
    # Delete all ASCII punctuation characters
    return tf.strings.regex_replace(
        stripped_html, "[%s]" % re.escape(string.punctuation), ""
    )


vectorize_layer = TextVectorization(
    standardize=custom_standardization,
    max_tokens=max_features,
    output_mode="int",
    output_sequence_length=maxlen,
)
# Note: all_data also contains the test reviews, so test-set words enter the
# vocabulary; adapt on train_df.review only for a stricter evaluation
vectorize_layer.adapt(all_data.review.values.tolist())


def encode(texts):
    encoded_texts = vectorize_layer(texts)
    return encoded_texts.numpy()


x_train = encode(train_df.review.values)
y_train = train_df.sentiment.values
x_val = encode(test_df.review.values)
y_val = test_df.sentiment.values
```

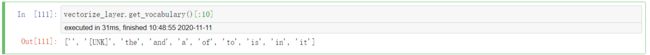
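To see what `custom_standardization` is meant to do to a raw review, here is a plain-Python mirror of its steps (lowercase, replace the `<br />` line-break tags found in IMDB reviews with a space, then delete ASCII punctuation), using Python's `re` in place of `tf.strings.regex_replace`. For these simple patterns the two should behave the same, but treat it as an illustration only; `standardize` and its `strip_html_first` flag are hypothetical. The flag shows why the order matters: removing punctuation first destroys the `<`, `/` and `>` of the tag and leaves stray `br` fragments glued to neighbouring words.

```python
import re
import string

# Same pattern as in custom_standardization: a character class of all ASCII punctuation
PUNCT = "[%s]" % re.escape(string.punctuation)


def standardize(text, strip_html_first=True):
    """Hypothetical plain-Python mirror of custom_standardization."""
    text = text.lower()
    if strip_html_first:
        text = text.replace("<br />", " ")  # tag -> space
        return re.sub(PUNCT, "", text)     # then drop punctuation
    # Reversed order, for comparison only: the tag is broken up before it can match
    return re.sub(PUNCT, "", text).replace("<br />", " ")


review = "I loved it!<br /><br />Great film."
print(standardize(review))                          # i loved it  great film
print(standardize(review, strip_html_first=False))  # i loved itbr br great film
print(standardize(review).split())                  # ['i', 'loved', 'it', 'great', 'film']
```

`TextVectorization` then splits the standardized string on whitespace (its default `split="whitespace"`), which the `.split()` call in the last line approximates.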

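As the output below shows, the first three vocabulary entries are the empty string "" used for padding, the out-of-vocabulary token [UNK], and the definite article "the".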

```python
vectorize_layer.get_vocabulary()[:10]
```

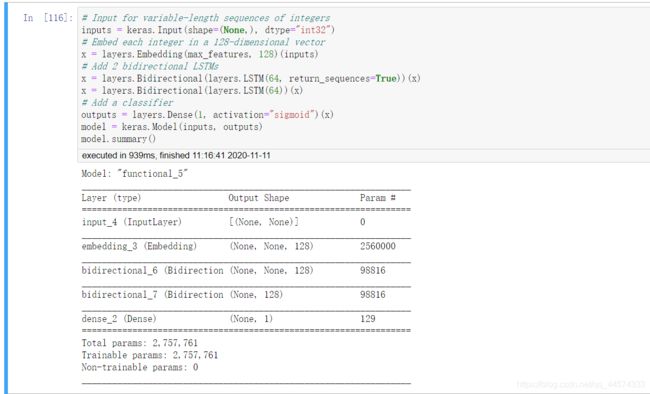
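The vocabulary layout described above can be sketched in a few lines of Python. With `output_mode="int"`, index 0 is reserved for padding (`""`), index 1 for out-of-vocabulary words (`[UNK]`), and the remaining `max_tokens - 2` slots go to the most frequent tokens. `build_vocab` and `encode_text` are hypothetical helpers that assume already-standardized input; how `TextVectorization` breaks frequency ties is not reproduced here (Python's `Counter.most_common` keeps first-seen order).

```python
from collections import Counter


def build_vocab(texts, max_tokens):
    """Hypothetical sketch: '' (padding) at 0, '[UNK]' at 1, then tokens by
    descending frequency; max_tokens counts the two reserved entries."""
    counts = Counter(tok for text in texts for tok in text.split())
    return ["", "[UNK]"] + [tok for tok, _ in counts.most_common(max_tokens - 2)]


def encode_text(text, vocab, seq_len):
    """Map tokens to indices (unknown -> 1), keep the first seq_len, pad with 0 at the end."""
    index = {tok: i for i, tok in enumerate(vocab)}
    ids = [index.get(tok, 1) for tok in text.split()][:seq_len]
    return ids + [0] * (seq_len - len(ids))


vocab = build_vocab(["the cat sat", "the dog sat on the mat"], max_tokens=4)
print(vocab)                                 # ['', '[UNK]', 'the', 'sat']
print(encode_text("the dog sat", vocab, 5))  # [2, 1, 3, 0, 0]
```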

3 Build the model

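Build a two-layer bidirectional LSTM model. The key parameter to understand here is return_sequences.

If you are not familiar with it, see section "4 Build the model" of [Learning Frameworks from Official Examples: Keras] Character-Level LSTM seq2seq. Once you understand the two parameters return_sequences and return_state, you essentially understand how to use LSTM in Keras.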
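A minimal NumPy sketch of what `return_sequences` changes, for a single sequence (no batch dimension) and random weights: a bidirectional LSTM layer either returns the hidden state at every time step, shape `(timesteps, 2 * units)`, or only the final one, shape `(2 * units,)`. That is why the first layer needs `return_sequences=True`: the second LSTM must receive a sequence. The helpers `lstm`, `bidirectional` and `init` are hypothetical; the gate order (i, f, c, o), the `concat` merge and the re-alignment of the backward outputs follow what Keras' `LSTM` and `Bidirectional` do, but this is not the Keras implementation.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm(x, W, U, b, return_sequences=False):
    """One LSTM layer over x of shape (timesteps, input_dim).
    W: (input_dim, 4*units), U: (units, 4*units), b: (4*units,), gates i, f, c, o."""
    units = U.shape[0]
    h, c = np.zeros(units), np.zeros(units)
    states = []
    for x_t in x:
        z = x_t @ W + h @ U + b
        i, f = sigmoid(z[:units]), sigmoid(z[units:2 * units])
        g, o = np.tanh(z[2 * units:3 * units]), sigmoid(z[3 * units:])
        c = f * c + i * g      # update the cell state
        h = o * np.tanh(c)     # new hidden state
        states.append(h)
    # return_sequences=True: one hidden state per step; otherwise only the last one
    return np.stack(states) if return_sequences else h


def init(input_dim, units):
    return (rng.normal(0, 0.1, (input_dim, 4 * units)),
            rng.normal(0, 0.1, (units, 4 * units)),
            np.zeros(4 * units))


def bidirectional(x, fwd, bwd, return_sequences=False):
    """Run one LSTM forwards and one backwards, then concatenate their outputs."""
    out_f = lstm(x, *fwd, return_sequences=return_sequences)
    out_b = lstm(x[::-1], *bwd, return_sequences=return_sequences)
    if return_sequences:
        out_b = out_b[::-1]  # re-align backward outputs with the original time steps
    return np.concatenate([out_f, out_b], axis=-1)


x = rng.normal(size=(200, 128))  # one embedded review: 200 steps x 128 dims
seq = bidirectional(x, init(128, 64), init(128, 64), return_sequences=True)
vec = bidirectional(seq, init(128, 64), init(128, 64))
print(seq.shape, vec.shape)  # (200, 128) (128,)
```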

```python
# Input for variable-length sequences of integers
inputs = keras.Input(shape=(None,), dtype="int32")
# Embed each integer in a 128-dimensional vector
x = layers.Embedding(max_features, 128)(inputs)
# Add 2 bidirectional LSTMs; the first returns the full sequence
# (return_sequences=True) so that the second one receives a sequence as input
x = layers.Bidirectional(layers.LSTM(64, return_sequences=True))(x)
x = layers.Bidirectional(layers.LSTM(64))(x)
# Add a classifier
outputs = layers.Dense(1, activation="sigmoid")(x)
model = keras.Model(inputs, outputs)
model.summary()
```

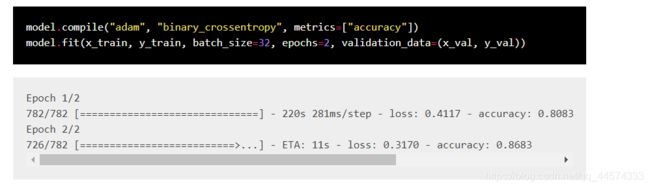
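The parameter counts reported by `model.summary()` can be checked by hand. An LSTM layer with `u` units on `d`-dimensional input has a kernel (`d × 4u`), a recurrent kernel (`u × 4u`) and a bias (`4u`), one slice per gate; `Bidirectional` doubles that, and the second layer sees the 128-dimensional concatenated output of the first. A short arithmetic sketch, where `lstm_params` is a hypothetical helper:

```python
def lstm_params(input_dim, units):
    # kernel (input_dim x 4u) + recurrent kernel (u x 4u) + bias (4u)
    return 4 * (input_dim * units + units * units + units)


embedding = 20000 * 128                 # max_features x embedding dimension
bilstm_1 = 2 * lstm_params(128, 64)     # forward + backward LSTM on the embeddings
bilstm_2 = 2 * lstm_params(2 * 64, 64)  # input is the concatenated 128-dim sequence
dense = 2 * 64 * 1 + 1                  # weights + bias of Dense(1)
total = embedding + bilstm_1 + bilstm_2 + dense
print(embedding, bilstm_1, bilstm_2, dense, total)
# 2560000 98816 98816 129 2757761
```

Almost 93% of the weights sit in the embedding table, which is why `max_features` dominates the model size.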

4 Train and evaluate the model

```python
model.compile("adam", "binary_crossentropy", metrics=["accuracy"])
model.fit(x_train, y_train, batch_size=32, epochs=2, validation_data=(x_val, y_val))
```

In my runs, the custom preprocessing gave better validation results than the official example's preprocessing with the same model settings.
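`model.compile("adam", "binary_crossentropy", metrics=["accuracy"])` pairs the single sigmoid output with binary cross-entropy, and for this loss Keras resolves `"accuracy"` to binary accuracy at a 0.5 threshold. A NumPy sketch of both quantities on made-up predictions; the function names are hypothetical, and the `1e-7` clipping mirrors Keras' default epsilon.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped away from 0 and 1."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose sigmoid output lands on the correct side of the threshold."""
    return float(np.mean((np.asarray(y_pred) > threshold) == np.asarray(y_true, dtype=bool)))


y_true = [1, 0, 1, 0]
y_pred = [0.9, 0.2, 0.4, 0.1]  # made-up sigmoid outputs
print(round(binary_crossentropy(y_true, y_pred), 4))  # 0.3375
print(binary_accuracy(y_true, y_pred))                # 0.75
```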

5 Summary

The complete code:

```python
import os
import re
import string

import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
# In newer TF versions (2.6+) this layer is also available as tf.keras.layers.TextVectorization
from tensorflow.keras.layers.experimental.preprocessing import TextVectorization
from tqdm import tqdm

'''Set hyperparameters'''
max_features = 20000  # Only consider the top 20k words
maxlen = 200  # Pad or truncate each movie review to 200 tokens

'''Load the data'''
def get_text_list_from_files(files):
    # Each IMDB review file holds one review, so read each file as one text
    text_list = []
    files_name = os.listdir(files)
    for name in tqdm(files_name):
        with open(os.path.join(files, name), encoding="utf-8") as f:
            text_list.append(f.read())
    return text_list


def get_data_from_text_files(folder_name):
    pos_files = "./input/aclImdb/{}/pos/".format(folder_name)
    pos_texts = get_text_list_from_files(pos_files)
    neg_files = "./input/aclImdb/{}/neg/".format(folder_name)
    neg_texts = get_text_list_from_files(neg_files)
    df = pd.DataFrame(
        {
            "review": pos_texts + neg_texts,
            # pos -> 0, neg -> 1 (the reverse of keras.datasets.imdb, where 1 = positive)
            "sentiment": [0] * len(pos_texts) + [1] * len(neg_texts),
        }
    )
    df = df.sample(len(df)).reset_index(drop=True)  # shuffle the rows
    return df


train_df = get_data_from_text_files("train")
test_df = get_data_from_text_files("test")
all_data = pd.concat([train_df, test_df])  # DataFrame.append was removed in pandas 2.0

'''Preprocess the data and vectorize the text'''
def custom_standardization(input_data):
    lowercase = tf.strings.lower(input_data)
    stripped_html = tf.strings.regex_replace(lowercase, "<br />", " ")
    return tf.strings.regex_replace(
        stripped_html, "[%s]" % re.escape(string.punctuation), ""
    )


vectorize_layer = TextVectorization(
    standardize=custom_standardization,
    max_tokens=max_features,
    output_mode="int",
    output_sequence_length=maxlen,
)
vectorize_layer.adapt(all_data.review.values.tolist())  # vocabulary from train + test reviews


def encode(texts):
    encoded_texts = vectorize_layer(texts)
    return encoded_texts.numpy()


x_train = encode(train_df.review.values)
y_train = train_df.sentiment.values
x_val = encode(test_df.review.values)
y_val = test_df.sentiment.values

'''Define the LSTM model'''
# Input for variable-length sequences of integers
inputs = keras.Input(shape=(None,), dtype="int32")
# Embed each integer in a 128-dimensional vector
x = layers.Embedding(max_features, 128)(inputs)
# Add 2 bidirectional LSTMs
x = layers.Bidirectional(layers.LSTM(64, return_sequences=True))(x)
x = layers.Bidirectional(layers.LSTM(64))(x)
# Add a classifier
outputs = layers.Dense(1, activation="sigmoid")(x)
model = keras.Model(inputs, outputs)
model.summary()

'''Train and evaluate the model'''
model.compile("adam", "binary_crossentropy", metrics=["accuracy"])
model.fit(x_train, y_train, batch_size=32, epochs=2, validation_data=(x_val, y_val))
```