Flink Environment Setup and Getting-Started Demo

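Table of Contents

- I. Environment Setup
  - 1. Download
  - 2. Run
  - 3. Access
  - 4. Submit a Job
- II. Getting-Started Demo
  - 1. pom.xml Dependencies
  - 2. My Demo
  - 3. Integrating Kafka and MySQL
  - 4. Run Results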

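I. Environment Setup

1. Download

Download page:

https://flink.apache.org/downloads.html#apache-flink-1124

Download link for flink-1.12.4:

https://apache.website-solution.net/flink/flink-1.12.4/flink-1.12.4-bin-scala_2.11.tgz

I use version 1.12.4. This version must match the Flink dependency versions in the Java project's pom file. The Scala suffix (`_2.11` here) must match as well.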

2. Run

Extract the archive and start the cluster:

```shell
$ tar -zxvf flink-1.12.4-bin-scala_2.11.tgz
$ cd flink-1.12.4
$ ./bin/start-cluster.sh
Starting cluster.
Starting standalonesession daemon on host MacdeMacBook-Pro-2.local.
Starting taskexecutor daemon on host MacdeMacBook-Pro-2.local.
```

3. Access

Open http://localhost:8081/ in a browser to reach the Flink Web UI, the cluster's management dashboard.

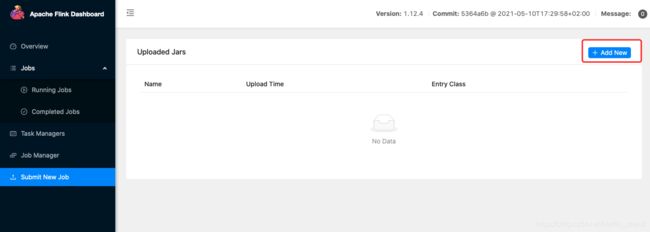
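The Web UI is served by Flink's REST API on the same port, so you can also check the cluster from code. The cluster version has to match the Flink dependencies in the pom. The sketch below queries the `/overview` endpoint, which reports the cluster's `flink-version`, and compares that value with `1.12.4`. It is a minimal sketch with these assumptions:

- Java 11+ (`java.net.http`) is available.
- A cluster is running on `localhost:8081`.
- The class name `FlinkVersionCheck` is hypothetical.
- The regular expression is a shortcut in place of a proper JSON parser.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper: reads the cluster version from Flink's REST API (/overview)
 * and compares it with the Flink version used in pom.xml.
 * Assumes Java 11+ and a cluster on localhost:8081.
 */
final class FlinkVersionCheck {

    // Shortcut instead of a JSON parser: matches "flink-version":"<value>"
    private static final Pattern VERSION =
            Pattern.compile("\"flink-version\"\\s*:\\s*\"([^\"]+)\"");

    /** Returns the "flink-version" value from an /overview JSON body, or null if absent. */
    static String extractVersion(String overviewJson) {
        Matcher m = VERSION.matcher(overviewJson);
        return m.find() ? m.group(1) : null;
    }

    /** True if the cluster version equals the version of the flink-* dependencies. */
    static boolean matchesPom(String clusterVersion, String pomVersion) {
        return clusterVersion != null && clusterVersion.equals(pomVersion);
    }

    public static void main(String[] args) throws Exception {
        String pomVersion = "1.12.4"; // flink-* dependency version used in this article
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest
                .newBuilder(URI.create("http://localhost:8081/overview"))
                .GET()
                .build();
        String body = client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        String clusterVersion = extractVersion(body);
        System.out.println("cluster=" + clusterVersion + ", pom=" + pomVersion
                + ", match=" + matchesPom(clusterVersion, pomVersion));
    }
}
```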

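4. Submit a Job

Package the demo project that uses Flink as a jar and submit it to the Flink cluster to run it as a job. You can upload it on the Web UI's "Submit New Job" page or run `./bin/flink run`.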

II. Getting-Started Demo

1. pom.xml Dependencies

```xml
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-java</artifactId>
    <version>1.12.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-java_2.11</artifactId>
    <version>1.12.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_2.11</artifactId>
    <version>1.12.4</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>statefun-sdk</artifactId>
    <version>2.2.2</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>statefun-flink-harness</artifactId>
    <version>3.0.0</version>
</dependency>
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
</dependency>
```

2. My Demo

```java
package com.rules.engine.task;

import com.alibaba.fastjson.JSONObject;
import com.rules.engine.beans.Product;
import com.rules.engine.entity.UserInfoCount;
import com.rules.engine.rule.*;
import com.rules.engine.source.MyRuleSource;
import com.rules.engine.source.RulesSource;
import com.rules.engine.utils.KafkaProducer;
import com.rules.engine.utils.MySqlSink2;
import com.rules.engine.utils.MySqlSink3;
import com.rules.engine.vo.UserInfoVo;
import lombok.extern.slf4j.Slf4j;
import org.apache.flink.api.common.functions.AggregateFunction;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.CoFlatMapFunction;
import org.apache.flink.streaming.api.functions.source.SourceFunction;
import org.apache.flink.streaming.api.windowing.assigners.SlidingProcessingTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;
import org.apache.flink.util.Collector;

import java.util.Properties;

/**
 * @Author dingws
 * @PackageName rule_engine
 * @Package com.rules.engine.task
 * @Date 2021/7/7 7:25 PM
 * @Version 1.0
 */
@Slf4j
public class KafkaMessageDeal3 {

    public static void main(String[] args) throws Exception {
        // 1. Set up the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.enableCheckpointing(6000L);
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        env.setParallelism(1);

        // 2. Configure the Kafka source
        Properties props = new Properties();
        props.put("bootstrap.servers", "172.21.88.77:9092");
        props.put("group.id", KafkaProducer.TOPIC_GROUP1);
        FlinkKafkaConsumer<String> consumer =
                new FlinkKafkaConsumer<>(KafkaProducer.TOPIC_TEST, new SimpleStringSchema(), props);

        // Rule stream: custom source that emits Product rules
        DataStream<Product> rulesStream = env.addSource(new MyRuleSource());

        // Data stream read from Kafka
        DataStreamSource<String> dataStreamSource = env.addSource(consumer).setParallelism(1);

        // Parse each Kafka message (JSON) into a UserInfoVo object
        DataStream<UserInfoVo> dataStream =
                dataStreamSource.map(value -> JSONObject.parseObject(value, UserInfoVo.class));

        // 3. Filter user records with the latest rule, then write them to MySQL
        dataStream
                .connect(rulesStream)
                .flatMap(new CoFlatMapFunction<UserInfoVo, Product, UserInfoVo>() {
                    // Latest rule; null until the first rule arrives. Plain field, not Flink state:
                    // it is not checkpointed, and with parallelism > 1 the rule stream would need broadcast()
                    private Product localProduct;

                    @Override
                    public void flatMap1(UserInfoVo userInfoVo, Collector<UserInfoVo> collector) throws Exception {
                        System.out.println("---------userInfoVo = " + userInfoVo);
                        if (localProduct == null) {
                            // No rule received yet: skip the record instead of throwing a NullPointerException
                            return;
                        }
                        // The id ends with a numeric suffix after the last '_'
                        String[] id = userInfoVo.getId().split("_");
                        int time = Integer.parseInt(id[id.length - 1]);
                        if (localProduct.getDiscount() == 100) {
                            // Rule A: keep records with an even suffix
                            if (time % 2 == 0) {
                                collector.collect(userInfoVo);
                            }
                        } else if (time > 5) {
                            // Rule B: keep records whose suffix is greater than 5
                            collector.collect(userInfoVo);
                        }
                    }

                    @Override
                    public void flatMap2(Product product, Collector<UserInfoVo> collector) throws Exception {
                        // Replace the current rule with the newest one
                        localProduct = product;
                        System.out.println("---------product = " + product);
                    }
                })
                .addSink(new MySqlSink2()).name("save mysql with rule");

        // 4. Count records per id in 60 s windows and write the counts to MySQL.
        //    Size == slide, so this sliding window behaves like a 60 s tumbling window.
        dataStream
                .keyBy("id")
                .window(SlidingProcessingTimeWindows.of(Time.seconds(60), Time.seconds(60)))
                .aggregate(new AggregateFunction<UserInfoVo, UserInfoCount, UserInfoCount>() {
                    @Override
                    public UserInfoCount createAccumulator() {
                        UserInfoCount count = new UserInfoCount();
                        count.setId(1);
                        count.setUserId(null);
                        count.setCount(0);
                        return count;
                    }

                    @Override
                    public UserInfoCount add(UserInfoVo userInfoVo, UserInfoCount userInfoCount) {
                        if (userInfoCount.getUserId() == null) {
                            userInfoCount.setUserId(userInfoVo.getId());
                        }
                        if (userInfoCount.getUserId().equals(userInfoVo.getId())) {
                            userInfoCount.setCount(userInfoCount.getCount() + 1);
                        }
                        return userInfoCount;
                    }

                    @Override
                    public UserInfoCount getResult(UserInfoCount userInfoCount) {
                        return userInfoCount;
                    }

                    @Override
                    public UserInfoCount merge(UserInfoCount a, UserInfoCount b) {
                        // Used by merging windows (e.g. session windows): sum the counts instead of returning null
                        a.setCount(a.getCount() + b.getCount());
                        if (a.getUserId() == null) {
                            a.setUserId(b.getUserId());
                        }
                        return a;
                    }
                })
                .keyBy("userId")
                .addSink(new MySqlSink3());

        env.execute("KafkaMessageDeal3");
    }

    // Currently unused: builds a rule stream from RulesSource
    private static DataStream<MyRule> getRulesUpdateStream(StreamExecutionEnvironment env, ParameterTool parameter) {
        String name = "Rule Source";
        SourceFunction<String> rulesSource = RulesSource.createRulesSource(parameter);
        DataStream<String> rulesStrings = env.addSource(rulesSource).name(name).setParallelism(1);
        return RulesSource.stringsStreamToRules(rulesStrings);
    }
}
```
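The demo sets `SlidingProcessingTimeWindows.of(Time.seconds(60), Time.seconds(60))` with the same size and slide. Every record therefore lands in exactly one 60-second window, which is the same behavior as `TumblingProcessingTimeWindows.of(Time.seconds(60))`.

The sketch below re-implements, in plain Java and for illustration only, the window-assignment arithmetic of Flink 1.12's sliding window assigner (`TimeWindow.getWindowStartWithOffset`). With it you can see how many windows a timestamp falls into. The class `SlidingWindowMath` is hypothetical and not part of Flink, and the offset is fixed at 0.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical plain-Java re-implementation (illustration only) of how a sliding
 * processing-time window assigner maps a timestamp to [start, end) windows.
 */
final class SlidingWindowMath {

    /** Start of the window of length windowSize that contains timestamp. */
    static long windowStart(long timestamp, long offset, long windowSize) {
        return timestamp - (timestamp - offset + windowSize) % windowSize;
    }

    /** All [start, end) windows of the given size and slide that contain timestamp (offset 0). */
    static List<long[]> assign(long timestamp, long size, long slide) {
        List<long[]> windows = new ArrayList<>();
        long lastStart = windowStart(timestamp, 0L, slide);
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            windows.add(new long[] {start, start + size});
        }
        return windows;
    }

    public static void main(String[] args) {
        long ts = 125_000L; // a processing-time timestamp in milliseconds
        // The demo's setting: size = slide = 60 s, so there is exactly one window per record
        for (long[] w : assign(ts, 60_000L, 60_000L)) {
            System.out.println("size=slide=60s: [" + w[0] + ", " + w[1] + ")");
        }
        // For comparison: size 60 s, slide 20 s, so each record lands in 3 overlapping windows
        for (long[] w : assign(ts, 60_000L, 20_000L)) {
            System.out.println("size=60s, slide=20s: [" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

If overlapping windows are not needed, the tumbling assigner states the intent more directly.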

UserInfoVo implementation:

```java
package com.rules.engine.vo;

import lombok.Data;
import lombok.NoArgsConstructor;

import java.io.Serializable;

/**
 * @Author dingws
 * @PackageName rule_engine
 * @Package com.rules.engine.vo
 * @Date 2021/7/6 6:23 PM
 * @Version 1.0
 */
@Data
@NoArgsConstructor
public class UserInfoVo implements Serializable {

    private String id;
    private String name;
    private String deviceId;
    private Long beginTime;
    private Long endTime;

    // Copy constructor
    public UserInfoVo(UserInfoVo userInfoVo) {
        this.id = userInfoVo.id;
        this.name = userInfoVo.name;
        this.deviceId = userInfoVo.deviceId;
        this.beginTime = userInfoVo.beginTime;
        this.endTime = userInfoVo.endTime;
    }
}
```
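The rule filter in `KafkaMessageDeal3.flatMap1` relies on `UserInfoVo.id` ending in a numeric suffix after the last `_`. An id like `user_7` is an assumed example, since the article only shows the parsing code. Moving that rule into a plain static method lets you unit-test it without a Flink cluster.

This is a sketch under these assumptions:

- `RuleFilter`, `idSuffix` and `shouldEmit` are hypothetical names.
- The discount is modeled as an `Integer`.
- A `null` discount means no rule has arrived yet, and the record is dropped.

```java
/**
 * Hypothetical helper isolating the filtering rule used in KafkaMessageDeal3.flatMap1.
 */
final class RuleFilter {

    /** Parses the numeric suffix after the last '_' of an id such as "user_7". */
    static int idSuffix(String id) {
        String[] parts = id.split("_");
        return Integer.parseInt(parts[parts.length - 1]);
    }

    /**
     * Returns true if the record should be kept.
     * Assumption: discount == null means no rule has been received yet, so the record is dropped.
     */
    static boolean shouldEmit(String id, Integer discount) {
        if (discount == null) {
            return false;
        }
        int time = idSuffix(id);
        if (discount == 100) {
            return time % 2 == 0; // rule A: even suffix
        }
        return time > 5;          // rule B: suffix greater than 5
    }

    public static void main(String[] args) {
        System.out.println(shouldEmit("user_4", 100)); // rule A, even suffix
        System.out.println(shouldEmit("user_7", 80));  // rule B, suffix > 5
    }
}
```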

MySqlSink2 implementation:

```java
package com.rules.engine.utils;

import com.rules.engine.vo.UserInfoVo;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.Timestamp;

/**
 * @Author dingws
 * @PackageName rule_engine
 * @Package com.rules.engine.utils
 * @Date 2021/7/9 2:10 PM
 * @Version 1.0
 */
public class MySqlSink2 extends RichSinkFunction<UserInfoVo> {

    private PreparedStatement ps;
    private Connection connection;

    @Override
    public void open(Configuration parameters) throws Exception {
        System.out.println("------MySqlSink2 open");
        super.open(parameters);
        // Get a database connection and prepare the insert statement
        // (DbUtils is the project's own connection helper, not shown in this article)
        connection = DbUtils.getConnection();
        String sql = "insert into user_info(id, name, deviceId, beginTime, endTime) values (?, ?, ?, ?, ?)";
        ps = connection.prepareStatement(sql);
    }

    @Override
    public void close() throws Exception {
        System.out.println("------MySqlSink2 close");
        super.close();
        // Release resources: close the statement before its connection
        if (ps != null) {
            ps.close();
        }
        if (connection != null) {
            connection.close();
        }
    }

    @Override
    public void invoke(UserInfoVo userInfo, Context context) throws Exception {
        System.out.println("------MySqlSink2 invoke");
        // Parameter order must follow the column list: id, name, deviceId, beginTime, endTime
        ps.setString(1, userInfo.getId());
        ps.setString(2, userInfo.getName());
        ps.setString(3, userInfo.getDeviceId());
        ps.setTimestamp(4, new Timestamp(userInfo.getBeginTime()));
        ps.setTimestamp(5, new Timestamp(userInfo.getEndTime()));
        ps.addBatch();
        // Execute the batch immediately (one row per invoke() call)
        int[] count = ps.executeBatch();
        System.out.println("------MySqlSink2 rows written to MySQL: " + count.length);
    }
}
```

MySqlSink3 implementation:

```java
package com.rules.engine.utils;

import com.rules.engine.entity.UserInfoCount;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;
import java.sql.PreparedStatement;

/**
 * @Author dingws
 * @PackageName rule_engine
 * @Package com.rules.engine.utils
 * @Date 2021/7/9 2:10 PM
 * @Version 1.0
 */
public class MySqlSink3 extends RichSinkFunction<UserInfoCount> {

    private PreparedStatement ps;
    private Connection connection;

    @Override
    public void open(Configuration parameters) throws Exception {
        System.out.println("------MySqlSink3 open");
        super.open(parameters);
        // Get a database connection and prepare the insert statement (DbUtils: project helper, not shown)
        connection = DbUtils.getConnection();
        String sql = "insert into user_info_count(userId, count) values (?, ?)";
        ps = connection.prepareStatement(sql);
    }

    @Override
    public void close() throws Exception {
        System.out.println("------MySqlSink3 close");
        super.close();
        // Release resources: close the statement before its connection
        if (ps != null) {
            ps.close();
        }
        if (connection != null) {
            connection.close();
        }
    }

    @Override
    public void invoke(UserInfoCount userInfoCount, Context context) throws Exception {
        System.out.println("------MySqlSink3 invoke");
        ps.setString(1, userInfoCount.getUserId());
        ps.setInt(2, userInfoCount.getCount());
        ps.addBatch();
        // Execute the batch immediately (one row per invoke() call)
        int[] count = ps.executeBatch();
        System.out.println("------MySqlSink3 rows written to MySQL: " + count.length);
    }
}
```
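Both sinks call `executeBatch()` right after a single `addBatch()`, so every record is still one round trip to MySQL. A common alternative is to buffer rows and flush every N records, plus once more on `close()`. Flink 1.12 also ships `flink-connector-jdbc` (`JdbcSink`) with built-in batching options.

The sketch below shows only the buffering logic in plain Java. `BatchBuffer` is a hypothetical class, and its `flusher` callback stands in for the `addBatch()`/`executeBatch()` calls a real sink would make. Rows held in such a buffer are not covered by Flink checkpoints unless the sink also flushes when a checkpoint is taken, which this sketch does not do.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical size-based buffer: collects rows and passes them to a flush callback
 * once batchSize rows have accumulated, with a final flush on close().
 */
final class BatchBuffer<T> implements AutoCloseable {
    private final int batchSize;
    private final Consumer<List<T>> flusher;
    private final List<T> buffer = new ArrayList<>();

    BatchBuffer(int batchSize, Consumer<List<T>> flusher) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.flusher = flusher;
    }

    /** Called once per record, like a sink's invoke(). */
    void add(T row) {
        buffer.add(row);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Hands a copy of the buffered rows to the flusher and clears the buffer. */
    void flush() {
        if (!buffer.isEmpty()) {
            flusher.accept(new ArrayList<>(buffer));
            buffer.clear();
        }
    }

    /** Flushes the remaining rows, like a sink's close(). */
    @Override
    public void close() {
        flush();
    }

    public static void main(String[] args) {
        List<Integer> batchSizes = new ArrayList<>();
        try (BatchBuffer<String> buf = new BatchBuffer<>(3, batch -> batchSizes.add(batch.size()))) {
            for (int i = 0; i < 7; i++) {
                buf.add("row-" + i);
            }
        }
        System.out.println(batchSizes); // two full batches of 3, then 1 on close
    }
}
```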

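3. Integrating Kafka and MySQL

The demo above uses Kafka as the data source and MySQL as the sink. If you don't need them, you can comment those parts out. The configuration for integrating Kafka and MySQL is listed below:

- pom dependencies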

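```xml
<!-- Flink Kafka connector: provides the FlinkKafkaConsumer used by the demo -->
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-kafka_2.11</artifactId>
    <version>1.12.4</version>
</dependency>
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-jdbc</artifactId>
    <version>2.3.3.RELEASE</version>
</dependency>
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>8.0.16</version>
</dependency>
```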

- Configuration file (Spring Boot YAML)

```yaml
spring:
  datasource:
    url: jdbc:mysql://172.1.16.18:3306/geniubot?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull&allowMultiQueries=true&useSSL=false&serverTimezone=GMT%2B8
    password: my_password
    username: my_username
    hikari:
      transaction-isolation: TRANSACTION_REPEATABLE_READ
  transaction:
    rollback-on-commit-failure: true
  kafka:
    bootstrap-servers: 172.21.88.77:9092
    producer:
      # Number of times a message is resent after a send error
      retries: 0
      # When several messages go to the same partition, the producer groups them into one batch.
      # This sets how much memory (in bytes) a single batch may use.
      batch-size: 16384
      # Size of the producer's memory buffer (bytes)
      buffer-memory: 33554432
      # Key serializer
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      # Value serializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      # value-serializer: com.rules.engine.utils.JSONSerializer
      # acks=0: the producer does not wait for any response from the broker before treating the write as successful
      # acks=1: the producer receives a success response as soon as the partition leader has the message
      # acks=all: the producer receives a success response only after all in-sync replicas have the message
      acks: 1
    consumer:
      # Auto-commit interval. In Spring Boot 2.x this is a Duration and must use a format such as 1S, 1M, 2H, 5D
      auto-commit-interval: 1S
      # What the consumer does when a partition has no committed offset or the offset is invalid:
      # latest (default): start from the newest records (those produced after the consumer started)
      # earliest: start from the beginning of the partition
      auto-offset-reset: earliest
      # Whether offsets are committed automatically (default true). To avoid duplicate or lost data,
      # set it to false and commit offsets manually
      enable-auto-commit: false
      # Key deserializer
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      # Value deserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      # value-deserializer: com.rules.engine.utils.JSONDeserializer
    listener:
      # Number of threads running in the listener container
      concurrency: 5
      # The listener acks; each call to acknowledge() commits immediately
      ack-mode: manual_immediate
      missing-topics-fatal: false
```
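The `spring.kafka.*` settings above configure Spring Kafka components in the project. The Flink job does not read them. `FlinkKafkaConsumer` only sees the `Properties` object passed to its constructor, and it behaves differently from a Spring consumer in three ways:

- With checkpointing enabled, it commits offsets back to Kafka when checkpoints complete, and the periodic auto-commit settings are ignored.
- Its default start position is the group's committed offsets. It falls back to `auto.offset.reset` when a partition has no committed offset.
- Deserialization is done by its `DeserializationSchema` (`SimpleStringSchema` in the demo), so the YAML's key/value deserializer settings have no Flink counterpart.

If the job should use the same consumer settings, copy them into those properties under Kafka's native keys. A minimal sketch follows. The helper name `FlinkKafkaProps` and the group id `rule-engine-group` are hypothetical placeholders, and the other values come from the YAML above.

```java
import java.util.Properties;

/**
 * Hypothetical helper: builds the Properties handed to FlinkKafkaConsumer using Kafka's
 * native consumer keys, mirroring values from the spring.kafka.* YAML.
 */
final class FlinkKafkaProps {

    static Properties consumerProps(String bootstrapServers, String groupId, String autoOffsetReset) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers); // spring.kafka.bootstrap-servers
        props.setProperty("group.id", groupId);                   // no group id is set in the YAML
        // Only used when the group has no committed offset for a partition
        props.setProperty("auto.offset.reset", autoOffsetReset);  // spring.kafka.consumer.auto-offset-reset
        return props;
    }

    public static void main(String[] args) {
        // "rule-engine-group" is a placeholder; the demo uses KafkaProducer.TOPIC_GROUP1
        Properties props = consumerProps("172.21.88.77:9092", "rule-engine-group", "earliest");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Pass the returned `Properties` as the third argument of `new FlinkKafkaConsumer<>(topic, new SimpleStringSchema(), props)`, as in the demo.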