Benchmarking YOLOv3 on the COCO dataset: computing mAP and plotting PR curves

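Installing Darknet

For detailed steps and explanations, see the official YOLOv3 site.

For a detailed walkthrough of compiling Darknet, see this expert's blog post.

That post covers everything thoroughly, so I won't repeat it here; I'm just relaying it...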

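Downloading and setting up the COCO dataset

Following the official YOLOv3 site, fetch the COCO dataset with the provided script. The COCO 2014 dataset is used here.

Tip: if the download is very slow, open get_coco_dataset.sh, download the archives from the website yourself, and then run the remaining commands one at a time.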

#!/bin/bash

# Clone COCO API

git clone https://github.com/pdollar/coco

cd coco

mkdir images

cd images

# Download Images

wget -c https://pjreddie.com/media/files/train2014.zip

wget -c https://pjreddie.com/media/files/val2014.zip

# Unzip

unzip -q train2014.zip

unzip -q val2014.zip

cd ..

# Download COCO Metadata

wget -c https://pjreddie.com/media/files/instances_train-val2014.zip

wget -c https://pjreddie.com/media/files/coco/5k.part

wget -c https://pjreddie.com/media/files/coco/trainvalno5k.part

wget -c https://pjreddie.com/media/files/coco/labels.tgz

tar xzf labels.tgz

unzip -q instances_train-val2014.zip

# Set Up Image Lists

paste <(awk "{print \"$PWD\"}" <5k.part) 5k.part | tr -d '\t' > 5k.txt

paste <(awk "{print \"$PWD\"}" <trainvalno5k.part) trainvalno5k.part | tr -d '\t' > trainvalno5k.txt
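The last two commands of the script simply prepend the current directory to every relative path in the .part files. If bash process substitution is not available on your machine, the same step can be sketched in Python. The function name build_image_list is hypothetical, and the sketch assumes each line of the .part file is a path beginning with "/" (unlike the shell version, it also skips empty lines).

```python
import os
import tempfile


def build_image_list(part_path, out_path, root=None):
    """Prefix every non-empty line of part_path with root and write it to out_path.

    Hypothetical helper; root defaults to the current working directory,
    which mirrors $PWD in the shell script.
    """
    root = os.getcwd() if root is None else root
    count = 0
    with open(part_path) as src, open(out_path, "w") as dst:
        for line in src:
            rel = line.strip()
            if not rel:
                continue
            dst.write(root + rel + "\n")
            count += 1
    return count


# Demo on a throwaway file rather than the real 5k.part
with tempfile.TemporaryDirectory() as tmp:
    part = os.path.join(tmp, "demo.part")
    out = os.path.join(tmp, "demo.txt")
    with open(part, "w") as f:
        f.write("/images/val2014/a.jpg\n/images/val2014/b.jpg\n")
    n = build_image_list(part, out, root="/data/coco")
    with open(out) as f:
        demo_lines = f.read().splitlines()
```

For the real files you would call build_image_list("5k.part", "5k.txt") and build_image_list("trainvalno5k.part", "trainvalno5k.txt") from inside the coco directory.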

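Running detection on the COCO validation set

Run the following command from the darknet directory (first make sure the valid entry in cfg/coco.data points to your 5k.txt):

./darknet -i 0 detector valid cfg/coco.data cfg/yolov3.cfg yolov3.weights # '-i 0' selects GPU 0

When the command finishes, it writes results/coco_results.json, which holds the detection results.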
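Before evaluating, it can help to sanity-check the results file. The sketch below assumes the file is a JSON list whose entries carry image_id, category_id, bbox and score, which is the bbox result format that pycocotools' loadRes expects. The helper summarize_detections is hypothetical, and the sample data is synthetic.

```python
import json
from collections import Counter


def summarize_detections(detections):
    """Return basic counts for a list of COCO-style detection dicts."""
    images = {d["image_id"] for d in detections}
    per_category = Counter(d["category_id"] for d in detections)
    scores = [d["score"] for d in detections]
    return {
        "num_detections": len(detections),
        "num_images": len(images),
        "per_category": dict(per_category),
        "min_score": min(scores) if scores else None,
        "max_score": max(scores) if scores else None,
    }


# Synthetic sample in the assumed format (bbox is [x, y, width, height])
sample = json.loads("""
[
  {"image_id": 42, "category_id": 1,  "bbox": [10.0, 20.0, 50.0, 80.0], "score": 0.9},
  {"image_id": 42, "category_id": 18, "bbox": [5.0, 5.0, 30.0, 30.0],   "score": 0.4},
  {"image_id": 73, "category_id": 1,  "bbox": [0.0, 0.0, 15.0, 40.0],   "score": 0.7}
]
""")
summary = summarize_detections(sample)
```

On the real output you would load results/coco_results.json with json.load and pass the resulting list to the same function; for the 5k split, num_images should not exceed 5000.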

Computing mAP

This method comes from: https://blog.csdn.net/xidaoliang/article/details/88397280

1. Install pycocotools

pip install pycocotools

2. Write a Python script to compute mAP; change the two file paths to match your own setup

import matplotlib.pyplot as plt
from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval
import numpy as np
import skimage.io as io
import pylab, json

pylab.rcParams['figure.figsize'] = (10.0, 8.0)


def get_img_id(file_name):
    # Collect the image_id of every detection, then drop duplicates
    # while keeping first-seen order
    ls = []
    with open(file_name, 'r') as f:
        annos = json.load(f)
    for anno in annos:
        ls.append(anno['image_id'])
    myset = {}.fromkeys(ls).keys()
    return myset


if __name__ == '__main__':
    annType = ['segm', 'bbox', 'keypoints']  # set iouType to 'segm', 'bbox' or 'keypoints'
    annType = annType[1]  # specify type here
    cocoGt_file = 'G:/instances_val2014.json'
    cocoGt = COCO(cocoGt_file)  # load the ground-truth annotations as a COCO object
    cocoDt_file = 'G:/coco_results.json'
    imgIds = get_img_id(cocoDt_file)
    print(len(imgIds))
    cocoDt = cocoGt.loadRes(cocoDt_file)  # load the detection results as a COCO object
    imgIds = sorted(imgIds)  # sort the image ids
    imgIds = imgIds[0:5000]  # keep at most 5000 images (the size of the 5k split)
    cocoEval = COCOeval(cocoGt, cocoDt, annType)
    cocoEval.params.imgIds = imgIds  # restrict evaluation to these images
    # cocoEval.params.catIds = [1]  # evaluate only category id 1 ('person')
    cocoEval.evaluate()  # per-image, per-category evaluation
    cocoEval.accumulate()  # accumulate the per-image results
    cocoEval.summarize()  # print the summary metrics

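3. Run the .py script

python compute_coco_mAP.py

4. Errors you may hit while running

Upgrade scikit-image

pip install --upgrade scikit-image

Change the NumPy version to 1.16

pip install numpy==1.16

mAP results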

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.334

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.585

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.345

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.194

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.365

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.439

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.291

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.446

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.470

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.304

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.502

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.593

For an explanation of these results, see: https://blog.csdn.net/u014734886/article/details/78831884

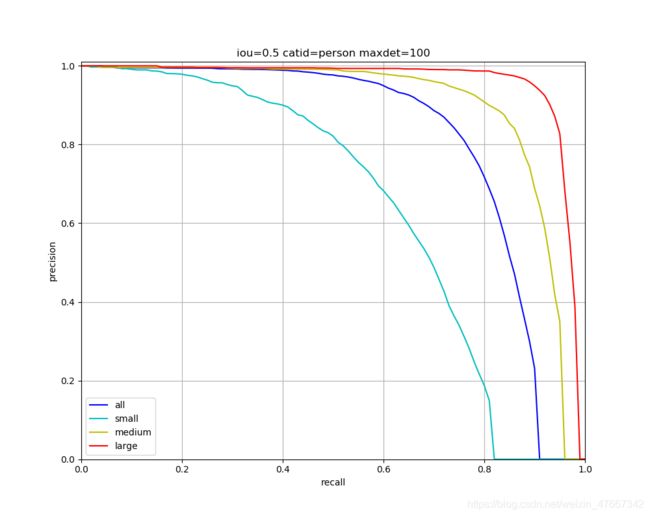
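After summarize() runs, pycocotools also keeps the same twelve numbers in cocoEval.stats, in the order they are printed. A small sketch for attaching readable labels to them follows; the label strings and the helper label_stats are my own shorthand, not pycocotools identifiers, and the values are the ones printed above.

```python
# Shorthand labels in the order summarize() prints the bbox metrics
STAT_NAMES = [
    "AP", "AP50", "AP75", "AP_small", "AP_medium", "AP_large",
    "AR_1", "AR_10", "AR_100", "AR_small", "AR_medium", "AR_large",
]


def label_stats(stats):
    """Map a 12-element stats sequence to a {label: value} dict."""
    values = [float(v) for v in stats]
    if len(values) != len(STAT_NAMES):
        raise ValueError("expected %d values, got %d" % (len(STAT_NAMES), len(values)))
    return dict(zip(STAT_NAMES, values))


# The twelve values from the summary shown above
printed = [0.334, 0.585, 0.345, 0.194, 0.365, 0.439,
           0.291, 0.446, 0.470, 0.304, 0.502, 0.593]
labeled = label_stats(printed)
```

In the evaluation script, label_stats(cocoEval.stats) after the summarize() call should give the same mapping, which is handy when logging results from several runs.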

Plotting PR curves

1. Modify the script above; the result is shown below (it really just adds one block of code at the end)

import matplotlib.pyplot as plt
from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval
import numpy as np
import skimage.io as io
import pylab, json

pylab.rcParams['figure.figsize'] = (10.0, 8.0)


def get_img_id(file_name):
    # Collect the image_id of every detection, then drop duplicates
    # while keeping first-seen order
    ls = []
    with open(file_name, 'r') as f:
        annos = json.load(f)
    for anno in annos:
        ls.append(anno['image_id'])
    myset = {}.fromkeys(ls).keys()
    return myset


if __name__ == '__main__':
    annType = ['segm', 'bbox', 'keypoints']  # set iouType to 'segm', 'bbox' or 'keypoints'
    annType = annType[1]  # specify type here
    cocoGt_file = 'G:/instances_val2014.json'
    cocoGt = COCO(cocoGt_file)  # load the ground-truth annotations as a COCO object
    cocoDt_file = 'G:/coco_results.json'
    imgIds = get_img_id(cocoDt_file)
    print(len(imgIds))
    cocoDt = cocoGt.loadRes(cocoDt_file)  # load the detection results as a COCO object
    imgIds = sorted(imgIds)  # sort the image ids
    imgIds = imgIds[0:5000]  # keep at most 5000 images (the size of the 5k split)
    cocoEval = COCOeval(cocoGt, cocoDt, annType)
    cocoEval.params.imgIds = imgIds  # restrict evaluation to these images
    # cocoEval.params.catIds = [1]  # evaluate only category id 1 ('person')
    cocoEval.evaluate()  # per-image, per-category evaluation
    cocoEval.accumulate()  # accumulate the per-image results
    cocoEval.summarize()  # print the summary metrics

    # ---------- plotting code added below ---------- #
    # precision[t, :, k, a, m] holds the precision of the PR curve at each recall threshold
    # t: IoU threshold, k: category, a: area (all, small, medium, large), m: maxDets (1, 10, 100)
    pr_array1 = cocoEval.eval['precision'][0, :, 0, 0, 2]
    pr_array2 = cocoEval.eval['precision'][0, :, 0, 1, 2]
    pr_array3 = cocoEval.eval['precision'][0, :, 0, 2, 2]
    pr_array4 = cocoEval.eval['precision'][0, :, 0, 3, 2]
    x = np.arange(0.0, 1.01, 0.01)  # the 101 recall thresholds
    # x_1 = np.arange(0, 1.01, 0.111)
    plt.xlabel('recall')  # the x axis is recall, not IoU
    plt.ylabel('precision')
    plt.xlim(0, 1.0)
    plt.ylim(0, 1.01)
    plt.grid(True)
    plt.plot(x, pr_array1, 'b-', label='all')
    plt.plot(x, pr_array2, 'c-', label='small')
    plt.plot(x, pr_array3, 'y-', label='medium')
    plt.plot(x, pr_array4, 'r-', label='large')
    # plt.xticks(x_1, x_1)
    plt.title("iou=0.5 catid=person maxdet=100")
    plt.legend(loc="lower left")
    plt.show()

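The key is understanding what the 5-dimensional array cocoEval.eval['precision'] holds; once you do, you can plot whatever PR curves you need.

precision[t,:,k,a,m]

# t: IoU threshold, t[0]=0.5, t[1]=0.55, t[2]=0.6, ..., t[9]=0.95

# second axis: the 101 recall thresholds 0, 0.01, ..., 1.0

# k: category, k[0]=person, k[1]=bicycle, ... the 80 COCO categories

# a: area, a[0]=all, a[1]=small, a[2]=medium, a[3]=large

# m: maxDets, m[0]=1, m[1]=10, m[2]=100

Explanation: the code above plots the PR curves for the different area ranges at IoU threshold 0.5, category person, maxDets=100.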
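The same array also yields the AP numbers: an AP value is the mean of the valid precision entries in the chosen slice, where entries for slices without any ground truth are stored as -1 and skipped. A minimal sketch of that averaging on a synthetic array follows; the helper average_precision and the demo values are illustrative only, and the default arguments (a=0 for area "all", m=2 for maxDets=100) follow the index tables above.

```python
import numpy as np


def average_precision(precision, t=None, k=None, a=0, m=2):
    """Mean of the valid (> -1) entries of precision[T, R, K, A, M].

    t: IoU-threshold index (None = all thresholds)
    k: category index (None = all categories)
    a: area index, m: maxDets index
    """
    s = precision[..., a, m]          # shape [T, R, K]
    if t is not None:
        s = s[t:t + 1]
    if k is not None:
        s = s[:, :, k:k + 1]
    valid = s[s > -1]
    return float(valid.mean()) if valid.size else -1.0


# Synthetic array with the usual shape: 10 IoU thresholds, 101 recall points,
# 2 categories, 4 area ranges, 3 maxDets settings; -1 marks "no ground truth"
demo = np.full((10, 101, 2, 4, 3), -1.0)
demo[:, :, 0, 0, 2] = 0.8   # category index 0 has ground truth
demo[0, :, 0, 0, 2] = 1.0   # and is perfect at IoU=0.5

ap_all = average_precision(demo)         # averaged over IoU 0.50:0.95
ap_50 = average_precision(demo, t=0)     # IoU=0.5 only
ap_empty = average_precision(demo, k=1)  # category with no valid entries
```

With the real data, average_precision(cocoEval.eval['precision'], t=0, k=0) would give the AP at IoU=0.5 for the first category in the evaluated list.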

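Open question

I haven't fully understood the source code yet, so I may keep updating this.

Question: to compute the mAP of a single class, you need to add one line to the .py script

cocoEval.params.catIds = [1] # 1 is the 'person' class, 0 is background

I've seen a blogger say that 0 is the background class and 1 is person. Indeed, when I tried [0], every AP and AR came out as -1 (clearly wrong).

But the categories are clearly indexed from 0 (k[0]=person above).

If anyone can clear this up, I'd be very grateful!

------------------------ divider ----------------------------

The question seems to be resolved:

It is the difference between index and id: params.catIds takes COCO category ids (person is id 1, and no category has id 0), while k in precision[t,:,k,a,m] is the 0-based position within the list of evaluated ids. For details, see this blogger's summary.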
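To make the distinction concrete: COCO category ids start at 1 and have gaps (for example, id 12 is unused), whereas the k axis of the precision array counts positions in the sorted list of evaluated ids. A minimal sketch of the mapping, using only the first few ids; the helper cat_id_to_index is hypothetical.

```python
def cat_id_to_index(cat_ids, cat_id):
    """Return the 0-based index k of cat_id within the sorted list of evaluated ids."""
    ordered = sorted(set(cat_ids))
    if cat_id not in ordered:
        raise KeyError("category id %r is not among the evaluated ids" % (cat_id,))
    return ordered.index(cat_id)


# The first few COCO category ids; note that 12 is missing
sample_ids = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13]

person_k = cat_id_to_index(sample_ids, 1)      # id 1 (person) -> index 0
stop_sign_k = cat_id_to_index(sample_ids, 13)  # id 13 (stop sign) -> index 11
```

In the evaluation script the full id list would come from cocoGt.getCatIds(). Note that if you set cocoEval.params.catIds = [1], the k axis has length 1 and person sits at k=0; an id such as 0 that matches no category has no ground truth, which is consistent with the -1 values seen above.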

References

https://blog.csdn.net/huangxiang360729/article/details/105853200

https://blog.csdn.net/xidaoliang/article/details/88397280

https://blog.csdn.net/FSALICEALEX/article/details/99689643

https://blog.csdn.net/FSALICEALEX/article/details/102556655

https://zhuanlan.zhihu.com/p/60707912

https://blog.csdn.net/abc13526222160/article/details/95107518