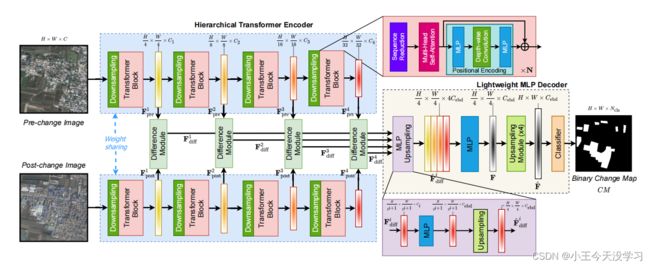

ChangeFormer (A Transformer-based Siamese Network for Change Detection)

ChangeFormer

Paper: A Transformer-based Siamese Network for Change Detection

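ChangeFormer consists of three main modules:

1. A hierarchical Transformer encoder that extracts coarse and fine features from the bi-temporal images.

2. Four feature difference modules that compute the feature differences at different scales.

3. A lightweight MLP decoder that fuses the multi-level feature differences and predicts the change mask.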

Hierarchical Transformer Encoder

Transformer Block

$$\mathrm{Attention}(Q,K,V)=\mathrm{Softmax}\left(\frac{QK^T}{\sqrt{d_{head}}}\right)V$$

The formula above is standard self-attention. An $H\times W$ feature map gives a sequence of $HW$ tokens, so its computational complexity is $O((HW)^2)$.
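A minimal NumPy sketch of the formula above, assuming a single head and omitting the learned Q/K/V projections (the token matrix is used directly as $Q$, $K$ and $V$). It shows where the quadratic cost comes from: the attention matrix has one entry for every pair of the $HW$ tokens.

```python
import numpy as np

def softmax(x, axis=-1):
    # subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    """Single-head scaled dot-product attention.

    q: (N_q, d_head), k: (N_kv, d_head), v: (N_kv, d_head).
    Returns the output (N_q, d_head) and the attention matrix (N_q, N_kv).
    """
    d_head = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_head)  # one score per (query, key) pair
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
H, W, d_head = 16, 16, 32
tokens = rng.standard_normal((H * W, d_head))  # a flattened H x W feature map
out, attn = attention(tokens, tokens, tokens)
print(out.shape, attn.shape)  # (256, 32) (256, 256)
```

Doubling $H$ and $W$ multiplies the number of tokens by 4 and the size of `attn` by 16.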

Adding Sequence Reduction ($R$ is the reduction ratio):

$$\widehat{S}=\mathrm{Reshape}\left(\frac{HW}{R},C\cdot R\right)(S)$$

$$S=\mathrm{Linear}(C\cdot R,C)(\widehat{S})$$

where $S$ is the sequence to be reduced, e.g. $Q$, $K$, $V$; $\mathrm{Reshape}$ is the reshape operation and $\mathrm{Linear}$ is a linear layer.

This operation changes the size of $Q$, $K$, $V$ to $(\frac{HW}{R},C)$, which reduces the complexity to $O(\frac{(HW)^2}{R})$.
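The sketch below implements the Reshape + Linear reduction literally in NumPy; the helper name `sequence_reduction` and the random weights `w`, `b` are hypothetical. The $O(\frac{(HW)^2}{R})$ figure corresponds to keeping $Q$ at full length and shortening only $K$ and $V$ (the attention matrix is then $HW\times\frac{HW}{R}$), so that is what the sketch does; learned Q/K/V projections are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # subtract the row max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sequence_reduction(s, R, w, b):
    """Reshape (HW, C) -> (HW/R, C*R), then apply Linear(C*R, C).

    s: (HW, C) token sequence, R: reduction ratio (must divide HW),
    w: (C*R, C) weight and b: (C,) bias of the hypothetical linear layer.
    """
    n, c = s.shape
    assert n % R == 0, "HW must be divisible by the reduction ratio R"
    s_hat = s.reshape(n // R, c * R)  # each row concatenates R consecutive tokens
    return s_hat @ w + b              # (HW/R, C)

rng = np.random.default_rng(0)
H, W, C, R = 16, 16, 32, 4
x = rng.standard_normal((H * W, C))
w = rng.standard_normal((C * R, C)) / np.sqrt(C * R)
b = np.zeros(C)

q = x                                   # queries keep all HW tokens
k = v = sequence_reduction(x, R, w, b)  # keys/values shrink to HW/R tokens
scores = q @ k.T / np.sqrt(C)           # (HW, HW/R) instead of (HW, HW)
out = softmax(scores) @ v               # still one output row per query token
print(k.shape, scores.shape, out.shape)  # (64, 32) (256, 64) (256, 32)
```

If $Q$ were reduced as well, the output itself would shrink to $\frac{HW}{R}$ tokens and would have to be expanded again before the residual connection.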

Positional Encoding

$$F_{out}=\mathrm{MLP}(\mathrm{GELU}(\mathrm{Conv2D}_{3\times3}(\mathrm{MLP}(F_{in}))))+F_{in}$$

$F_{in}$ is the feature extracted by self-attention, and $\mathrm{GELU}$ is the Gaussian Error Linear Unit activation.
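A NumPy sketch of this block on an $(H, W, C)$ map, treating each MLP as a per-pixel linear layer. The hidden width (`C_hidden = 4C`), the random weights and the tanh approximation of GELU are assumptions for illustration. The $3\times3$ convolution is the only operation here that looks at neighbouring pixels, so it is where spatial (positional) information enters the block.

```python
import numpy as np

def gelu(x):
    # tanh approximation of GELU (assumption; the exact form uses erf)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def conv3x3(x, w, b):
    """Zero-padded 3x3 convolution (cross-correlation) on an (H, W, C_in) map.

    w: (3, 3, C_in, C_out), b: (C_out,). Returns (H, W, C_out).
    """
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))  # padding of 1 keeps H and W
    out = np.zeros((h, wd, w.shape[-1])) + b
    for dy in range(3):
        for dx in range(3):
            # each kernel tap is a C_in -> C_out matrix applied to a shifted window
            out += xp[dy:dy + h, dx:dx + wd] @ w[dy, dx]
    return out

def mix_ffn(f_in, w1, b1, wc, bc, w2, b2):
    """F_out = MLP(GELU(Conv3x3(MLP(F_in)))) + F_in."""
    hidden = f_in @ w1 + b1           # MLP: C -> C_hidden at every pixel
    hidden = conv3x3(hidden, wc, bc)  # mixes each pixel with its 8 neighbours
    hidden = gelu(hidden)
    return hidden @ w2 + b2 + f_in    # MLP back to C, plus the residual

rng = np.random.default_rng(0)
H, W, C, C_hidden = 8, 8, 16, 64
f_in = rng.standard_normal((H, W, C))
params = (
    rng.standard_normal((C, C_hidden)) * 0.1, np.zeros(C_hidden),
    rng.standard_normal((3, 3, C_hidden, C_hidden)) * 0.01, np.zeros(C_hidden),
    rng.standard_normal((C_hidden, C)) * 0.1, np.zeros(C),
)
f_out = mix_ffn(f_in, *params)
print(f_out.shape)  # (8, 8, 16)
```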

Downsampling Block

First Downsampling Block: $\mathrm{Conv2D}(K=7,S=4,P=3)$

Subsequent Downsampling Blocks: $\mathrm{Conv2D}(K=3,S=2,P=1)$
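Applying the standard convolution output-size formula, $\lfloor (n + 2P - K)/S \rfloor + 1$, to these settings gives the resolution of each encoder stage. The sketch assumes four stages (one per difference module) and a hypothetical $256\times256$ input.

```python
def conv2d_out(size, k, s, p):
    # standard output-size formula for a convolution: floor((n + 2p - k) / s) + 1
    return (size + 2 * p - k) // s + 1

def stage_sizes(h, num_stages=4):
    """Spatial size after each downsampling block of the hierarchical encoder."""
    sizes = [conv2d_out(h, k=7, s=4, p=3)]  # first block: K=7, S=4, P=3
    for _ in range(num_stages - 1):
        sizes.append(conv2d_out(sizes[-1], k=3, s=2, p=1))  # later blocks: K=3, S=2, P=1
    return sizes

print(stage_sizes(256))  # [64, 32, 16, 8]
```

So the four stages produce maps at $H/4$, $H/8$, $H/16$ and $H/32$.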

Difference Module

The Difference Module computes the difference between the features of the two time steps at each level of the encoder:

$$F^{i}_{diff}=\mathrm{BN}(\mathrm{ReLU}(\mathrm{Conv2D}_{3\times3}(\mathrm{Cat}(F^i_{pre},F^i_{post}))))$$

$\mathrm{Cat}$ denotes tensor concatenation.
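A NumPy sketch of one difference module on $(H, W, C)$ maps. The output width ($2C \to C$ channels), the random weights, and the simplified BN (normalising with the statistics of this single map, without a learned scale or shift) are assumptions for illustration; `conv3x3` is a plain zero-padded $3\times3$ convolution.

```python
import numpy as np

def conv3x3(x, w, b):
    """Zero-padded 3x3 convolution on an (H, W, C_in) map; w: (3, 3, C_in, C_out)."""
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, wd, w.shape[-1])) + b
    for dy in range(3):
        for dx in range(3):
            out += xp[dy:dy + h, dx:dx + wd] @ w[dy, dx]
    return out

def batch_norm(x, eps=1e-5):
    # simplified BN: per-channel statistics of this one map, no learned affine
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def difference_module(f_pre, f_post, w, b):
    """F_diff = BN(ReLU(Conv3x3(Cat(F_pre, F_post))))."""
    x = np.concatenate([f_pre, f_post], axis=-1)  # (H, W, 2C): channel concatenation
    x = np.maximum(conv3x3(x, w, b), 0.0)         # ReLU
    return batch_norm(x)

rng = np.random.default_rng(0)
H, W, C = 16, 16, 32
f_pre = rng.standard_normal((H, W, C))
f_post = rng.standard_normal((H, W, C))
w = rng.standard_normal((3, 3, 2 * C, C)) * 0.05  # hypothetical 2C -> C weights
f_diff = difference_module(f_pre, f_post, w, np.zeros(C))
print(f_diff.shape)  # (16, 16, 32)
```

Concatenating the two maps, rather than subtracting them, leaves it to the learned convolution to decide how the two time steps are compared.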

MLP Decoder

The MLP Decoder aggregates the multi-level features extracted above to predict the change map.

The MLP decoder consists of three steps:

MLP & Upsampling

First, an MLP layer unifies the channel dimension of the feature maps from the different levels; each map is then upsampled to $H/4 \times W/4$:

$$\widehat{F}^i_{diff}=\mathrm{Linear}(C_i,C_{ebd})(F^i_{diff}),\quad \forall i$$

$$\widehat{F}^i_{diff}=\mathrm{Upsample}((H/4,W/4),\text{"bilinear"})(\widehat{F}^i_{diff})$$

where $C_{ebd}$ denotes the embedding dimension.
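A NumPy sketch of this step. Each Linear is applied per pixel (bias omitted), and `bilinear_resize` uses half-pixel centres, intended to follow the convention of PyTorch's `F.interpolate(..., mode="bilinear", align_corners=False)`. The per-stage widths `C_i`, `C_ebd = 64` and the $256\times256$ input are hypothetical.

```python
import numpy as np

def bilinear_resize(x, out_h, out_w):
    """Bilinear resize of an (H, W, C) map using half-pixel centres."""
    in_h, in_w, _ = x.shape

    def coords(out_n, in_n):
        # fractional source index of each output pixel, clamped at the low edge
        src = (np.arange(out_n) + 0.5) * (in_n / out_n) - 0.5
        src = np.clip(src, 0, None)
        i0 = np.minimum(np.floor(src).astype(int), in_n - 1)
        i1 = np.minimum(i0 + 1, in_n - 1)  # next neighbour, clamped at the edge
        return i0, i1, src - i0             # src - i0 is the interpolation weight

    y0, y1, wy = coords(out_h, in_h)
    x0, x1, wx = coords(out_w, in_w)
    wy = wy[:, None, None]
    wx = wx[None, :, None]
    top = x[y0][:, x0] * (1 - wx) + x[y0][:, x1] * wx
    bottom = x[y1][:, x0] * (1 - wx) + x[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def mlp_and_upsample(f_diffs, weights, out_hw):
    """Project every F_diff^i to C_ebd channels, then resize it to out_hw = (H/4, W/4)."""
    outs = []
    for f, w in zip(f_diffs, weights):
        f = f @ w  # Linear(C_i, C_ebd) at every pixel (bias omitted)
        outs.append(bilinear_resize(f, *out_hw))
    return outs

rng = np.random.default_rng(0)
H = W = 256
C_ebd = 64
channels = [32, 64, 128, 256]  # hypothetical per-stage widths C_i
sizes = [H // 4, H // 8, H // 16, H // 32]
f_diffs = [rng.standard_normal((s, s, c)) for s, c in zip(sizes, channels)]
weights = [rng.standard_normal((c, C_ebd)) / np.sqrt(c) for c in channels]
ups = mlp_and_upsample(f_diffs, weights, (H // 4, W // 4))
print([u.shape for u in ups])  # four maps, each (64, 64, 64)
```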

Concatenation & Fusion

This step concatenates the feature maps and fuses them with an MLP layer:

$$F=\mathrm{Linear}(4C_{ebd},C_{ebd})(\mathrm{Cat}(\widehat{F}^1_{diff},\widehat{F}^2_{diff},\widehat{F}^3_{diff},\widehat{F}^4_{diff}))$$
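The fusion step as a NumPy sketch: concatenate along the channel axis, then apply one per-pixel Linear, which is equivalent to a $1\times1$ convolution. The shapes and random weights are hypothetical stand-ins for the upsampled difference maps.

```python
import numpy as np

def fuse(maps, weight, bias):
    """F = Linear(4*C_ebd, C_ebd)(Cat(F^1, F^2, F^3, F^4)), applied at every pixel."""
    cat = np.concatenate(maps, axis=-1)  # (H/4, W/4, 4*C_ebd)
    return cat @ weight + bias           # (H/4, W/4, C_ebd)

rng = np.random.default_rng(0)
h, w, C_ebd = 64, 64, 64
ups = [rng.standard_normal((h, w, C_ebd)) for _ in range(4)]  # stand-in upsampled maps
weight = rng.standard_normal((4 * C_ebd, C_ebd)) / np.sqrt(4 * C_ebd)
fused = fuse(ups, weight, np.zeros(C_ebd))
print(fused.shape)  # (64, 64, 64)
```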

Upsampling & Classification

A transposed convolution upsamples the feature map $F$ to $H\times W$:

$\mathrm{ConvTranspose2D}(K=3,S=4)$

Finally, the resulting feature map is passed through another MLP layer to predict the change mask $CM$ of size $H\times W\times N_{cls}$, where $N_{cls}$ is the number of classes, i.e. change and no-change ($N_{cls}=2$):

$$\widehat{F}=\mathrm{ConvTranspose2D}(S=4,K=3)(F)$$

$$CM=\mathrm{Linear}(C_{ebd},N_{cls})(\widehat{F})$$
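Plugging these settings into the transposed-convolution output-size formula (dilation 1), $(n-1)S - 2P + K + \text{output\_padding}$, shows that $K=3$, $S=4$ without padding maps $H/4$ to $H-1$ rather than $H$, so some extra output padding (here `output_padding=1`, an assumption) or a different kernel size (e.g. $K=4$) is needed to land exactly on $H$. The final Linear is then applied per pixel, and an argmax over the $N_{cls}=2$ logits gives the mask; the class order (0 = no-change, 1 = change) and the random weights are assumptions.

```python
import numpy as np

def conv_transpose2d_out(size, k, s, p=0, output_padding=0):
    # output size of a transposed convolution (dilation 1): (n - 1) * s - 2p + k + output_padding
    return (size - 1) * s - 2 * p + k + output_padding

H = 256
h4 = H // 4
print(conv_transpose2d_out(h4, k=3, s=4))                    # 255
print(conv_transpose2d_out(h4, k=3, s=4, output_padding=1))  # 256

def classify(f_hat, weight, bias):
    """CM = Linear(C_ebd, N_cls)(F_hat); argmax over classes gives a hard mask."""
    logits = f_hat @ weight + bias          # (H, W, N_cls)
    return logits, logits.argmax(axis=-1)   # assumed: 0 = no-change, 1 = change

rng = np.random.default_rng(0)
C_ebd, N_cls = 64, 2
f_hat = rng.standard_normal((H, H, C_ebd))  # stand-in for the upsampled feature map
logits, mask = classify(f_hat, rng.standard_normal((C_ebd, N_cls)), np.zeros(N_cls))
print(logits.shape, mask.shape)  # (256, 256, 2) (256, 256)
```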