Image Semantic Segmentation -- FCN

1: Image Semantic Segmentation

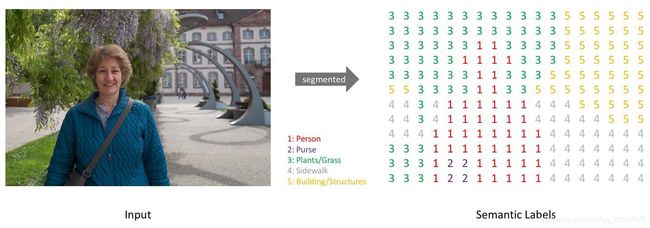

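The simplest way to understand image semantic segmentation is to look at the example images below.

It is classification at the pixel level: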

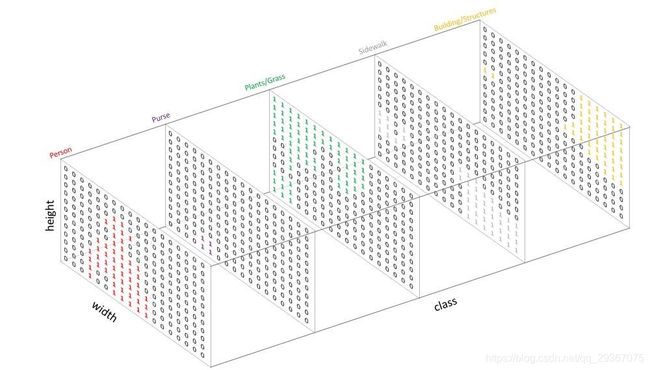

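Suppose there are five pixel classes. The final output then has the same height and width as the input image, but its number of channels equals the number of classes. Split into its individual channels, it looks like this: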

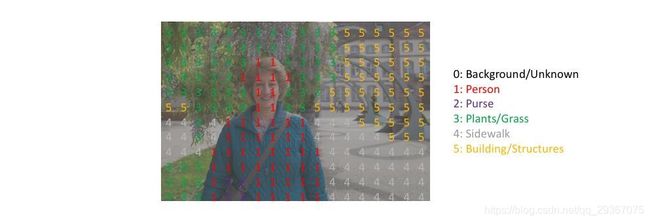
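The per-channel view above corresponds to a one-hot encoding of the label map: channel k is 1 where a pixel belongs to class k and 0 elsewhere. A small sketch with a made-up 3×4 label map and five classes:

```python
import torch
import torch.nn.functional as F

num_classes = 5
# A hypothetical 3x4 label map: each entry is the class index of one pixel
labels = torch.tensor([[0, 0, 1, 1],
                       [2, 2, 1, 3],
                       [4, 4, 3, 3]])

# one_hot gives [H, W, num_classes]; move the class axis first to get [num_classes, H, W]
channels = F.one_hot(labels, num_classes).permute(2, 0, 1)
print(channels.shape)  # torch.Size([5, 3, 4])
print(channels[1])     # binary mask of the pixels that belong to class 1
```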

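Merging the channels back together gives the class of every pixel. Usually each class is drawn in a different color, so we can see much more clearly which pixels belong to which class. This is also an example of supervised learning.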
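Merging the per-class channels is usually done by picking, for each pixel, the channel with the highest score (an argmax over the channel dimension). Below is a minimal sketch assuming a [batch, num_classes, H, W] score tensor like the one FCN outputs; the 5-color palette is a hypothetical choice for illustration.

```python
import torch

torch.manual_seed(0)

# Hypothetical palette: one RGB color per class (5 classes, as in the example above)
PALETTE = torch.tensor([
    [0, 0, 0],      # class 0: background
    [128, 0, 0],    # class 1
    [0, 128, 0],    # class 2
    [128, 128, 0],  # class 3
    [0, 0, 128],    # class 4
], dtype=torch.uint8)

def scores_to_label_map(scores):
    """scores: [batch, num_classes, H, W] -> labels: [batch, H, W]."""
    probs = torch.softmax(scores, dim=1)  # per-pixel class probabilities
    return probs.argmax(dim=1)            # most likely class for each pixel

def colorize(labels):
    """labels: [batch, H, W] -> RGB image: [batch, H, W, 3]."""
    return PALETTE[labels]

scores = torch.randn(2, 5, 4, 6)  # 2 images, 5 classes, 4x6 pixels
labels = scores_to_label_map(scores)
rgb = colorize(labels)
print(labels.shape, rgb.shape)  # torch.Size([2, 4, 6]) torch.Size([2, 4, 6, 3])
```

Softmax does not change which channel is largest, so the argmax of the raw scores gives the same label map; the softmax only matters when you need probabilities (for example in the loss).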

2: FCN

Since I'm lazy, please look up the concepts and background of FCN yourself. I recommend the following links:

https://zhuanlan.zhihu.com/p/31428783

https://www.pianshen.com/article/1189524224/

I won't repeat what is already covered there, so just read the links above, or skip them if you already know the material. In short:

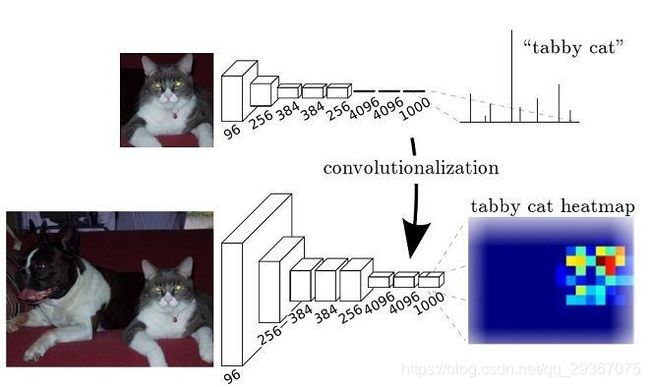

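A CNN is used for image classification, which works at the level of the whole image. Shallow convolutional layers have small receptive fields and learn features of local regions; deeper convolutional layers have larger receptive fields and can learn more abstract features.

However, convolution and pooling in a CNN discard image detail: the feature map size keeps shrinking (in VGG, because of the pooling layers). As a result, a CNN cannot accurately trace the contour of an object or tell which object each pixel belongs to, so it cannot produce a precise segmentation.

FCN replaces all of the CNN's final FC layers with convolutional layers, so the network outputs a 2-D score map instead of a single vector. After upsampling back to the input size, this map gives the class scores of every pixel in the original image, and a softmax on top yields the per-pixel classification. That is how FCN solves the segmentation problem.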
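Why can an FC layer be "converted" into a convolution? An FC layer applied to a flattened C×K×K feature map computes exactly what a K×K convolution without padding computes at a single position. The sketch below uses small made-up sizes standing in for VGG's 512×7×7 input to fc6: it copies the FC weights into a conv layer, checks that both give the same result, and then shows that the conv layer also accepts a larger input and produces a spatial score map. Note that the full code later in this article uses freshly initialized 1×1 convolutions for fc6/fc7 rather than reshaping VGG's pretrained FC weights this way.

```python
import torch
from torch import nn

torch.manual_seed(0)
C, K, OUT = 8, 3, 5  # small stand-ins for 512 channels, a 7x7 map and 4096 units

fc = nn.Linear(C * K * K, OUT)
conv = nn.Conv2d(C, OUT, kernel_size=K)

# Reuse the FC weights: each output unit's weight vector is reshaped into a C x K x K kernel
with torch.no_grad():
    conv.weight.copy_(fc.weight.view(OUT, C, K, K))
    conv.bias.copy_(fc.bias)

x = torch.randn(2, C, K, K)
y_fc = fc(x.flatten(1))  # [2, OUT]; flatten order (C, H, W) matches the kernel layout
y_conv = conv(x)         # [2, OUT, 1, 1]
print(torch.allclose(y_fc, y_conv.flatten(1), atol=1e-5))  # True

# On a larger input the same conv slides over the map and yields a 2-D score map
big = torch.randn(2, C, K + 3, K + 3)
print(conv(big).shape)  # torch.Size([2, 5, 4, 4])
```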

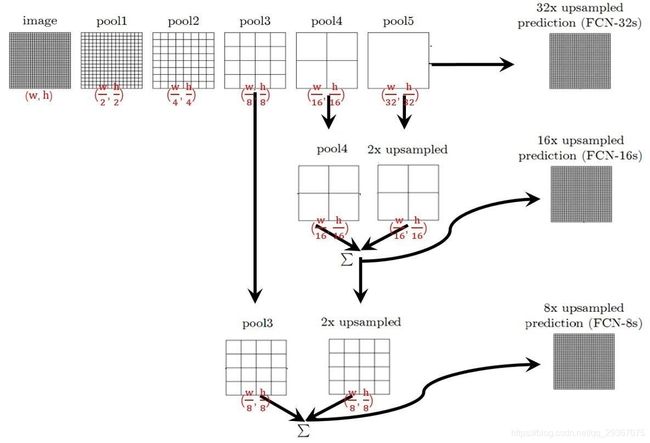

A brief description of its structure:

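1: The image passes through several conv layers plus one max pooling layer and becomes the pool1 feature map, whose width and height are 1/2 of the input.

2: The pool1 feature map passes through several more conv layers plus one max pooling layer and becomes the pool2 feature map, at 1/4 of the input width and height.

3: The pool2 feature map passes through several conv layers plus one max pooling layer and becomes the pool3 feature map, at 1/8 of the input width and height.

4: The pool3 feature map passes through several conv layers plus one max pooling layer and becomes the pool4 feature map, at 1/16 of the input width and height.

5: The pool4 feature map passes through several conv layers plus one max pooling layer and becomes the pool5 feature map, at 1/32 of the input width and height.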
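Because VGG's 3×3 convolutions use padding 1 and keep the spatial size, only the pooling layers shrink the map, each halving it with floor division. A small pure-Python sketch (`pool_sizes` is a hypothetical helper) traces the five sizes:

```python
def pool_sizes(size, num_pools=5):
    """Spatial size after each 2x2 / stride-2 max pool (floor division, as in PyTorch's default)."""
    sizes = []
    for _ in range(num_pools):
        size //= 2
        sizes.append(size)
    return sizes

print(pool_sizes(224))  # [112, 56, 28, 14, 7]
print(pool_sizes(500))  # [250, 125, 62, 31, 15]
```

For a 500-pixel side, pool5 is 15 and 15 × 2 = 30 no longer matches pool4's 31, so the element-wise additions used by the fused FCN variants only line up when the input size is a multiple of 32.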

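This gives us five feature maps. Does that look familiar?

Isn't this exactly the structure of the VGG network we just studied? Besides VGG16, which we covered in the previous article, there are other VGG variants, and we will touch on all of them shortly.

But this is only the feature extraction stage. The next step is feature fusion and related operations, which are the real core of FCN.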

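1: For FCN-32, the final output (pool5 after the convolutionalized FC layers) is upsampled 32× directly with a transposed convolution (deconvolution).

2: For FCN-16, pool5 is first upsampled 2× with a transposed convolution and added to pool4; the sum is then upsampled 16× with another transposed convolution.

3: For FCN-8, pool5 is first upsampled 2× and added to pool4; that result is upsampled 2× again and added to pool3; finally the sum is upsampled 8× with a transposed convolution.

In every case, each map is first reduced to num_classes channels by a 1×1 convolution, so the additions are performed on class-score maps.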
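The fusion order above can be traced with tensor shapes. This is a sketch for FCN-8, assuming a 224×224 input and random stand-ins for the class-score maps (the VGG backbone and the 1×1 score convolutions are omitted). With kernel_size equal to stride and no padding, a transposed convolution maps a size n to (n - 1) × stride + kernel_size = n × stride, so the shapes line up exactly:

```python
import torch
from torch import nn

def deconv_out(size, kernel, stride, padding=0):
    """Output size of ConvTranspose2d (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel

torch.manual_seed(0)
N, K = 1, 21     # batch size and number of classes
H = W = 224      # input size, a multiple of 32

# Hypothetical class-score maps: pool3 / pool4 / pool5 after a 1x1 conv to K channels
score3 = torch.randn(N, K, H // 8, W // 8)    # 28 x 28
score4 = torch.randn(N, K, H // 16, W // 16)  # 14 x 14
score5 = torch.randn(N, K, H // 32, W // 32)  # 7 x 7

up2_a = nn.ConvTranspose2d(K, K, 2, stride=2, bias=False)
up2_b = nn.ConvTranspose2d(K, K, 2, stride=2, bias=False)
up8 = nn.ConvTranspose2d(K, K, 8, stride=8, bias=False)

fuse4 = score4 + up2_a(score5)  # 7 -> 14, added to the pool4 scores
fuse3 = score3 + up2_b(fuse4)   # 14 -> 28, added to the pool3 scores
out = up8(fuse3)                # 28 -> 224, back to full resolution
print(fuse4.shape, fuse3.shape, out.shape)
print(deconv_out(7, 32, 32))    # FCN-32: 7 -> 224 in a single step
```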

3: Code Implementation

The implementation reuses the VGG structure.

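VGG comes in VGG11, VGG13, VGG16 and VGG19, and the last two are the most commonly used. What they have in common is that the feature extraction part always consists of five stages (each ending in a max pooling layer), followed by the same fully connected classifier: two 4096-wide FC layers (fc6 and fc7) and a final 1000-class FC layer. In FCN, fc6 and fc7 are turned into convolutional layers, and the final classifier is replaced by a 1×1 convolution that outputs num_classes channels.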

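The input has shape [batch, 3, H, W]. The five stages produce [batch, 64, H/2, W/2], [batch, 128, H/4, W/4], [batch, 256, H/8, W/8], [batch, 512, H/16, W/16] and [batch, 512, H/32, W/32] respectively.

As for the exact structures of VGG11, VGG13, VGG16 and VGG19, you can print them with the following code: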

```python
import torchvision

if __name__ == '__main__':
    net = torchvision.models.vgg11(pretrained=True)  # load VGG11 with pretrained weights
    print(net)
    net = torchvision.models.vgg13(pretrained=True)  # load VGG13 with pretrained weights
    print(net)
    net = torchvision.models.vgg16(pretrained=True)  # load VGG16 with pretrained weights
    print(net)
    net = torchvision.models.vgg19(pretrained=True)  # load VGG19 with pretrained weights
    print(net)
```
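The `ranges` table in the full code records where each pooling stage ends inside `net.features`. Those indices can be derived from the VGG layer configurations: in torchvision's non-batch-norm VGG models every 3×3 conv is followed by a ReLU (two modules), and each 'M' is a single MaxPool2d. A pure-Python sketch (the config lists follow torchvision's VGG definitions; `stage_ranges` is a hypothetical helper):

```python
# int = 3x3 conv + ReLU (2 modules in net.features), 'M' = max pool (1 module)
CFGS = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
              512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
              512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

def stage_ranges(cfg):
    """(start, end) slice of `features` for each stage, each ending right after a pool."""
    ranges, start, idx = [], 0, 0
    for layer in cfg:
        idx += 1 if layer == 'M' else 2  # pool = 1 module, conv + ReLU = 2 modules
        if layer == 'M':
            ranges.append((start, idx))
            start = idx
    return tuple(ranges)

for name, cfg in CFGS.items():
    print(name, stage_ranges(cfg))
```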

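To reduce the amount of computation, the channel dimension is reduced (a 1×1 convolution down to num_classes channels) before each transposed convolution; see the code for details.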
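A rough parameter count shows why this reduction matters. The sketch compares a hypothetical ×32 transposed convolution applied directly to the 4096-channel fc7 output with the FCN-32 approach (a 1×1 conv down to num_classes, then a ×32 transposed conv), counting weights the way PyTorch's Conv2d / ConvTranspose2d store them (groups=1):

```python
def conv_params(in_ch, out_ch, k, bias=True):
    """Parameter count of a Conv2d / ConvTranspose2d layer with groups=1."""
    return in_ch * out_ch * k * k + (out_ch if bias else 0)

num_classes = 21
# Hypothetical: upsample fc7's 4096 channels directly with a x32 transposed conv
direct = conv_params(4096, 4096, 32, bias=False)
# Reduce first: 1x1 conv 4096 -> num_classes, then a x32 transposed conv on num_classes channels
reduced = conv_params(4096, num_classes, 1) + conv_params(num_classes, num_classes, 32, bias=False)
print(f'{direct:,} vs {reduced:,}')  # 17,179,869,184 vs 537,621
```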

The complete code is as follows:

```python
from torch import nn
import torch
import torchvision

# ranges makes it easy to slice out (and keep track of) the feature map produced by each pooling layer:
# for each VGG variant, the layer index range in net.features that ends with each stage's pool
ranges = {
    'vgg11': ((0, 3), (3, 6), (6, 11), (11, 16), (16, 21)),
    'vgg13': ((0, 5), (5, 10), (10, 15), (15, 20), (20, 25)),
    'vgg16': ((0, 5), (5, 10), (10, 17), (17, 24), (24, 31)),
    'vgg19': ((0, 5), (5, 10), (10, 19), (19, 28), (28, 37))
}


class VGGTest(nn.Module):
    def __init__(self, vgg='vgg16'):
        super(VGGTest, self).__init__()
        if vgg == 'vgg11':
            net = torchvision.models.vgg11(pretrained=True)  # load VGG11 with pretrained weights
        elif vgg == 'vgg13':
            net = torchvision.models.vgg13(pretrained=True)  # load VGG13 with pretrained weights
        elif vgg == 'vgg16':
            net = torchvision.models.vgg16(pretrained=True)  # load VGG16 with pretrained weights
        elif vgg == 'vgg19':
            net = torchvision.models.vgg19(pretrained=True)  # load VGG19 with pretrained weights
        else:
            raise ValueError(f'Unsupported VGG variant: {vgg}')
        self.pool1 = net.features[ranges[vgg][0][0]: ranges[vgg][0][1]]  # [batch, 64, H/2, W/2]
        self.pool2 = net.features[ranges[vgg][1][0]: ranges[vgg][1][1]]  # [batch, 128, H/4, W/4]
        self.pool3 = net.features[ranges[vgg][2][0]: ranges[vgg][2][1]]  # [batch, 256, H/8, W/8]
        self.pool4 = net.features[ranges[vgg][3][0]: ranges[vgg][3][1]]  # [batch, 512, H/16, W/16]
        self.pool5 = net.features[ranges[vgg][4][0]: ranges[vgg][4][1]]  # [batch, 512, H/32, W/32]

    def forward(self, x):
        pool1_features = self.pool1(x)               # 1/2
        pool2_features = self.pool2(pool1_features)  # 1/4
        pool3_features = self.pool3(pool2_features)  # 1/8
        pool4_features = self.pool4(pool3_features)  # 1/16
        pool5_features = self.pool5(pool4_features)  # 1/32
        return pool1_features, pool2_features, pool3_features, pool4_features, pool5_features


class FCN32s(nn.Module):
    def __init__(self, vgg='vgg16', num_classes=21):  # num_classes: final channel count, i.e. the number of pixel classes
        super(FCN32s, self).__init__()
        # the first 5 feature-extraction stages (VGG backbone)
        self.net_features = VGGTest(vgg)
        # the last two FC layers, converted into two conv layers
        self.FC_Layers = nn.Sequential(
            # fc6
            nn.Conv2d(512, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d(),
            # fc7
            nn.Conv2d(4096, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d()
        )
        # reduce the channels to num_classes before the output layer
        self.score_pool5 = nn.Conv2d(4096, num_classes, 1)
        # finally upsample the reduced score map with a transposed convolution
        self.final_x32 = nn.ConvTranspose2d(num_classes, num_classes, 32, stride=32, bias=False)

    def forward(self, x):
        # get the 5 stage features
        features = self.net_features(x)
        # the last feature map also goes through the converted FC layers
        last_features = self.FC_Layers(features[-1])
        # reduce the channels first to save computation, then upsample (transposed convolution)
        pool5 = self.score_pool5(last_features)
        out = self.final_x32(pool5)
        return out


class FCN16s(nn.Module):
    def __init__(self, vgg='vgg16', num_classes=21):  # num_classes: number of pixel classes, e.g. 2 for binary segmentation
        super(FCN16s, self).__init__()
        # the first 5 feature-extraction stages (VGG backbone)
        self.net_features = VGGTest(vgg)
        # the last two FC layers, converted into two conv layers
        self.FC_Layers = nn.Sequential(
            # fc6
            nn.Conv2d(512, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d(),
            # fc7
            nn.Conv2d(4096, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d()
        )
        # reduce the channels to num_classes before the output layer
        self.score_pool5 = nn.Conv2d(4096, num_classes, 1)
        self.score_pool4 = nn.Conv2d(512, num_classes, 1)
        # transposed convolutions (upsampling) applied to the reduced score maps
        self.upscore_pool5_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.final_x16 = nn.ConvTranspose2d(num_classes, num_classes, 16, stride=16, bias=False)

    def forward(self, x):
        # get the 5 stage features
        features = self.net_features(x)
        # the last feature map also goes through the converted FC layers
        last_features = self.FC_Layers(features[-1])
        # reduce the channels first to save computation, then upsample (transposed convolution)
        pool5 = self.score_pool5(last_features)
        pool4 = self.score_pool4(features[-2])
        out = pool4 + self.upscore_pool5_x2(pool5)
        out = self.final_x16(out)
        return out


class FCN8s(nn.Module):
    def __init__(self, vgg='vgg16', num_classes=21):  # num_classes: number of pixel classes, e.g. 2 for binary segmentation
        super(FCN8s, self).__init__()
        # the first 5 feature-extraction stages (VGG backbone)
        self.net_features = VGGTest(vgg)
        # the last two FC layers, converted into two conv layers
        self.FC_Layers = nn.Sequential(
            # fc6
            nn.Conv2d(512, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d(),
            # fc7
            nn.Conv2d(4096, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d()
        )
        # reduce the channels to num_classes before the output layer
        self.score_pool5 = nn.Conv2d(4096, num_classes, 1)
        self.score_pool4 = nn.Conv2d(512, num_classes, 1)
        self.score_pool3 = nn.Conv2d(256, num_classes, 1)
        # transposed convolutions (upsampling) applied to the reduced score maps
        self.upscore_pool5_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool4_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.final_x8 = nn.ConvTranspose2d(num_classes, num_classes, 8, stride=8, bias=False)

    def forward(self, x):
        # get the 5 stage features
        features = self.net_features(x)
        # the last feature map also goes through the converted FC layers
        last_features = self.FC_Layers(features[-1])
        # reduce the channels first to save computation, then upsample (transposed convolution)
        pool5 = self.score_pool5(last_features)
        pool4 = self.score_pool4(features[-2])
        pool3 = self.score_pool3(features[-3])
        out = pool4 + self.upscore_pool5_x2(pool5)
        out = pool3 + self.upscore_pool4_x2(out)
        out = self.final_x8(out)
        return out


class FCN4s(nn.Module):
    def __init__(self, vgg='vgg16', num_classes=21):  # num_classes: number of pixel classes, e.g. 2 for binary segmentation
        super(FCN4s, self).__init__()
        # the first 5 feature-extraction stages (VGG backbone)
        self.net_features = VGGTest(vgg)
        # the last two FC layers, converted into two conv layers
        self.FC_Layers = nn.Sequential(
            # fc6
            nn.Conv2d(512, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d(),
            # fc7
            nn.Conv2d(4096, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d()
        )
        # reduce the channels to num_classes before the output layer
        self.score_pool5 = nn.Conv2d(4096, num_classes, 1)
        self.score_pool4 = nn.Conv2d(512, num_classes, 1)
        self.score_pool3 = nn.Conv2d(256, num_classes, 1)
        self.score_pool2 = nn.Conv2d(128, num_classes, 1)
        # transposed convolutions (upsampling) applied to the reduced score maps
        self.upscore_pool5_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool4_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool3_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.final_x4 = nn.ConvTranspose2d(num_classes, num_classes, 4, stride=4, bias=False)

    def forward(self, x):
        # get the 5 stage features
        features = self.net_features(x)
        # the last feature map also goes through the converted FC layers
        last_features = self.FC_Layers(features[-1])
        # reduce the channels first to save computation, then upsample (transposed convolution)
        pool5 = self.score_pool5(last_features)
        pool4 = self.score_pool4(features[-2])
        pool3 = self.score_pool3(features[-3])
        pool2 = self.score_pool2(features[-4])
        out = pool4 + self.upscore_pool5_x2(pool5)
        out = pool3 + self.upscore_pool4_x2(out)
        out = pool2 + self.upscore_pool3_x2(out)
        out = self.final_x4(out)
        return out


class FCN2s(nn.Module):
    def __init__(self, vgg='vgg16', num_classes=21):  # num_classes: number of pixel classes, e.g. 2 for binary segmentation
        super(FCN2s, self).__init__()
        # the first 5 feature-extraction stages (VGG backbone)
        self.net_features = VGGTest(vgg)
        # the last two FC layers, converted into two conv layers
        self.FC_Layers = nn.Sequential(
            # fc6
            nn.Conv2d(512, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d(),
            # fc7
            nn.Conv2d(4096, 4096, 1),
            nn.ReLU(inplace=True),
            nn.Dropout2d()
        )
        # reduce the channels to num_classes before the output layer
        self.score_pool5 = nn.Conv2d(4096, num_classes, 1)
        self.score_pool4 = nn.Conv2d(512, num_classes, 1)
        self.score_pool3 = nn.Conv2d(256, num_classes, 1)
        self.score_pool2 = nn.Conv2d(128, num_classes, 1)
        self.score_pool1 = nn.Conv2d(64, num_classes, 1)
        # transposed convolutions (upsampling) applied to the reduced score maps
        self.upscore_pool5_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool4_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool3_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.upscore_pool2_x2 = nn.Sequential(
            nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False),
            nn.ReLU(inplace=True)
        )
        self.final_x2 = nn.ConvTranspose2d(num_classes, num_classes, 2, stride=2, bias=False)

    def forward(self, x):
        # get the 5 stage features
        features = self.net_features(x)
        # the last feature map also goes through the converted FC layers
        last_features = self.FC_Layers(features[-1])
        # reduce the channels first to save computation, then upsample (transposed convolution)
        pool5 = self.score_pool5(last_features)
        pool4 = self.score_pool4(features[-2])
        pool3 = self.score_pool3(features[-3])
        pool2 = self.score_pool2(features[-4])
        pool1 = self.score_pool1(features[-5])
        out = pool4 + self.upscore_pool5_x2(pool5)
        out = pool3 + self.upscore_pool4_x2(out)
        out = pool2 + self.upscore_pool3_x2(out)
        out = pool1 + self.upscore_pool2_x2(out)
        out = self.final_x2(out)
        return out


if __name__ == '__main__':
    # model = VGGTest()
    x = torch.rand(4, 3, 224, 224)  # H and W must be multiples of 32 so the skip additions line up
    print(x.shape)
    num_classes = 21  # number of pixel classes, which is also the number of output channels
    # model = FCN32s(num_classes=num_classes)
    # model = FCN16s(num_classes=num_classes)
    # model = FCN8s(num_classes=num_classes)
    # model = FCN4s(num_classes=num_classes)
    model = FCN2s(num_classes=num_classes)
    model.eval()  # disable dropout for this shape check
    with torch.no_grad():  # gradients are not needed just to check the output shape
        y = model(x)
    print(y.shape)  # torch.Size([4, 21, 224, 224])
```
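Because the skip connections add feature maps of exactly matching sizes, the models above expect H and W to be multiples of 32 (a 500×375 image, for example, fails at the `pool4 + ...` addition). One common workaround, sketched here as an assumption rather than as part of the code above, is to zero-pad the input up to the next multiple of 32 and crop the output back; `pad_to_multiple` and `predict_any_size` are hypothetical helpers.

```python
import torch
import torch.nn.functional as F

def pad_to_multiple(x, multiple=32):
    """Zero-pad a [N, C, H, W] tensor on the bottom/right so H and W are multiples of `multiple`."""
    h, w = x.shape[-2:]
    pad_h = (multiple - h % multiple) % multiple
    pad_w = (multiple - w % multiple) % multiple
    # F.pad takes (left, right, top, bottom) for the last two dimensions
    return F.pad(x, (0, pad_w, 0, pad_h)), (h, w)

def predict_any_size(model, x, multiple=32):
    """Run a model that needs multiples of `multiple`, then crop its output back to the input size."""
    padded, (h, w) = pad_to_multiple(x, multiple)
    out = model(padded)
    return out[..., :h, :w]

x = torch.rand(1, 3, 500, 375)
padded, size = pad_to_multiple(x)
print(padded.shape, size)  # torch.Size([1, 3, 512, 384]) (500, 375)
```

With one of the FCN models above you would call `predict_any_size(model, x)`; the output then has shape [1, num_classes, 500, 375].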