Training a 10-class image classifier on the CIFAR10 dataset with PyTorch

This article follows the Chinese edition of the official PyTorch tutorial "Training a Classifier": https://pytorch.apachecn.org/docs/1.7/06.html

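The official program has been lightly modified: this version detects the current environment and decides automatically whether to train on the CPU or the GPU, provided the CPU or GPU build of PyTorch is already installed.

Because the network is so small, the GPU speedup is barely noticeable; the official tutorial explains why.

In fact, when I trained for a single epoch, the GPU run was slower than the CPU run. Enlarging the network improves the picture.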
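To give a rough sense of how small the network is, the sketch below counts the trainable parameters implied by the layer sizes of the `Net` class in the script further down. The helper names `conv_params` and `linear_params` are made up for this illustration, and the count assumes every layer has a bias term, which is the PyTorch default.

```python
# Layer sizes are copied from the Net class in this article.
def conv_params(in_ch, out_ch, kernel):
    """Weights plus one bias per output channel for a square-kernel Conv2d."""
    return in_ch * out_ch * kernel * kernel + out_ch


def linear_params(in_features, out_features):
    """Weights plus one bias per output feature for a Linear layer."""
    return in_features * out_features + out_features


layer_params = {
    "conv1": conv_params(3, 6, 5),
    "conv2": conv_params(6, 16, 5),
    "fc1": linear_params(16 * 5 * 5, 120),
    "fc2": linear_params(120, 84),
    "fc3": linear_params(84, 10),
}
total_params = sum(layer_params.values())

for name, count in layer_params.items():
    print(f"{name}: {count}")
print("total:", total_params)  # total: 62006
```

With roughly 62 thousand parameters and a batch size of 4, each step does very little arithmetic, so it is plausible that per-step overhead such as copying each batch to the GPU outweighs the faster computation.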

Runtime environment

Operating system: Windows 10

GPU: GTX 1080 Ti

Python 3.8.8

matplotlib 3.4.0

PyTorch 1.8.1

CUDA 10.2
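To compare a local setup against the versions listed above, one option is to query the installed package metadata. This is only a sketch: the helper `installed_version` is a hypothetical name, and it assumes the packages are registered under the distribution names `torch`, `torchvision` and `matplotlib`, which may not hold for every installation method.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is not found."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Distribution names are assumptions; adjust them if your environment differs.
for package in ("torch", "torchvision", "matplotlib"):
    print(package, installed_version(package) or "not installed")
```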

Appendix: installation commands, for reference only

CPU environment:

pip install matplotlib

conda install pytorch torchvision torchaudio cpuonly -c pytorch

GPU environment:

pip install matplotlib

conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# @Time : 2021/3/29 10:02

# @Author : LiShan

# @Email : [email protected]

# @File : classifier.py

# @Note : Train a 10-class image classifier on the CIFAR10 dataset

import os

import time

import matplotlib.pyplot as plt

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import torchvision

import torchvision.transforms as transforms

# Prepare the dataset
def dataset():
    # Convert images to tensors and normalize each channel
    transform = transforms.Compose([
        transforms.ToTensor(),  # uint8 0~255 -> float tensor 0.0~1.0
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),  # mean (R,G,B), std (R,G,B)
    ])  # values end up in [-1, 1]
    # Download only if the extracted data is not already on disk
    download = not os.path.exists("./data/cifar-10-batches-py")
    trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=download, transform=transform)
    testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=download, transform=transform)
    # Load the training set
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)
    print("Training batches:", len(trainloader))  # len() of a DataLoader counts batches, not samples
    # Load the test set
    testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)
    print("Test batches:", len(testloader))
    # Define the target classes
    classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
    return trainloader, testloader, classes
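Two pieces of arithmetic in `dataset()` are easy to misread, so here is a small plain-Python sketch of both. It shows how a mean and standard deviation of 0.5 map pixel values from [0, 1] to [-1, 1] (and how `img / 2 + 0.5` in `imshow` reverses that), and why `len()` of a loader reports batches rather than images. The function names are hypothetical, and the sample counts are CIFAR10's 50000 training and 10000 test images.

```python
import math


def normalize(x, mean=0.5, std=0.5):
    """Per-value formula applied by Normalize: (x - mean) / std."""
    return (x - mean) / std


def unnormalize(y, mean=0.5, std=0.5):
    """Inverse of normalize; with the defaults this equals y / 2 + 0.5."""
    return y * std + mean


def num_batches(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields for a dataset of num_samples items."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


print(normalize(0.0), normalize(1.0))  # -1.0 1.0
print(unnormalize(normalize(0.25)))  # 0.25
print(num_batches(50000, 4), num_batches(10000, 4))  # 12500 2500
```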

# Display an image and save it to disk
def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.cpu().numpy()  # .cpu() is a no-op for tensors already on the CPU
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C)
    os.makedirs("./img", exist_ok=True)
    plt.savefig("./img/demo.jpg")
    plt.show()

# Define the neural network
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, (5, 5))
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, (5, 5))
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)  # flatten to (batch, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x
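The `16 * 5 * 5` input size of `fc1` follows from the 32x32 CIFAR10 images passing through two rounds of 5x5 convolution (no padding, stride 1) and 2x2 max pooling. The sketch below works through that arithmetic with the standard output-size formula; `conv_out` and `pool_out` are illustrative helper names, not PyTorch functions.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width or height of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel, stride):
    """Output width or height of a pooling layer without padding."""
    return (size - kernel) // stride + 1


size = 32  # CIFAR10 images are 32x32
size = pool_out(conv_out(size, 5), 2, 2)  # conv1: 32 -> 28, pool: 28 -> 14
size = pool_out(conv_out(size, 5), 2, 2)  # conv2: 14 -> 10, pool: 10 -> 5
flat_features = 16 * size * size  # 16 output channels from conv2

print(size, flat_features)  # 5 400
```

If the kernel sizes or the input resolution change, the same calculation gives the new value to use in both `nn.Linear(...)` and `x.view(...)`.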

# Define the loss function and the optimizer
def loss(net):
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
    return criterion, optimizer

# Training function: network, loss criterion, optimizer, training loader
def train(net, criterion, optimizer, trainloader):
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    for epoch in range(1):  # loop over the dataset multiple times
        running_loss = 0.0
        avr_loss_rate = []  # average loss so far in this epoch, one entry per batch
        for i, data in enumerate(trainloader, 0):
            # get the inputs; .to(device) is a no-op when the device is the CPU
            inputs, labels = data[0].to(device), data[1].to(device)
            # zero the parameter gradients
            optimizer.zero_grad()
            # forward + backward + optimize
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            # print statistics
            running_loss += loss.item()
            avr_loss_rate.append(running_loss / (i + 1))
            if i % 1000 == 999:  # print every 1000 mini-batches
                print("[%d, %5d] avr_loss: %.3f" % (epoch + 1, i + 1, avr_loss_rate[-1]))
                # print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 1000))
                # running_loss = 0.0
        print("[%d] avr_loss: %.3f" % (epoch + 1, avr_loss_rate[-1]))
        # Plot and save the average-loss curve for this epoch
        plt.plot(avr_loss_rate)
        plt.savefig("./{}.jpg".format(epoch))
        plt.show()
    print('Finished Training')
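Unlike the official tutorial, which resets `running_loss` after each report, `train()` keeps accumulating it, so `avr_loss_rate` holds the mean loss over all batches seen so far in the epoch. A minimal plain-Python sketch of that cumulative mean, using made-up loss values and a hypothetical function name:

```python
def cumulative_mean(values):
    """Return the mean of values[0..i] for every position i."""
    running_total = 0.0
    means = []
    for i, value in enumerate(values):
        running_total += value
        means.append(running_total / (i + 1))
    return means


losses = [2.0, 1.0, 3.0, 2.0]  # made-up per-batch losses, for illustration only
print(cumulative_mean(losses))  # [2.0, 1.5, 2.0, 2.0]
```

A cumulative mean gives a smooth curve, but it reacts slowly late in the epoch because early, high-loss batches stay in the average.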

# Save the network weights
def save(net, path):
    torch.save(net.state_dict(), path)

# Evaluate a network given its weight file and the test loader
# Note: this function reads the module-level `classes` tuple created in the __main__ block
def test(net, path, testloader):
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # Load the saved weights onto the current device
    net.load_state_dict(torch.load(path, map_location=device))
    net.to(device)
    # Fetch one batch of test samples
    data = next(iter(testloader))
    images, labels = data[0].to(device), data[1].to(device)
    imshow(torchvision.utils.make_grid(images))
    print('GroundTruth: ', ' '.join('%5s' % classes[labels[j]] for j in range(len(labels))))
    # Predictions of the network for this batch
    with torch.no_grad():
        outputs = net(images)
    _, predicted = torch.max(outputs, 1)
    print('Predicted: ', ' '.join('%5s' % classes[predicted[j]] for j in range(len(predicted))))
    # Accuracy over the whole test set
    correct = 0
    total = 0
    with torch.no_grad():
        for data in testloader:
            images, labels = data[0].to(device), data[1].to(device)
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))
    # Accuracy for each class
    class_correct = list(0. for _ in range(len(classes)))
    class_total = list(0. for _ in range(len(classes)))
    with torch.no_grad():
        for data in testloader:
            images, labels = data[0].to(device), data[1].to(device)
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)
            c = (predicted == labels)
            for i in range(labels.size(0)):  # works for any batch size, including a short last batch
                label = labels[i].item()
                class_correct[label] += c[i].item()
                class_total[label] += 1
    for i in range(len(classes)):
        print('Accuracy of %5s : %2d %%' % (
            classes[i], 100 * class_correct[i] / class_total[i]))
    print('Finished Testing')
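The per-class bookkeeping in `test()` is easier to follow without tensors. Below is a minimal sketch of the same counting logic on plain Python lists; the function name, the sample labels and the choice to return `None` for a class with no samples are all assumptions made for this illustration.

```python
def per_class_accuracy(labels, predictions, num_classes):
    """Return the accuracy of each class, or None for classes with no samples."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for label, prediction in zip(labels, predictions):
        total[label] += 1
        if label == prediction:
            correct[label] += 1
    return [c / t if t else None for c, t in zip(correct, total)]


# Made-up ground truth and predictions for four classes (class 3 never appears)
labels = [0, 0, 1, 1, 1, 2]
predictions = [0, 1, 1, 1, 0, 2]
accuracies = per_class_accuracy(labels, predictions, num_classes=4)
print(accuracies)
```

Returning `None` for an empty class avoids a division by zero. The script does not need that guard for CIFAR10, since every class is present in the test set.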

if __name__ == '__main__':
    # Check whether a GPU is available
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    if torch.cuda.is_available():
        print("GPU environment found; training will use the GPU")
    else:
        print("No GPU environment found; training will use the CPU")
    # Prepare the data: training set, test set, target classes
    trainloader, testloader, classes = dataset()
    # Fetch one random batch of training samples to show the data
    images, labels = next(iter(trainloader))
    # Show the images
    imshow(torchvision.utils.make_grid(images))
    # Print the image labels
    print(' '.join('%5s' % classes[labels[j]] for j in range(len(labels))))
    # Build the network and move it to the selected device
    net = Net()
    net.to(device)
    # Set up the loss function and the optimizer
    criterion, optimizer = loss(net)
    # Train the network
    start = time.time()
    train(net, criterion, optimizer, trainloader)
    end = time.time()
    print("Training time: {} seconds".format(end - start))
    # Save the trained network
    path = './cifar_net.pth'
    save(net, path)
    # Test the network
    test(net, path, testloader)

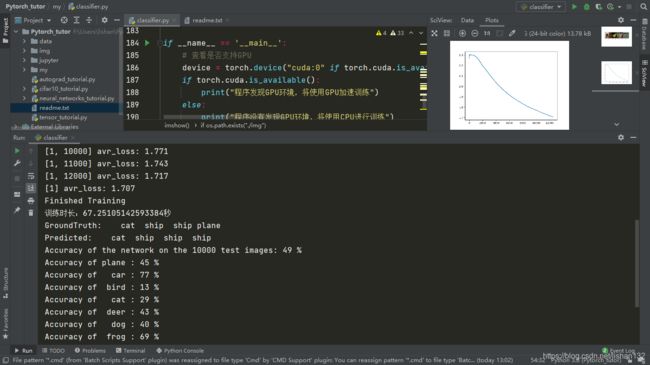

Results when running on the CPU

Training results on the GPU

Training for 10 epochs on the CPU