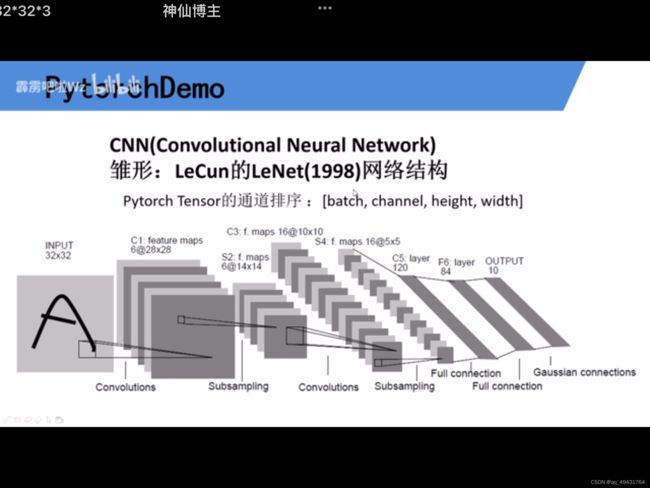

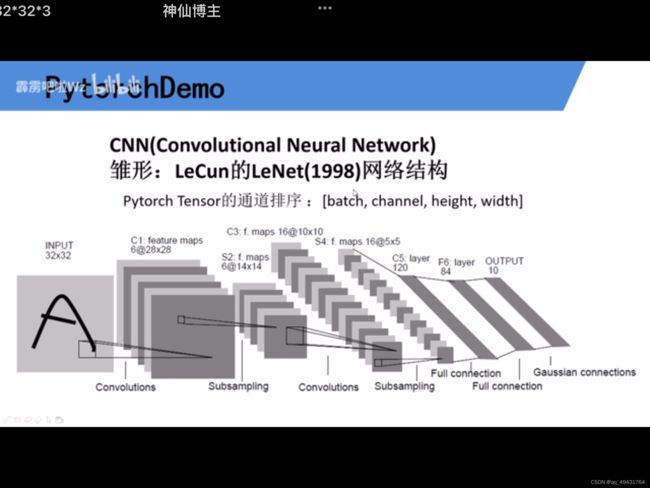

Building LeNet and training it on CIFAR10

1. model.py

import torch.nn as nn
import torch.nn.functional as F

# Detailed explanation: http://lihuaxi.xjx100.cn/news/105304.html
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()  # https://blog.csdn.net/qq_31244453/article/details/104657532
        self.conv1 = nn.Conv2d(3, 16, 5)  # args: in_channels (input channels), out_channels (number of kernels), kernel_size
        self.pool1 = nn.MaxPool2d(2, 2)   # args: kernel_size, stride (defaults to kernel_size if omitted)
        self.conv2 = nn.Conv2d(16, 32, 5)
        self.pool2 = nn.MaxPool2d(2, 2)
        self.fc1 = nn.Linear(32*5*5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.relu(self.conv1(x))  # input(3, 32, 32) output(16, 28, 28)
        x = self.pool1(x)          # output(16, 14, 14)
        x = F.relu(self.conv2(x))  # output(32, 10, 10)
        x = self.pool2(x)          # output(32, 5, 5)
        # print(x.shape)  # torch.Size([32, 32, 5, 5]) for a batch of 32
        x = x.view(-1, 32*5*5)     # output(32*5*5): flatten each sample into a 1-D vector (alternatively x.view(x.shape[0], -1))
        # -1 stands for a size you leave unspecified: if you are unsure how many rows you want
        # but certain about the number of columns (800 here), write -1 and PyTorch infers it.
        # print(x)
        # print(x.shape)  # torch.Size([32, 800])
        x = F.relu(self.fc1(x))    # output(120)
        x = F.relu(self.fc2(x))    # output(84)
        x = self.fc3(x)            # output(10); the output layer is usually left without ReLU (raw scores, i.e. logits)
        # print(x.size())  # torch.Size([32, 10])
        return x

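if __name__ == '__main__':
    # Quick shape check; the guard keeps it from running when train.py / predict.py import LeNet
    import torch

    input1 = torch.rand([32, 3, 32, 32])
    # [batch, channel, height, width]: batch_size=32, 3 channels (1 for grayscale), image size 32x32
    # print(input1)
    model = LeNet()
    # print(model)
    output = model(input1)  # runs model.forward(input1) via nn.Module.__call__
    # print(output.size())  # torch.Size([32, 10])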
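The shape comments in `forward` follow from the usual output-size formula for convolution and pooling layers, `out = floor((W - F + 2P) / S) + 1`, with input width `W`, kernel size `F`, padding `P` and stride `S` (assuming dilation 1, which is the default here). The plain-Python sketch below traces a 32x32 CIFAR10 image through the layers as configured in `model.py`; `conv_out` and `lenet_feature_shape` are hypothetical helper names for illustration, not PyTorch functions. It shows where the `32*5*5 = 800` input size of `fc1` comes from.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of Conv2d / MaxPool2d (dilation=1, floor rounding)."""
    return (size - kernel + 2 * padding) // stride + 1


def lenet_feature_shape(size: int = 32) -> tuple[int, int, int]:
    """Trace (channels, height, width) through the LeNet layers of model.py."""
    h = conv_out(size, kernel=5)         # conv1: 5x5 kernel, stride 1
    h = conv_out(h, kernel=2, stride=2)  # pool1: 2x2 window, stride 2
    h = conv_out(h, kernel=5)            # conv2: 5x5 kernel, stride 1
    h = conv_out(h, kernel=2, stride=2)  # pool2: 2x2 window, stride 2
    return (32, h, h)                    # conv2 produces 32 channels


c, h, w = lenet_feature_shape()
print((c, h, w), c * h * w)  # (32, 5, 5) 800
```

If the input resolution or a kernel size changes, the first argument of `fc1` has to change with it; `view(-1, 32*5*5)` then infers the batch dimension from the total element count.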

2. train.py

import torch
import torchvision
import torch.nn as nn
from model import LeNet
import torch.optim as optim
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np

# transforms.Compose chains several preprocessing steps into one transform:
# 1. ToTensor converts a PIL image or NumPy array (H x W x C, values 0-255) into a float tensor (C x H x W, values in [0, 1])
# 2. Normalize standardizes each channel of the tensor with the given mean and standard deviation
# def main():
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
# https://blog.csdn.net/jzwong/article/details/104272600

# 50,000 training images
# Set download=True the first time so the dataset is downloaded automatically
# root: where to store the dataset (usually a data folder under the current directory);
# train=True loads the training split; transform is the preprocessing applied to each image
train_set = torchvision.datasets.CIFAR10(root='./data', train=True,
                                         download=False, transform=transform)
# Wrap the dataset in a loader: each batch holds 36 randomly drawn images, shuffle=True reshuffles every epoch
train_loader = torch.utils.data.DataLoader(train_set, batch_size=36,
                                           shuffle=True, num_workers=0)

# 10,000 validation images
# Set download=True the first time so the dataset is downloaded automatically
val_set = torchvision.datasets.CIFAR10(root='./data', train=False,
                                       download=False, transform=transform)
val_loader = torch.utils.data.DataLoader(val_set, batch_size=10000,
                                         shuffle=False, num_workers=0)
# print(val_loader)
val_data_iter = iter(val_loader)  # iter turns val_loader into an iterator
val_image, val_label = next(val_data_iter)  # next() fetches one batch (here all 10,000 images) plus their labels
# Recent PyTorch versions removed the iterator's .next() method, so use the built-in next()
# print(val_image.size())  # torch.Size([10000, 3, 32, 32])
# print(val_label.size())  # torch.Size([10000])

classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
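With mean 0.5 and std 0.5 for every channel, `Normalize` computes `(x - 0.5) / 0.5`, mapping the `[0, 1]` values produced by `ToTensor` onto `[-1, 1]`; the commented-out `imshow` helper reverses this with `img / 2 + 0.5`. A minimal per-value sketch in plain Python (the function names `normalize` and `unnormalize` are hypothetical, chosen for illustration):

```python
def normalize(x: float, mean: float = 0.5, std: float = 0.5) -> float:
    """What transforms.Normalize does to one value of one channel."""
    return (x - mean) / std


def unnormalize(y: float) -> float:
    """Inverse used by the commented-out imshow helper: img / 2 + 0.5."""
    return y / 2 + 0.5


# ToTensor scales 8-bit pixel values 0..255 into [0.0, 1.0]
for pixel in (0, 128, 255):
    x = pixel / 255
    y = normalize(x)
    print(pixel, round(x, 3), round(y, 3), round(unnormalize(y), 3))
```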

# def imshow(img):
#     img = img / 2 + 0.5  # unnormalize: undo the Normalize step above
#     npimg = img.numpy()  # convert to a NumPy array
#     plt.imshow(np.transpose(npimg, (1, 2, 0)))  # tensor is (channel, height, width), matplotlib expects (height, width, channel): axes (0, 1, 2) -> (1, 2, 0)
#     plt.show()  # display the image
#
# # print labels
# print(' '.join(f'{classes[val_label[j]]:5s}' for j in range(4)))
#
# # show images (only the first 4, matching the printed labels)
# imshow(torchvision.utils.make_grid(val_image[:4]))
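The training loop uses `nn.CrossEntropyLoss`, which takes the raw outputs (logits) of `fc3` and applies log-softmax internally before the negative log-likelihood; that is why the last layer has no ReLU or softmax. The plain-Python sketch below computes the same per-sample quantity, `-log(softmax(z)[y])`, with the log-sum-exp trick for numerical stability. It is an illustration rather than PyTorch's implementation, and `cross_entropy` / `mean_cross_entropy` are hypothetical names.

```python
import math


def cross_entropy(logits: list[float], target: int) -> float:
    """-log(softmax(logits)[target]) computed via log-sum-exp."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits: list[list[float]], targets: list[int]) -> float:
    """Average over the batch, like the default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


print(round(cross_entropy([0.0] * 10, 3), 4))  # 2.3026, i.e. log(10)
print(cross_entropy([10.0, 0.0], 0) < cross_entropy([0.0, 10.0], 0))  # True
```

With equal logits over 10 classes the loss is `log(10) ≈ 2.303`, so an untrained CIFAR10 classifier usually starts with a loss roughly around that value.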

net = LeNet()
loss_function = nn.CrossEntropyLoss()
optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(5):  # loop over the dataset multiple times (number of training epochs)
    running_loss = 0.0  # accumulated loss
    for step, data in enumerate(train_loader, start=0):  # iterate over the training set;
        # enumerate yields each batch (data) together with its step index
        # get the inputs; data is a list of [inputs, labels]:
        # inputs is a batch of 3x32x32 images, labels holds their class indices (0-9)
        inputs, labels = data
        # print(inputs.size())  # torch.Size([36, 3, 32, 32])
        # print(labels.size())  # torch.Size([36])
        # print(inputs, labels)

        # zero the parameter gradients: backward() accumulates into .grad, so clear the
        # previous step's gradients (skipping this on purpose lets you accumulate
        # gradients over several small batches)
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        # print(outputs.size())  # torch.Size([36, 10])
        # print(outputs)
        loss = loss_function(outputs, labels)
        # print(labels.size())  # torch.Size([36])
        # print(labels)
        loss.backward()   # backpropagation
        optimizer.step()  # parameter (weight) update

        # print loss and accuracy
        running_loss += loss.item()  # add each batch's loss to running_loss
        if step % 500 == 499:  # print every 500 mini-batches
            with torch.no_grad():  # no gradient tracking inside this block, saving memory and compute
                outputs = net(val_image)  # forward pass [batch, 10]
                # print("output", outputs.size())  # output torch.Size([10000, 10])
                predict_y = torch.max(outputs, dim=1)[1]  # torch.max returns (values, indices); the index of the largest output is the predicted class
                # dim=1: search along dimension 1 of [batch, 10], because dimension 0 is the batch;
                # the maximum is taken over the 10 output nodes of each sample
                # print(torch.max(outputs, dim=1))  # values=tensor([0.9726, 4.1877, 2.7064, ..., 3.2531, 1.4753, 2.6609]),
                #                                   # indices=tensor([3, 8, 1, ..., 5, 1, 7]))
                # print(predict_y)  # tensor([3, 8, 1, ..., 5, 1, 7])
                # print(predict_y.size())  # torch.Size([10000])
                accuracy = torch.eq(predict_y, val_label).sum().item() / val_label.size(0)
                # print(val_label.size())  # torch.Size([10000])
                # val_label.size(0) is equivalent to val_label.size()[0], i.e. the batch size
                # compare predicted and true labels and sum the matches to count correct samples;
                # the sum is a tensor, so .item() extracts the Python number
                print('[%d, %5d] train_loss: %.3f  test_accuracy: %.3f' %
                      (epoch + 1, step + 1, running_loss / 500, accuracy))
                running_loss = 0.0

print('Finished Training')
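Because `loss.backward()` adds new gradients into each parameter's `.grad` instead of overwriting it, the loop calls `optimizer.zero_grad()` every step. Skipping that call on purpose gives gradient accumulation: summing the gradients of k equal-sized small batches, each loss divided by k, before one `optimizer.step()` reproduces the gradient of a single k-times-larger batch (for a mean-reduced loss). The toy below checks that equivalence in plain Python for a one-parameter linear model with mean-squared error; the model and the helpers `grad_mse` / `accumulated_grad` are hypothetical and only mirror what autograd does.

```python
def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient d/dw of mean((w * x - y) ** 2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list[float], ys: list[float],
                     micro_batch: int) -> float:
    """Accumulate gradients over equal-sized micro-batches, each scaled by 1/k.

    Mirrors calling (loss / k).backward() k times without optimizer.zero_grad()
    in between; assumes len(xs) is divisible by micro_batch.
    """
    k = len(xs) // micro_batch
    grad = 0.0  # plays the role of .grad right after optimizer.zero_grad()
    for i in range(k):
        start = i * micro_batch
        chunk_x = xs[start:start + micro_batch]
        chunk_y = ys[start:start + micro_batch]
        grad += grad_mse(w, chunk_x, chunk_y) / k  # backward() adds into .grad
    return grad


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
w = 0.5
print(grad_mse(w, xs, ys), accumulated_grad(w, xs, ys, micro_batch=2))  # -22.5 -22.5
```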

save_path = './Lenet.pth'
torch.save(net.state_dict(), save_path)

# if __name__ == '__main__':
#     main()
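The validation step reduces a `[batch, 10]` output to predicted class indices with `torch.max(outputs, dim=1)[1]` (dimension 0 is the batch, so the search runs across the class scores of each row) and then counts matches with `torch.eq(...).sum()`. The same logic on nested Python lists, as an illustrative sketch with hypothetical helper names and made-up scores:

```python
def argmax_rows(outputs: list[list[float]]) -> list[int]:
    """Index of the largest score in each row, like torch.max(outputs, dim=1)[1]."""
    return [max(range(len(row)), key=row.__getitem__) for row in outputs]


def accuracy(outputs: list[list[float]], labels: list[int]) -> float:
    """Fraction of correct predictions, like
    torch.eq(predict_y, val_label).sum().item() / val_label.size(0)."""
    preds = argmax_rows(outputs)
    correct = sum(int(p == y) for p, y in zip(preds, labels))
    return correct / len(labels)


# Made-up scores for 4 samples and 3 classes (batch dimension first)
outputs = [[0.1, 2.0, -1.0],
           [3.0, 0.5, 0.2],
           [0.0, 0.1, 0.9],
           [1.0, 0.0, 2.0]]
labels = [1, 0, 1, 2]
print(argmax_rows(outputs))       # [1, 0, 2, 2]
print(accuracy(outputs, labels))  # 0.75
```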

3. predict.py

import torch
import torchvision.transforms as transforms
from PIL import Image

from model import LeNet


def main():
    transform = transforms.Compose(
        [transforms.Resize((32, 32)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    classes = ('plane', 'car', 'bird', 'cat',
               'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

    net = LeNet()
    net.load_state_dict(torch.load('Lenet.pth'))
    net.eval()  # inference mode (no effect on this LeNet, which has no dropout/batch norm, but good practice)

    im = Image.open('1.jpg').convert('RGB')  # force 3 channels, e.g. for grayscale or RGBA inputs
    im = transform(im)  # [C, H, W]
    im = torch.unsqueeze(im, dim=0)  # add a batch dimension: [N, C, H, W]

    with torch.no_grad():
        outputs = net(im)
        print(outputs)
        # print(outputs.size())
        predict = torch.max(outputs, dim=1)[1].item()  # index of the predicted class as a Python int
    print(predict)
    print(classes[predict])


if __name__ == '__main__':
    main()
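`predict.py` prints the raw logits. To report confidences instead, the logits can be passed through softmax (in PyTorch, `torch.softmax(outputs, dim=1)`); softmax preserves the ordering of the scores, so the predicted class stays the same. A plain-Python sketch with made-up logits (the values and the `top_k` helper are hypothetical, not real model output):

```python
import math

CLASSES = ('plane', 'car', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck')


def softmax(logits: list[float]) -> list[float]:
    """Turn raw scores into probabilities summing to 1 (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: list[float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k most likely (class name, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)
    return [(CLASSES[i], probs[i]) for i in ranked[:k]]


# Made-up logits for one image
logits = [0.2, -1.0, 1.5, 3.1, 0.4, 2.0, -0.5, 0.0, -2.0, 0.3]
for name, p in top_k(logits):
    print(f'{name:5s} {p:.3f}')
```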