Exploring the CIFAR-10 Dataset

365-Day Deep Learning Training Camp

Week 2: Color Image Classification

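- Topic: color image classification
- Difficulty: beginner
- Language: Python 3, TensorFlow 2
- Dates: August 1 to August 5
- Requirements:
  - Learn how to write a complete deep learning program
  - Understand how classifying color images differs from classifying grayscale images
  - Reach 72% accuracy on the test set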

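Reference article: 100 Deep Learning Examples: Color Image Classification with a Convolutional Neural Network (CNN) | Day 2

Sign up: 365-Day Deep Learning Training Camp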

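Compared with the previous week (handwritten digit recognition):

- The images change from simple black-and-white (grayscale) images to color images. In other words, each image now has three channels (RGB) instead of one.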
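A short NumPy sketch makes the channel difference concrete. It uses random arrays as stand-ins for MNIST-style and CIFAR-10-style batches, so no real data is involved. A grayscale batch needs an explicit trailing channel axis before a Conv2D layer will accept it, while an RGB batch already has one. The last step collapses RGB to a single channel using the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). That is one common convention, not the only one.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins: 8 grayscale 28x28 images and 8 RGB 32x32 images
gray_batch = rng.integers(0, 256, size=(8, 28, 28), dtype=np.uint8)
rgb_batch = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)

# Grayscale: add a trailing channel axis -> (N, H, W, 1)
gray_with_channel = gray_batch[..., np.newaxis]

# RGB already carries 3 channels -> (N, H, W, 3)
print(gray_with_channel.shape)  # (8, 28, 28, 1)
print(rgb_batch.shape)          # (8, 32, 32, 3)

# Collapse RGB to one channel with BT.601 luma weights (one common choice)
luma_weights = np.array([0.299, 0.587, 0.114])
rgb_as_gray = rgb_batch.astype(np.float64) @ luma_weights  # -> (N, H, W)
print(rgb_as_gray.shape)        # (8, 32, 32)
```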

1. Import the Libraries

import tensorflow as tf
from tensorflow import keras
# Import the layers and models from tf.keras so they match the keras module used for the dataset
from tensorflow.keras.layers import Dense, Input, Conv2D, MaxPool2D, Flatten, Dropout
from tensorflow.keras.models import Model, Sequential
from tensorflow.keras.optimizers import Adam
import matplotlib.pyplot as plt


2. Load the Dataset

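Dataset description: CIFAR-10 is a dataset for machine learning and image recognition, collected and curated by CIFAR (Canadian Institute For Advanced Research). It contains 60,000 color images of 32 × 32 pixels in 10 classes. Of these, 50,000 are training images and 10,000 are test images.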

The 10 classes are: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck.

(train_images, train_labels),(test_images, test_labels) = keras.datasets.cifar10.load_data()

dict_labels = {0:'airplane',1:'automobile',2:'bird',3:'cat',4:'deer',5:'dog',6:'frog',7:'horse',8:'ship',9:'truck'}

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

170498071/170498071 [==============================] - 14s 0us/step
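The index-to-name mapping does not have to be typed out by hand. It can be derived from an ordered list of class names with `enumerate`, which rules out numbering mistakes. The pure-Python sketch below assumes the list order matches label indices 0 to 9 as given above. Note that `load_data()` returns labels with shape (N, 1), which is why the preview code indexes `train_labels[i][0]`.

```python
# Class names in label-index order (0 = airplane, ..., 9 = truck)
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']

# Equivalent to the hand-written dict_labels: {0: 'airplane', 1: 'automobile', ...}
dict_labels = dict(enumerate(class_names))

# Labels from load_data() are nested rows like [[6], [9], ...]; take element 0 of each
sample_labels = [[6], [9], [9], [4]]  # hypothetical example rows
decoded = [dict_labels[row[0]] for row in sample_labels]
print(decoded)  # ['frog', 'truck', 'truck', 'deer']
```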

3. Preview the Training Set

plt.figure(figsize=(10, 10))
for i in range(20):
    plt.subplot(5, 5, i + 1)
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    # RGB images are drawn in their own colors, so no colormap is needed
    plt.imshow(train_images[i])
    # train_labels has shape (N, 1), hence the [0]
    plt.xlabel(dict_labels[train_labels[i][0]])
plt.show()

4. Preprocess the Data

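Three-channel RGB images are preprocessed in much the same way as black-and-white images. The arrays carry an extra channel dimension of size 3, and the pixel values are scaled from [0, 255] to [0, 1].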

train_images.shape

(50000, 32, 32, 3)

test_images.shape

(10000, 32, 32, 3)

train_images, test_images = train_images/255.0, test_images/255.0
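Dividing by 255.0 maps the uint8 pixel range [0, 255] onto [0, 1]. The NumPy sketch below uses a random stand-in batch rather than the real images. It shows one side effect of this step: dividing a uint8 array by a Python float produces float64. Casting to float32 first halves the memory of the 50,000-image training array, and Keras computes in float32 by default anyway.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-in for a CIFAR-10 batch: uint8 pixels in [0, 255]
images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)

scaled_f64 = images / 255.0                     # uint8 / float -> float64
scaled_f32 = images.astype(np.float32) / 255.0  # stays float32

print(scaled_f64.dtype, scaled_f32.dtype)  # float64 float32

# Memory needed for the full training set (50000 x 32 x 32 x 3 values)
n_values = 50000 * 32 * 32 * 3
print(n_values * 8 / 1e6, "MB as float64")  # 1228.8 MB as float64
print(n_values * 4 / 1e6, "MB as float32")  # 614.4 MB as float32
```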

5. Build the Model

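# Input: 32x32 RGB images (3 channels)
feature_input = Input(shape=(32, 32, 3))

# Block 1: 64 filters; 'same' padding keeps 32x32, pooling halves it to 16x16
c1 = Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same')(feature_input)
c1 = MaxPool2D(pool_size=(2, 2))(c1)
c1 = Dropout(0.2)(c1)

# Block 2: 128 filters; 16x16 -> 8x8
c2 = Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same')(c1)
c2 = MaxPool2D(pool_size=(2, 2))(c2)
c2 = Dropout(0.2)(c2)

# Block 3: 256 filters; 8x8 -> 4x4
c3 = Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same')(c2)
c3 = MaxPool2D(pool_size=(2, 2))(c3)
c3 = Dropout(0.2)(c3)

# Classifier head: flatten 4x4x256 = 4096 features, one hidden layer, 10-way softmax
f1 = Flatten()(c3)
d1 = Dense(units=128, activation='relu')(f1)
d1 = Dropout(0.2)(d1)
output = Dense(units=10, activation='softmax')(d1)

model = Model(feature_input, output)

# Labels are integers 0-9, so the sparse variant of categorical cross-entropy is used
model.compile(optimizer=Adam(0.001), loss='sparse_categorical_crossentropy', metrics=['accuracy'])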
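`loss='sparse_categorical_crossentropy'` accepts integer class labels (0 to 9, as returned by `load_data()`) directly, so no one-hot encoding is needed. For one sample, the loss is the negative log of the softmax probability assigned to the true class. The pure-Python sketch below implements that formula. It is not Keras's actual implementation, which takes extra care with numerical stability. The sketch also explains a number in the training log below: a network that gives all 10 classes equal probability has loss ln(10) ≈ 2.3026. That matches the val_loss of epochs 1 and 2 (2.3022 and 2.3025), so the network was still at chance level then.

```python
import math

def softmax(logits):
    # Subtract the max logit for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(label, probs):
    # label is an integer class index; probs is a probability vector
    return -math.log(probs[label])

# A uniform prediction over 10 classes: every class gets probability 0.1
uniform = softmax([0.0] * 10)
print(round(sparse_categorical_crossentropy(3, uniform), 4))  # 2.3026, i.e. ln(10)

# A confident, correct prediction for class 3 gives a much smaller loss
confident = softmax([0.0, 0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
print(round(sparse_categorical_crossentropy(3, confident), 4))
```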


model.summary()

Model: "model"
_________________________________________________________________
Layer (type)                    Output Shape              Param #
=================================================================
input_1 (InputLayer)            [(None, 32, 32, 3)]       0
conv2d (Conv2D)                 (None, 32, 32, 64)        1792
max_pooling2d (MaxPooling2D)    (None, 16, 16, 64)        0
dropout (Dropout)               (None, 16, 16, 64)        0
conv2d_1 (Conv2D)               (None, 16, 16, 128)       73856
max_pooling2d_1 (MaxPooling2D)  (None, 8, 8, 128)         0
dropout_1 (Dropout)             (None, 8, 8, 128)         0
conv2d_2 (Conv2D)               (None, 8, 8, 256)         295168
max_pooling2d_2 (MaxPooling2D)  (None, 4, 4, 256)         0
dropout_2 (Dropout)             (None, 4, 4, 256)         0
flatten (Flatten)               (None, 4096)              0
dense (Dense)                   (None, 128)               524416
dropout_3 (Dropout)             (None, 128)               0
dense_1 (Dense)                 (None, 10)                1290
=================================================================
Total params: 896,522
Trainable params: 896,522
Non-trainable params: 0
_________________________________________________________________
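The Param # column can be checked by hand. A Conv2D layer with a k × k kernel, C_in input channels and C_out filters has (k·k·C_in + 1)·C_out parameters; the +1 is the bias. A Dense layer has (n_in + 1)·n_out, and pooling, dropout and flatten layers have none. With padding='same' each convolution keeps the spatial size, and each 2 × 2 max-pool halves it: 32 → 16 → 8 → 4. Flatten therefore produces 4·4·256 = 4096 features. The pure-Python sketch below reproduces the numbers in the summary above.

```python
def conv2d_params(k, c_in, c_out):
    # k*k*c_in weights per filter plus one bias per filter
    return (k * k * c_in + 1) * c_out

def dense_params(n_in, n_out):
    # Weight matrix plus one bias per output unit
    return (n_in + 1) * n_out

# Spatial size: 'same' padding keeps it, each of the three 2x2 pools halves it
size = 32
for _ in range(3):
    size //= 2
flatten_dim = size * size * 256

layers = {
    'conv2d': conv2d_params(3, 3, 64),
    'conv2d_1': conv2d_params(3, 64, 128),
    'conv2d_2': conv2d_params(3, 128, 256),
    'dense': dense_params(flatten_dim, 128),
    'dense_1': dense_params(128, 10),
}
for name, n in layers.items():
    print(f'{name:10s} {n}')
print('flatten dim:', flatten_dim)     # flatten dim: 4096
print('total:', sum(layers.values()))  # total: 896522
```

The single Dense(128) layer accounts for 524,416 of the 896,522 parameters (about 58%). This is the usual effect of flattening a feature map directly into a fully connected layer.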

# The test set is passed as validation data, so val_accuracy below is test-set accuracy
history = model.fit(train_images, train_labels, validation_data=(test_images, test_labels), batch_size=512, epochs=20, verbose=1)

Epoch 1/20

98/98 [==============================] - 27s 272ms/step - loss: 6.5774 - accuracy: 0.1016 - val_loss: 2.3022 - val_accuracy: 0.1106

Epoch 2/20

98/98 [==============================] - 24s 246ms/step - loss: 2.3014 - accuracy: 0.1085 - val_loss: 2.3025 - val_accuracy: 0.0987

Epoch 3/20

98/98 [==============================] - 25s 257ms/step - loss: 2.2365 - accuracy: 0.1530 - val_loss: 2.0413 - val_accuracy: 0.2585

Epoch 4/20

98/98 [==============================] - 25s 258ms/step - loss: 1.8970 - accuracy: 0.2962 - val_loss: 1.6350 - val_accuracy: 0.4292

Epoch 5/20

98/98 [==============================] - 25s 258ms/step - loss: 1.6378 - accuracy: 0.4050 - val_loss: 1.4260 - val_accuracy: 0.5028

Epoch 6/20

98/98 [==============================] - 25s 255ms/step - loss: 1.4965 - accuracy: 0.4634 - val_loss: 1.3223 - val_accuracy: 0.5333

Epoch 7/20

98/98 [==============================] - 25s 259ms/step - loss: 1.3920 - accuracy: 0.5044 - val_loss: 1.2111 - val_accuracy: 0.5832

Epoch 8/20

98/98 [==============================] - 25s 257ms/step - loss: 1.3014 - accuracy: 0.5395 - val_loss: 1.1273 - val_accuracy: 0.6059

Epoch 9/20

98/98 [==============================] - 25s 255ms/step - loss: 1.2342 - accuracy: 0.5640 - val_loss: 1.0519 - val_accuracy: 0.6363

Epoch 10/20

98/98 [==============================] - 25s 256ms/step - loss: 1.1710 - accuracy: 0.5897 - val_loss: 1.0454 - val_accuracy: 0.6392

Epoch 11/20

98/98 [==============================] - 25s 254ms/step - loss: 1.1112 - accuracy: 0.6088 - val_loss: 0.9404 - val_accuracy: 0.6826

Epoch 12/20

98/98 [==============================] - 25s 255ms/step - loss: 1.0676 - accuracy: 0.6288 - val_loss: 0.9205 - val_accuracy: 0.6856

Epoch 13/20

98/98 [==============================] - 25s 254ms/step - loss: 1.0190 - accuracy: 0.6447 - val_loss: 0.9234 - val_accuracy: 0.6849

Epoch 14/20

98/98 [==============================] - 25s 260ms/step - loss: 0.9938 - accuracy: 0.6570 - val_loss: 0.8526 - val_accuracy: 0.7130

Epoch 15/20

98/98 [==============================] - 25s 254ms/step - loss: 0.9471 - accuracy: 0.6696 - val_loss: 0.8378 - val_accuracy: 0.7157

Epoch 16/20

98/98 [==============================] - 25s 255ms/step - loss: 0.9251 - accuracy: 0.6768 - val_loss: 0.8582 - val_accuracy: 0.7045

Epoch 17/20

98/98 [==============================] - 25s 254ms/step - loss: 0.9005 - accuracy: 0.6879 - val_loss: 0.8318 - val_accuracy: 0.7130

Epoch 18/20

98/98 [==============================] - 25s 255ms/step - loss: 0.8719 - accuracy: 0.6966 - val_loss: 0.7779 - val_accuracy: 0.7311

Epoch 19/20

98/98 [==============================] - 25s 255ms/step - loss: 0.8441 - accuracy: 0.7053 - val_loss: 0.7546 - val_accuracy: 0.7404

Epoch 20/20

98/98 [==============================] - 25s 254ms/step - loss: 0.8201 - accuracy: 0.7141 - val_loss: 0.7747 - val_accuracy: 0.7337
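Two notes on the run above. First, the 98 steps per epoch follow from ceil(50000 / 512) = 98, with a final partial batch of 336 images. Second, because the test set is passed as validation_data, val_accuracy is really test accuracy. Choosing the learning rate or the number of epochs by watching it leaks test information into those choices. A common alternative is to shuffle the training indices once and hold out a separate validation split, then pass that split as `validation_data=(x_val, y_val)`. The NumPy sketch below shows this. The helper `train_val_split` is hypothetical, and the split size is an assumption (for example, n_val=5000 for CIFAR-10); the demo uses small stand-in arrays. Keras's `validation_split` argument to `fit` does something similar, but it takes the last fraction of the data without shuffling.

```python
import math
import numpy as np

# Steps per epoch in the log: ceil(50000 / 512)
steps = math.ceil(50000 / 512)
print(steps)  # 98

def train_val_split(x, y, n_val, seed=0):
    """Hypothetical helper: shuffle once, then hold out n_val samples for validation."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return (x[train_idx], y[train_idx]), (x[val_idx], y[val_idx])

# Small stand-in arrays with the CIFAR-10 layout: images (N, 32, 32, 3), labels (N, 1)
x = np.zeros((1000, 32, 32, 3), dtype=np.float32)
y = np.arange(1000).reshape(-1, 1) % 10
(x_tr, y_tr), (x_val, y_val) = train_val_split(x, y, n_val=100)
print(x_tr.shape, x_val.shape)  # (900, 32, 32, 3) (100, 32, 32, 3)
```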

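# Second phase: recompile with a 10x smaller learning rate and train 20 more epochs.
# The trained weights are kept; the new Adam optimizer starts with fresh moment estimates.
model.compile(optimizer=Adam(0.0001), loss='sparse_categorical_crossentropy', metrics=['accuracy'])

history_2 = model.fit(train_images, train_labels, validation_data=(test_images, test_labels), batch_size=512, epochs=20, verbose=1)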

Epoch 1/20

98/98 [==============================] - 26s 261ms/step - loss: 0.7555 - accuracy: 0.7356 - val_loss: 0.6947 - val_accuracy: 0.7606

Epoch 2/20

98/98 [==============================] - 25s 256ms/step - loss: 0.7320 - accuracy: 0.7448 - val_loss: 0.6943 - val_accuracy: 0.7609

Epoch 3/20

98/98 [==============================] - 26s 261ms/step - loss: 0.7221 - accuracy: 0.7468 - val_loss: 0.6848 - val_accuracy: 0.7654

Epoch 4/20

98/98 [==============================] - 25s 256ms/step - loss: 0.7126 - accuracy: 0.7516 - val_loss: 0.6739 - val_accuracy: 0.7697

Epoch 5/20

98/98 [==============================] - 25s 255ms/step - loss: 0.7102 - accuracy: 0.7497 - val_loss: 0.6758 - val_accuracy: 0.7681

Epoch 6/20

98/98 [==============================] - 25s 256ms/step - loss: 0.6990 - accuracy: 0.7576 - val_loss: 0.6784 - val_accuracy: 0.7668

Epoch 7/20

98/98 [==============================] - 25s 260ms/step - loss: 0.6949 - accuracy: 0.7571 - val_loss: 0.6726 - val_accuracy: 0.7691

Epoch 8/20

98/98 [==============================] - 26s 264ms/step - loss: 0.6851 - accuracy: 0.7610 - val_loss: 0.6743 - val_accuracy: 0.7709

Epoch 9/20

98/98 [==============================] - 26s 263ms/step - loss: 0.6721 - accuracy: 0.7644 - val_loss: 0.6680 - val_accuracy: 0.7703

Epoch 10/20

98/98 [==============================] - 26s 264ms/step - loss: 0.6693 - accuracy: 0.7668 - val_loss: 0.6720 - val_accuracy: 0.7689

Epoch 11/20

98/98 [==============================] - 26s 263ms/step - loss: 0.6626 - accuracy: 0.7670 - val_loss: 0.6637 - val_accuracy: 0.7689

Epoch 12/20

98/98 [==============================] - 26s 262ms/step - loss: 0.6600 - accuracy: 0.7717 - val_loss: 0.6551 - val_accuracy: 0.7769

Epoch 13/20

98/98 [==============================] - 26s 262ms/step - loss: 0.6536 - accuracy: 0.7703 - val_loss: 0.6635 - val_accuracy: 0.7727

Epoch 14/20

98/98 [==============================] - 26s 262ms/step - loss: 0.6446 - accuracy: 0.7755 - val_loss: 0.6572 - val_accuracy: 0.7748

Epoch 15/20

98/98 [==============================] - 25s 259ms/step - loss: 0.6428 - accuracy: 0.7750 - val_loss: 0.6475 - val_accuracy: 0.7752

Epoch 16/20

98/98 [==============================] - 25s 257ms/step - loss: 0.6379 - accuracy: 0.7764 - val_loss: 0.6524 - val_accuracy: 0.7755

Epoch 17/20

98/98 [==============================] - 25s 259ms/step - loss: 0.6281 - accuracy: 0.7781 - val_loss: 0.6457 - val_accuracy: 0.7770

Epoch 18/20

98/98 [==============================] - 26s 261ms/step - loss: 0.6218 - accuracy: 0.7814 - val_loss: 0.6459 - val_accuracy: 0.7757

Epoch 19/20

98/98 [==============================] - 25s 259ms/step - loss: 0.6173 - accuracy: 0.7842 - val_loss: 0.6422 - val_accuracy: 0.7775

Epoch 20/20

98/98 [==============================] - 25s 258ms/step - loss: 0.6072 - accuracy: 0.7854 - val_loss: 0.6389 - val_accuracy: 0.7802
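The second phase recompiles the model with Adam(0.0001), a learning rate ten times smaller. In the logs above, this raised val_accuracy from 0.7337 at the end of the first run to 0.7802 after 20 more epochs. The same two-stage idea can also be written as a single run with a step schedule. The sketch below shows such a schedule function. The 20-epoch boundary mirrors this article, and the name `step_schedule` is hypothetical. `tf.keras.callbacks.LearningRateScheduler` accepts a function with this `(epoch, lr)` signature, where `epoch` counts from 0.

```python
def step_schedule(epoch, lr):
    """Hypothetical schedule: 1e-3 for epochs 0-19, then 1e-4 (epoch is 0-based).

    The current lr argument is ignored here; it is part of the signature that
    tf.keras.callbacks.LearningRateScheduler passes to the schedule function.
    """
    return 1e-3 if epoch < 20 else 1e-4

print([step_schedule(e, None) for e in (0, 19, 20, 39)])  # [0.001, 0.001, 0.0001, 0.0001]
```

With TensorFlow installed, compile once with Adam(0.001). Then pass `callbacks=[tf.keras.callbacks.LearningRateScheduler(step_schedule)]` to a single `model.fit(..., epochs=40)` call. Unlike recompiling, this keeps the same optimizer, so Adam's moment estimates carry over the switch.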

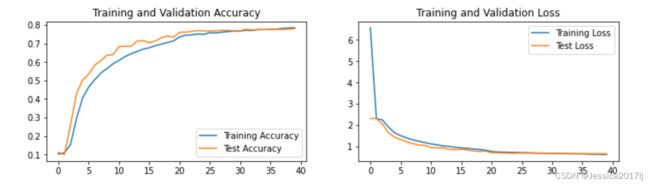

df_val_loss = history.history['val_loss']+history_2.history['val_loss']

df_val_acc = history.history['val_accuracy']+history_2.history['val_accuracy']

df_loss = history.history['loss']+history_2.history['loss']

df_acc = history.history['accuracy']+history_2.history['accuracy']

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)

size = len(df_acc)

plt.plot( df_acc, label='Training Accuracy')

plt.plot( df_val_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot( df_loss, label='Training Loss')

plt.plot( df_val_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()
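The two histories are concatenated into one 40-entry list, so the epoch with the highest validation accuracy can be read off directly instead of estimated from the plot. The pure-Python sketch below uses a hypothetical helper, `best_epoch`. The sample values are the 20 val_accuracy entries of the second run, copied from the log above, and they correspond to epochs 21 to 40 overall. Applied to the full concatenated validation-accuracy list, the helper returns the overall epoch number directly.

```python
def best_epoch(val_acc):
    """Hypothetical helper: return (1-based epoch, value) of the highest val accuracy."""
    idx = max(range(len(val_acc)), key=val_acc.__getitem__)
    return idx + 1, val_acc[idx]

# val_accuracy of the second run (epochs 21-40 overall), copied from the log
run2 = [0.7606, 0.7609, 0.7654, 0.7697, 0.7681, 0.7668, 0.7691, 0.7709, 0.7703, 0.7689,
        0.7689, 0.7769, 0.7727, 0.7748, 0.7752, 0.7755, 0.7770, 0.7757, 0.7775, 0.7802]
epoch, acc = best_epoch(run2)
print(epoch + 20, acc)  # 40 0.7802
```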